AttributeError: module 'tensorflow' has no attribute 'layers'

The code you're using was written for TensorFlow v1.x and is not compatible with TensorFlow v2 as it is. The easiest solution is probably to downgrade to a TensorFlow v1 release so you can run the code unchanged.

Another option would be to follow this guide to migrate the code from v1 to v2.
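As a rough sketch of what a v2 migration could look like (this is not code from the migration guide), here is the question's VGG-style `cnn_model_fn` rewritten with `tf.keras.layers` in place of `tf.layers`, `tf.contrib` and the Estimator. It assumes TensorFlow 2.x with `tf.keras` available. The helper names `vgg_block` and `build_model` are hypothetical. The layer sizes, dropout rate, loss and learning rate are copied from the question's code.

```python
import numpy as np
import tensorflow as tf


def vgg_block(x, filters):
    # Hypothetical helper: two 3x3 "same" convolutions + 2x2 max pooling,
    # mirroring each conv/pool block of the question's cnn_model_fn.
    x = tf.keras.layers.Conv2D(filters, (3, 3), padding="same", activation="relu")(x)
    x = tf.keras.layers.Conv2D(filters, (3, 3), padding="same", activation="relu")(x)
    return tf.keras.layers.MaxPooling2D(pool_size=(2, 2), strides=2, padding="same")(x)


def build_model():
    # Hypothetical builder using the same layer sizes as the question's v1 code.
    inputs = tf.keras.Input(shape=(28, 28, 1))
    x = inputs
    for filters in (64, 128, 256, 512, 512):
        x = vgg_block(x, filters)
    x = tf.keras.layers.Flatten()(x)  # replaces tf.contrib.layers.flatten
    x = tf.keras.layers.Dense(4096, activation="relu")(x)
    x = tf.keras.layers.Dense(4096, activation="relu")(x)
    x = tf.keras.layers.Dense(1000, activation="relu")(x)
    # Keras applies dropout only when the model runs with training=True
    # (e.g. inside fit), which replaces the mode == ModeKeys.TRAIN check.
    x = tf.keras.layers.Dropout(0.4)(x)
    logits = tf.keras.layers.Dense(10)(x)  # no activation: raw logits
    return tf.keras.Model(inputs=inputs, outputs=logits)


model = build_model()
model.compile(
    optimizer=tf.keras.optimizers.SGD(learning_rate=0.001),
    # from_logits=True plays the role of tf.losses.sparse_softmax_cross_entropy
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=["accuracy"],
)

# Forward pass on a dummy batch of two 28x28 grayscale images
out = model(np.zeros((2, 28, 28, 1), dtype=np.float32))
print(out.shape)
```

You would then train with something like `model.fit(train_data, train_labels, batch_size=100, epochs=...)`. Sparse categorical cross-entropy expects integer class indices, so the labels should not be divided by 255 as they are in the question's code.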

A third option would be to use the tf.compat module for some backward compatibility. For example, tf.layers no longer exists in TensorFlow v2. You can use tf.compat.v1.layers instead (see, for example, the Conv2D function). However, this is only a temporary fix, because these functions will be removed in a future version.
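Here is a minimal sketch of the `tf.compat.v1` route. It assumes a TensorFlow 2.x release that still ships `tf.compat.v1.layers`. It takes the first conv/pool block of the question's code, swaps `tf.layers` for `tf.compat.v1.layers`, builds it in an explicit graph and runs it in a v1 `Session`. The helper name `first_vgg_block` is hypothetical. The rest of the model would follow the same substitution pattern. `tf.contrib` has no `compat` equivalent, so `tf.contrib.layers.flatten` would need a replacement such as `tf.compat.v1.layers.flatten`.

```python
import numpy as np
import tensorflow as tf


def first_vgg_block(input_layer):
    # Hypothetical helper: same calls as the question, with tf.layers -> tf.compat.v1.layers
    conv1_1 = tf.compat.v1.layers.conv2d(inputs=input_layer, filters=64, kernel_size=[3, 3],
                                         padding="same", activation=tf.nn.relu)
    conv1_2 = tf.compat.v1.layers.conv2d(inputs=conv1_1, filters=64, kernel_size=[3, 3],
                                         padding="same", activation=tf.nn.relu)
    return tf.compat.v1.layers.max_pooling2d(inputs=conv1_2, pool_size=[2, 2],
                                             strides=2, padding="same")


# Build a v1-style static graph instead of relying on TF2 eager execution
graph = tf.Graph()
with graph.as_default():
    x = tf.compat.v1.placeholder(tf.float32, shape=[None, 28, 28, 1])
    pool1 = first_vgg_block(x)
    init = tf.compat.v1.global_variables_initializer()

with tf.compat.v1.Session(graph=graph) as sess:
    sess.run(init)
    # Dummy batch of two 28x28 single-channel images
    out = sess.run(pool1, feed_dict={x: np.zeros((2, 28, 28, 1), dtype=np.float32)})

print(out.shape)  # (2, 14, 14, 64): "same" conv keeps 28x28, 2x2/stride-2 pooling halves it
```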


peter3275g

Updated on June 04, 2022


peter3275g almost 2 years

I am trying to implement VGG but am getting the odd error above. I am running TF v2 on Ubuntu. Could this be because I am not running CUDA?

The code is from here.

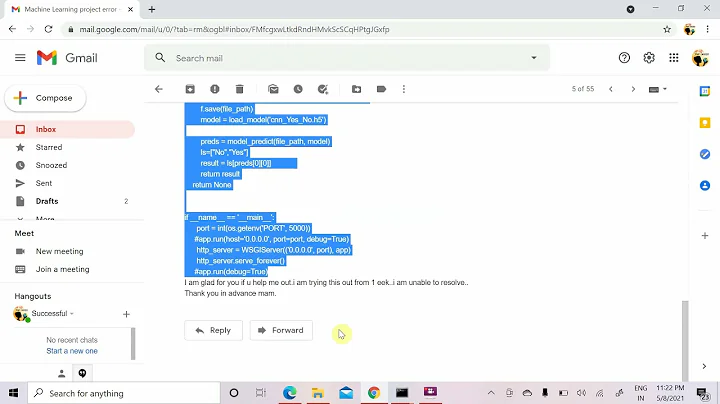

```python
from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

# Imports
import time
import numpy as np
import tensorflow as tf
import matplotlib.pyplot as plt
# tf.logging.set_verbosity(tf.logging.INFO)
from tensorflow.keras.layers import Conv2D, Dense, Flatten

np.random.seed(1)

mnist = tf.keras.datasets.mnist
(train_data, train_labels), (eval_data, eval_labels) = mnist.load_data()
train_data, train_labels = train_data / 255.0, train_labels / 255.0

# Add a channels dimension
train_data = train_data[..., tf.newaxis]
train_labels = train_labels[..., tf.newaxis]

index = 7
plt.imshow(train_data[index].reshape(28, 28))
plt.show()
time.sleep(5)
print("y = " + str(np.squeeze(train_labels[index])))

print("number of training examples = " + str(train_data.shape[0]))
print("number of evaluation examples = " + str(eval_data.shape[0]))
print("X_train shape: " + str(train_data.shape))
print("Y_train shape: " + str(train_labels.shape))
print("X_test shape: " + str(eval_data.shape))
print("Y_test shape: " + str(eval_labels.shape))

print("done")


def cnn_model_fn(features, labels, mode):
    # Input Layer
    input_height, input_width = 28, 28
    input_channels = 1
    input_layer = tf.reshape(features["x"], [-1, input_height, input_width, input_channels])

    # Convolutional Layer #1 and Pooling Layer #1
    conv1_1 = tf.layers.conv2d(inputs=input_layer, filters=64, kernel_size=[3, 3], padding="same", activation=tf.nn.relu)
    conv1_2 = tf.layers.conv2d(inputs=conv1_1, filters=64, kernel_size=[3, 3], padding="same", activation=tf.nn.relu)
    pool1 = tf.layers.max_pooling2d(inputs=conv1_2, pool_size=[2, 2], strides=2, padding="same")

    # Convolutional Layer #2 and Pooling Layer #2
    conv2_1 = tf.layers.conv2d(inputs=pool1, filters=128, kernel_size=[3, 3], padding="same", activation=tf.nn.relu)
    conv2_2 = tf.layers.conv2d(inputs=conv2_1, filters=128, kernel_size=[3, 3], padding="same", activation=tf.nn.relu)
    pool2 = tf.layers.max_pooling2d(inputs=conv2_2, pool_size=[2, 2], strides=2, padding="same")

    # Convolutional Layer #3 and Pooling Layer #3
    conv3_1 = tf.layers.conv2d(inputs=pool2, filters=256, kernel_size=[3, 3], padding="same", activation=tf.nn.relu)
    conv3_2 = tf.layers.conv2d(inputs=conv3_1, filters=256, kernel_size=[3, 3], padding="same", activation=tf.nn.relu)
    pool3 = tf.layers.max_pooling2d(inputs=conv3_2, pool_size=[2, 2], strides=2, padding="same")

    # Convolutional Layer #4 and Pooling Layer #4
    conv4_1 = tf.layers.conv2d(inputs=pool3, filters=512, kernel_size=[3, 3], padding="same", activation=tf.nn.relu)
    conv4_2 = tf.layers.conv2d(inputs=conv4_1, filters=512, kernel_size=[3, 3], padding="same", activation=tf.nn.relu)
    pool4 = tf.layers.max_pooling2d(inputs=conv4_2, pool_size=[2, 2], strides=2, padding="same")

    # Convolutional Layer #5 and Pooling Layer #5
    conv5_1 = tf.layers.conv2d(inputs=pool4, filters=512, kernel_size=[3, 3], padding="same", activation=tf.nn.relu)
    conv5_2 = tf.layers.conv2d(inputs=conv5_1, filters=512, kernel_size=[3, 3], padding="same", activation=tf.nn.relu)
    pool5 = tf.layers.max_pooling2d(inputs=conv5_2, pool_size=[2, 2], strides=2, padding="same")

    # FC Layers
    pool5_flat = tf.contrib.layers.flatten(pool5)
    FC1 = tf.layers.dense(inputs=pool5_flat, units=4096, activation=tf.nn.relu)
    FC2 = tf.layers.dense(inputs=FC1, units=4096, activation=tf.nn.relu)
    FC3 = tf.layers.dense(inputs=FC2, units=1000, activation=tf.nn.relu)

    """The training argument takes a boolean specifying whether or not the model is currently
    being run in training mode; dropout will only be performed if training is true. Here,
    we check if the mode passed to our model function cnn_model_fn is train mode."""
    dropout = tf.layers.dropout(inputs=FC3, rate=0.4, training=mode == tf.estimator.ModeKeys.TRAIN)

    # Logits Layer, or the output layer, which will return the raw values for our predictions.
    # Like the FC layers, the logits layer is another dense layer. We leave the activation function empty
    # so we can apply the softmax
    logits = tf.layers.dense(inputs=dropout, units=10)

    # Then we make predictions based on the raw output
    predictions = {
        # Generate predictions (for PREDICT and EVAL mode)
        # the predicted class for each example - a value from 0-9
        "classes": tf.argmax(input=logits, axis=1),
        # to calculate the probabilities for each target class we use the softmax
        "probabilities": tf.nn.softmax(logits, name="softmax_tensor")
    }
    # so now our predictions are compiled in a dict object, and using that we return an estimator object
    if mode == tf.estimator.ModeKeys.PREDICT:
        return tf.estimator.EstimatorSpec(mode=mode, predictions=predictions)

    '''Calculate Loss (for both TRAIN and EVAL modes): computes the softmax cross-entropy loss.
    This function computes both the softmax activation function and the resulting loss.'''
    loss = tf.losses.sparse_softmax_cross_entropy(labels=labels, logits=logits)

    # Configure the Training Options (for TRAIN mode)
    if mode == tf.estimator.ModeKeys.TRAIN:
        optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.001)
        train_op = optimizer.minimize(loss=loss, global_step=tf.train.get_global_step())
        return tf.estimator.EstimatorSpec(mode=mode, loss=loss, train_op=train_op)

    # Add evaluation metrics (for EVAL mode)
    eval_metric_ops = {
        "accuracy": tf.metrics.accuracy(labels=labels, predictions=predictions["classes"])}
    return tf.estimator.EstimatorSpec(mode=mode, loss=loss, eval_metric_ops=eval_metric_ops)


print("done2")

mnist_classifier = tf.estimator.Estimator(model_fn=cnn_model_fn, model_dir="/tmp/mnist_vgg13_model")
print("done3")

train_input_fn = tf.compat.v1.estimator.inputs.numpy_input_fn(x={"x": train_data}, y=train_labels, batch_size=100, num_epochs=100, shuffle=True)
print("done4")
mnist_classifier.train(input_fn=train_input_fn, steps=None, hooks=None)
print("done5")

eval_input_fn = tf.estimator.inputs.numpy_input_fn(x={"x": eval_data}, y=eval_labels, num_epochs=1, shuffle=False)
print("done6")
eval_results = mnist_classifier.evaluate(input_fn=eval_input_fn)
print(eval_results)
```

Beez about 4 years: Don't know much about TensorFlow, but could it be because you import tensorflow as tf? Why don't you try

from tf.keras.layers import .....

Beez about 4 years: Or possibly like this

from tensorflow.python.keras.layers import .... Check out stackoverflow.com/questions/47262955/…


Teque5 almost 3 years: This is wrong now. TF v2.2+ supports Python 3.8.


enesdemirag over 2 years: Finally, thank god.
