AWS ECS 503 Service Temporarily Unavailable while deploying

Solution 1

So, the issue seems to lie in the port mappings of my container settings in the task definition.

Before, I was using 80 as the host port and 8080 as the container port. I thought I needed to use these, but the host port can actually be any value. If you set it to 0, ECS assigns a port from the ephemeral range (32768-61000), so multiple tasks can run on one instance. For this to work, I also had to change my security group to let traffic from the ALB reach the instances on these ports.
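Here is a minimal sketch of the two changes. It assumes bridge networking and a container listening on 8080. The function names and the security group IDs passed to them are placeholders.

```bash
#!/usr/bin/env bash
# Sketch only: function names, container port and security group IDs are placeholders.

# Print one portMappings entry for a bridge-mode container definition.
# hostPort 0 lets ECS pick a free host port for every task it starts,
# so several copies of the same task can share one instance.
port_mapping_json() {
  local container_port="$1"
  printf '{"containerPort": %d, "hostPort": 0, "protocol": "tcp"}\n' "$container_port"
}

# Allow the ALB's security group to reach the instances on the dynamic port range.
allow_alb_on_dynamic_ports() {
  local instance_sg="$1" alb_sg="$2"
  aws ec2 authorize-security-group-ingress \
    --group-id "$instance_sg" \
    --protocol tcp \
    --port 32768-61000 \
    --source-group "$alb_sg"
}
```

Calling `allow_alb_on_dynamic_ports sg-instances sg-alb` runs the real CLI call. Compare the port range with the ephemeral range configured on your instances first.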

So, once ECS can run multiple tasks on the same instance, the 50/200 minimum/maximum healthy percent settings make sense. A new task revision can then be deployed without adding new instances, which also makes zero-downtime deployment possible.

Thank you to everybody who asked or commented!

Solution 2

Since you are using AWS ECS, may I ask what the service's "minimum healthy percent" and "maximum percent" are?

Make sure that "maximum percent" is 200 and "minimum healthy percent" is 50, so that not all of your tasks go down during deployment.
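For reference, this is a minimal sketch of applying these two settings with the AWS CLI. The function name and the cluster and service names passed to it are placeholders.

```bash
#!/usr/bin/env bash
# Sketch: apply the 50/200 deployment configuration to an existing ECS service.
# Function name, cluster and service names are placeholders.
set_rolling_limits() {
  local cluster="$1" service="$2"
  aws ecs update-service \
    --cluster "$cluster" \
    --service "$service" \
    --deployment-configuration maximumPercent=200,minimumHealthyPercent=50
}
```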

Here are the documentation definitions of these two terms:

Maximum percent provides an upper limit on the number of running tasks during a deployment enabling you to define the deployment batch size.

Minimum healthy percent provides a lower limit on the number of running tasks during a deployment enabling you to deploy without using additional cluster capacity.

A value of 50 for "minimum healthy percent" ensures that at most half of your service's containers are stopped before the new version is deployed. For example, if the service's desired task count is 2, only 1 old-version container is stopped first. Once the new version is running, the second old container is stopped and another new-version container is deployed. This way, some containers are handling requests at any given time.

Similarly, a value of 200 for "maximum percent" tells ECS that, during a deployment, the service can run up to double the desired number of tasks.
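To make the percentages concrete, here is a minimal sketch of the arithmetic. It relies on ECS rounding the minimum up and the maximum down to whole tasks. `deployment_bounds` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: lower and upper task counts ECS allows during a rolling deployment.
# ECS rounds the minimum up and the maximum down to whole tasks.
# Arguments: desired count, minimum healthy percent, maximum percent
deployment_bounds() {
  local desired="$1" min_pct="$2" max_pct="$3"
  local lower=$(( (desired * min_pct + 99) / 100 ))   # ceiling
  local upper=$(( desired * max_pct / 100 ))          # floor
  echo "$lower $upper"
}
```

With a desired count of 2, `deployment_bounds 2 50 200` prints `1 4`. ECS keeps at least one task running and may briefly run up to four. Those extra tasks still need somewhere to run. With one task per instance (a fixed host port), that means spare instances.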

Please let me know if you have any further questions.

Solution 3

With your settings (30-second interval, unhealthy threshold 2, healthy threshold 5), your application only needs to take more than 30 seconds to start up for a target to fail 2 health checks and be marked unhealthy (assuming the first check happens immediately after your app went down). It then takes at least 2 minutes and up to 2.5 minutes to be marked healthy again. In the best case, the first successful check happens immediately after your app comes back online, and 4 more intervals follow. In the worst case, the last failed check happens just before the app comes back, and 5 intervals follow.
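The timing argument can be checked with a little arithmetic. Here is a minimal sketch that prints the best-case and worst-case seconds for a target to change state, given the check interval and the threshold. It ignores the per-check timeout. The helper name `state_change_window` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: best/worst-case seconds until a target group flips a target's state.
# The flip needs `threshold` consecutive results. In the best case the first one
# lands right after the change, in the worst case one full interval later.
# The per-check timeout is ignored.
# Arguments: interval in seconds, threshold
state_change_window() {
  local interval="$1" threshold="$2"
  echo "$(( (threshold - 1) * interval )) $(( threshold * interval ))"
}
```

`state_change_window 30 2` prints `30 60` (time to be marked unhealthy). `state_change_window 30 5` prints `120 150` (time to be marked healthy again), i.e. 2 to 2.5 minutes with these settings.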

So, a quick and dirty fix is to increase the unhealthy threshold so that targets are not marked unhealthy during updates. You could also decrease the healthy threshold so that targets are marked healthy again more quickly.
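Here is a minimal sketch of that quick fix with the AWS CLI, assuming an ALB target group. The function name is hypothetical and the default threshold values are only illustrative.

```bash
#!/usr/bin/env bash
# Sketch: make a target group slower to mark targets unhealthy and quicker
# to mark them healthy again. The default values are illustrative only.
# Arguments: target group ARN, [unhealthy threshold], [healthy threshold]
tune_health_check() {
  local tg_arn="$1" unhealthy="${2:-5}" healthy="${3:-2}"
  aws elbv2 modify-target-group \
    --target-group-arn "$tg_arn" \
    --unhealthy-threshold-count "$unhealthy" \
    --healthy-threshold-count "$healthy"
}
```

With the question's 30-second interval, these defaults would mark a target unhealthy only after 2 to 2.5 minutes of failures. A target would be marked healthy again after 30 to 60 seconds of successes.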

But if you really want to achieve zero downtime, you should run multiple instances of your app and tell AWS to stage deployments, as suggested by Manish Joshi. That way there are always enough healthy instances behind your ELB to keep your site operational.

Solution 4

I solved this by keeping a flat file in the application root that the load balancer's health check polls. The node stays healthy as long as the file is there. Before deployment, a script removes this file and monitors the node until it registers as OutOfService.

That way, all live connections have stopped and drained. At this point, the deployment starts by stopping the node or application process. After deployment, the node is added back to the LB by restoring the flat file. The script then monitors the node until it registers as InService, and only then repeats the same steps on the second node.

My script looks as follows:

```bash
#!/usr/bin/env bash
# Expects ELB, REGION, AMAZONID (EC2 instance ID), MYAPP and INTERVAL to be set.

# Remove the health check target
echo -e "\nDisabling the ELB Health Check target and waiting for OutOfService\n"
rm -f "/home/$USER/$MYAPP/server/public/alive.html"

# Loop until the instance is OutOfService
while true; do
  STATE=$(aws elb describe-instance-health --load-balancer-name "$ELB" \
    --region "$REGION" --instances "$AMAZONID" \
    --query 'InstanceStates[0].State' --output text)
  if [ "$STATE" = "OutOfService" ]; then
    echo "Instance is detached"
    break
  fi
  echo -n ". "
  sleep "$INTERVAL"
done
```
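The script covers only the first half of the procedure. Here is a minimal sketch of the second half described in the answer: restore the flat file, then wait for InService before moving to the next node. The function names, argument order and retry defaults are assumptions, not the author's script.

```bash
#!/usr/bin/env bash
# Sketch: put the health check file back and wait until the classic ELB
# reports the instance InService again.
# Function names, argument order and retry defaults are illustrative assumptions.

# Poll describe-instance-health until the instance reaches the wanted state.
# Arguments: ELB name, region, instance ID, wanted state, [interval s], [max tries]
wait_for_elb_state() {
  local elb="$1" region="$2" instance_id="$3" wanted="$4"
  local interval="${5:-5}" max_tries="${6:-60}" state="" i=0
  while [ "$i" -lt "$max_tries" ]; do
    state=$(aws elb describe-instance-health --load-balancer-name "$elb" \
      --region "$region" --instances "$instance_id" \
      --query 'InstanceStates[0].State' --output text)
    if [ "$state" = "$wanted" ]; then
      echo "Instance $instance_id is $wanted"
      return 0
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "Timed out waiting for $wanted (last state: $state)" >&2
  return 1
}

# Restore the health check target, then wait for InService.
# Arguments: health check file, ELB name, region, instance ID
reattach_instance() {
  touch "$1"
  wait_for_elb_state "$2" "$3" "$4" InService
}
```

The timeout is the main design choice here. It stops a node that never becomes healthy from hanging the whole deployment, so the script can fail before it touches the second node.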

Solution 5

You were speaking about Jenkins, so I'll answer with the Jenkins master service in mind, but my answer remains valid for any other case. Jenkins is not a good example for ECS, though: a Jenkins master doesn't scale out, so there can be only one instance.

503 errors

I often encountered 503 errors caused by the load balancer failing health checks (no healthy instance). Have a look at your load balancer's monitoring tab to make sure that the healthy host count always stays above 0.
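If you prefer the command line to the monitoring tab, here is a minimal sketch that counts the targets an ALB target group currently reports as healthy. This is a point-in-time check, not the CloudWatch metric. The function name and ARN are placeholders.

```bash
#!/usr/bin/env bash
# Sketch: count targets currently reported as healthy in an ALB target group.
# Argument: target group ARN (placeholder)
healthy_count() {
  aws elbv2 describe-target-health --target-group-arn "$1" \
    --query 'TargetHealthDescriptions[].TargetHealth.State' --output text |
    tr '\t' '\n' | grep -c '^healthy$' || true   # grep -c exits 1 when the count is 0
}
```

Calling it repeatedly while a deployment runs shows whether the count ever drops to 0.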

If you're using an HTTP health check, it must return code 200 only when your server is really up and running. The list of valid codes is configurable in the load balancer settings. Otherwise, the load balancer could send traffic to instances that are not fully running yet.

If you always get a 503, it may be because your instances take too long to answer while the service is initializing. ECS then considers them down and stops them before their initialization is complete. That's often the case on Jenkins's first run.

To avoid that last problem, you can adapt your load balancer's ping target. For a classic load balancer, that is the health check target. For an application load balancer, it is the target group's health check:

- With an application load balancer, try a path that always returns 200 (for Jenkins, a public file such as /robots.txt, for example).

- With a classic load balancer, use a TCP port check rather than an HTTP check. It will always succeed if you have opened the port correctly.
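Both options can also be set from the AWS CLI. Here is a minimal sketch. The function names, the ARN or load balancer name you pass in, and the interval and threshold values in the classic check are placeholders.

```bash
#!/usr/bin/env bash
# Sketch: point the health check at something that answers as soon as the server listens.
# Function names, ARNs, ports and the numeric settings are placeholders.

# Application load balancer: health check settings live on the target group.
alb_static_health_check() {
  local tg_arn="$1" path="${2:-/robots.txt}"
  aws elbv2 modify-target-group \
    --target-group-arn "$tg_arn" \
    --health-check-protocol HTTP \
    --health-check-path "$path" \
    --matcher HttpCode=200
}

# Classic load balancer: a TCP check only verifies that the port accepts connections.
clb_tcp_health_check() {
  local elb_name="$1" instance_port="$2"
  aws elb configure-health-check \
    --load-balancer-name "$elb_name" \
    --health-check "Target=TCP:${instance_port},Interval=30,UnhealthyThreshold=2,HealthyThreshold=2,Timeout=5"
}
```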

One node per instance

If you need to be sure you have only one node per instance, you may use a classic load balancer (it also works well with ECS). With classic load balancers, the host port is static, so ECS runs only one task per server. That's also the only solution for exposing non-HTTP ports (for instance, Jenkins needs port 80, but also 50000 for the slaves).

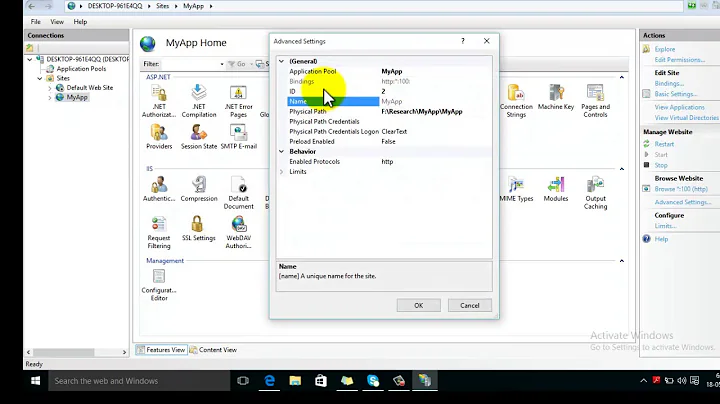

However, as the ports are not dynamic with a classic load balancer, you have to do some port mapping, for example:

myloadbalancer.mydomain.com:80 (port 80 of the load balancer) -> instance:8081 (external port of your container) -> service:80 (internal port of your container).
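Here is a minimal sketch of that chain with the AWS CLI, assuming an existing classic load balancer. The function names are hypothetical and the ports are the ones from the example.

```bash
#!/usr/bin/env bash
# Sketch of the mapping: load balancer :80 -> instance :8081 -> container :80.
# Function names and the load balancer name are placeholders.

# Listener on the classic load balancer forwarding port 80 to instance port 8081.
add_clb_listener() {
  local elb_name="$1"
  aws elb create-load-balancer-listeners \
    --load-balancer-name "$elb_name" \
    --listeners "Protocol=HTTP,LoadBalancerPort=80,InstanceProtocol=HTTP,InstancePort=8081"
}

# Matching portMappings entry for the container definition (fixed host port).
container_port_mapping() {
  printf '{"containerPort": 80, "hostPort": 8081, "protocol": "tcp"}\n'
}
```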

And of course you need one load balancer per service.

Jenkins health check

If you really want to launch a Jenkins service, use the Jenkins Metrics plugin to obtain a good health check URL.

Install it, generate a token in the global options and activate the ping. You should then be able to reach a URL like this one: http://myjenkins.domain.com/metrics/mytoken12b3ad1/ping

This URL answers with HTTP 200 only when the server is fully running. That is important: the load balancer should activate the instance only when it's completely ready.
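Here is a minimal sketch of using that ping URL from a deployment script to wait until Jenkins is really up. `wait_for_ping`, the retry count and the delay are assumptions, and you would pass in your own URL.

```bash
#!/usr/bin/env bash
# Sketch: poll a health URL until it answers HTTP 200.
# Function name, retry count and delay are assumptions.
# Arguments: URL, [tries], [delay in seconds]
wait_for_ping() {
  local url="$1" tries="${2:-30}" delay="${3:-10}" code i=0
  while [ "$i" -lt "$tries" ]; do
    # -w prints only the status code ("000" if the connection fails).
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url")
    if [ "$code" = "200" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}
```

`wait_for_ping http://myjenkins.domain.com/metrics/mytoken12b3ad1/ping` succeeds as soon as the endpoint answers 200 and fails after 30 attempts.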

Logs

Finally, if you want to know what is happening to your instance and why it is failing, you can send your container's logs to AWS CloudWatch.

Just add this to the task definition (container configuration):

- Log configuration: awslogs
- awslogs-group: mycompany (the CloudWatch log group that will collect your container logs)
- awslogs-region: us-east-1 (your cluster region)
- awslogs-stream-prefix: myservice (a prefix for the log stream names)

This gives you more insight into what is happening during container initialization: whether it just takes too long or whether it is failing.
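Here are the same settings expressed as the `logConfiguration` JSON of a container definition, as a minimal sketch. The helper names are hypothetical. The awslogs driver expects the log group to exist (unless `awslogs-create-group` is enabled), hence the second function.

```bash
#!/usr/bin/env bash
# Sketch: awslogs settings for a container definition, plus the log group they need.
# Helper names are hypothetical. Group, region and prefix come from the caller.
# Arguments: log group, region, stream prefix
log_configuration_json() {
  local group="$1" region="$2" prefix="$3"
  printf '{"logDriver": "awslogs", "options": {"awslogs-group": "%s", "awslogs-region": "%s", "awslogs-stream-prefix": "%s"}}\n' \
    "$group" "$region" "$prefix"
}

# The awslogs driver expects this group to exist before the task starts.
# Arguments: log group, region
create_log_group() {
  aws logs create-log-group --log-group-name "$1" --region "$2"
}
```

ECS names each stream `myservice/<container name>/<task ID>`, so logs from a task that keeps restarting are easy to tell apart.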

Hope it helps!!!


Updated on July 21, 2020

Comments

vargen_ (over 3 years): I am using Amazon Web Services EC2 Container Service with an Application Load Balancer for my app. When I deploy a new version, I get 503 Service Temporarily Unavailable for about 2 minutes, which is a bit more than the startup time of my application. This means that I cannot do a zero-downtime deployment now.

Is there a setting to not use the new tasks while they are starting up? Or what am I missing here?

UPDATE:

The health check settings for the ALB's target group are the following:

- Healthy threshold: 5 ("The number of consecutive health check successes required before considering an unhealthy target healthy.")
- Unhealthy threshold: 2 ("The number of consecutive health check failures required before considering a target unhealthy.")
- Timeout: 5 seconds ("The amount of time, in seconds, during which no response means a failed health check.")
- Interval: 30 seconds ("The approximate amount of time between health checks of an individual target.")
- Success codes: 200 OK

UPDATE 2: My cluster consists of two EC2 instances, but it can scale up if needed. The desired and minimum count is 2. I run one task per instance, because my app needs a specific port number. Before I deploy (Jenkins runs an AWS CLI script), I set the number of instances to 4. Without this, AWS cannot deploy my new tasks (this is another issue to solve). The networking mode is bridge.

kosa (almost 7 years): What is your ALB-to-ECS health check polling interval? My guess is that you have this number in minutes, which is causing the ALB refresh delay.

vargen_ (almost 7 years): @kosa thank you for your comment! I added the target group's health check numbers. Do you think the interval is too big?

kosa (almost 7 years): 5 * 30 seconds = 2.5 minutes for the ALB to switch to a healthy state, which roughly fits your observation. If you bring these numbers down, you will see a quicker response.

vargen_ (almost 7 years): @kosa shouldn't this mean that my new instances stay unhealthy longer? So an instance starts as unhealthy, and if the interval is higher, it becomes healthy later? And until then, the old instances are still kept in the ALB?

kosa (almost 7 years): This is one part of the problem. Another part is the TTL (time to live) setting, which caches the DNS settings. The combination of these decides 1) when a new instance is available and 2) when requests are forwarded to the new instance.

vargen_ (almost 7 years): @kosa I don't quite know where to set that TTL and to what value. Could you point me to the right place?

kosa (almost 7 years): The TTL setting is in the Route53 hosted zone records.

vargen_ (almost 7 years): @kosa how does the Route53 setting affect how the ALB uses the instances? In the records, my domain is an alias for the ALB, and that record has no TTL setting. In Route53 I'm only setting where the domains point, not what happens in the load balancer. Correct me if I'm wrong.

vargen_ (almost 7 years): Thank you for the response! The minimum and maximum healthy settings are just as you wrote.

vargen_ (almost 7 years): Thank you for your response! Some questions: why would my old instances go into an unhealthy state? Don't the new instances start as unhealthy? Why would the ALB kill the old instances while the new ones aren't healthy yet?

vargen_ (almost 7 years): Thank you for your response! This method sounds doable, but I think it's a bit complicated, and there should be a more off-the-shelf way to do zero-downtime deployments with ELBs. In my setup, the health check is a very simple endpoint that always returns 200 if the app is running. So if the app is not up yet, the health check fails. Shouldn't that be enough?

Innocent Anigbo (almost 7 years): That is good, but the issue is that you won't be able to deploy without downtime. As soon as you stop your app, the ELB doesn't automatically start redirecting traffic to the second node behind the LB. It waits until after the next health check interval, depending on what you have set it to. At this point the users will see a 502. You can mitigate this by implementing the solution I described above. But first, enable connection draining on the ELB as described here: docs.aws.amazon.com/elasticloadbalancing/latest/classic/…

Innocent Anigbo (almost 7 years): If you are doing a manual deployment, you might enable only the connection draining described in the link I sent above. But if you are doing an automated deployment, your deployment needs to wait at two points. It must wait until the EC2 instance is marked OutOfService before stopping the app. It must also wait until the instance is InService again before starting the deployment on the second node. That is what the script does for you. Otherwise you might end up with both nodes OutOfService behind the LB.

Manish Joshi (almost 7 years): @vargen_ This is weird, as ideally, with these settings, not all containers would go down during deployment. What is the "desired task" count for your services, and how many ECS instances do you have in the cluster? Also, which Docker networking mode are you using (host or bridge)? Maybe 2 containers of your application (the old version and the new version) cannot run at the same time because of a port conflict or some other issue.

Seva (almost 7 years): That's weird. The ALB won't kill your instances, only mark them unhealthy, but I assume that's what you meant. New instances start unhealthy and stay unhealthy until you deploy your app on them, start it, and wait for them to pass 5 health checks. Do you wait for all 4 instances to be marked healthy before updating your app? Deployment and the ALB are independent of each other. AFAIK, the deployment simply stages updates so that a certain number of instances stay running at all times, but it won't check whether they are marked healthy in the ALB yet.

Seva (almost 7 years): It takes quite some time to restart your app, and the ALB keeps routing traffic to instances already taken down by the update until they fail enough health checks and are marked "unhealthy". So may I suggest changing the deployment procedure as follows. Using Jenkins and the CLI, add two instances with the new version of the app installed and wait for them to be marked healthy. Then remove the old instances from the ALB and shut them down. See Innocent Anigbo's answer on how to shut down the old ones gracefully. You'll also need to make sure auto scaling uses the updated version.

vargen_ (almost 7 years): Thank you for your response! If I understand correctly, the ALB should be able to do the deployment like this. It starts new task(s) with the new application version, then waits until those become healthy. When that happens, it drains connections on the tasks with the older application version and drives traffic to the new tasks. When this is done, it can safely stop the tasks with the old version. This way there should be no downtime. I don't want to start and stop instances myself. I am just creating a new task revision and updating the service with it.

vargen_ (almost 7 years): To deploy, I create a new revision of my task definition and update my service to use this new revision. If I understand correctly, from there it's the job of ECS to switch the tasks in the ALB to the new ones (if they pass the health check). Why would I need to manually start/stop instances?

vargen_ (almost 7 years): Thank you very much for your detailed answer! I checked the healthy host count and it was above 0 for the past week, and I made a few deployments in that period. One thing: I don't want Jenkins to run in ECS. I am deploying to ECS with the help of Jenkins (it runs a job that calls the AWS CLI to do the magic, plus a few other things). I need to use an Application Load Balancer, because I need some of its functionality. My health check asks my application a very simple question that it can answer very quickly (without a DB lookup or similar). It only works when the app is started.

Innocent Anigbo (almost 7 years): docs.aws.amazon.com/elasticloadbalancing/latest/classic/… What I'm saying is this: enable connection draining as described in the link above. Then, when you stop the application on node1 to update the code, the ALB waits until all in-flight connections are drained (i.e. requests are completed) before marking the node OutOfService. The ALB stops sending further requests to this node, but it does not abruptly cut off requests from already connected users. This way, users never see a 502 or a white page. Enabling connection draining is a tick box in the ALB config.
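Here is a minimal sketch of turning draining on from the AWS CLI rather than the console. On a classic load balancer it is the `ConnectionDraining` attribute. On an application load balancer, the equivalent is the target group's deregistration delay. Function names, ARNs and timeout values are placeholders.

```bash
#!/usr/bin/env bash
# Sketch: enable draining from the CLI. Names, ARNs and timeouts are placeholders.

# Classic load balancer: the ConnectionDraining attribute.
# Arguments: load balancer name, [timeout in seconds]
enable_clb_draining() {
  local elb_name="$1" timeout="${2:-300}"
  aws elb modify-load-balancer-attributes \
    --load-balancer-name "$elb_name" \
    --load-balancer-attributes "{\"ConnectionDraining\":{\"Enabled\":true,\"Timeout\":${timeout}}}"
}

# Application load balancer: the target group's deregistration delay.
# Arguments: target group ARN, delay in seconds
set_alb_deregistration_delay() {
  aws elbv2 modify-target-group-attributes \
    --target-group-arn "$1" \
    --attributes "Key=deregistration_delay.timeout_seconds,Value=$2"
}
```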

Innocent Anigbo (almost 7 years): Then, on the second point. Suppose you have enabled connection draining as above, and the ALB is successfully sending users' requests to node2 while you complete maintenance on node1. You still want to make sure that node1's code or revision is updated and back in the LB pool before starting deployment on node2. The ALB won't do this for you. That is where the script I pasted in the answer section comes into play. This part is required if you are doing an automated deployment where you can't eyeball the ALB (for InService and OutOfService). I hope that is clear enough.

vargen_ (almost 7 years): I am not sure what you mean by maintenance. What I do is create a new revision of my task (using the new app version) and then update my service to use that new task revision. I would think AWS handles the rest.

arvymetal (over 6 years): Ah, OK! Sorry for misinterpreting the Jenkins part. Well, it seems you have solved your issue, congrats!

arvymetal (over 6 years): Concerning ECS deployments, I don't know how smooth and satisfying your procedure is, but here is something I've stumbled upon that works like a charm, if your Jenkins master can run Docker containers: the image silintl/ecs-deploy (hub.docker.com/r/silintl/ecs-deploy).

vargen_ (over 6 years): This image looks great, thanks! Though I think blue-green deployments are only necessary if you run one task per instance. I was doing that, but realized that I can easily switch to multiple tasks per instance and thus use the built-in zero-downtime deployment of ECS.

arvymetal (over 6 years): Indeed, it's ECS that handles the zero-downtime deployment. The blue/green part is just that it waits a defined time to check whether the new service has started. If it hasn't, it cancels the deployment (instead of leaving a service trying to start in a loop) and marks the job as failed.

vargen_ (over 6 years): Indeed, that's also a nice feature. Thank you!

Beanwah (over 5 years): Does this work with Fargate and awsvpc networking? I haven't found anywhere to set a container port mapping. I have the same issue: my health checks constantly fail, and the tasks keep getting restarted because ECS thinks they are unavailable. For now, I finally allowed a 404 response as a valid health check response on the load balancer, just so my service could keep working.

vargen_ (over 5 years): @Beanwah I don't really know Fargate and awsvpc. The port mappings are in Create Task -> Container definitions -> Add container. For Fargate, this is written: "Host port mappings are not valid when the network mode for a task definition is host or awsvpc. To specify different host and container port mappings, choose the Bridge network mode."

Beanwah (over 5 years): Yes, thanks. When I tried switching to Bridge network mode, it said that isn't valid for Fargate-based tasks/services. Round and round we go... :)

Anthony Holland (over 5 years): @Beanwah for my practical purposes, I have solved this problem by changing the port used in the container. To be clear: I am using Apache Tomcat, so I edited the Tomcat server.xml file so that Tomcat serves HTTP on port 80. Then I rebuilt my war file, rebuilt my Docker image, pushed it to AWS, and specified port 80 in my task definition. In other words, I don't know of a way to map the ports, but if you can configure your container, you can solve the problem.