Decimal data type not storing the values correctly in both spark and Hive

Solution 1

DECIMAL in Hive V0.12 meant "a large floating point". Just like NUMBER(38) in Oracle.

But in later versions there has been a major change and DECIMAL without any specification of scale/precision now means "a large integer". Just like a NUMBER(10,0) in Oracle.

Reference

- Hive language manual / data types

- a lengthy PDF document labeld "Hive Decimal Precision/Scale Support" somewhere in cwiki.apache.org

Bottom line: you have to explicitly define how many digits you want, which is exactly what the ANSI SQL standard expected decades ago. For instance, DECIMAL(15,3) will accomodate 12 digits in the integer part + 3 digits in the decimal part (i.e. 15 digits w/ a comma in a arbitrary position).

Solution 2

Both Spark and Hive have a default precision of 10 and scale of zero for Decimal type. Which means if you do not specify the scale, there will be no numbers after the decimal point.

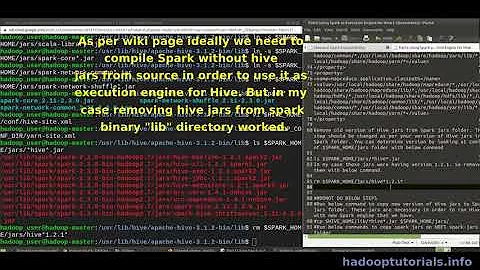

Related videos on Youtube

newSparkbabie

Updated on June 04, 2022Comments

-

newSparkbabie almost 2 years

I am having a problem storing with the decimal data type and not sure if it is a bug or I am doing something wrong

The data in the file looks like this

Column1 column2 column3 steve 100 100.23 ronald 500 20.369 maria 600 19.23when I infer the schema in the spark using the csv reader its taking the data type of column3 as string ,So I am converting it in to decimal and saving it as table.

Now when I access the table it is showing the output in the following way eliminating the decimals

Column1 column2 column3 steve 100 100 ronald 500 20 maria 600 19I also tested the same thing in Hive by creating a local table with column3 as decimal and loaded it with the data and again the same thing it is not storing them as decimal.

Any help in this regard would be appreciated.

Here is the code for the above one

In spark The schema of the file

root |-- DEST_AIRPORT_ID: integer (nullable = true) |-- DEST_AIRPORT_SEQ_ID: integer (nullable = true) |-- DEST_CITY_MARKET_ID: integer (nullable = true) |-- DEST string: string (nullable = true) |-- DEST_CITY_NAME: string (nullable = true) |-- DEST_STATE_ABR: string (nullable = true) |-- DEST_STATE_FIPS: integer (nullable = true) |-- DEST_STATE_NM: string (nullable = true) |-- DEST_WAC: integer (nullable = true) |-- DEST_Miles: double (nullable = true)Code

from pyspark import SparkContext sc =SparkContext() from pyspark.sql.types import * from pyspark.sql import HiveContext sqlContext = HiveContext(sc) Data=sqlContext.read.format("com.databricks.spark.csv").options(header="true").options(delimiter=",").options(inferSchema="true").load("s3://testbucket/Data_test.csv") Data1=Data.withColumnRenamed('DEST string','DEST_string') Data2 =Data1.withColumn('DEST_Miles',Data1.DEST_Miles.cast('Decimal')) Data2.saveAsTable('Testing_data', mode='overwrite',path='s3://bucketname/Testing_data')Schema after converting in to decimal

root |-- DEST_AIRPORT_ID: integer (nullable = true) |-- DEST_AIRPORT_SEQ_ID: integer (nullable = true) |-- DEST_CITY_MARKET_ID: integer (nullable = true) |-- DEST string: string (nullable = true) |-- DEST_CITY_NAME: string (nullable = true) |-- DEST_STATE_ABR: string (nullable = true) |-- DEST_STATE_FIPS: integer (nullable = true) |-- DEST_STATE_NM: string (nullable = true) |-- DEST_WAC: integer (nullable = true) |-- DEST_Miles: decimal (nullable = true)For the Hive

create table Destination( DEST_AIRPORT_ID int, DEST_AIRPORT_SEQ_ID int, DEST_CITY_MARKET_ID int, DEST string, DEST_CITY_NAME string, DEST_STATE_ABR string, DEST_STATE_FIPS string, DEST_STATE_NM string, DEST_WAC int, DEST_Miles Decimal(10,0) ); INSERT INTO TEST_DATA SELECT * FROM TESTING_data;Let me know if you still need more information.

Thanks Thanks