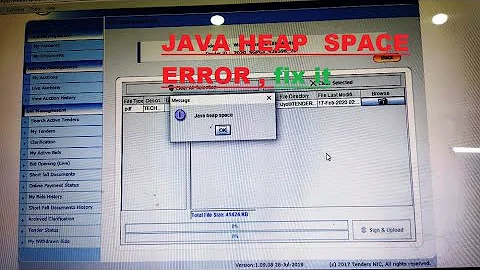

EMR 5.x | Spark on Yarn | Exit code 137 and Java heap space Error

Here is the solution for the above issues.

Exit code 137 and the Java heap space error are mainly related to memory, for both the executors and the driver. Here is what I did:

- increase the driver memory: `spark.driver.memory 16G`
- increase the storage memory fraction: `spark.storage.memoryFraction 0.8`
- increase the executor memory: `spark.executor.memory 3G`
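As a rough sketch of how these memory settings relate to exit code 137: exit codes above 128 conventionally mean the process was killed by signal `code - 128`, and 137 corresponds to signal 9 (SIGKILL), which on YARN usually points to a container killed for using too much memory. The helper names below are hypothetical, and the overhead rule (10% of executor memory, at least 384 MiB) is an assumption based on the Spark 2.x defaults for `spark.yarn.executor.memoryOverhead`, so check the values on your own cluster. Note also that `spark.storage.memoryFraction` is a legacy setting that Spark 1.6 and later read only when `spark.memory.useLegacyMode` is enabled.

```python
import signal


def signal_for_exit_code(code):
    """Return the signal name for a 'killed by signal' exit code (POSIX), else None."""
    if code > 128:
        return signal.Signals(code - 128).name
    return None


def yarn_container_mib(executor_memory_mib, overhead_factor=0.10, min_overhead_mib=384):
    """Approximate memory YARN must grant per executor: heap plus off-heap overhead.

    Assumption: overhead = max(384 MiB, 10% of executor memory), as in Spark 2.x defaults.
    """
    overhead = max(min_overhead_mib, int(executor_memory_mib * overhead_factor))
    return executor_memory_mib + overhead


killed_by = signal_for_exit_code(137)
container_mib = yarn_container_mib(3 * 1024)  # spark.executor.memory 3G

print(killed_by)
print(container_mib)
```

With `spark.executor.memory 3G`, the container request under these assumptions is about 3456 MiB, so the YARN limit that triggers the kill is somewhat higher than the heap size alone.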

One very important thing I would like to share, which actually made a huge impact on performance, is the following:

As I mentioned above, I have a single gzip-compressed .csv file of 3.2 GB, which becomes 11.6 GB after unzipping. To load a gzip file, Spark always uses a single executor (one per .gzip file): gzip files are not splittable, so the read cannot be parallelized even if you increase the number of partitions. This hampers overall performance, because Spark first reads the whole file (using one executor) into the master (I am running spark-submit in client mode), then uncompresses it, and only then repartitions it (if a repartition is requested).
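To illustrate why a gzip file cannot be split, here is a small sketch using only the Python standard library (not Spark). The sample rows are made up for illustration. A reader that starts at the beginning of the stream can decode it, while a reader that starts at an arbitrary offset in the middle cannot, which is why a single task has to read the whole file.

```python
import gzip
import zlib

# Hypothetical sample data standing in for the real CSV file.
rows = "".join(f"{i};10.0.0.{i % 256};DE\n" for i in range(50_000)).encode()
blob = gzip.compress(rows)


def can_decompress_from(data, offset):
    """Return True if gzip decoding succeeds when started at the given byte offset."""
    try:
        gzip.decompress(data[offset:])
        return True
    except (OSError, EOFError, zlib.error):
        return False


from_start = can_decompress_from(blob, 0)
from_middle = can_decompress_from(blob, len(blob) // 2)

print("decode from start: ", from_start)
print("decode from middle:", from_middle)
```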

To address this, I used the s3-dist-cp command to move the file from S3 to HDFS, and also reduced the block size to increase parallelism, something like below:

/usr/bin/s3-dist-cp --src=s3://bucket-name/path/ --dest=/dest_path/ --groupBy='.*(additional).*' --targetSize=64 --outputCodec=none

Although it takes a little time to move the data from S3 to HDFS, the overall performance of the process improves significantly.
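A back-of-the-envelope estimate shows where the extra parallelism comes from. This sketch assumes that the 11.6 GB figure is in GiB and that `--targetSize` is given in MiB (as the S3DistCp documentation describes it). The function name is hypothetical, and the actual number of Spark partitions also depends on the input split settings.

```python
import math


def estimated_output_files(total_gib, target_size_mib):
    """Roughly how many uncompressed files s3-dist-cp would write for a given target size."""
    return math.ceil(total_gib * 1024 / target_size_mib)


n_files = estimated_output_files(11.6, 64)
print(n_files)
```

Under these assumptions the single gzip file, which Spark could only read in one task, becomes well over a hundred uncompressed files that can be read in parallel.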


braj

Leading a team of data scientists who are motivated to deliver awesome data products.

Updated on June 04, 2022

Comments


braj almost 2 years

I have been getting this error

    Container exited with a non-zero exit code 137

while running Spark on YARN. I have tried a couple of techniques, but they didn't help. The Spark configuration looks like this:

    spark.driver.memory 10G
    spark.driver.maxResultSize 2G
    spark.memory.fraction 0.8

I am using YARN in client mode.

    spark-submit --packages com.databricks:spark-redshift_2.10:0.5.0 --jars RedshiftJDBC4-1.2.1.1001.jar elevatedailyjob.py > log5.out 2>&1 &

The sample code:

    # Load the file (it's a single file of 3.2GB)
    my_df = spark.read.csv('s3://bucket-name/path/file_additional.txt.gz', schema=MySchema, sep=';', header=True)

    # write the de_pulse_ip data into parquet format
    my_df = my_df.select("ip_start","ip_end","country_code","region_code","city_code","ip_start_int","ip_end_int","postal_code").repartition(50)
    my_df.write.parquet("s3://analyst-adhoc/elevate/tempData/de_pulse_ip1.parquet", mode = "overwrite")

    # read my_df data into dataframe from parquet files
    my_df1 = spark.read.parquet("s3://bucket-name/path/my_df.parquet").repartition("ip_start_int","ip_end_int")

    # join with another dataset 200 MB
    my_df2 = my_df.join(my_df1, [my_df.ip_int_cast > my_df1.ip_start_int,my_df.ip_int_cast <= my_df1.ip_end_int], how='right')

Note: the input file is a single gzip file. Its unzipped size is 3.2GB
