Exhausting Linux machine TCP socket limit (~70k)?

Solution 1

If there are processes binding to INADDR_ANY, then some systems will pick ephemeral (automatically assigned) ports only from the range 49152 to 65535. That could account for your ~15k limit, as that range contains exactly 16,384 ports (65535 - 49152 + 1).

You may be able to expand that range; the instructions depend on your OS.
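The range arithmetic is easy to check. A minimal Python sketch, assuming a Linux host where the active range is exposed as /proc/sys/net/ipv4/ip_local_port_range ("low high", inclusive); ephemeral_port_count is an illustrative name, not an existing tool:

```python
from pathlib import Path


def ephemeral_port_count(range_text: str) -> int:
    """Count the ports in an inclusive "low high" range, the whitespace-separated
    format used by /proc/sys/net/ipv4/ip_local_port_range."""
    low, high = (int(x) for x in range_text.split())
    return high - low + 1


if __name__ == "__main__":
    print(ephemeral_port_count("49152 65535"))  # 16384, the range mentioned above
    print(ephemeral_port_count("1025 65535"))   # 64511, the asker's sysctl setting
    proc = Path("/proc/sys/net/ipv4/ip_local_port_range")
    if proc.exists():  # only present on Linux
        print("this host:", ephemeral_port_count(proc.read_text()))
```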

Solution 2

This is a limitation of the TCP protocol: the port number is a 16-bit unsigned integer (0-65535), so each local IP address has at most 65,536 ports to hand out. The solution is to use different IP addresses.
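If the software can be changed, "using different IP addresses" for outgoing traffic means binding each socket to a chosen local address before it connects, so that each local address draws on its own pool of ports. A minimal Python sketch using the standard library's socket.create_connection with source_address; make_spreading_connector is a hypothetical helper, and it assumes every address in the list is already configured on the host:

```python
import itertools
import socket
from typing import Callable, Sequence


def make_spreading_connector(source_ips: Sequence[str]) -> Callable[..., socket.socket]:
    """Return a connect function that rotates through source_ips (round-robin)."""
    next_ip = itertools.cycle(source_ips)

    def connect(host: str, port: int, timeout: float = 5.0) -> socket.socket:
        src = next(next_ip)
        # Port 0 lets the kernel choose a free ephemeral port on the chosen
        # source address, so the load on any single address's ports is reduced.
        return socket.create_connection(
            (host, port), timeout=timeout, source_address=(src, 0)
        )

    return connect
```

With two hypothetical addresses, make_spreading_connector(["192.0.2.10", "192.0.2.11"]) returns a function whose successive calls alternate between them. Round-robin is only one choice; hashing on the destination would keep a given destination on a single address.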

If the software cannot be changed, you can use virtualization. Create VMs that are bridged (not NATed) and that use public IPs so they will not be NATed later.

Check with netstat that the listeners are bound to the IP of a specific interface and not to all addresses (0.0.0.0):

sudo netstat -tulnp|grep '0\.0\.0\.0'
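On Linux the same check can be done without netstat by reading the kernel's socket table. A rough Python sketch, assuming the /proc/net/tcp layout (hex local_address column, state 0A for LISTEN, 00000000 for 0.0.0.0); it only covers IPv4 TCP, so IPv6 listeners (in /proc/net/tcp6) and the UDP sockets that netstat -tulnp also lists are not counted. wildcard_tcp_listeners is a hypothetical helper:

```python
from pathlib import Path
from typing import List

TCP_LISTEN = "0A"         # state code for LISTEN in /proc/net/tcp
WILDCARD_V4 = "00000000"  # 0.0.0.0 in the hex address column


def wildcard_tcp_listeners(proc_net_tcp: str) -> List[int]:
    """Return the ports of IPv4 TCP sockets listening on 0.0.0.0."""
    ports = []
    for line in proc_net_tcp.splitlines()[1:]:  # first line is the column header
        fields = line.split()
        if len(fields) < 4:
            continue
        ip_hex, port_hex = fields[1].split(":")
        if fields[3] == TCP_LISTEN and ip_hex == WILDCARD_V4:
            ports.append(int(port_hex, 16))
    return sorted(ports)


if __name__ == "__main__":
    proc = Path("/proc/net/tcp")
    if proc.exists():
        found = wildcard_tcp_listeners(proc.read_text())
        print(len(found), "wildcard listeners:", found)
```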


mo.

Updated on September 18, 2022


mo. over 1 year

I am the founder of torservers.net, a non-profit that runs Tor exit nodes. We have a number of machines on Gbit connectivity and multiple IPs, and we seem to be hitting a limit of open TCP sockets across all those machines. We're hovering around 70k TCP connections in total (~10-15k per IP), and Tor is logging "Error binding network socket: Address already in use" like crazy. Is there any solution for this? Does BSD suffer from the same problem?

We run four Tor processes, each of them listening on a different IP. Example:

    # NETSTAT=`netstat -nta`
    # echo "$NETSTAT" | wc -l
    67741
    # echo "$NETSTAT" | grep ip1 | wc -l
    19886
    # echo "$NETSTAT" | grep ip2 | wc -l
    15014
    # echo "$NETSTAT" | grep ip3 | wc -l
    18686
    # echo "$NETSTAT" | grep ip4 | wc -l
    14109

I have applied the tweaks I could find on the internet:

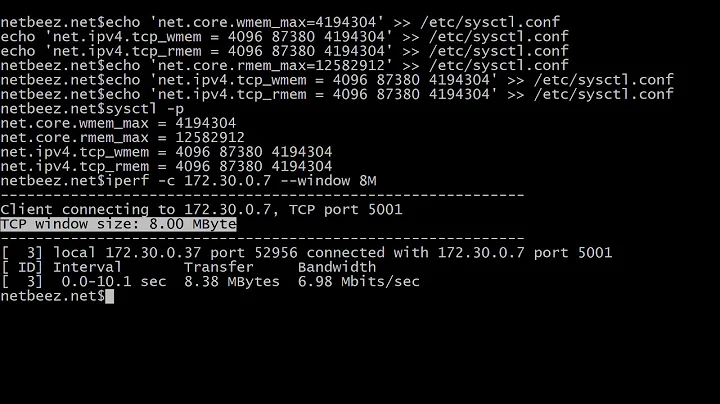

    # cat /etc/sysctl.conf
    net.ipv4.ip_forward = 0
    net.ipv4.tcp_syncookies = 1
    net.ipv4.tcp_synack_retries = 2
    net.ipv4.tcp_syn_retries = 2
    net.ipv4.conf.default.forwarding = 0
    net.ipv4.conf.default.proxy_arp = 0
    net.ipv4.conf.default.send_redirects = 1
    net.ipv4.conf.all.rp_filter = 0
    net.ipv4.conf.all.send_redirects = 0
    kernel.sysrq = 1
    net.ipv4.icmp_echo_ignore_broadcasts = 1
    net.ipv4.conf.all.accept_redirects = 0
    net.ipv4.icmp_ignore_bogus_error_responses = 1
    net.core.rmem_max = 33554432
    net.core.wmem_max = 33554432
    net.ipv4.tcp_rmem = 4096 87380 33554432
    net.ipv4.tcp_wmem = 4096 65536 33554432
    net.core.netdev_max_backlog = 262144
    net.ipv4.tcp_no_metrics_save = 1
    net.ipv4.tcp_moderate_rcvbuf = 1
    net.ipv4.tcp_orphan_retries = 2
    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_tw_recycle = 0
    net.ipv4.tcp_max_orphans = 262144
    net.ipv4.tcp_max_syn_backlog = 262144
    net.ipv4.tcp_fin_timeout = 4
    vm.min_free_kbytes = 65536
    net.ipv4.netfilter.ip_conntrack_max = 196608
    net.netfilter.nf_conntrack_tcp_timeout_established = 7200
    net.netfilter.nf_conntrack_checksum = 0
    net.netfilter.nf_conntrack_max = 196608
    net.netfilter.nf_conntrack_tcp_timeout_syn_sent = 15
    net.nf_conntrack_max = 196608
    net.ipv4.tcp_keepalive_time = 60
    net.ipv4.tcp_keepalive_intvl = 10
    net.ipv4.tcp_keepalive_probes = 3
    net.ipv4.ip_local_port_range = 1025 65535
    net.core.somaxconn = 262144
    net.ipv4.tcp_max_tw_buckets = 2000000
    net.ipv4.tcp_timestamps = 0
    # sysctl fs.file-max
    fs.file-max = 806854
    # ulimit -n
    500000
    # cat /etc/security/limits.conf
    * soft nofile 500000
    * hard nofile 500000
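Note that grep ip1 | wc -l counts every line containing the string anywhere: it also matches the foreign-address column and any address that merely starts with it (10.0.0.1 also matches 10.0.0.12). A small Python sketch that tallies only the local-address column of netstat -nta output; sockets_per_local_ip is a hypothetical helper and assumes the usual Linux net-tools column order (Proto, Recv-Q, Send-Q, Local Address, ...):

```python
import shutil
import subprocess
from collections import Counter


def sockets_per_local_ip(netstat_output: str) -> Counter:
    """Count TCP sockets by local IP from `netstat -nta` output."""
    counts: Counter = Counter()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Data rows start with "tcp" or "tcp6"; header rows start with "Active"/"Proto".
        if len(fields) < 4 or not fields[0].startswith("tcp"):
            continue
        # rsplit on the last ":" also works for IPv6 addresses such as ":::22".
        local_ip, _port = fields[3].rsplit(":", 1)
        counts[local_ip] += 1
    return counts


if __name__ == "__main__":
    if shutil.which("netstat"):
        out = subprocess.run(["netstat", "-nta"], capture_output=True, text=True).stdout
        for ip, n in sockets_per_local_ip(out).most_common():
            print(ip, n)
```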

mo. over 12 years

We already use different IP addresses. Each of the IPs has around 10-15k open connections.


Mircea Vutcovici over 12 years

Does sudo netstat -tulnp|grep '0\.0\.0\.0' return many results? Can you paste the output of:

sudo netstat -tulnp|grep '0\.0\.0\.0'|wc -l


mo. over 12 years

    # netstat -tulnp|grep '0\.0\.0\.0' | wc -l
    56
    # netstat -tulnp| wc -l
    64

Mircea Vutcovici over 12 years

This means that you do not have too many listeners, so the client-side (outgoing) sockets are the limitation.


Mircea Vutcovici over 12 years

Can you please run:

netstat -tun|grep '0\.0\.0\.0' | wc -l


mo. over 12 years

That one returns zero. I am not sure what you're up to, and I don't think it has anything to do with listening sockets at all. netstat -tulnp | wc -l returns 65 total listening sockets at the moment. We run four instances of Tor, each of them listening on a different IP. If I run netstat -nta and grep it for the four IPs, they sum up to around 69k, which is the total number of open sockets at the moment. Tor only uses TCP, which is why I'm talking about "TCP sockets" above. (netstat -tun | wc -l is 69891.)

Mircea Vutcovici over 12 years

I think that all those 69k sockets are using the same IP, the primary address. They should use all available IPs.


mo. over 12 years

"If I run netstat -nta and grep it for the four IPs, they sum up to a total of around ~69k" (and are each around 10-15k).


mo. over 12 years

I have updated the original post to hopefully better reflect this.


Mircea Vutcovici over 12 years

I think this is a problem in the source code of the Tor application. While you are receiving those errors, try to open a connection to an outside host from the server yourself. If you do not receive "Address already in use", then it is a bug in the Tor server.
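This check can be scripted so it runs from each relay address while Tor is logging the errors. A minimal Python sketch; probe_outbound is a hypothetical helper, and the errno you get when ports run out depends on the kernel and the socket setup (on Linux, typically EADDRINUSE from bind() and EADDRNOTAVAIL from connect()):

```python
import errno
import socket
from typing import Tuple


def probe_outbound(src_ip: str, dst: Tuple[str, int], timeout: float = 3.0) -> str:
    """Open one outgoing TCP connection from src_ip and report what happened."""
    s = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    s.settimeout(timeout)
    try:
        try:
            s.bind((src_ip, 0))  # port 0: the kernel picks an ephemeral port
        except OSError as e:
            # errno.errorcode maps numbers to names such as "EADDRINUSE".
            return f"bind failed: {errno.errorcode.get(e.errno, str(e))}"
        try:
            s.connect(dst)
        except OSError as e:
            # Timeouts carry no errno, so fall back to the exception text.
            return f"connect failed: {errno.errorcode.get(e.errno, str(e))}"
        local_ip, local_port = s.getsockname()
        return f"ok from {local_ip}:{local_port}"
    finally:
        s.close()
```

For example, probe_outbound("192.0.2.10", ("198.51.100.7", 443)), where the documentation addresses are placeholders for a relay IP and an outside host.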



mo. about 12 years

For Linux, this would be net.ipv4.ip_local_port_range = 1025 65535, which is already in the list of sysctl settings applied. I can also see connections from lower ports across all IPs.

Seth Noble about 12 years

Another possibility is that the connections are not dropping cleanly, causing ports to be left in a TIME_WAIT state. How quickly are these connections cycling? In other words, how long does a typical TCP session last?



mo. about 12 years

Thanks. Yes, indeed there is a high number of relatively short-lived TCP sessions. But netstat -nta includes connections in TIME_WAIT: at the moment, 24k of 67k connections (across all interfaces!) are in TIME_WAIT. Still, I don't see why this should be a restriction. My tweaks also include a very short timeout, net.ipv4.tcp_fin_timeout = 4.

Seth Noble about 12 years

Wow, that's a lot of TIME_WAITs for a 4 second timeout. I see you have net.ipv4.tcp_tw_reuse set. You could also try net.ipv4.tcp_tw_recycle. serverfault.com/questions/342741/… stackoverflow.com/questions/6426253/… However, if you have access to the source code, I suggest examining how the TCP sessions are being closed. A TIME_WAIT state usually occurs when a connection is closed while data is still in transit or not cleanly closed.
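Two Linux details help size the TIME_WAIT pool: the side that closes a connection first is the one that enters TIME_WAIT, and on a stock kernel the state lasts a fixed 60 seconds (the kernel constant TCP_TIMEWAIT_LEN), because net.ipv4.tcp_fin_timeout governs FIN_WAIT_2, not TIME_WAIT. By Little's law the steady-state count is then the close rate times 60 s. A back-of-the-envelope Python sketch under that assumption; the helper names are illustrative:

```python
# Assumption: stock Linux kernel, where TIME_WAIT lasts TCP_TIMEWAIT_LEN = 60 s.
# net.ipv4.tcp_fin_timeout does not change this; it applies to FIN_WAIT_2.
TIME_WAIT_SECONDS = 60.0


def implied_close_rate(time_wait_sockets: float,
                       tw_seconds: float = TIME_WAIT_SECONDS) -> float:
    """Little's law, L = rate * W, solved for the rate (closes per second)."""
    return time_wait_sockets / tw_seconds


def expected_time_wait(close_rate: float,
                       tw_seconds: float = TIME_WAIT_SECONDS) -> float:
    """Steady-state number of sockets in TIME_WAIT for a given close rate."""
    return close_rate * tw_seconds


if __name__ == "__main__":
    rate = implied_close_rate(24_000)    # ~24k TIME_WAIT sockets reported above
    print(f"{rate:.0f} closes/s")        # 400 closes/s
    # Hypothetically spread evenly over four addresses:
    print(expected_time_wait(rate / 4))  # 6000.0 per address
```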


mo. about 12 years

I have already tried net.ipv4.tcp_tw_recycle over a longer time period, and it did not help. The high number of TIME_WAITs is to be expected, as the Tor service uses a lot of short-lived connections. I don't see why they should pose a problem, and I still don't see where the limitation comes from. The source code of Tor is available, but I am not familiar enough with it to dig into this.


Seth Noble about 12 years

TIME_WAIT is a factor simply because any port in that state is unavailable. If there is a spike in traffic, the number of TIME_WAITs could become so high that there are not enough free ports available for new connections. That would cause a burst of "Address already in use" errors. But because your connection times are short, samples with netstat may not capture that moment. Given all the circumstances, spreading across more nodes/addresses may be the most practical solution.
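To catch short spikes that a single netstat snapshot can miss, the TIME_WAIT count can be polled at a finer interval. A rough Python sketch, assuming Linux's /proc/net/tcp layout (state 06 = TIME_WAIT, 01 = ESTABLISHED, 0A = LISTEN) and IPv4 only (IPv6 sockets are in /proc/net/tcp6); count_states and peak_time_wait are hypothetical helpers:

```python
import time
from collections import Counter
from pathlib import Path

# Hex state codes as they appear in the "st" column of /proc/net/tcp.
STATE_NAMES = {"01": "ESTABLISHED", "06": "TIME_WAIT", "0A": "LISTEN"}


def count_states(proc_net_tcp: str) -> Counter:
    """Count sockets per TCP state; unknown codes are kept as raw hex."""
    counts: Counter = Counter()
    for line in proc_net_tcp.splitlines()[1:]:  # skip the column header
        fields = line.split()
        if len(fields) >= 4:
            counts[STATE_NAMES.get(fields[3], fields[3])] += 1
    return counts


def peak_time_wait(seconds: float = 10.0, interval: float = 0.1,
                   path: str = "/proc/net/tcp") -> int:
    """Poll the table for `seconds` and return the highest TIME_WAIT count seen."""
    peak = 0
    deadline = time.monotonic() + seconds
    while time.monotonic() < deadline:
        peak = max(peak, count_states(Path(path).read_text())["TIME_WAIT"])
        time.sleep(interval)
    return peak
```

For example, run peak_time_wait(seconds=60, interval=0.5) during a busy period. With tens of thousands of sockets each read of the table is not free, so a very short interval adds load of its own.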