How does Anaconda pick cudatoolkit?

Solution 1

Is the CUDA version shown above the same as the CUDA toolkit version?

It has nothing to do with CUDA toolkit versions.

If so, why is it the same in all the environments?

Because it is a property of the driver: it is the maximum CUDA version that the active driver on your system supports. That is also why nothing works when you try to use CUDA 10.2; your driver needs to be updated to support CUDA 10.2.
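To make the distinction concrete, here is a minimal Python sketch that reads the driver's maximum CUDA version from the nvidia-smi header line (the one from the question's output) and checks each env's cudatoolkit against it. The function names are hypothetical. The rule used, "the toolkit's major.minor must not exceed the driver's", is the one this answer describes; CUDA 11 and later add minor-version compatibility, which relaxes it.

```python
import re

def driver_max_cuda(nvidia_smi_output: str) -> tuple[int, int]:
    """Extract the 'CUDA Version: X.Y' field from nvidia-smi's header."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", nvidia_smi_output)
    if match is None:
        raise ValueError("no 'CUDA Version' field found")
    return int(match.group(1)), int(match.group(2))

def toolkit_supported(toolkit_version: str, driver_max: tuple[int, int]) -> bool:
    """True if the toolkit's major.minor does not exceed the driver's maximum."""
    major, minor = (int(part) for part in toolkit_version.split(".")[:2])
    return (major, minor) <= driver_max

header = "| NVIDIA-SMI 435.21       Driver Version: 435.21       CUDA Version: 10.1     |"
limit = driver_max_cuda(header)
for toolkit in ("10.0.130", "10.1.168", "10.2.89"):
    # Same driver limit for every env: it comes from the driver, not the env.
    print(toolkit, toolkit_supported(toolkit, limit))
```

With driver 435.21 the limit is 10.1, so env1 and env2 pass and env3 (cudatoolkit 10.2.89) does not.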

Solution 2

This isn't really answering the original question, but the follow-up ones:

TensorFlow and PyTorch can be installed directly through Anaconda without explicitly downloading the CUDA toolkit from NVIDIA; only the GPU driver needs to be installed. In this case nvcc is not installed, yet it still works fine. How does it work in this case?

In general, GPU packages on Anaconda/conda-forge are built using Anaconda's new CUDA compiler toolchain. It is designed so that nvcc and friends are split from the rest of the runtime libraries (cuFFT, cuSPARSE, etc.) in the CUDA Toolkit. The runtime libraries are packed in the cudatoolkit package, which is declared as a run dependency (in conda's terminology) and is installed when you install GPU packages like PyTorch.
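One way to see this split on a machine set up as described (driver only, GPU packages from conda) is to check which CUDA command-line tools are on PATH. A minimal Python sketch; `cuda_tools_on_path` is a hypothetical helper. On such a machine you would typically expect nvidia-smi (installed with the driver) to be found and nvcc (part of a full CUDA Toolkit install) to be missing, even though GPU packages in the env work.

```python
import shutil

def cuda_tools_on_path() -> dict[str, bool]:
    """Report which CUDA command-line tools are visible on PATH."""
    # nvidia-smi is installed with the driver; nvcc comes with a full CUDA Toolkit.
    return {tool: shutil.which(tool) is not None for tool in ("nvcc", "nvidia-smi")}

print(cuda_tools_on_path())
```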

Then GPU packages are compiled, linked against cudatoolkit, and packaged, which is why you only need the CUDA driver installed and nothing else. Because of this linkage, the system's CUDA Toolkit, if there is one, is ignored by default, unless the package (such as Numba) has its own way of looking up CUDA libraries at runtime.
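Since the runtime libraries live inside the env rather than in a system CUDA install, you can see them by listing the env's library directory. A minimal Python sketch, assuming a Linux conda env where shared libraries sit under `<prefix>/lib` (Windows uses a different layout); the helper name and the library patterns are illustrative, not a conda API.

```python
import glob
import os
import sys

# Assumed Linux layout: conda installs shared libraries under <prefix>/lib.
RUNTIME_PATTERNS = ("libcudart.so*", "libcublas.so*", "libcufft.so*", "libcusparse.so*")

def env_cuda_runtime_libs(prefix: str = sys.prefix) -> list[str]:
    """List CUDA runtime libraries present in a conda env (e.g. from cudatoolkit)."""
    lib_dir = os.path.join(prefix, "lib")
    found: list[str] = []
    for pattern in RUNTIME_PATTERNS:
        found.extend(glob.glob(os.path.join(lib_dir, pattern)))
    return sorted(os.path.basename(path) for path in found)

# Run inside an activated env: sys.prefix is that env's root directory.
print(env_cuda_runtime_libs())
```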

It's worth mentioning that the installed cudatoolkit does not always match your driver. In that case, you can explicitly constrain its version (say, 10.0):

conda install some_gpu_package cudatoolkit=10.0
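If you would rather derive that constraint from the driver's limit than pick it by hand, it comes down to comparing major.minor versions. A minimal Python sketch: `pick_cudatoolkit` is hypothetical, the version list is the three cudatoolkit builds from the question rather than a live query of the channel, and some_gpu_package is the placeholder from the command above.

```python
def pick_cudatoolkit(driver_max: str, available: list[str]) -> str:
    """Return the newest cudatoolkit major.minor that does not exceed the driver maximum."""
    def major_minor(version: str) -> tuple[int, ...]:
        return tuple(int(part) for part in version.split(".")[:2])

    limit = major_minor(driver_max)
    candidates = [v for v in available if major_minor(v) <= limit]
    if not candidates:
        raise ValueError(f"no cudatoolkit <= {driver_max} available")
    best = max(candidates, key=major_minor)
    return ".".join(best.split(".")[:2])

version = pick_cudatoolkit("10.1", ["10.0.130", "10.1.168", "10.2.89"])
print(f"conda install some_gpu_package cudatoolkit={version}")
```

For the driver in the question (maximum CUDA 10.1) this prints `conda install some_gpu_package cudatoolkit=10.1`.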

What happens when the environment in which TensorFlow is installed is activated? Does conda create environment variables for accessing CUDA libraries only when the environment is activated?

Conda always sets up some environment variables when an env is activated. I am not fully sure about TensorFlow, but most likely it is linked against the CUDA runtime libraries (in other words, cudatoolkit) when it is built. So when you launch TensorFlow or other GPU apps, they use the cudatoolkit installed in the same conda env.
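Two of the variables conda sets on activation are CONDA_PREFIX (the env's root directory) and CONDA_DEFAULT_ENV (its name). The Python sketch below reads them to tell which env a process is running in, which is also the prefix whose cudatoolkit a conda-built GPU package would be expected to use. The helper name is hypothetical, and the sketch only inspects variables; it does not show how the libraries are actually resolved.

```python
import os
from typing import Mapping, Optional

def active_conda_env(environ: Mapping[str, str] = os.environ) -> Optional[tuple[str, str]]:
    """Return (env name, env prefix) from variables conda sets on activation."""
    prefix = environ.get("CONDA_PREFIX")
    if prefix is None:
        return None  # no conda env is active in this process
    # Fall back to the prefix's directory name if CONDA_DEFAULT_ENV is unset.
    name = environ.get("CONDA_DEFAULT_ENV", os.path.basename(prefix))
    return name, prefix

print(active_conda_env())
```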

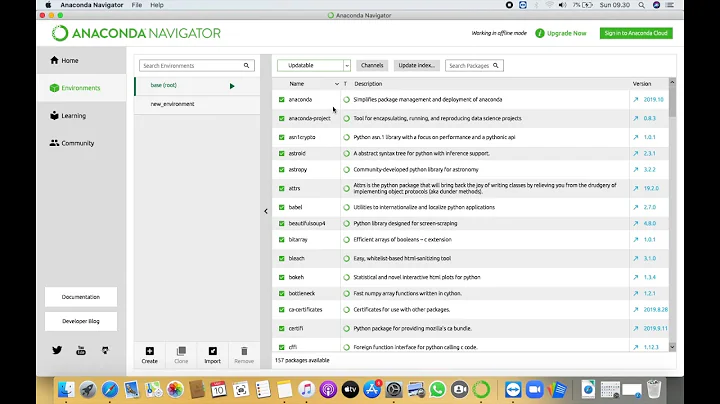

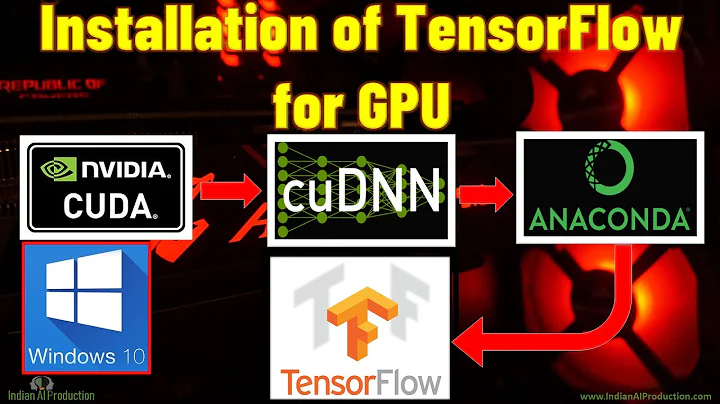

Related videos on Youtube

bananagator

Updated on June 04, 2022

Comments


bananagator almost 2 years

I have multiple Anaconda environments with different CUDA toolkits installed in them.

env1 has cudatoolkit 10.0.130

env2 has cudatoolkit 10.1.168

env3 has cudatoolkit 10.2.89

I found these by running conda list in each environment. When I run nvidia-smi, I get the following output no matter which environment I am in:

+-----------------------------------------------------------------------------+
| NVIDIA-SMI 435.21       Driver Version: 435.21       CUDA Version: 10.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  GeForce RTX 208...  Off  | 00000000:01:00.0  On |                  N/A |
|  0%   42C    P8     7W / 260W |    640MiB / 11016MiB |      2%      Default |
+-------------------------------+----------------------+----------------------+

Is the CUDA version shown above the same as the CUDA toolkit version? If so, why is it the same in all the environments?

In env3, which has cudatoolkit version 10.2.89, I tried installing the CuPy library using the command pip install cupy-cuda102. I get the following error when I try to do it:

ERROR: Could not find a version that satisfies the requirement cupy-cuda102 (from versions: none)
ERROR: No matching distribution found for cupy-cuda102

I was able to install it using pip install cupy-cuda101, which is for CUDA 10.1. Why is it not able to find cudatoolkit 10.2?

The reason I am asking this question is that I am getting the error cupy.cuda.cublas.CUBLASError: CUBLAS_STATUS_NOT_INITIALIZED when I run a deep learning model. I am just wondering if the cudatoolkit version has something to do with this error. Even if this error is not related to the cudatoolkit version, I want to know how Anaconda uses cudatoolkit.

Leo Fang about 4 years

Let me add two things:

1. cudatoolkit on Anaconda only comes with the CUDA runtime libraries. Neither the driver nor nvcc is included.
2. cupy-cuda102 is not yet available. This is a known issue that the team is working on.

bananagator about 4 years

@LeoFang TensorFlow and PyTorch can be installed directly through Anaconda without explicitly downloading the CUDA toolkit from NVIDIA; only the GPU driver needs to be installed. In this case nvcc is not installed, yet it still works fine. How does that work? What happens when the environment in which TensorFlow is installed is activated? Does conda create environment variables for accessing CUDA libraries only when the environment is activated?


aquagremlin about 4 years

Thank you for clarifying the distinction between the local CUDA install and the cudatoolkit that Anaconda installs. However, there is a package called 'cupy' that uses CUDA to accelerate NumPy calculations. If I start a Python terminal, import cupy, and run cupy.show_config(), it shows me the CUDA root. I can change the CUDA location in my PATH by modifying the .bashrc file, and cupy will reflect the new location. So it seems that even though Anaconda's cudatoolkit is a 'CUDA unto itself', the cupy package refers to the natively installed CUDA. Is this correct?


Leo Fang about 4 years

@aquagremlin Sorry I missed your comment. You asked the right person: I am one of the maintainers of CuPy's conda-forge recipe. The issue you raised is currently being resolved. For conda-forge CuPy, cupy.show_config() does not correctly print the path of the cudatoolkit installed in your conda env (even though the linking is correct, meaning cudatoolkit, rather than your local CUDA installation, is indeed used); see cupy/cupy#3222. So the answer to your question is, for the moment, yes.
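aquagremlin's observation (editing PATH in .bashrc changes the CUDA root that cupy.show_config() reports) is consistent with a root lookup driven by the shell environment rather than by the conda env. The Python sketch below is a simplified, hypothetical model of such a lookup. It assumes the order CUDA_PATH, then the directory above the nvcc found on PATH, then /usr/local/cuda; that order is an assumption about CuPy's behavior, not a copy of its code. As the comment says, the libraries actually linked still come from cudatoolkit.

```python
import os
import shutil
from typing import Callable, Mapping, Optional

def guess_cuda_root(
    environ: Mapping[str, str] = os.environ,
    which: Callable[[str], Optional[str]] = shutil.which,
    default: str = "/usr/local/cuda",
) -> Optional[str]:
    """Guess a CUDA root from the environment (simplified, hypothetical model)."""
    # 1. An explicit CUDA_PATH wins.
    if environ.get("CUDA_PATH"):
        return environ["CUDA_PATH"]
    # 2. Otherwise derive the root from nvcc on PATH: <root>/bin/nvcc -> <root>.
    nvcc = which("nvcc")
    if nvcc is not None:
        return os.path.dirname(os.path.dirname(nvcc))
    # 3. Fall back to the conventional system location, if it exists.
    return default if os.path.isdir(default) else None

print(guess_cuda_root())
```

Because steps 1 and 2 depend only on environment variables and PATH, editing .bashrc changes the reported root without touching which cudatoolkit the package was linked against.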