How to create a simple custom filter for iOS using the Core Image framework?

Solution 1

OUTDATED

You can't create your own custom kernels/filters in iOS yet. See http://developer.apple.com/library/mac/#documentation/graphicsimaging/Conceptual/CoreImaging/ci_intro/ci_intro.html, specifically:

Although this document is included in the reference library, it has not been updated in detail for iOS 5.0. A forthcoming revision will detail the differences in Core Image on iOS. In particular, the key difference is that Core Image on iOS does not include the ability to create custom image filters.

(Bolding mine)

Solution 2

As Adam states, Core Image on iOS does not currently support custom kernels the way the older Mac implementation does. This limits what you can do with the framework to combinations of the existing filters.
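To make "a combination of existing filters" concrete, here is a minimal sketch that chains two built-in filters, `CISepiaTone` and `CIVignette`, by feeding the output of the first into the second. The helper name `ApplySepiaThenVignette` and the parameter values are assumptions chosen only for illustration.

```objc
#import <CoreImage/CoreImage.h>

// Hypothetical helper: applies a sepia tone, then a vignette, using only
// filters that ship with Core Image.
static CIImage *ApplySepiaThenVignette(CIImage *input)
{
    CIFilter *sepia = [CIFilter filterWithName:@"CISepiaTone"];
    [sepia setValue:input forKey:kCIInputImageKey];
    [sepia setValue:@0.8 forKey:@"inputIntensity"];

    // Chain the filters: the sepia output becomes the vignette input.
    CIFilter *vignette = [CIFilter filterWithName:@"CIVignette"];
    [vignette setValue:sepia.outputImage forKey:kCIInputImageKey];
    [vignette setValue:@1.0 forKey:@"inputIntensity"];
    [vignette setValue:@2.0 forKey:@"inputRadius"];

    return vignette.outputImage;
}
```

Nothing is rendered until the resulting `CIImage` is drawn through a `CIContext`, so chaining like this only builds up a recipe.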

(Update: 2/13/2012)

For this reason, I've created an open source framework for iOS called GPUImage, which lets you create custom filters to be applied to images and video using OpenGL ES 2.0 fragment shaders. I describe how this framework operates in more detail in my post on the topic. Basically, you supply your own OpenGL Shading Language (GLSL) fragment shader to define a custom filter, and then run that filter against static images or live video. This framework is compatible with all iOS devices that support OpenGL ES 2.0, and can be used in applications that target iOS 4.0.

For example, you can set up filtering of live video using code like the following:

    // Capture live video from the back camera at 640x480
    GPUImageVideoCamera *videoCamera = [[GPUImageVideoCamera alloc] initWithSessionPreset:AVCaptureSessionPreset640x480 cameraPosition:AVCaptureDevicePositionBack];

    // Load the custom fragment shader from the CustomShader file
    GPUImageFilter *customFilter = [[GPUImageFilter alloc] initWithFragmentShaderFromFile:@"CustomShader"];

    GPUImageView *filteredVideoView = [[GPUImageView alloc] initWithFrame:CGRectMake(0.0, 0.0, viewWidth, viewHeight)];

    // Add the view somewhere so it's visible

    // Build the chain: camera -> custom filter -> on-screen view
    [videoCamera addTarget:customFilter];
    [customFilter addTarget:filteredVideoView];

    [videoCamera startCameraCapture];

As an example of a custom fragment shader program that defines a filter, the following applies a sepia tone effect:

    varying highp vec2 textureCoordinate;

    uniform sampler2D inputImageTexture;

    void main()
    {
        lowp vec4 textureColor = texture2D(inputImageTexture, textureCoordinate);
        lowp vec4 outputColor;

        // Each output channel is a weighted sum of the input RGB channels
        outputColor.r = (textureColor.r * 0.393) + (textureColor.g * 0.769) + (textureColor.b * 0.189);
        outputColor.g = (textureColor.r * 0.349) + (textureColor.g * 0.686) + (textureColor.b * 0.168);
        outputColor.b = (textureColor.r * 0.272) + (textureColor.g * 0.534) + (textureColor.b * 0.131);

        // Keep the source alpha so the output is fully defined
        outputColor.a = textureColor.a;

        gl_FragColor = outputColor;
    }
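To see what those weights do to an actual pixel, the following CPU-side sketch applies the same arithmetic to a single color. The `RGBAPixel` type and the `SepiaPixel` function are hypothetical names used only here. The clamp to 1.0 assumes an ordinary 8-bit render target, and passing alpha through unchanged is likewise an assumption of this sketch.

```objc
#import <Foundation/Foundation.h>
#include <math.h>

// Hypothetical pixel type with channels in the range 0.0 to 1.0.
typedef struct { float r, g, b, a; } RGBAPixel;

// Applies the shader's sepia weights to one pixel on the CPU.
static RGBAPixel SepiaPixel(RGBAPixel p)
{
    RGBAPixel out;
    // Sums above 1.0 are clamped, as a fixed-point framebuffer would do.
    out.r = fminf(p.r * 0.393f + p.g * 0.769f + p.b * 0.189f, 1.0f);
    out.g = fminf(p.r * 0.349f + p.g * 0.686f + p.b * 0.168f, 1.0f);
    out.b = fminf(p.r * 0.272f + p.g * 0.534f + p.b * 0.131f, 1.0f);
    out.a = p.a; // alpha passes through unchanged (assumption)
    return out;
}
```

For pure white (1, 1, 1) the unclamped sums are 1.351, 1.203 and 0.937, so the result is roughly (1.0, 1.0, 0.937), a slightly warm white. Mid gray (0.5, 0.5, 0.5) becomes roughly (0.676, 0.602, 0.469), which is the familiar brownish tint.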

The language used for writing custom Core Image kernels on the Mac is very similar to GLSL. In fact, with GLSL you'll be able to do a few things that you can't in desktop Core Image, because Core Image's kernel language lacks some features that GLSL has (like branching).

Solution 3

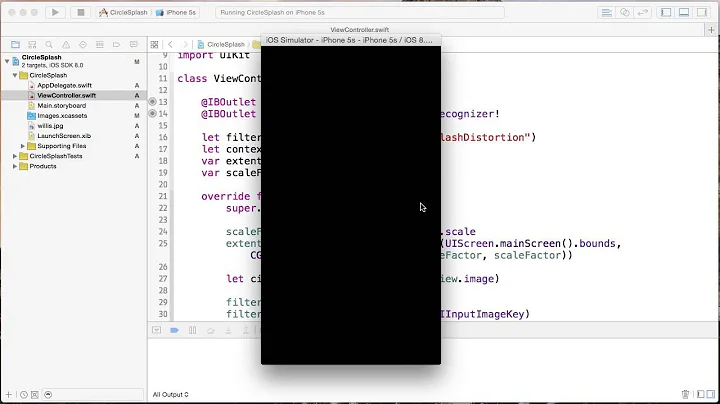

The original accepted answer is outdated. Starting with iOS 8, you can create custom kernels for filters. You can find more information about this in:

- WWDC 2014 Session 514 Advances in Core Image

- Session 514 transcript

- WWDC 2014 Session 515 Developing Core Image Filters for iOS

- Session 515 transcript
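As a rough illustration of what the iOS 8 API allows, the sketch below expresses the sepia weights from the GLSL shader in Solution 2 as a Core Image color kernel. It relies on `CIColorKernel` with `kernelWithString:` and `applyWithExtent:arguments:`, which arrived in iOS 8 (the string-based kernel API was later deprecated in favor of Metal-based kernels). The kernel name `sepiaTone` and the helper `ApplyCustomSepia` are hypothetical names picked for this example and are not taken from the sessions listed above.

```objc
#import <CoreImage/CoreImage.h>

// Kernel source in the Core Image Kernel Language. A color kernel receives
// one pixel at a time and returns the replacement pixel.
static NSString *const kSepiaKernelSource =
    @"kernel vec4 sepiaTone(__sample pixel)\n"
    @"{\n"
    @"    vec3 rgb = pixel.rgb;\n"
    @"    float r = dot(rgb, vec3(0.393, 0.769, 0.189));\n"
    @"    float g = dot(rgb, vec3(0.349, 0.686, 0.168));\n"
    @"    float b = dot(rgb, vec3(0.272, 0.534, 0.131));\n"
    @"    return vec4(r, g, b, pixel.a);\n"
    @"}\n";

// Hypothetical helper: compiles the kernel and applies it to an image.
static CIImage *ApplyCustomSepia(CIImage *input)
{
    CIColorKernel *kernel = [CIColorKernel kernelWithString:kSepiaKernelSource];
    if (kernel == nil) {
        return nil; // the kernel source failed to compile
    }
    // A color kernel maps each pixel independently, so the output extent
    // is the same as the input extent.
    return [kernel applyWithExtent:input.extent arguments:@[input]];
}
```

In a real app you would probably compile the kernel once and cache it, and wrap the call in a `CIFilter` subclass that overrides `outputImage` so it can be used like the built-in filters.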

Matrosov Oleksandr

Updated on June 04, 2022

Matrosov Oleksandr almost 2 years

I want to use a custom filter in my app. I know that I need to use the Core Image framework, but I'm not sure that is the right way. Core Image is used on Mac OS, and I'm not sure whether it can be used for custom CIFilter effects on iOS 5.0. Can you help me with this issue? Thanks all!