How to find the max String length of a column in Spark using dataframe?

Solution 1

Use row_number() window function on length('city) desc order.

Then filter out only the first row_number column and add length('city) column to dataframe.

Ex:

val df=Seq(("A",1,"US"),("AB",1,"US"),("ABC",1,"US"))

.toDF("city","num","country")

val win=Window.orderBy(length('city).desc)

df.withColumn("str_len",length('city))

.withColumn("rn", row_number().over(win))

.filter('rn===1)

.show(false)

+----+---+-------+-------+---+

|city|num|country|str_len|rn |

+----+---+-------+-------+---+

|ABC |1 |US |3 |1 |

+----+---+-------+-------+---+

(or)

In spark-sql:

df.createOrReplaceTempView("lpl")

spark.sql("select * from (select *,length(city)str_len,row_number() over (order by length(city) desc)rn from lpl)q where q.rn=1")

.show(false)

+----+---+-------+-------+---+

|city|num|country|str_len| rn|

+----+---+-------+-------+---+

| ABC| 1| US| 3| 1|

+----+---+-------+-------+---+

Update:

Find min,max values:

val win_desc=Window.orderBy(length('city).desc)

val win_asc=Window.orderBy(length('city).asc)

df.withColumn("str_len",length('city))

.withColumn("rn", row_number().over(win_desc))

.withColumn("rn1",row_number().over(win_asc))

.filter('rn===1 || 'rn1 === 1)

.show(false)

Result:

+----+---+-------+-------+---+---+

|city|num|country|str_len|rn |rn1|

+----+---+-------+-------+---+---+

|A |1 |US |1 |3 |1 | //min value of string

|ABC |1 |US |3 |1 |3 | //max value of string

+----+---+-------+-------+---+---+

Solution 2

In case you have multiple rows which share the same length, then the solution with the window function won't work, since it filters the first row after ordering.

Another way would be to create a new column with the length of the string, find it's max element and filter the data frame upon the obtained maximum value.

import org.apache.spark.sql._

import org.apache.spark.sql.functions._

import spark.implicits._

val df=Seq(("A",1,"US"),("AB",1,"US"),("ABC",1,"US"), ("DEF", 2, "US"))

.toDF("city","num","country")

val dfWithLength = df.withColumn("city_length", length($"city")).cache()

dfWithLength.show()

+----+---+-------+-----------+

|city|num|country|city_length|

+----+---+-------+-----------+

| A| 1| US| 1|

| AB| 1| US| 2|

| ABC| 1| US| 3|

| DEF| 2| US| 3|

+----+---+-------+-----------+

val Row(maxValue: Int) = dfWithLength.agg(max("city_length")).head()

dfWithLength.filter($"city_length" === maxValue).show()

+----+---+-------+-----------+

|city|num|country|city_length|

+----+---+-------+-----------+

| ABC| 1| US| 3|

| DEF| 2| US| 3|

+----+---+-------+-----------+

Solution 3

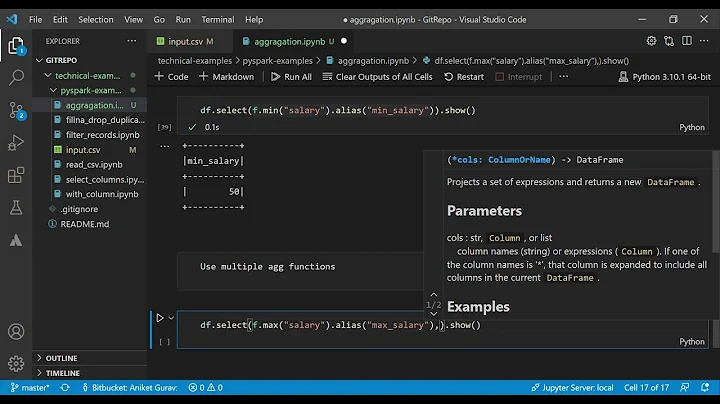

Find a maximum string length on a string column with pyspark

from pyspark.sql.functions import length, col, max

df2 = df.withColumn("len_Description",length(col("Description"))).groupBy().max("len_Description")

Related videos on Youtube

Shashank V C

Updated on September 23, 2022Comments

-

Shashank V C over 1 year

I have a dataframe. I need to calculate the Max length of the String value in a column and print both the value and its length.

I have written the below code but the output here is the max length only but not its corresponding value. This How to get max length of string column from dataframe using scala? did help me out in getting the below query.

df.agg(max(length(col("city")))).show() -

Eugene Lycenok over 2 yearsthis might work well with small datasets, but I don't think ordering the whole data set is the fastest thing to do only to find the maximum and minimum values