How to strip out all of the links from an HTML file with Bash, grep, or batch and store them in a text file

Solution 1

$ sed -n 's/.*href="\([^"]*\).*/\1/p' file
http://www.drawspace.com/lessons/b03/simple-symmetry
http://www.drawspace.com/lessons/b04/faces-and-a-vase
http://www.drawspace.com/lessons/b05/blind-contour-drawing
http://www.drawspace.com/lessons/b06/seeing-values
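
Because .* is greedy, this sed command keeps only the last href on each input line, so if several links share one line, the others are lost. A minimal sketch of one workaround, assuming the links sit in double-quoted href attributes: use tr to start a new line at every <, then apply the same kind of sed extraction. The helper name extract_hrefs is hypothetical, and only tr and sed features shared by the GNU and BSD (macOS) versions are used.

```shell
# extract_hrefs (hypothetical helper): print every double-quoted href value
# from HTML on stdin, one per line, even when several links share a line.
extract_hrefs() {
  # Start a new line before every '<' so each tag begins its own line,
  # then keep only the text between href=" and the next double quote.
  tr '<' '\n' | sed -n 's/.*href="\([^"]*\)".*/\1/p'
}

# Usage: extract_hrefs < file > links.txt
```

Splitting at < is still a text hack rather than an HTML parser, so it shares the usual caveats of regex-based HTML handling.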

Solution 2

You can use grep for this:

grep -Po '(?<=href=")[^"]*' file

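It prints everything after href=" up to the next double quote: (?<=href=") is a Perl-style lookbehind, which needs the -P option of GNU grep, and -o prints only the matched text, one match per line.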

With your given input it returns:

http://www.drawspace.com/lessons/b03/simple-symmetry
http://www.drawspace.com/lessons/b04/faces-and-a-vase
http://www.drawspace.com/lessons/b05/blind-contour-drawing
http://www.drawspace.com/lessons/b06/seeing-values

Note that there is no need to write cat drawspace.txt | grep '<a href=".*">'. grep can read the file itself, so grep '<a href=".*">' drawspace.txt does the same job without the useless use of cat.

Another example

$ cat a
hello <a href="httafasdf">asdas</a>
hello <a href="hello">asdas</a>
other things
$ grep -Po '(?<=href=")[^"]*' a
httafasdf
hello
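
-P is a GNU grep extension, so the lookbehind above may be rejected by the BSD grep that ships with macOS. A sketch that gets the same result without -P, assuming double-quoted href attributes: grep -o prints each href="..." match on its own line, and sed strips the href=" prefix and the closing quote. The helper name hrefs_no_pcre is made up for this example.

```shell
# hrefs_no_pcre (hypothetical helper): same output as
#   grep -Po '(?<=href=")[^"]*'
# but without -P. Reads the files given as arguments, or stdin if none.
hrefs_no_pcre() {
  # -o prints each match on its own line; [^"]* stops at the closing quote.
  # -h drops the "filename:" prefix grep adds when several files are given.
  grep -ho 'href="[^"]*"' "$@" | sed -e 's/^href="//' -e 's/"$//'
}

# Usage: hrefs_no_pcre a > links.txt
```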

Solution 3

My guess is your PC or Mac will not have the lynx command installed by default (it's available for free on the web), but lynx will let you do things like this:

$ lynx -dump -image_links -listonly /usr/share/xdiagnose/workloads/youtube-reload.html

Output:

References

- file://localhost/usr/share/xdiagnose/workloads/youtube-reload.html
- http://www.youtube.com/v/zeNXuC3N5TQ&hl=en&fs=1&autoplay=1

It is then a simple matter to grep for the http: lines, and lynx may even have an option to print only those (it has many, many options).
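
Because each URL in lynx's reference list is preceded by a list marker, grep -o can extract just the URL part of each line instead of matching at the start of it. A minimal sketch, assuming you only want http and https links; http_links is a hypothetical helper name, and lynx itself is only needed for the usage line, not for the filter.

```shell
# http_links (hypothetical helper): keep only http:// and https:// URLs from
# text on stdin, e.g. the reference list printed by lynx -dump -listonly.
http_links() {
  # -E: extended regex; -o: print only the URL, dropping any list marker.
  grep -Eo 'https?://[^[:space:]]+'
}

# Usage (requires lynx):
#   lynx -dump -image_links -listonly file.html | http_links > links.txt
```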

Solution 4

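Use grep -o to extract each <a href="..." fragment and then sed to strip the markup around the URL:

grep -ho '<a href="[^"]*"' *.html | sed -e 's/^<a href="//' -e 's/"$//' > link.txt

Here [^"]* stops at the closing quote, so several links on one line come out separately (a greedy '<a href=".*">' would run on to the last "> on the line), -h stops grep from prefixing each match with its file name when *.html matches more than one file, and the two separate s commands avoid the \| alternation, a GNU extension that BSD sed (as on macOS) may not support.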

Solution 5

As per tripleee's comment, using regular expressions to parse HTML or XML is generally discouraged. Tools such as sed and awk are extremely powerful for handling text files, but when it comes to parsing complex structured data, such as XML, HTML, or JSON, they are nothing more than a sledgehammer. Yes, you can get the job done, but sometimes at a tremendous cost. Such delicate files call for a bit more finesse and a more targeted set of tools.

To parse XML or HTML, you can easily use xmlstarlet.

For an XHTML file, you can use:

xmlstarlet sel --html -N "x=http://www.w3.org/1999/xhtml" \
    -t -m '//x:a/@href' -v . -n

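where -N binds the prefix x to the XHTML namespace that the document declares, if any, with

<html xmlns="http://www.w3.org/1999/xhtml">

The template then matches (-m) every href attribute of an a element, prints its value (-v .), and ends each value with a newline (-n).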

However, as HTML pages are often not well-formed XML, it can be handy to clean them up first with tidy. For the example input above, this gives:

$ tidy -q -numeric -asxhtml --show-warnings no file.html \
    | xmlstarlet sel --html -N "x=http://www.w3.org/1999/xhtml" \
    -t -m '//x:a/@href' -v . -n
http://www.drawspace.com/lessons/b03/simple-symmetry
http://www.drawspace.com/lessons/b04/faces-and-a-vase
http://www.drawspace.com/lessons/b05/blind-contour-drawing
http://www.drawspace.com/lessons/b06/seeing-values

A'sa Dickens

Updated on September 06, 2020

Comments

A'sa Dickens, over 3 years ago: I have a file that is HTML, and it has about 150 anchor tags. I need only the links from these tags, AKA,

<a href="http://www.google.com"></a>. I want to get only the http://www.google.com part. When I run a grep,

cat website.htm | grep -E '<a href=".*">' > links.txt

this returns the entire line it found the match on, not the link I want, so I tried using a cut command:

cat drawspace.txt | grep -E '<a href=".*">' | cut -d’”’ --output-delimiter=$'\n' > links.txt

Except that it is wrong, and it doesn't work; it gives me some error about wrong parameters... So I assume that the file was supposed to be passed along too. Maybe like

cut -d’”’ --output-delimiter=$'\n' grepedText.txt > links.txt

But I wanted to do this in one command if possible... So I tried an AWK command:

cat drawspace.txt | grep '<a href=".*">' | awk '{print $2}’

But this wouldn't run either. It was asking me for more input, because I wasn't finished....

I tried writing a batch file, and it told me FINDSTR is not an internal or external command... So I assume my environment variables were messed up, and rather than fix that I tried installing grep on Windows, but that gave me the same error....

The question is, what is the right way to strip out the HTTP links from HTML? With that I will make it work for my situation.

P.S. I've read so many links/Stack Overflow posts that showing my references would take too long.... If example HTML is needed to show the complexity of the process, then I will add it.

I also have a Mac and a PC, which I switch back and forth between to use their shell/batch/grep/terminal commands, so either will help me.

I also want to point out that I'm in the correct directory.

HTML:

<tr valign="top"> <td class="beginner"> B03 </td> <td> <a href="http://www.drawspace.com/lessons/b03/simple-symmetry">Simple Symmetry</a> </td> </tr>
<tr valign="top"> <td class="beginner"> B04 </td> <td> <a href="http://www.drawspace.com/lessons/b04/faces-and-a-vase">Faces and a Vase</a> </td> </tr>
<tr valign="top"> <td class="beginner"> B05 </td> <td> <a href="http://www.drawspace.com/lessons/b05/blind-contour-drawing">Blind Contour Drawing</a> </td> </tr>
<tr valign="top"> <td class="beginner"> B06 </td> <td> <a href="http://www.drawspace.com/lessons/b06/seeing-values">Seeing Values</a> </td> </tr>

Expected output:

http://www.drawspace.com/lessons/b03/simple-symmetry
http://www.drawspace.com/lessons/b04/faces-and-a-vase
http://www.drawspace.com/lessons/b05/blind-contour-drawing
etc.
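
The cut attempt in the question fails for two reasons: cut needs a field list (-f), and ’”’ is made of typographic quotes, so it is neither a single character nor the ASCII double quote used in the HTML. The awk attempt has a related problem: its closing ’ is not a shell quote, so the single-quoted string never ends and the shell keeps prompting for more input. A sketch of the cut idea made to work, assuming double-quoted href attributes; links_via_cut is a hypothetical helper name.

```shell
# links_via_cut (hypothetical helper): the question's cut idea, made to work.
# grep -o emits one '<a href="URL"' fragment per link; splitting each on the
# ASCII double quote (-d '"') leaves the URL in field 2.
links_via_cut() {
  grep -o '<a href="[^"]*"' "$@" | cut -d '"' -f 2
}

# Usage: links_via_cut website.htm > links.txt
```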

A'sa Dickens, about 10 years ago: bleh, it didn't put the links on separate lines... basically the links should be separated by a \n character

tripleee, about 10 years ago: As usual, don't use regex to parse HTML.

A'sa Dickens, about 10 years ago: cat website.htm | grep -Po '(?<=href=")[^"]*' > links.txt is not working; it tells me I am using grep wrong and shows me how to use it

A'sa Dickens, about 10 years ago: oh, you mentioned that; my, you are fast

A'sa Dickens, about 10 years ago: I'm reading a file and putting the output into a new file, so that would be like grep -Po '(?<=href=")[^"]*' website.htm > links.txt

fedorqui, about 10 years ago: Exactly, @A'saDickens, just redirect as you indicate.

fedorqui, about 10 years ago: Which grep are you using? Are you in a *nix environment? Just in case, escape the double quotes: grep -Po '(?<=href=\")[^\"]*'

Ed Morton, about 10 years ago: @A'saDickens The grep options used here require GNU grep, which may not be present on your UNIX installation.
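
Since -P is a GNU extension, a script that has to run on both Linux and a Mac can check for it before relying on it. A minimal sketch; pcre_grep is a hypothetical helper, and ggrep is an assumption about your setup (the name under which Homebrew commonly installs GNU grep on macOS).

```shell
# pcre_grep (hypothetical helper): print the name of the first grep on PATH
# that accepts -P; return non-zero if none does. "ggrep" is an assumed name
# for a separately installed GNU grep (e.g. via Homebrew on macOS).
pcre_grep() {
  for g in grep ggrep; do
    # A grep without PCRE support rejects -P, so this test fails for it;
    # a missing command also fails quietly because stderr is discarded.
    if printf 'x\n' | "$g" -P 'x' >/dev/null 2>&1; then
      printf '%s\n' "$g"
      return 0
    fi
  done
  return 1
}

# Usage:
#   if g=$(pcre_grep); then "$g" -Po '(?<=href=")[^"]*' website.htm > links.txt; fi
```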

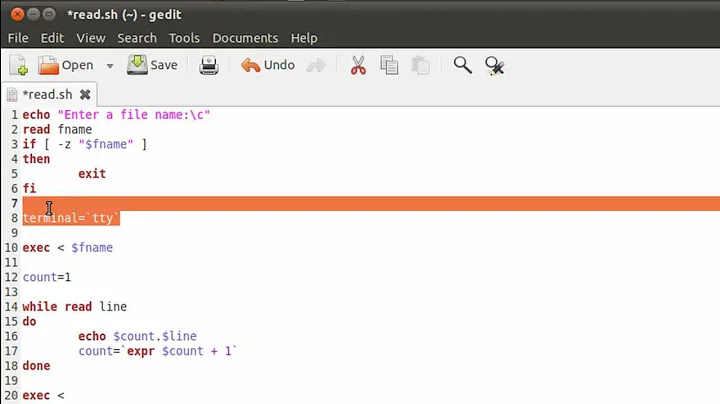

A'sa Dickens, about 10 years ago: I'm on my Mac; I uploaded a picture, maybe that will help

A'sa Dickens, about 10 years ago: this worked for me; unfortunately my HTML is more complex, so I don't get exactly what I want, but that is the fault of the question XD. Thank you, this simplifies it so manual work is not a burden

Ed Morton, about 10 years ago: sed is a Stream EDitor. Use it for simple substitutions on a single line. It has a ton of language constructs, but the only useful ones are s, g, and p (with -n); all of the rest became obsolete once awk was invented in the mid-1970s (but for some reason people keep dragging them out and using them).

A'sa Dickens, about 10 years ago: so awk replaced sed?

Ed Morton, about 10 years ago: No, sed is still the best tool for simple substitutions on a single line. It's just that sed was invented before awk, so it had a lot of language constructs to let you do very complex things. Once awk was invented, it had a MUCH improved language for handling complex operations, so you no longer needed sed FOR THAT. But those sed language constructs were never removed, so they still exist, and people inexplicably use them today to create the most monstrously unreadable scripts.

Ed Morton, about 10 years ago: Here are the rules: use grep to find patterns and print the matching lines, use sed for simple substitutions on a single line, and use awk for any other text manipulation. grep and sed were both created to simplify, and are named after, common uses of the old text editor ed: grep is named after g/re/p, which are the ed commands to find a regexp and print the matching line, while sed is Stream ED.
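
Following that rule of thumb, pulling every link out of each line, including lines that hold several anchors, is a job for awk rather than sed. A sketch using only POSIX awk features (match, RSTART, RLENGTH, substr), so it should behave the same with macOS awk and GNU awk; it assumes double-quoted href attributes, and awk_hrefs is a hypothetical name.

```shell
# awk_hrefs (hypothetical helper): print every double-quoted href value,
# one per line. Reads the files given as arguments, or stdin if none.
awk_hrefs() {
  awk '{
    # Repeatedly find the next href="..." on the current line.
    while (match($0, /href="[^"]*"/)) {
      # Skip the 6 characters of href=" and drop the closing quote.
      print substr($0, RSTART + 6, RLENGTH - 7)
      # Continue scanning after this match.
      $0 = substr($0, RSTART + RLENGTH)
    }
  }' "$@"
}

# Usage: awk_hrefs website.htm > links.txt
```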

A'sa Dickens, about 10 years ago: thank you for the information :3 that will help me understand the points when I get around to reading up on it

Ed Morton, about 10 years ago: You're welcome. There are books out there about sed and awk, but you do NOT need a book to learn what sed (or grep) is good at, so just get the book "Effective Awk Programming, Third Edition" by Arnold Robbins if you feel an urge to buy a book about parsing text using UNIX tools.

TCB13, about 8 years ago: sed: 1: "s/\(<a href="\|\">)//g": RE error: parentheses not balanced

patrick, almost 8 years ago: Just a note to save users of Mac terminals the trouble: the -Po in the above code sets grep up for Perl-style syntax (-P) and for printing only the matched part of each line (-o, cf. docs). The -P argument might not work on your Mac; the -o should, but neither is essential for this to work.

Maxiller, over 3 years ago: thanks man! Yours was the first of dozens of Google results that worked on a Mac terminal!
