How to submit a spark job on a remote master node in yarn client mode?

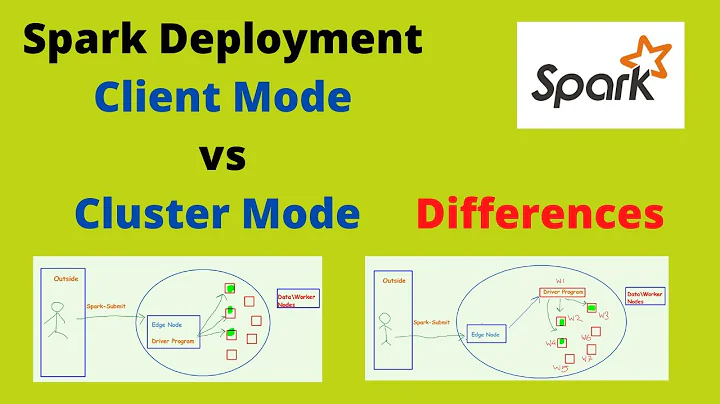

First of all, if you set the master with conf.setMaster(...) in your application code, that setting takes the highest precedence (over the --master argument). If you want to run in yarn client mode, do not use MASTER_IP:7077 in application code. Instead, supply the Hadoop client config files to your driver as follows.

Set the environment variable HADOOP_CONF_DIR or YARN_CONF_DIR to point to the directory that contains the client configuration files.

http://spark.apache.org/docs/latest/running-on-yarn.html
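As a rough sketch of that step, the wrapper below refuses to submit unless one of the two variables points at an existing directory, and only then calls spark-submit with `--master yarn --deploy-mode client`. The function name `submit_yarn_client`, the `SPARK_SUBMIT` override, and the `$HOME/cluster-conf` path are assumptions made for illustration; they are not part of Spark.

```shell
# Sketch: submit in yarn client mode only when a client config directory is set.
# submit_yarn_client and SPARK_SUBMIT are hypothetical names, not Spark features.
submit_yarn_client() {
  # Prefer HADOOP_CONF_DIR, fall back to YARN_CONF_DIR.
  conf_dir="${HADOOP_CONF_DIR:-${YARN_CONF_DIR:-}}"
  if [ -z "$conf_dir" ] || [ ! -d "$conf_dir" ]; then
    echo "Set HADOOP_CONF_DIR or YARN_CONF_DIR to the client config directory" >&2
    return 1
  fi
  # Defaults to the ./spark-submit used in the question.
  "${SPARK_SUBMIT:-./spark-submit}" --master yarn --deploy-mode client "$@"
}

# Usage (the directory is a placeholder for wherever the cluster's
# client config files were copied on the workstation):
#   export HADOOP_CONF_DIR="$HOME/cluster-conf"
#   submit_yarn_client --class SparkTest /home/vm/test.jar
```

With this in place the master comes from the command line, so the application code should not call conf.setMaster(...) at all.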

Depending on which Hadoop features your Spark application uses, some of the config files will be used to look up configuration. If you are using Hive (through HiveContext in spark-sql), it will look for hive-site.xml. hdfs-site.xml is used to look up the NameNode coordinates for reading from and writing to HDFS from your job.
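A quick way to see which of these files are absent from the client directory is sketched below. The helper name `missing_client_configs` is hypothetical, and the file list is an assumption (core-site.xml and yarn-site.xml are the usual Hadoop and YARN client files alongside the two named above); trim it to the features your job actually uses.

```shell
# Sketch: print the expected client config files that are missing from a directory.
# The list of file names is an assumption; adjust it to the job's needs.
missing_client_configs() {
  dir="$1"
  for f in core-site.xml hdfs-site.xml yarn-site.xml hive-site.xml; do
    # Print the name only when the file is not present.
    [ -f "$dir/$f" ] || echo "$f"
  done
}

# Usage:
#   missing_client_configs "$HADOOP_CONF_DIR"
```

An empty output means every listed file was found; a missing hive-site.xml only matters if the job uses HiveContext.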


Mnemosyne almost 2 years

I need to submit Spark apps/jobs to a remote Spark cluster. I currently have Spark on my machine and the IP address of the master node, which I want to use in yarn-client mode. By the way, my machine is not part of the cluster. I submit my job with this command

    ./spark-submit --class SparkTest --deploy-mode client /home/vm/app.jar

I have the address of my master hardcoded into my app in the form

    val spark_master = "spark://IP:7077"

And yet all I get is the error

    16/06/06 03:04:34 INFO AppClient$ClientEndpoint: Connecting to master spark://IP:7077...
    16/06/06 03:04:34 WARN AppClient$ClientEndpoint: Failed to connect to master IP:7077
    java.io.IOException: Failed to connect to /IP:7077
        at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:216)
        at org.apache.spark.network.client.TransportClientFactory.createClient(TransportClientFactory.java:167)
        at org.apache.spark.rpc.netty.NettyRpcEnv.createClient(NettyRpcEnv.scala:200)
        at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:187)
        at org.apache.spark.rpc.netty.Outbox$$anon$1.call(Outbox.scala:183)
        at java.util.concurrent.FutureTask.run(FutureTask.java:266)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
        at java.lang.Thread.run(Thread.java:745)
    Caused by: java.net.ConnectException: Connection refused: /IP:7077

Or instead if I use

    ./spark-submit --class SparkTest --master yarn --deploy-mode client /home/vm/test.jar

I get

    Exception in thread "main" java.lang.Exception: When running with master 'yarn' either HADOOP_CONF_DIR or YARN_CONF_DIR must be set in the environment.
        at org.apache.spark.deploy.SparkSubmitArguments.validateSubmitArguments(SparkSubmitArguments.scala:251)
        at org.apache.spark.deploy.SparkSubmitArguments.validateArguments(SparkSubmitArguments.scala:228)
        at org.apache.spark.deploy.SparkSubmitArguments.<init>(SparkSubmitArguments.scala:109)
        at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:114)
        at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Do I really need to have Hadoop configured on my workstation as well? All the work will be done remotely, and this machine is not part of the cluster. I am using Spark 1.6.1.
