Is there a way to flush a POSIX socket?

Solution 1

For Unix-domain sockets, you can use fflush(), but I'm thinking you probably mean network sockets. There isn't really a concept of flushing those. The closest things are:

- At the end of your session, calling shutdown(sock, SHUT_WR) to close out writes on the socket.

- On TCP sockets, disabling the Nagle algorithm with the sockopt TCP_NODELAY, which is generally a terrible idea that will not reliably do what you want, even if it seems to take care of it on initial investigation.
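As a rough sketch of the first option, the half-close could look like the following. The helper name finish_sending is hypothetical, and the code assumes a blocking stream socket whose peer closes its side once it has read everything.

```c
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: tell the peer "no more data is coming", then wait
 * until the peer closes its side. Returns 0 on a clean EOF, -1 on error. */
int finish_sending(int sock)
{
    char discard[256];
    ssize_t n;

    /* Half-close: data already queued is still delivered, then EOF. */
    if (shutdown(sock, SHUT_WR) < 0)
        return -1;

    /* Drain anything the peer still sends until read() reports EOF (0). */
    while ((n = read(sock, discard, sizeof discard)) > 0)
        ;
    return n == 0 ? 0 : -1;
}
```

This only works once per connection, so it marks the end of a session rather than acting as a general-purpose flush.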

It's very likely that handling whatever issue is calling for a 'flush' at the user protocol level is going to be the right thing.

Solution 2

What about setting TCP_NODELAY and then resetting it? This could probably be done just before sending important data, or when we are done sending a message.

    send(sock, "notimportant", ...);
    send(sock, "notimportant", ...);
    send(sock, "notimportant", ...);

    int flag = 1;
    setsockopt(sock, IPPROTO_TCP, TCP_NODELAY, (char *) &flag, sizeof(int));

    send(sock, "important data or end of the current message", ...);

    flag = 0;
    setsockopt(sock, IPPROTO_TCP, TCP_NODELAY, (char *) &flag, sizeof(int));

As the Linux man page says:

TCP_NODELAY ... setting this option forces an explicit flush of pending output ...

So it would probably be better to set it after the message, but I am not sure how it works on other systems.
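A minimal sketch of the "set it after the message" variant, wrapped in a helper. The name tcp_flush_hint is hypothetical, and the flush-on-set behaviour is only what the Linux man page quoted above describes; other systems may not behave the same way.

```c
#include <netinet/in.h>   /* IPPROTO_TCP */
#include <netinet/tcp.h>  /* TCP_NODELAY */
#include <sys/socket.h>

/* Hypothetical helper: call after the last send() of a message.
 * Switches TCP_NODELAY on (which, on Linux, pushes out pending output)
 * and then off again so later small writes can still be coalesced.
 * Returns 0 on success, -1 on error. */
int tcp_flush_hint(int sock)
{
    int on = 1, off = 0;

    if (setsockopt(sock, IPPROTO_TCP, TCP_NODELAY, &on, sizeof on) < 0)
        return -1;
    return setsockopt(sock, IPPROTO_TCP, TCP_NODELAY, &off, sizeof off);
}
```

Usage would be along the lines of calling send() for the final part of a message and then tcp_flush_hint(sock).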

Solution 3

In RFC 1122 the name of the thing that you are looking for is "PUSH". However, there does not seem to be a relevant TCP API implementation that implements "PUSH". Alas, no luck.

Some answers and comments deal with the Nagle algorithm. Most of them seem to assume that the Nagle algorithm delays each and every send. This assumption is not correct. Nagle delays sending only when at least one of the previous packets has not yet been acknowledged (http://www.unixguide.net/network/socketfaq/2.11.shtml).

To put it differently: TCP will send the first packet (of a series of packets) immediately. Only if the connection is slow and your computer does not get a timely acknowledgement will Nagle delay sending subsequent data, until one of the following happens (whichever occurs first):

- a time-out is reached or

- the last unacknowledged packet is acknowledged or

- your send buffer is full or

- you disable Nagle or

- you shut down the sending direction of your connection

A good mitigation is to avoid sending subsequent data as far as possible. This means: if your application calls send() more than once to transmit a single compound request, try to rewrite your application. Assemble the compound request in user space, then call send() once. This also saves on context switches (much more expensive than most user-space operations).
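A sketch of that advice, assuming a request made of a header and a body. Both helper names are hypothetical; the loop in send_all is there because send() may accept fewer bytes than requested.

```c
#include <stdlib.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>

/* Hypothetical helper: send exactly len bytes, retrying on short writes. */
static int send_all(int sock, const char *buf, size_t len)
{
    while (len > 0) {
        ssize_t n = send(sock, buf, len, 0);
        if (n < 0)
            return -1;
        buf += n;
        len -= (size_t)n;
    }
    return 0;
}

/* Hypothetical helper: join header and body in user space, then hand the
 * whole request to the kernel at once instead of in two small send() calls. */
int send_request(int sock, const void *hdr, size_t hdr_len,
                 const void *body, size_t body_len)
{
    size_t total = hdr_len + body_len;
    char *msg = malloc(total ? total : 1);
    int rc;

    if (msg == NULL)
        return -1;
    memcpy(msg, hdr, hdr_len);
    memcpy(msg + hdr_len, body, body_len);
    rc = send_all(sock, msg, total);
    free(msg);
    return rc;
}
```

With this shape, Nagle sees one write per request, so there is no "subsequent" small segment left waiting for an acknowledgement.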

Besides, when the send buffer contains enough data to fill a maximum-size network packet, Nagle does not delay either. This means: if the last chunk that you send is big enough to fill a maximum-size packet, TCP will send your data as soon as possible, no matter what.

To sum it up: Nagle is not the brute-force approach to reducing packet fragmentation some might consider it to be. On the contrary: To me it seems to be a useful, dynamic and effective approach to keep both a good response time and a good ratio between user data and header data. That being said, you should know how to handle it efficiently.

Solution 4

There is no way that I am aware of in the standard TCP/IP socket interface to flush the data "all the way through to the remote end" and ensure it has actually been acknowledged.
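If confirmation from the remote end is what you need, it has to come from the application protocol. The following is only a sketch of one possible convention: the one-byte ACK_BYTE and both function names are invented for illustration and are not part of any standard API.

```c
#include <sys/socket.h>
#include <sys/types.h>

#define ACK_BYTE 0x06  /* hypothetical one-byte acknowledgement */

/* Receiver side: read exactly len bytes, then confirm receipt on the
 * same socket. Returns 0 on success, -1 on error or early close. */
int receive_and_ack(int sock, char *buf, size_t len)
{
    const char ack = ACK_BYTE;
    size_t got = 0;

    while (got < len) {
        ssize_t n = recv(sock, buf + got, len - got, 0);
        if (n <= 0)
            return -1;
        got += (size_t)n;
    }
    return send(sock, &ack, 1, 0) == 1 ? 0 : -1;
}

/* Sender side: after sending a message, block until the receiver's
 * acknowledgement arrives. Returns 0 if the expected byte was read. */
int wait_for_ack(int sock)
{
    char ack;
    ssize_t n = recv(sock, &ack, 1, 0);

    return (n == 1 && ack == ACK_BYTE) ? 0 : -1;
}
```

Once wait_for_ack() returns 0, the sender knows the receiving application has read the message, which is a stronger guarantee than a TCP-level acknowledgement.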

Generally speaking, if your protocol needs "real-time" transfer of data, the best thing to do is to set TCP_NODELAY with setsockopt(). This disables the Nagle algorithm in the protocol stack, so a write() or send() on the socket maps more directly to a send onto the network, instead of being held off while the stack waits for more bytes to become available and uses the TCP-level timers to decide when to send. NOTE: Turning off Nagle does not disable the TCP sliding window or anything else, so it is always safe to do. But if you don't need the "real-time" properties, packet overhead can go up quite a bit.

Beyond that, if the normal TCP socket mechanisms don't fit your application, then generally you need to fall back to using UDP and building your own protocol features on the basic send/receive properties of UDP. This is very common when your protocol has special needs, but don't underestimate the complexity of doing this well and getting it all stable and functionally correct in all but relatively simple applications. As a starting point, a thorough study of TCP's design features will shed light on many of the issues that need to be considered.

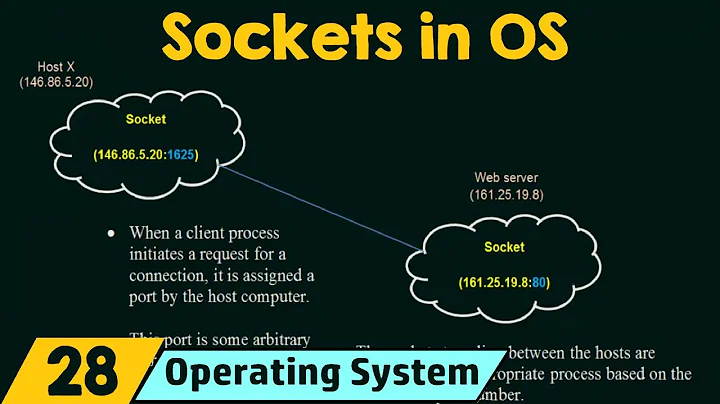

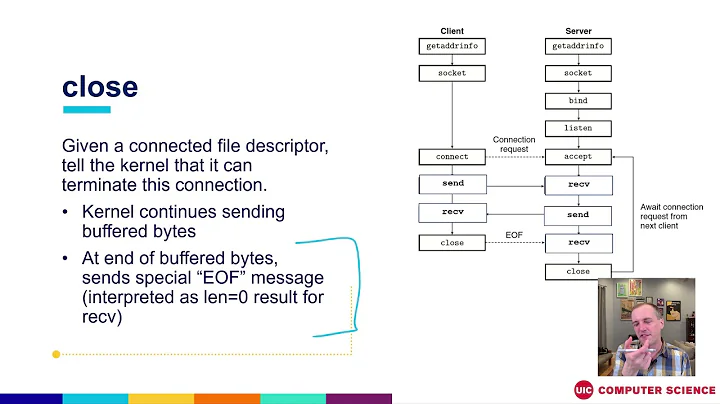

Related videos on Youtube

Gordon Wrigley

I'm currently a Senior Python Contractor. After getting a B.Sc in computer science I kind of stumbled into embedded C programming, to my great surprise I enjoyed it. And fortunately I also seemed to be reasonably good at it. This in turn led me to Python which I must confess is much more enjoyable than embedded C :)

Updated on April 17, 2022

Comments

- Gordon Wrigley, about 1 month: Is there a standard call for flushing the transmit side of a POSIX socket all the way through to the remote end or does this need to be implemented as part of the user level protocol? I looked around the usual headers but couldn't find anything.

- Tall Jeff, about 13 years: I don't see any reason why disabling the Nagle algorithm "is generally a terrible idea". If you know what it does, there are many application protocol situations where disabling Nagle is exactly what you want to do. I suspect you haven't had a situation where you really needed to do that or you don't understand what it really does. In other words, this feature is there for a reason and it can also be disabled for a very good reason.

- Tall Jeff, about 13 years: I don't think you need a second socket. It would seem reasonable that the far end could send something back on the same socket, no?

- MohamedSanaulla, over 12 years: I tried using TCP_NODELAY but I got the following error: ‘TCP_NODELAY’ was not declared in this scope. I used: setsockopt(sockfd, IPPROTO_TCP, TCP_NODELAY, (char *) &flag, sizeof(int));

- chaos, over 12 years: You'll need to #include <linux/tcp.h> or whatever include file provides TCP_NODELAY on your system (try fgrep -r 'define TCP_NODELAY' /usr/include).

- mmmmmmmm, over 11 years: @Tall Jeff: Often enough you only need to disable Nagle because there is no flushing mechanism!

- mmmmmmmm, over 11 years: Nagle could have been a wonderful thing if there were a TCP flush, since it can optimize its buffer size to the current segment size. If you disable Nagle, you have a buffer of your own which is not synchronized to the segment size, and so you will send half-filled packets over the network...

- mmmmmmmm, over 11 years: It does not help you in reducing the send delays that TCP (especially Nagle) produces. But reducing send delays would be one of the most interesting points in having a flush for TCP.

- Roman Starkov, almost 11 years: Nagle = don't send to wire immediately. No Nagle = send to wire immediately. The in-between option stares right in everyone's face: don't send to wire immediately (Nagle) except when I say to Send Now (the elusive TCP Flush).

- R.. GitHub STOP HELPING ICE, over 10 years: fflush has nothing to do with the issue even for Unix domain sockets. It's only relevant if you've wrapped your socket with a FILE via fdopen...

- Michael, over 9 years: I'm a bit surprised that there isn't a way to flush AND that Nagle disabling is discouraged. I got rid of a 40ms latency sending a command to a server and getting the reply (reducing it to < 1ms) by disabling Nagle. Still, I'm calling write() with 1-4 bytes at a time, so I imagine it could be even faster if I could explicitly tell when to flush, rather than waiting for some stupid timeout!

- Jeremy Friesner, about 9 years: What I do is enable Nagle, write as many bytes (using non-blocking I/O) as I can to the socket (i.e. until I run out of bytes to send, or the send() call returns EWOULDBLOCK, whichever comes first), and then disable Nagle again. This seems to work well (i.e. I get low latency AND full-size packets where possible).

- chaos, about 9 years: @JeremyFriesner: That is slick as hell.

- Stefan, almost 9 years: This should work with Linux at least; when you set TCP_NODELAY it flushes the current buffer, which should, according to the source comments, even flush when TCP_CORK is set.

- mpromonet, about 8 years: I confirm that it works. I prefer to set and reset TCP_NODELAY after sending the "important data or end of the current message" because that allows putting this logic inside a socket flush method.

- user207421, almost 8 years: This flushes files, not sockets.

- user207421, almost 8 years: @romkyns Nevertheless the actual send() is asynchronous w.r.t. the sending, regardless of the Nagle state.

- Jean-Bernard Jansen, about 5 years: In the Go language, TCP_NODELAY is the default behavior. Did not see anyone complaining about it.

- Stuart Deal, over 4 years: Which must be why it works so well on UNIX Domain Sockets

- hagello, almost 4 years: @TallJeff "there are many application protocol situations where disabling Nagle is exactly what you want to do": Would you describe some of them please? Is there any common denominator?

- hagello, almost 4 years: You describe a situation where "notimportant" messages are mixed with messages that are to be sent ASAP. For this situation, I guess your solution is a good way to circumvent the delay that might be introduced by Nagle. However, if you do not send multiple messages but one compound message, you should send it with a single send() call! That is because even with Nagle, the first packet is sent immediately. Only subsequent ones are delayed, and they are delayed only until the server acknowledges or replies. So just pack "everything" into the first send().

- Fantastory, about 3 years: That is true, but in this case we do not know how large the unimportant data is, nor whether there were any delays between the individual sends. The problem arises when data is sent in one direction. For example, I work as a proxy: I forward everything I get and rely on the network to send it in an optimal way, so I do not care. But then comes an important packet, which I want to send as soon as possible. That is what I understand as flushing.

- i336_, almost 2 years: Not for me, I'm getting EINVAL. (As verified by strace on a UDS I just wrote a bunch of bytes to.)

- hagello, 15 days: @Roman Nagle does not unconditionally equal "don't send to wire immediately".