Java 8: performance of Streams vs Collections

Solution 1

Stop using

LinkedListfor anything but heavy removing from the middle of the list using iterator.Stop writing benchmarking code by hand, use JMH.

Proper benchmarks:

@OutputTimeUnit(TimeUnit.NANOSECONDS)

@BenchmarkMode(Mode.AverageTime)

@OperationsPerInvocation(StreamVsVanilla.N)

public class StreamVsVanilla {

public static final int N = 10000;

static List<Integer> sourceList = new ArrayList<>();

static {

for (int i = 0; i < N; i++) {

sourceList.add(i);

}

}

@Benchmark

public List<Double> vanilla() {

List<Double> result = new ArrayList<>(sourceList.size() / 2 + 1);

for (Integer i : sourceList) {

if (i % 2 == 0){

result.add(Math.sqrt(i));

}

}

return result;

}

@Benchmark

public List<Double> stream() {

return sourceList.stream()

.filter(i -> i % 2 == 0)

.map(Math::sqrt)

.collect(Collectors.toCollection(

() -> new ArrayList<>(sourceList.size() / 2 + 1)));

}

}

Result:

Benchmark Mode Samples Mean Mean error Units

StreamVsVanilla.stream avgt 10 17.588 0.230 ns/op

StreamVsVanilla.vanilla avgt 10 10.796 0.063 ns/op

Just as I expected stream implementation is fairly slower. JIT is able to inline all lambda stuff but doesn't produce as perfectly concise code as vanilla version.

Generally, Java 8 streams are not magic. They couldn't speedup already well-implemented things (with, probably, plain iterations or Java 5's for-each statements replaced with Iterable.forEach() and Collection.removeIf() calls). Streams are more about coding convenience and safety. Convenience -- speed tradeoff is working here.

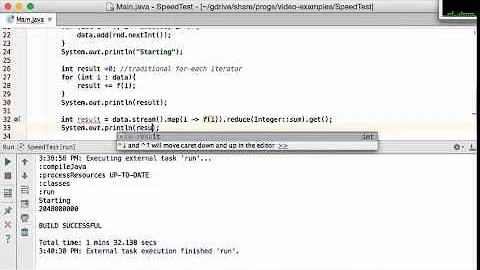

Solution 2

1) You see time less than 1 second using you benchmark. That means there can be strong influence of side effects on your results. So, I increased your task 10 times

int max = 10_000_000;

and ran your benchmark. My results:

Collections: Elapsed time: 8592999350 ns (8.592999 seconds)

Streams: Elapsed time: 2068208058 ns (2.068208 seconds)

Parallel streams: Elapsed time: 7186967071 ns (7.186967 seconds)

without edit (int max = 1_000_000) results were

Collections: Elapsed time: 113373057 ns (0.113373 seconds)

Streams: Elapsed time: 135570440 ns (0.135570 seconds)

Parallel streams: Elapsed time: 104091980 ns (0.104092 seconds)

It's like your results: stream is slower than collection. Conclusion: much time were spent for stream initialization/values transmitting.

2) After increasing task stream became faster (that's OK), but parallel stream remained too slow. What's wrong? Note: you have collect(Collectors.toList()) in you command. Collecting to single collection essentially introduces performance bottleneck and overhead in case of concurrent execution. It is possible to estimate the relative cost of overhead by replacing

collecting to collection -> counting the element count

For streams it can be done by collect(Collectors.counting()). I got results:

Collections: Elapsed time: 41856183 ns (0.041856 seconds)

Streams: Elapsed time: 546590322 ns (0.546590 seconds)

Parallel streams: Elapsed time: 1540051478 ns (1.540051 seconds)

That' s for a big task! (int max = 10000000) Conclusion: collecting items to collection took majority of time. The slowest part is adding to list. BTW, simple ArrayList is used for Collectors.toList().

Solution 3

public static void main(String[] args) {

//Calculating square root of even numbers from 1 to N

int min = 1;

int max = 10000000;

List<Integer> sourceList = new ArrayList<>();

for (int i = min; i < max; i++) {

sourceList.add(i);

}

List<Double> result = new LinkedList<>();

//Collections approach

long t0 = System.nanoTime();

long elapsed = 0;

for (Integer i : sourceList) {

if(i % 2 == 0){

result.add( doSomeCalculate(i));

}

}

elapsed = System.nanoTime() - t0;

System.out.printf("Collections: Elapsed time:\t %d ns \t(%f seconds)%n", elapsed, elapsed / Math.pow(10, 9));

//Stream approach

Stream<Integer> stream = sourceList.stream();

t0 = System.nanoTime();

result = stream.filter(i -> i%2 == 0).map(i -> doSomeCalculate(i))

.collect(Collectors.toList());

elapsed = System.nanoTime() - t0;

System.out.printf("Streams: Elapsed time:\t\t %d ns \t(%f seconds)%n", elapsed, elapsed / Math.pow(10, 9));

//Parallel stream approach

stream = sourceList.stream().parallel();

t0 = System.nanoTime();

result = stream.filter(i -> i%2 == 0).map(i -> doSomeCalculate(i))

.collect(Collectors.toList());

elapsed = System.nanoTime() - t0;

System.out.printf("Parallel streams: Elapsed time:\t %d ns \t(%f seconds)%n", elapsed, elapsed / Math.pow(10, 9));

}

static double doSomeCalculate(int input) {

for(int i=0; i<100000; i++){

Math.sqrt(i+input);

}

return Math.sqrt(input);

}

I change the code a bit, ran on my mac book pro which has 8 cores, I got a reasonable result:

Collections: Elapsed time: 1522036826 ns (1.522037 seconds)

Streams: Elapsed time: 4315833719 ns (4.315834 seconds)

Parallel streams: Elapsed time: 261152901 ns (0.261153 seconds)

Solution 4

For what you are trying to do, I would not use regular java api's anyway. There is a ton of boxing/unboxing going on, so there is a huge performance overhead.

Personally I think that a lot of API designed are crap because they create a lot of object litter.

Try to use a primitive arrays of double/int and try to do it single threaded and see what the performance is.

PS: You might want to have a look at JMH to take care of doing the benchmark. It takes care of some of the typical pitfalls like warming up the JVM.

Solution 5

Interesting results for Java 8 and Java 11. I used the code, provided by leventov with little modification:

@OutputTimeUnit(TimeUnit.NANOSECONDS)

@BenchmarkMode(Mode.AverageTime)

@OperationsPerInvocation(BenchmarkMain.N)

public class BenchmarkMain {

public static final int N = 10000;

static List<Integer> sourceList = new ArrayList<>();

static {

for (int i = 0; i < N; i++) {

sourceList.add(i);

}

}

@Benchmark

public List<Double> vanilla() {

List<Double> result = new ArrayList<>(sourceList.size() / 2 + 1);

for (Integer i : sourceList) {

if (i % 2 == 0){

result.add(Math.sqrt(i));

}

}

return result;

}

@Benchmark

public List<Double> stream() {

return sourceList.stream()

.filter(i -> i % 2 == 0)

.map(Math::sqrt)

.collect(Collectors.toCollection(

() -> new ArrayList<>(sourceList.size() / 2 + 1)));

}

/**

* @param args the command line arguments

*/

public static void main(String[] args) throws IOException {

org.openjdk.jmh.Main.main(args);

}

}

Java 8:

# JMH version: 1.31

# VM version: JDK 1.8.0_262, OpenJDK 64-Bit Server VM, 25.262-b19

# VM invoker: /opt/jdk1.8.0_262/jre/bin/java

# VM options: <none>

# Blackhole mode: full + dont-inline hint

# Warmup: 5 iterations, 10 s each

# Measurement: 5 iterations, 10 s each

# Timeout: 10 min per iteration

# Threads: 1 thread, will synchronize iterations

# Benchmark mode: Average time, time/op

...

Benchmark Mode Cnt Score Error Units

BenchmarkMain.stream avgt 25 10.680 ± 0.744 ns/op

BenchmarkMain.vanilla avgt 25 6.490 ± 0.159 ns/op

Java 11:

# JMH version: 1.31

# VM version: JDK 11.0.2, OpenJDK 64-Bit Server VM, 11.0.2+9

# VM invoker: /opt/jdk-11.0.2/bin/java

# VM options: <none>

# Blackhole mode: full + dont-inline hint

# Warmup: 5 iterations, 10 s each

# Measurement: 5 iterations, 10 s each

# Timeout: 10 min per iteration

# Threads: 1 thread, will synchronize iterations

# Benchmark mode: Average time, time/op

...

Benchmark Mode Cnt Score Error Units

BenchmarkMain.stream avgt 25 5.521 ± 0.057 ns/op

BenchmarkMain.vanilla avgt 25 7.359 ± 0.118 ns/op

Related videos on Youtube

Comments

-

Mister Smith almost 3 years

Mister Smith almost 3 yearsI'm new to Java 8. I still don't know the API in depth, but I've made a small informal benchmark to compare the performance of the new Streams API vs the good old Collections.

The test consists in filtering a list of

Integer, and for each even number, calculate the square root and storing it in a resultListofDouble.Here is the code:

public static void main(String[] args) { //Calculating square root of even numbers from 1 to N int min = 1; int max = 1000000; List<Integer> sourceList = new ArrayList<>(); for (int i = min; i < max; i++) { sourceList.add(i); } List<Double> result = new LinkedList<>(); //Collections approach long t0 = System.nanoTime(); long elapsed = 0; for (Integer i : sourceList) { if(i % 2 == 0){ result.add(Math.sqrt(i)); } } elapsed = System.nanoTime() - t0; System.out.printf("Collections: Elapsed time:\t %d ns \t(%f seconds)%n", elapsed, elapsed / Math.pow(10, 9)); //Stream approach Stream<Integer> stream = sourceList.stream(); t0 = System.nanoTime(); result = stream.filter(i -> i%2 == 0).map(i -> Math.sqrt(i)).collect(Collectors.toList()); elapsed = System.nanoTime() - t0; System.out.printf("Streams: Elapsed time:\t\t %d ns \t(%f seconds)%n", elapsed, elapsed / Math.pow(10, 9)); //Parallel stream approach stream = sourceList.stream().parallel(); t0 = System.nanoTime(); result = stream.filter(i -> i%2 == 0).map(i -> Math.sqrt(i)).collect(Collectors.toList()); elapsed = System.nanoTime() - t0; System.out.printf("Parallel streams: Elapsed time:\t %d ns \t(%f seconds)%n", elapsed, elapsed / Math.pow(10, 9)); }.And here are the results for a dual core machine:

Collections: Elapsed time: 94338247 ns (0,094338 seconds) Streams: Elapsed time: 201112924 ns (0,201113 seconds) Parallel streams: Elapsed time: 357243629 ns (0,357244 seconds)For this particular test, streams are about twice as slow as collections, and parallelism doesn't help (or either I'm using it the wrong way?).

Questions:

- Is this test fair? Have I made any mistake?

- Are streams slower than collections? Has anyone made a good formal benchmark on this?

- Which approach should I strive for?

Updated results.

I ran the test 1k times after JVM warmup (1k iterations) as advised by @pveentjer:

Collections: Average time: 206884437,000000 ns (0,206884 seconds) Streams: Average time: 98366725,000000 ns (0,098367 seconds) Parallel streams: Average time: 167703705,000000 ns (0,167704 seconds)In this case streams are more performant. I wonder what would be observed in an app where the filtering function is only called once or twice during runtime.

-

Mark Rotteveel about 10 yearshave you tried it with an

IntStreaminstead? -

skiwi about 10 yearsCan you please measure properly? If all you are doing is one run, then your benchmarks will of course be off.

skiwi about 10 yearsCan you please measure properly? If all you are doing is one run, then your benchmarks will of course be off. -

skiwi about 10 years@MisterSmith Can we have some transparency on how you warmed up your JVM, also with 1K tests?

skiwi about 10 years@MisterSmith Can we have some transparency on how you warmed up your JVM, also with 1K tests? -

Mister Smith about 10 yearsAnd for those interested in writting correct microbenchmarks, heres the question: stackoverflow.com/questions/504103/…

Mister Smith about 10 yearsAnd for those interested in writting correct microbenchmarks, heres the question: stackoverflow.com/questions/504103/… -

Mister Smith about 10 years@skiwi yes, I've done several tests, warmup iterations in the range of 30 to 1k, showing similar results. How many should I use?

Mister Smith about 10 years@skiwi yes, I've done several tests, warmup iterations in the range of 30 to 1k, showing similar results. How many should I use? -

skiwi about 10 years@MisterSmith 1K should definately suffice.

skiwi about 10 years@MisterSmith 1K should definately suffice. -

assylias about 10 years@MisterSmith One issue is that

assylias about 10 years@MisterSmith One issue is thattoListis not parallel, so your parallel stream is actually sequential but you get the overhead of the parallelisation. -

Stuart Marks about 10 years@assylias Using

Stuart Marks about 10 years@assylias UsingtoListshould run in parallel even if it's collecting to a non-thread-safe list, since the different threads will collect to thread-confined intermediate lists before being merged. -

geg over 7 yearsI'd avoid performing needlessly complex unrelated operations during the benchmark -- the division and square root in this case. It might not result in misleading timings but at the very least it scales the measurements in such a way that the actual relevant performance differences appear small.

-

user904963 about 2 years@skiwi It's a little funny when people talk about warming up the code. What if the code being tested only runs once in production like happens all the time? This kind of slow down for cold code could be significant enough to justify not using the cleaner

Streamcode and instead use an old-fashionedfor.

-

pveentjer about 10 yearsLinkedLists are even worse than ArrayLists because you need to create all the node objects. The mod operator also is dog slow. I believe something like 10/15 cycles + it drains the instruction pipeline. If you want to do a very fast division by 2, just shift the number 1 bit to the right. These are basic tricks, but I'm sure there are mode advanced tricks to speed things up, but these probably are more problem specific.

pveentjer about 10 yearsLinkedLists are even worse than ArrayLists because you need to create all the node objects. The mod operator also is dog slow. I believe something like 10/15 cycles + it drains the instruction pipeline. If you want to do a very fast division by 2, just shift the number 1 bit to the right. These are basic tricks, but I'm sure there are mode advanced tricks to speed things up, but these probably are more problem specific. -

Mister Smith about 10 yearsI'm aware of the boxing. This is just an informal benchmark. The idea is to have the same ammount of boxing/unboxing in both the collections and the streams tests.

Mister Smith about 10 yearsI'm aware of the boxing. This is just an informal benchmark. The idea is to have the same ammount of boxing/unboxing in both the collections and the streams tests. -

pveentjer about 10 yearsFirst I would make sure that it isn't measuring mistake. Try to run the benchmark a few times before you are doing the real benchmark. Then at least you have the JVM warmup out of the way and the code is correctly JITTED. Without this, you probably make the wrong conclusions.

pveentjer about 10 yearsFirst I would make sure that it isn't measuring mistake. Try to run the benchmark a few times before you are doing the real benchmark. Then at least you have the JVM warmup out of the way and the code is correctly JITTED. Without this, you probably make the wrong conclusions. -

Mister Smith about 10 yearsOk, i'll post new results following your advice. I've had a look at JMH but it requires Maven and it takes some time to config. Thanks anyway.

Mister Smith about 10 yearsOk, i'll post new results following your advice. I've had a look at JMH but it requires Maven and it takes some time to config. Thanks anyway. -

skiwi about 10 yearsYou need to microbenchmark this test, meaning it should be first warmed up a lot of times, and then executed a lot of tmes and averaged.

skiwi about 10 yearsYou need to microbenchmark this test, meaning it should be first warmed up a lot of times, and then executed a lot of tmes and averaged. -

ryvantage about 10 yearsI think it's best to avoid thinking of benchmark tests in terms of "For what you are trying to do." i.e., usually these kinds of exercises are simplified enough to be demonstrable, but complex enough that they look like they can/should be simplified.

ryvantage about 10 yearsI think it's best to avoid thinking of benchmark tests in terms of "For what you are trying to do." i.e., usually these kinds of exercises are simplified enough to be demonstrable, but complex enough that they look like they can/should be simplified. -

pveentjer about 10 yearsIf you want to do a comparison, you are doing a benchmark. End of story.

pveentjer about 10 yearsIf you want to do a comparison, you are doing a benchmark. End of story. -

Sergey Fedorov about 10 years@skiwi sure, you're right, especially because there is a large deviations in measurements. I've done only basic investigation and don't pretend results to be precise.

-

pveentjer about 10 yearsThe JIT in server mode, kicks in after 10k executions. And then it takes some time to compile the code and swap it.

pveentjer about 10 yearsThe JIT in server mode, kicks in after 10k executions. And then it takes some time to compile the code and swap it. -

Stuart Marks about 10 yearsI strongly recommend using JMH. If you don't want to use maven, you can download the jar directly (plus a couple dependent jars) and run it using an ant task. It should take ten minutes to set up.

Stuart Marks about 10 yearsI strongly recommend using JMH. If you don't want to use maven, you can download the jar directly (plus a couple dependent jars) and run it using an ant task. It should take ten minutes to set up. -

Mister Smith about 10 yearsThanks for taking the time to bench this. I don't think changing LinkedList for ArrayList would change anything, as both tests should add to it, the times should not be affected. Anyway, could you please explain the results? It's hard to tell what you are measuring here (units say ns/op, but what is considered an op?).

Mister Smith about 10 yearsThanks for taking the time to bench this. I don't think changing LinkedList for ArrayList would change anything, as both tests should add to it, the times should not be affected. Anyway, could you please explain the results? It's hard to tell what you are measuring here (units say ns/op, but what is considered an op?). -

leventov about 10 years@MisterSmith

leventov about 10 years@MisterSmithLinkedListis slower. op is one iteration. -

Mister Smith about 10 yearsThanks. Still 10-20 ns per iteration is too little compared to the times I obtained.

Mister Smith about 10 yearsThanks. Still 10-20 ns per iteration is too little compared to the times I obtained. -

Mister Smith about 10 yearsSorry for pesting you, but how comes your times are 10-20 ns when my times were 10-20 ms. Is your computer that fast? And I'm also puzzled by the fact your times are proportional to my first naive (and allegedly invalid) benchmark. So my second benchmark is worse than the first one?

Mister Smith about 10 yearsSorry for pesting you, but how comes your times are 10-20 ns when my times were 10-20 ms. Is your computer that fast? And I'm also puzzled by the fact your times are proportional to my first naive (and allegedly invalid) benchmark. So my second benchmark is worse than the first one? -

leventov about 10 years@MisterSmith look closely at

leventov about 10 years@MisterSmith look closely at@OperationsPerInvocation(StreamVsVanilla.N). I meausere processing of one list element. -

Brian Goetz almost 10 yearsYour conclusion about performance, while valid, is overblown. There are plenty of cases where the stream code is faster than the iterative code, largely because per-element access costs is cheaper with streams than with plain iterators. And in many cases, the streams version inlines to something that is equivalent to the hand-written version. Of course, the devil is in the details; any given bit of code might behave differently.

Brian Goetz almost 10 yearsYour conclusion about performance, while valid, is overblown. There are plenty of cases where the stream code is faster than the iterative code, largely because per-element access costs is cheaper with streams than with plain iterators. And in many cases, the streams version inlines to something that is equivalent to the hand-written version. Of course, the devil is in the details; any given bit of code might behave differently. -

Mellon over 8 yearsI think your test is fair, you just need a machine has more cpu cores.

-

Alexandr over 8 years@BrianGoetz, could you please specify use cases, when streams are faster?

-

pdem almost 8 yearsIn the last version of FMH :use

@Benchmarkinstead of@GenerateMicroBenchmark -

Lii almost 8 yearsAbout this sentence: "you have

Lii almost 8 yearsAbout this sentence: "you havecollect(Collectors.toList())in you command, i.e. there may be a situation when you need to address single Collection by many threads." I'm almost sure thattoListcollects to several different list instances in parallel. Only as the last step in the collection the elements are transferred to one list and then returned. So there should be not synchronisation overhead. This is why collectors have both a supplier, an accumerator and a combiner function. (It could be slow for other reasons, of course.) -

Sergey Fedorov almost 8 years@Lii I think the same way about

collectimplementation here. But in the end several lists should be merged into a single one, and it looks like merging is the most heavy operation in given example. -

Lii almost 8 yearsMaybe the merging is the most expensive part. But there is no synchronisation going on, is there? Because before merging, one thread should have handed over its list to another thread which merges the two.

Lii almost 8 yearsMaybe the merging is the most expensive part. But there is no synchronisation going on, is there? Because before merging, one thread should have handed over its list to another thread which merges the two. -

Sergey Fedorov almost 8 years@Lii Well, term 'synchronisation' is possibly too vague if it can cause misunderstanding. Can you offer a better wording?

-

Lii almost 8 yearsYeah, the term "synchronisation" is in Java so strongly connected to synchronized-statements and monitors. Alternative phrasing could maybe be something like this: "Concurrency overhead, starting and stopping new threads, transferring elements from different lists for each thread to a single result list".

Lii almost 8 yearsYeah, the term "synchronisation" is in Java so strongly connected to synchronized-statements and monitors. Alternative phrasing could maybe be something like this: "Concurrency overhead, starting and stopping new threads, transferring elements from different lists for each thread to a single result list". -

Lii almost 8 yearsBut maybe that's not entirely accurate, because this threads are in a pool and don't have to be started and stopped. But in some way the jobs must be handed to the threads and they must signal in some way when they're done. Maybe that also involves synchronisation, so maybe there is nothing wrong with the original phrasing!

Lii almost 8 yearsBut maybe that's not entirely accurate, because this threads are in a pool and don't have to be started and stopped. But in some way the jobs must be handed to the threads and they must signal in some way when they're done. Maybe that also involves synchronisation, so maybe there is nothing wrong with the original phrasing! -

Sergey Fedorov almost 8 years@Lii thanks, with referring to overhead answer looks more precise. And yes, the whole thread pool start shouldn't take so much time ;-)

-

kiltek over 5 years@BrianGoetz, Could you specify use cases, when Streams are faster?

-

deFreitas almost 5 yearshere a example of how generate benchmarks with gradle

-

Hari Bharathi over 3 years@Alexandr as you asked, this below lambda single line code is much faster than traditional loops. I personally did experiment using JMH and was amazed over the results.

Hari Bharathi over 3 years@Alexandr as you asked, this below lambda single line code is much faster than traditional loops. I personally did experiment using JMH and was amazed over the results.DATA_FOR_TESTING.stream().peek(s -> bh.consume(s));Check out this link for how the above code was written in another iteration methods mkyong.com/java/java-jmh-benchmark-tutorial -

user904963 about 2 years@HariBharathi Are you aware of what

peekdoes?