Linux: how to queue some jobs in the background?

Solution 1

Use screen.

- ssh to the server

- run screen

- launch your programs: job1;job2;job3 (separated with semicolons, they will run sequentially)

- detach from the screen: CTRL-A, D

- log out from the server

(later)

- ssh to the server

- run screen -r

- and you are back in your shell with your job queue running...

Solution 2

Alternatively, if you want a bit more control of your queue, e.g. the ability to list and modify entries, check out Task Spooler. It is available in the Ubuntu 12.10 Universe repositories as the package task-spooler.

Solution 3

I agree that screen is a very handy tool. There is also a command-line tool, enqueue, that lets you add jobs as needed, i.e. you can add another job even while an earlier one is already running.

Here's a sample from the enqueue README:

$ enqueue add mv /disk1/file1 /disk2/file1

$ enqueue add mv /disk1/file2 /disk2/file2

$ enqueue add beep

$ enqueue list

Solution 4

A little more information:

If you separate your jobs with && it is equivalent to the &&/and operator in a programming language, such as in an if statement. If job 1 returns with an error, then job 2 won't run, etc. This is called "short circuiting." If you separate the jobs with ; instead, they will all run regardless of the return code.
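To make the difference concrete, here is a minimal sketch. The functions step_ok and step_fail are hypothetical stand-ins for real jobs (one succeeds, one fails); they are not part of any tool mentioned here.

```shell
# Hypothetical stand-ins for real jobs: step_ok succeeds, step_fail fails.
step_ok()   { echo "ran $1"; }
step_fail() { echo "failed $1"; return 1; }

# With ';' every job runs, whatever the previous exit status was.
semicolon_out=$(step_fail job1; step_ok job2; step_ok job3)

# With '&&' the chain stops at the first job that fails.
# '|| true' only keeps this demo going if the calling shell uses 'set -e'.
and_out=$(step_fail job1 && step_ok job2 && step_ok job3) || true

printf '%s\n' "--- with ;" "$semicolon_out" "--- with &&" "$and_out"
```

In the ';' case all three jobs report in; in the '&&' case only the failed job1 does, because job2 and job3 are never started.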

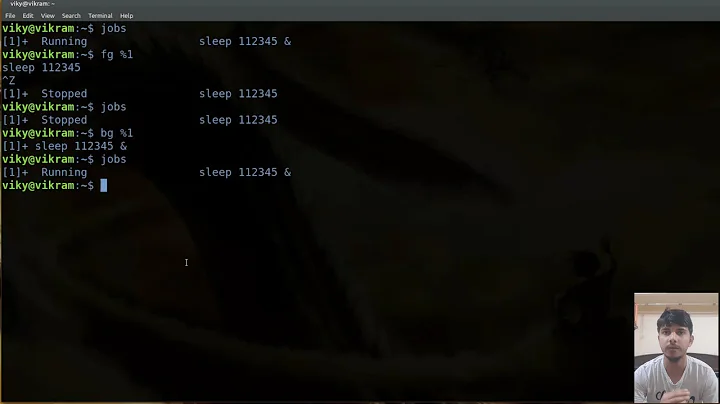

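If you end a && chain with & it will background the entire chain. With ; as the separator, a trailing & only backgrounds the last command, so group the jobs with braces or parentheses first. If you forget the &, press Ctrl+Z to suspend the running command and get your prompt back, then run "bg" to resume it in the background.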
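As a rough sketch of backgrounding a whole queue, the snippet below groups three placeholder jobs in braces so that the single & applies to all of them. The echo/sleep commands and the temporary log file are assumptions for illustration; substitute your own jobs.

```shell
log=$(mktemp)   # hypothetical log file, just for this demo

# The braces make the whole sequence one unit, so '&' backgrounds all of it
# and the jobs still run one after another inside the group.
{ echo job1 >> "$log"; sleep 1; echo job2 >> "$log"; echo job3 >> "$log"; } &
queue_pid=$!

echo "queue running in the background as PID $queue_pid"

# Demo only: block until the queue is done so we can show the result.
wait "$queue_pid"
cat "$log"
```

In real use you would skip the wait and simply keep working at the prompt while the queue runs.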

If you close your ssh session, the commands will likely be ended too, because they are attached to your shell (at least in most bash setups). If you want them to continue after you've logged out, use the command "disown".
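One common alternative for surviving a logout, besides disown, is to hand the whole queue to nohup as a single command. nohup only protects the one command it is given, which is why nohup job1 && job2 && job3 & does not cover job2 and job3. The sketch below wraps the chain in sh -c; the echo/sleep jobs and the log path are placeholders, not anything from the original posts.

```shell
log=$(mktemp)   # hypothetical log file; pick a real path for real jobs

# Wrap the chain in one 'sh -c' so nohup covers every job in it.
# Redirecting stdin, stdout and stderr keeps the queue off the terminal.
nohup sh -c 'echo job1 && sleep 1 && echo job2 && echo job3' \
    > "$log" 2>&1 < /dev/null &
queue_pid=$!

# Demo only: wait for the queue so we can print its output.
# In practice you would log out here and read the log later.
wait "$queue_pid"
cat "$log"
```

Whether this or screen is the better fit depends on whether you want to reattach and watch the jobs; this variant only leaves a log file behind.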

A Question Asker

Updated on May 07, 2020

Comments

-

A Question Asker almost 4 years

Here is the functionality I am looking for (and haven't quite found):

I have x processes that I want to run sequentially. Some of them could be quite time consuming.

I want these processes to run in the background of my shell.

I know about nohup, but it doesn't seem to work perfectly...assuming job1 is a time consuming job, if I ctrl+c out of the blank line that I get after doing nohup job1 && job2 && job3 &, then job2 and job3 won't run, and job1 might or might not run depending on how long I let nohup run.

Is there a way to get the functionality I want? I am ssh'ed into a linux server. For bonus points, I'd love it if the jobs that I queued up would continue running even if I closed my connection.

Thanks for your help.

EDIT: A small addendum to the question: if I have a shell script with three exec statements

exec BIGTHING

exec smallthing

exec smallthing

will it definitely be sequential? And is there a way to wrap those all into one exec line to get the equivalent functionality?

i.e. exec BIGTHING & smallthing & smallthing, or &&, or somesuch

-

sizzzzlerz over 13 years

Well, that's my "I didn't know you could do that!" for the day!

-

novelistparty over 4 years

This is incorrect. The job will be cancelled when you close the ssh session.