Lucene Index problems with "-" character

Solution 1

StandardAnalyzer will treat the hyphen as whitespace, I believe. So it turns your query "gsx-*" into "gsx*", and "v-*" into nothing, because it also eliminates single-letter tokens. What you see as the field contents in the search result is the stored value of the field, which is completely independent of the terms that were indexed for that field.

So what you want is for "v-strom" as a whole to be an indexed term. StandardAnalyzer is not suited to this kind of text. Maybe have a go with the WhitespaceAnalyzer or SimpleAnalyzer (note that SimpleAnalyzer divides text at non-letters, so it will also break at the hyphen). If that still doesn't cut it, you also have the option of throwing together your own analyzer, or starting from one of those two and composing it with further TokenFilters. A very good explanation is given in the Lucene Analysis package Javadoc.
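To see why a prefix like "v-" can only work when the hyphen survives analysis, here is a minimal sketch in plain Java. It does not use Lucene: `splitOnWhitespace` only approximates whitespace tokenization plus lowercasing, and `splitOnHyphen` is a hypothetical stand-in for any tokenizer that breaks at '-'. The class and method names are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Toy model (plain Java, no Lucene): two ways of turning field text into index
// terms, and whether a user's prefix such as "v-str" can match any of them.
final class HyphenPrefixDemo {

    // Roughly whitespace tokenization plus lowercasing: "V-STROM" stays one term.
    static List<String> splitOnWhitespace(String text) {
        return tokens(text, "\\s+");
    }

    // Hypothetical stand-in for a tokenizer that treats '-' as a delimiter:
    // anything that is not a letter or digit ends the current term.
    static List<String> splitOnHyphen(String text) {
        return tokens(text, "[^\\p{L}\\p{N}]+");
    }

    private static List<String> tokens(String text, String delimiterRegex) {
        List<String> terms = new ArrayList<>();
        for (String t : text.toLowerCase(Locale.ROOT).split(delimiterRegex)) {
            if (!t.isEmpty()) {
                terms.add(t);
            }
        }
        return terms;
    }

    // A prefix query matches if at least one indexed term starts with the prefix.
    static boolean prefixMatches(List<String> indexedTerms, String prefix) {
        String p = prefix.toLowerCase(Locale.ROOT);
        return indexedTerms.stream().anyMatch(term -> term.startsWith(p));
    }

    public static void main(String[] args) {
        String field = "SUZUKI DL 1000 V-STROM";
        System.out.println(splitOnWhitespace(field)); // [suzuki, dl, 1000, v-strom]
        System.out.println(splitOnHyphen(field));     // [suzuki, dl, 1000, v, strom]
        System.out.println(prefixMatches(splitOnWhitespace(field), "v-str")); // true
        System.out.println(prefixMatches(splitOnHyphen(field), "v-str"));     // false
    }
}
```

The point of the toy: if no indexed term contains '-', no prefix containing '-' can ever match, whatever happens on the query side.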

BTW there's no need to enter all the variants in the index, like V-strom, V-Strom, etc. The idea is for the same analyzer to normalize all these variants to the same string both in the index and while parsing the query.
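The "one analyzer on both sides" idea can be sketched as a plain-Java toy, assuming a hypothetical analyzer that splits on whitespace and lowercases. It is not Lucene's API; `SameAnalyzerDemo` and `analyze` are illustrative names.

```java
import java.util.Locale;
import java.util.Set;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Toy model: one normalization step is applied to indexed text and to query text,
// so spelling variants collapse to the same term and need not be indexed separately.
final class SameAnalyzerDemo {

    // Hypothetical analyzer: whitespace split + lowercase.
    static Set<String> analyze(String text) {
        return Stream.of(text.trim().split("\\s+"))
                .filter(t -> !t.isEmpty())
                .map(t -> t.toLowerCase(Locale.ROOT))
                .collect(Collectors.toSet());
    }

    public static void main(String[] args) {
        Set<String> indexed = analyze("SUZUKI V-STROM"); // only one spelling is indexed
        for (String query : new String[] {"V-strom", "V-Strom", "v-strom"}) {
            // each variant analyzes to "v-strom", which is already an indexed term
            System.out.println(query + " -> " + indexed.containsAll(analyze(query)));
        }
    }
}
```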

Solution 2

ClassicAnalyzer handles '-' as a useful, non-delimiter character. As I understand it, ClassicAnalyzer handles '-' like the pre-3.1 StandardAnalyzer: it uses ClassicTokenizer, which treats numbers with an embedded '-' as a product code, so the whole thing is tokenized as one term.

When I was at the Regenstrief Institute, I noticed this after upgrading Luke: LOINC standard medical terms (LOINC was initiated by R.I.) are identified by a number followed by a '-' and a check digit, like '1-8' or '2857-1'. My searches for LOINC codes like '45963-6' failed using StandardAnalyzer in Luke 3.5.0 but succeeded with ClassicAnalyzer (because we had built the index with Lucene.NET 2.9.2).
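A rough way to picture the product-code rule is the plain-Java toy below. It is an approximation written for illustration, not ClassicTokenizer itself (the real grammar also handles e-mail addresses, host names, acronyms and more, and ClassicAnalyzer additionally removes stop words). The toy lowercases, splits at punctuation other than '-', keeps a hyphenated chunk whole only when it contains a digit, and otherwise splits it at the hyphen.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Toy approximation of the "product code" rule described above; not the real
// ClassicTokenizer grammar.
final class ProductCodeDemo {

    static List<String> tokenize(String text) {
        List<String> out = new ArrayList<>();
        // Split on whitespace and on punctuation other than '-'.
        for (String chunk : text.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{N}-]+")) {
            chunk = chunk.replaceAll("^-+|-+$", ""); // only embedded hyphens count
            if (chunk.isEmpty()) {
                continue;
            }
            boolean hasDigit = chunk.chars().anyMatch(Character::isDigit);
            if (hasDigit && chunk.contains("-")) {
                out.add(chunk);                        // e.g. "2857-1" stays one term
            } else {
                for (String part : chunk.split("-")) { // e.g. "chat-room" -> chat, room
                    if (!part.isEmpty()) {
                        out.add(part);
                    }
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(tokenize("LOINC 45963-6 2857-1 chat-room"));
        // [loinc, 45963-6, 2857-1, chat, room]
    }
}
```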

Solution 3

(Based on Lucene 4.7) StandardTokenizer splits hyphenated words into two. For example, "chat-room" is split into "chat" and "room", and the two words are indexed separately instead of as a single whole word. It is quite common for separate words to be connected with a hyphen: “sport-mad,” “camera-ready,” “quick-thinking,” and so on. A significant number are hyphenated names, such as “Emma-Claire.” When doing a whole-word search or query, users expect to find the whole word, hyphens included. There are also cases where the parts really are separate words, which is why Lucene keeps the hyphen out of the default definition.

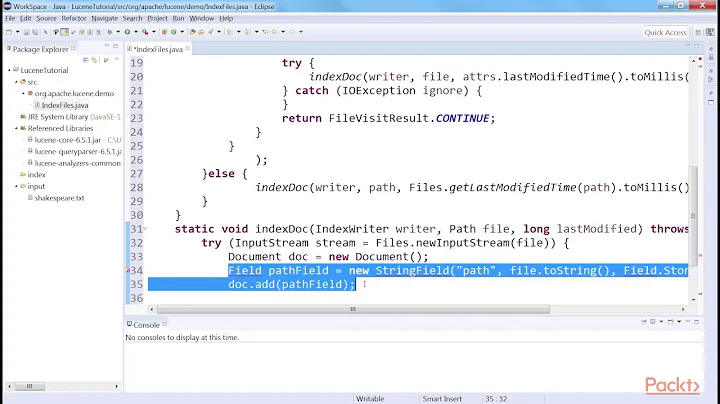

To support hyphens in StandardAnalyzer, you have to make changes in StandardTokenizerImpl.java, which is a class generated by JFlex.

Refer to this link for a complete guide.

Add the following line to SUPPLEMENTARY.jflex-macro, which is included by the StandardTokenizerImpl.jflex file:

MidLetterSupp = ( [\u002D] )

After making the change, provide the StandardTokenizerImpl.jflex file as input to the JFlex engine and click Generate. The output will be StandardTokenizerImpl.java.

Then rebuild the index using that class.
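The effect this change is after can be pictured with a toy tokenizer in plain Java. This is not the JFlex-generated StandardTokenizerImpl and it ignores the rest of the UAX#29 word-break rules; it only shows the intended MidLetter behavior: a hyphen between two letters stays inside the term, while a free-standing hyphen still separates words. The class name is illustrative.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Toy model of adding '-' to MidLetter: a hyphen between two letters no longer
// ends a word, while any other hyphen still acts as a delimiter.
final class MidLetterHyphenDemo {

    static List<String> tokenize(String text) {
        String s = text.toLowerCase(Locale.ROOT);
        List<String> out = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            boolean hyphenBetweenLetters = c == '-'
                    && i > 0 && i + 1 < s.length()
                    && Character.isLetter(s.charAt(i - 1))
                    && Character.isLetter(s.charAt(i + 1));
            if (Character.isLetterOrDigit(c) || hyphenBetweenLetters) {
                current.append(c);
            } else if (current.length() > 0) { // word boundary: emit the term
                out.add(current.toString());
                current.setLength(0);
            }
        }
        if (current.length() > 0) {
            out.add(current.toString());
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(tokenize("Emma-Claire found a camera-ready V-Strom - really"));
        // [emma-claire, found, a, camera-ready, v-strom, really]
    }
}
```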

Solution 4

The ClassicAnalyzer is recommended for indexing text containing product codes like 'GSX-R1000'. It will recognize this as a single term and does not split up its parts. But the text 'Europe/Berlin', for example, will be split up by the ClassicAnalyzer into the words 'Europe' and 'Berlin'. This means that if you have a text indexed by the ClassicAnalyzer containing the phrase

Europe/Berlin GSX-R1000

you can search for "europe", "berlin" or "GSX-R1000".

But be careful which analyzer you use for the search. I think the best choice to search a Lucene index is the KeywordAnalyzer. With the KeywordAnalyzer you can also search for specific fields in a document and you can build complex queries like:

(processid:4711) (berlin)

This query searches for documents containing 'berlin' and for documents whose field 'processid' contains the number 4711.

But if you search the index for the phrase "europe/berlin", you will get no result! This is because the KeywordAnalyzer does not change your search phrase, while the phrase 'Europe/Berlin' was split up into two separate words by the ClassicAnalyzer at indexing time. This means you have to search for 'europe' and 'berlin' separately.
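The mismatch boils down to a set lookup. In the plain-Java toy below, `INDEX_TERMS` is an assumption standing in for what ClassicAnalyzer stored for 'Europe/Berlin' (lowercased words), and the query side mimics KeywordAnalyzer by using the input unchanged as a single term. Since KeywordAnalyzer does not lowercase either, a capitalized query misses too.

```java
import java.util.Set;

// Toy model: the index holds the terms ClassicAnalyzer produced for "Europe/Berlin",
// while a KeywordAnalyzer-style query keeps the whole input as one unchanged term.
final class KeywordMismatchDemo {

    static final Set<String> INDEX_TERMS = Set.of("europe", "berlin");

    // KeywordAnalyzer-like: no splitting and no lowercasing.
    static boolean keywordQueryMatches(String input) {
        return INDEX_TERMS.contains(input);
    }

    public static void main(String[] args) {
        System.out.println(keywordQueryMatches("europe/berlin")); // false: no such term
        System.out.println(keywordQueryMatches("berlin"));        // true
        System.out.println(keywordQueryMatches("Berlin"));        // false: not lowercased
    }
}
```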

To solve this conflict, you can translate a search term entered by the user into a search query that fits your needs, using the following code:

QueryParser parser = new QueryParser("content", new ClassicAnalyzer());

Query result = parser.parse(searchTerm);

searchTerm = result.toString("content");

This code will translate the search phrase

Europe/Berlin

into

europe berlin

which will result in the expected document set.

Note: This will also work for more complex situations. The search term

Europe/Berlin GSX-R1000

will be translated into:

(europe berlin) gsx-r1000

which will correctly search for all phrases in combination using the KeywordAnalyzer.
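To make the translation step concrete without a Lucene dependency, here is a plain-Java toy that reproduces the shape of the two outputs above. It is only an assumption about what `parse()` followed by `toString()` does for these particular inputs (the real QueryParser also handles operators, quotes, escaping and more), and because ClassicAnalyzer lowercases its tokens, the toy prints the product code as gsx-r1000. Class and method names are illustrative.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Toy model of parse-then-toString: each whitespace-separated chunk of the user's
// input goes through a ClassicAnalyzer-like rule; when there are several chunks,
// a chunk that yields several terms is wrapped in parentheses.
final class QueryTranslationDemo {

    // Simplified rule: lowercase, split at punctuation other than '-', keep
    // chunks that contain a digit whole (product codes), split the rest at '-'.
    static List<String> analyze(String chunk) {
        List<String> terms = new ArrayList<>();
        for (String part : chunk.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{N}-]+")) {
            if (part.isEmpty()) {
                continue;
            }
            if (part.chars().anyMatch(Character::isDigit)) {
                terms.add(part);
            } else {
                for (String p : part.split("-")) {
                    if (!p.isEmpty()) {
                        terms.add(p);
                    }
                }
            }
        }
        return terms;
    }

    static String translate(String userInput) {
        List<List<String>> perChunk = new ArrayList<>();
        for (String chunk : userInput.trim().split("\\s+")) {
            List<String> terms = analyze(chunk);
            if (!terms.isEmpty()) {
                perChunk.add(terms);
            }
        }
        if (perChunk.size() == 1) {
            return String.join(" ", perChunk.get(0)); // single chunk: no parentheses
        }
        List<String> clauses = new ArrayList<>();
        for (List<String> terms : perChunk) {
            clauses.add(terms.size() == 1 ? terms.get(0) : "(" + String.join(" ", terms) + ")");
        }
        return String.join(" ", clauses);
    }

    public static void main(String[] args) {
        System.out.println(translate("Europe/Berlin"));           // europe berlin
        System.out.println(translate("Europe/Berlin GSX-R1000")); // (europe berlin) gsx-r1000
    }
}
```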

Zteve

Updated on June 04, 2022

Comments

-

Zteve almost 2 years

I'm having trouble with a Lucene index that has indexed words containing "-" characters.

It works for some words that contain "-" but not for all, and I can't find the reason why.

The field I'm searching in is analyzed and contains versions of the word with and without the "-" character.

I'm using the analyzer: org.apache.lucene.analysis.standard.StandardAnalyzer

Here is an example:

If I search for "gsx-*" I get a result; the indexed field contains "SUZUKI GSX-R 1000 GSX-R1000 GSXR".

But if I search for "v-*" I get no result. The indexed field of the expected result contains: "SUZUKI DL 1000 V-STROM DL1000V-STROMVSTROM V STROM"

If I search for "v-strom" without "*" it works, but if I search for just "v-str", for example, I don't get the result. (There should be a result, because this is for a live search in a web shop.)

So what's the difference between the two expected results? Why does it work for "gsx-" but not for "v-"?

-

Marko Bonaci about 12 years

Have you actually checked the contents of the indexed field, or do you just expect it to be like that? If not, use the greatest Lucene index tool ever, Luke: code.google.com/p/luke

-

Zteve about 12 years

Thanks for your help. I know that the displayed value is independent of the searched/indexed field, but for testing I displayed the field I'm searching in. I also use Luke for testing and analyzing the problem. So what I need exactly is that the customer can type "v-" and get all the results that begin with "v-". What do I need to change to make it work? I only need the right syntax so that I can change the customer's query.

-

Marko Bonaci about 12 years

I'm a bit rusty with Solr, but I'd start by adding an additional field to your schema (e.g. product_name), which you should only lowercase (field type = lowercase). Add this field (OR) to your search request URLs as an additional parameter with a higher weight.

-

Zteve about 12 years

What type of syntax/value should be in this field product_name? The same content as in the actual indexed field? It's also possible to change the value of the indexed field; I could change it, for example, to "V-STROM v-strom vstrom v strom V STROM". Could a change of the value offer the solution? The only fixed requirement is that the customer should be able to find the result when he types "v-str" or "v-" etc. in the search field.

-

Marko Bonaci about 12 years

@Zteve The ADDITIONAL field should contain the product name (that you said you wanted to prefix-search by) and be of type "lowercase", which would take care of all your additional questions by itself.
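What such a "lowercase" field does for prefix search can be sketched in plain Java. The assumption here is that the field type keeps the whole value as a single term and only lowercases it (keyword tokenization plus a lowercase filter); the product names and class name are made up.

```java
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

// Toy model of an extra field whose whole value is indexed as one lowercased term
// (keyword tokenization + lowercasing), queried with a prefix.
final class LowercaseFieldDemo {

    // Hypothetical product names, one field value per product.
    static final List<String> PRODUCT_NAMES = List.of("V-Strom 1000", "GSX-R 1000");

    static String toTerm(String productName) {
        return productName.toLowerCase(Locale.ROOT); // no splitting at all
    }

    static List<String> prefixSearch(String userInput) {
        String prefix = userInput.toLowerCase(Locale.ROOT);
        return PRODUCT_NAMES.stream()
                .filter(name -> toTerm(name).startsWith(prefix))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(prefixSearch("v-str")); // [V-Strom 1000]
        System.out.println(prefixSearch("V-"));    // [V-Strom 1000]
        System.out.println(prefixSearch("gsx-"));  // [GSX-R 1000]
    }
}
```

Because the whole value is one term, the prefix only matches at the start of the product name, which fits the suggestion to query this field in addition to (OR) the analyzed one.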

-

rainer198 almost 12 years

I have a similar problem (indexing with StandardAnalyzer and searching for hyphenated words like v-strom). What I don't understand is this: I read that query input is processed in the same way as indexed documents. Why does this not imply that you should find "v-strom" in the index even if I indexed with StandardAnalyzer?

-

Marko Topolnik almost 12 years

@rainer198 If you indexed with StandardAnalyzer, there is no term "v-strom" in the index, only "strom". Same for the query: after parsing, you are searching only for "strom".

-

rainer198 almost 12 years

@Marko Yes, and that's why you should have a match: indexing: "v-strom" -> "strom". Querying: "v-strom" -> "strom" -> perfectly matches the index term "strom".

-

Marko Topolnik almost 12 years

Well yes, if it is acceptable to you to find anything that analyzes to "strom", then that's it. But if by querying "x-strom" you don't want results with "v-strom", then there's a problem. The original question doesn't even involve the "strom" part.
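The trade-off in this exchange can be shown with a small plain-Java toy, assuming OR semantics between query terms. Whether or not the single letters survive analysis, splitting at '-' leaves "strom" as a shared term; keeping the hyphen inside the term removes the overlap. The class and method names are illustrative.

```java
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

// Toy model: with '-' as a delimiter, "x-strom" and "v-strom" share the term "strom",
// so an OR-style query built from "x-strom" also matches a "v-strom" document.
final class SharedTermDemo {

    static Set<String> analyze(String text, boolean hyphenIsDelimiter) {
        String delimiters = hyphenIsDelimiter ? "[\\s-]+" : "\\s+";
        Set<String> terms = new HashSet<>();
        for (String t : text.toLowerCase(Locale.ROOT).split(delimiters)) {
            if (!t.isEmpty()) {
                terms.add(t);
            }
        }
        return terms;
    }

    // OR semantics: one shared term is enough for a match.
    static boolean orQueryMatches(String query, String document, boolean hyphenIsDelimiter) {
        Set<String> shared = analyze(query, hyphenIsDelimiter);
        shared.retainAll(analyze(document, hyphenIsDelimiter));
        return !shared.isEmpty();
    }

    public static void main(String[] args) {
        System.out.println(orQueryMatches("x-strom", "V-STROM", true));  // true (shares "strom")
        System.out.println(orQueryMatches("x-strom", "V-STROM", false)); // false
    }
}
```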

-

yotam.shacham over 11 years

Confirmed that as of Lucene 4.0.0 WhitespaceAnalyzer will not remove the hyphen, but standard and classic will.

-

danilopez.dev over 5 years

Thank you! Changing from StandardAnalyzer to WhitespaceAnalyzer saved me lots of hours!