Multiple sessions and graphs in TensorFlow (in the same process)

The issue is almost certainly caused by concurrent execution of different session objects. I moved the first model's session from the background thread to the main thread, repeated the controlled experiment several times (running for over 24 hours and reaching convergence), and never observed NaN. With concurrent execution, by contrast, the model diverges within a few minutes.

I've restructured my code to use a common session object for all models.
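As a rough illustration of the sequential arrangement described above, the sketch below runs the first model's inference and the second model's training step back to back in one thread, so the two calls can never overlap. `train_sequentially`, `infer`, and `train_step` are hypothetical names; the two callables stand in for whatever wraps `sess.run(inference_op)` and `sess.run(train_op)` and are not taken from the original code.

```python
def train_sequentially(inputs, infer, train_step):
    """Run inference and training back to back in the calling thread.

    infer and train_step are hypothetical callables standing in for
    sess.run(inference_op, ...) and sess.run(train_op, ...).
    """
    losses = []
    for x in inputs:
        features = infer(x)                  # the first model finishes first
        losses.append(train_step(features))  # only then does the second model run
    return losses


# Toy stand-ins so the sketch runs without TensorFlow.
toy_losses = train_sequentially([1, 2, 3], infer=lambda x: x * 2, train_step=lambda f: f + 1)
```

The trade-off is that inference no longer overlaps with training, so each step waits for both models in turn.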

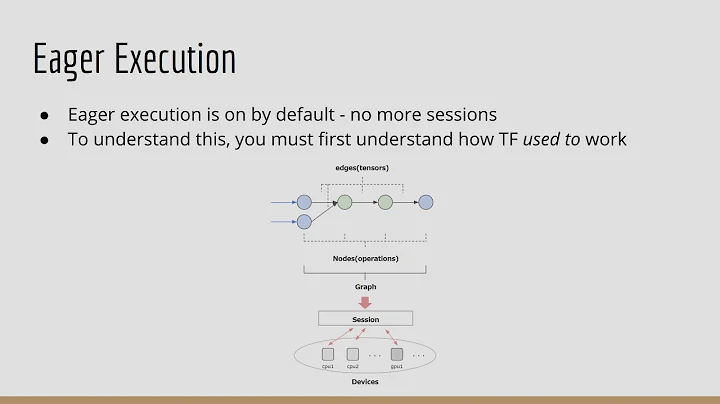


Vikesh

Updated on June 04, 2022

Comments

-

Vikesh almost 2 years

I'm training a model where the input vector is the output of another model. This involves restoring the first model from a checkpoint file while initializing the second model from scratch (using `tf.initialize_variables()`) in the same process.

There is a substantial amount of code and abstraction, so I'm just pasting the relevant sections here.

The following is the restoring code:

    # Collect only this model's variables (names are prefixed with the model name).
    self.variables = [var for var in all_vars if var.name.startswith(self.name)]
    self.saver = tf.train.Saver(self.variables, max_to_keep=3)
    self.save_path = tf.train.latest_checkpoint(os.path.dirname(self.checkpoint_path))
    if should_restore:
        self.saver.restore(self.sess, self.save_path)
    else:
        self.sess.run(tf.initialize_variables(self.variables))

Each model is scoped within its own graph and session, like this:

    self.graph = tf.Graph()
    self.sess = tf.Session(graph=self.graph)
    with self.sess.graph.as_default():
        # Create variables and ops.

All the variables within each model are created within the `variable_scope` context manager.

The feeding works as follows:

- A background thread calls `sess.run(inference_op)` on `input = scipy.misc.imread(X)` and puts the result in a blocking thread-safe queue.
- The main training loop reads from the queue and calls `sess.run(train_op)` on the second model.
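The feeding arrangement in the two steps above can be sketched with the standard library alone. This mirrors the structure of the setup in the question (a producer thread and a consuming training loop), not the eventual fix. `run_pipeline`, `infer`, and `train_step` are hypothetical names; the callables stand in for the two `sess.run` calls, and the queue size is an arbitrary assumption.

```python
import queue
import threading


def run_pipeline(inputs, infer, train_step, maxsize=4):
    """Feed infer(x) results to train_step through a bounded, thread-safe queue.

    infer and train_step are hypothetical stand-ins for
    sess.run(inference_op, ...) and sess.run(train_op, ...).
    """
    q = queue.Queue(maxsize=maxsize)  # put() blocks while the queue is full
    done = object()                   # sentinel marking the end of the input

    def producer():
        try:
            for x in inputs:
                q.put(infer(x))
        finally:
            q.put(done)               # always unblock the consumer

    thread = threading.Thread(target=producer, daemon=True)
    thread.start()

    losses = []
    while True:
        item = q.get()                # blocks until the producer delivers
        if item is done:
            break
        losses.append(train_step(item))
    thread.join()
    return losses
```

With real sessions plugged in, `infer` and `train_step` would execute concurrently in different threads, which is exactly the situation this thread identifies as the source of the divergence.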

PROBLEM:

I am observing that the loss values, even in the very first iteration of training (second model), keep changing drastically across runs (and become NaN within a few iterations). I confirmed that the output of the first model is exactly the same every time. Commenting out the `sess.run` of the first model and replacing it with identical input from a pickled file does not show this behaviour.

This is the `train_op`:

    loss_op = tf.nn.sparse_softmax_cross_entropy(network.feedforward())
    # Apply gradients.
    with tf.control_dependencies([loss_op]):
        opt = tf.train.GradientDescentOptimizer(lr)
        grads = opt.compute_gradients(loss_op)
        apply_gradient_op = opt.apply_gradients(grads)

    return apply_gradient_op

I know this is vague, but I'm happy to provide more details. Any help is appreciated!


-

Avijit Dasgupta over 6 years

I am facing exactly the same problem. Can you elaborate on your solution, please?

-

Vikesh over 6 years

Do not run `sess.run` concurrently. TensorFlow assumes complete control of (all exposed) GPU memory. Running `sess.run` in two different processes or threads concurrently will cause issues.

-

swapnil agashe over 2 years

@Vikesh Can you clarify with a small code sample, please? I have been facing the same issue but have not been able to find a solution.
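One possible way to follow the "do not run `sess.run` concurrently" advice while keeping a background thread is to guard every run call with a single shared lock. This is a different technique from the fix described in the answer (a common session on one thread), and this thread does not confirm that it removes the NaN divergence. `RUN_LOCK`, `run_exclusive`, `FakeSession`, and `demo` are hypothetical names; `FakeSession` only stands in for a real session so the sketch runs without TensorFlow.

```python
import threading
import time

# One lock shared by every model, so at most one run call is in flight.
RUN_LOCK = threading.Lock()


def run_exclusive(run_fn, *args, **kwargs):
    """Call run_fn (for example a session's run method) while holding RUN_LOCK."""
    with RUN_LOCK:
        return run_fn(*args, **kwargs)


class FakeSession:
    """Hypothetical stand-in for a session; tracks overlapping run calls."""
    active = 0
    max_active = 0
    _counter_lock = threading.Lock()

    def run(self, value):
        with FakeSession._counter_lock:
            FakeSession.active += 1
            FakeSession.max_active = max(FakeSession.max_active, FakeSession.active)
        time.sleep(0.001)  # pretend to do some work
        with FakeSession._counter_lock:
            FakeSession.active -= 1
        return value * 2


def demo():
    """Run two fake sessions from two threads and report the peak overlap."""
    FakeSession.active = 0
    FakeSession.max_active = 0
    first, second = FakeSession(), FakeSession()
    results = []

    def worker(sess, values):
        for v in values:
            results.append(run_exclusive(sess.run, v))

    threads = [
        threading.Thread(target=worker, args=(first, range(5))),
        threading.Thread(target=worker, args=(second, range(5, 10))),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(results), FakeSession.max_active
```

Because both threads go through `run_exclusive`, the peak number of simultaneous `run` calls reported by `demo()` stays at one. The lock only serializes calls within a single process; it does nothing for two separate processes sharing a GPU.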
