Non-linear regression in C#

Solution 1

I used the MathNet.Iridium release because it is compatible with .NET 3.5 and VS2008. The method is based on the Vandermonde matrix. Then I created a class to hold my polynomial regression

using MathNet.Numerics.LinearAlgebra;

public class PolynomialRegression

{

Vector x_data, y_data, coef;

int order;

public PolynomialRegression(Vector x_data, Vector y_data, int order)

{

if (x_data.Length != y_data.Length)

{

throw new IndexOutOfRangeException();

}

this.x_data = x_data;

this.y_data = y_data;

this.order = order;

int N = x_data.Length;

Matrix A = new Matrix(N, order + 1);

for (int i = 0; i < N; i++)

{

A.SetRowVector( VandermondeRow(x_data[i]) , i);

}

// Least Squares of |y=A(x)*c|

// tr(A)*y = tr(A)*A*c

// inv(tr(A)*A)*tr(A)*y = c

Matrix At = Matrix.Transpose(A);

Matrix y2 = new Matrix(y_data, N);

coef = (At * A).Solve(At * y2).GetColumnVector(0);

}

Vector VandermondeRow(double x)

{

double[] row = new double[order + 1];

for (int i = 0; i <= order; i++)

{

row[i] = Math.Pow(x, i);

}

return new Vector(row);

}

public double Fit(double x)

{

return Vector.ScalarProduct( VandermondeRow(x) , coef);

}

public int Order { get { return order; } }

public Vector Coefficients { get { return coef; } }

public Vector XData { get { return x_data; } }

public Vector YData { get { return y_data; } }

}

which then I use it like this:

using MathNet.Numerics.LinearAlgebra;

class Program

{

static void Main(string[] args)

{

Vector x_data = new Vector(new double[] { 0, 1, 2, 3, 4 });

Vector y_data = new Vector(new double[] { 1.0, 1.4, 1.6, 1.3, 0.9 });

var poly = new PolynomialRegression(x_data, y_data, 2);

Console.WriteLine("{0,6}{1,9}", "x", "y");

for (int i = 0; i < 10; i++)

{

double x = (i * 0.5);

double y = poly.Fit(x);

Console.WriteLine("{0,6:F2}{1,9:F4}", x, y);

}

}

}

Calculated coefficients of [1,0.57,-0.15] with the output:

x y

0.00 1.0000

0.50 1.2475

1.00 1.4200

1.50 1.5175

2.00 1.5400

2.50 1.4875

3.00 1.3600

3.50 1.1575

4.00 0.8800

4.50 0.5275

Which matches the quadratic results from Wolfram Alpha.

Edit 1

To get to the fit you want try the following initialization for x_data and y_data:

Matrix points = new Matrix( new double[,] { { 1, 82.96 },

{ 2, 86.23 }, { 3, 87.09 }, { 4, 84.28 },

{ 5, 83.69 }, { 6, 89.18 }, { 7, 85.71 },

{ 8, 85.05 }, { 9, 85.58 }, { 10, 86.95 },

{ 11, 87.95 }, { 12, 89.44 }, { 13, 93.47 } } );

Vector x_data = points.GetColumnVector(0);

Vector y_data = points.GetColumnVector(1);

which produces the following coefficients (from lowest power to highest)

Coef=[85.892,-0.5542,0.074990]

x y

0.00 85.8920

1.00 85.4127

2.00 85.0835

3.00 84.9043

4.00 84.8750

5.00 84.9957

6.00 85.2664

7.00 85.6871

8.00 86.2577

9.00 86.9783

10.00 87.8490

11.00 88.8695

12.00 90.0401

13.00 91.3607

14.00 92.8312

Solution 2

@ja72 code is pretty good. But I ported it on the present version of Math.NET (MathNet.Iridium is not supported for now as I understand) and optimized code size and performance (For instance, Math.Pow function is not used in my solution because of slow performance).

public class PolynomialRegression

{

private int _order;

private Vector<double> _coefs;

public PolynomialRegression(DenseVector xData, DenseVector yData, int order)

{

_order = order;

int n = xData.Count;

var vandMatrix = new DenseMatrix(xData.Count, order + 1);

for (int i = 0; i < n; i++)

vandMatrix.SetRow(i, VandermondeRow(xData[i]));

// var vandMatrixT = vandMatrix.Transpose();

// 1 variant:

//_coefs = (vandMatrixT * vandMatrix).Inverse() * vandMatrixT * yData;

// 2 variant:

//_coefs = (vandMatrixT * vandMatrix).LU().Solve(vandMatrixT * yData);

// 3 variant (most fast I think. Possible LU decomposion also can be replaced with one triangular matrix):

_coefs = vandMatrix.TransposeThisAndMultiply(vandMatrix).LU().Solve(TransposeAndMult(vandMatrix, yData));

}

private Vector<double> VandermondeRow(double x)

{

double[] result = new double[_order + 1];

double mult = 1;

for (int i = 0; i <= _order; i++)

{

result[i] = mult;

mult *= x;

}

return new DenseVector(result);

}

private static DenseVector TransposeAndMult(Matrix m, Vector v)

{

var result = new DenseVector(m.ColumnCount);

for (int j = 0; j < m.RowCount; j++)

{

double v_j = v[j];

for (int i = 0; i < m.ColumnCount; i++)

result[i] += m[j, i] * v_j;

}

return result;

}

public double Calculate(double x)

{

return VandermondeRow(x) * _coefs;

}

}

It's also available on github:gist.

Solution 3

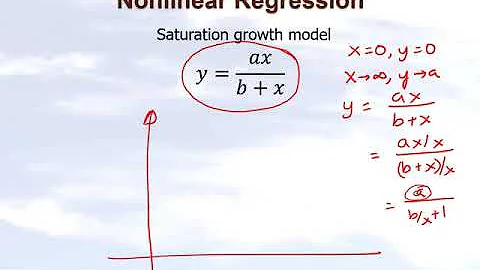

I don't think you want non linear regression. Even if you are using a quadratic function, it is still called linear regression. What you want is called multivariate regression. If you want a quadratic you just add a x squared term to your dependent variables.

Related videos on Youtube

Polynomial

Security researcher, ex-pentester, ex-developer, boat-botherer, reverse engineer, amateur cryptographer, electronics tinkerer, digital rights proponent, laser enthusiast, LD wannabe, half-orc nobleman of the evening, promulgator of useless information. Primary areas of knowledge: Security, Cryptography, Malware, Win32 API, Windows Internals, Marine & Industrial Control, Electronics, Embedded Systems, C#, .NET, x86 assembly, C, PHP, Python All answers and comments are encrypted with ROT256-ECB. Opinions are my own. Advice provided with no warranty.

Updated on June 04, 2022Comments

-

Polynomial almost 2 years

I'm looking for a way to produce a non-linear (preferably quadratic) curve, based on a 2D data set, for predictive purposes. Right now I'm using my own implementation of ordinary least squares (OLS) to produce a linear trend, but my trends are much more suited to a curve model. The data I'm analysing is system load over time.

Here's the equation that I'm using to produce my linear coefficients:

I've had a look at Math.NET Numerics and a few other libs, but they either provide interpolation instead of regression (which is of no use to me), or the code just doesn't work in some way.

Anyone know of any free open source libs or code samples that can produce the coefficients for such a curve?

-

Polynomial over 12 yearsThat implementation didn't work for me. It gave completely incorrect results (0/1/0 for the coeffs) out of the box and after some tweaking was still completely innacurate. Nothing like the Wolfram Alpha results I discovered earlier. The math in the link you provided is beyond me, unfortunately.

-

Polynomial over 12 yearsLinear regression gives me a line. I've got no idea how to edit the equation I posted to do anything different. I want to get this kind of result: www3.wolframalpha.com/input/…

-

Tim over 12 yearsUse the formula. But instead of x1 x2 use your x and x squared. If you still have problems I'll try and make an example calc in excel or pseudocode.

-

Polynomial over 12 yearsSolved my problem perfectly. Thanks :)

-

Ivan Kochurkin over 11 yearsI optimized and upgraded your answer :) stackoverflow.com/a/12770686/1046374

Ivan Kochurkin over 11 yearsI optimized and upgraded your answer :) stackoverflow.com/a/12770686/1046374

![[Lập trình Machine learning cơ bản] - Bài 3: Giới thiệu Linear Regression và hàm hθ(x) | HowKteam](https://i.ytimg.com/vi/_KfLLePcgCs/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLDvmoy7x5-naRVj9gPQJiDIe93PKQ)