OpenCV fisheye calibration cuts too much of the resulting image

Solution 1

I think I ran into a similar issue while looking for an equivalent of the "alpha" knob of getOptimalNewCameraMatrix for the fisheye model.

I calibrated the camera with cv2.fisheye.calibrate and got the following K and D parameters:

    K = [[329.75951163,   0.        , 422.36510555],
         [  0.        , 329.84897388, 266.45855056],
         [  0.        ,   0.        ,   1.        ]]

    D = [[ 0.04004325],
         [ 0.00112638],
         [ 0.01004722],
         [-0.00593285]]

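This is what I get with:

    import cv2
    import numpy as np

    # img is the fisheye frame; K and D are the calibration results above (NumPy arrays)
    map1, map2 = cv2.fisheye.initUndistortRectifyMap(K, D, np.eye(3), K, (800, 600), cv2.CV_16SC2)
    undistorted = cv2.remap(img, map1, map2, interpolation=cv2.INTER_LINEAR, borderMode=cv2.BORDER_CONSTANT)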
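The following pure-Python sketch shows what each entry of those maps encodes. It follows the fisheye model described in the OpenCV documentation, with R = identity and the skew coefficient ignored. For an output pixel, it back-projects through the new camera matrix, applies the θ polynomial with D, and projects through the calibrated K. The function name `fisheye_source_pixel` is hypothetical, D is taken as a flat list of four floats, and the source frame is assumed to be 800x600 like the output.

```python
import math

def fisheye_source_pixel(u, v, K, D, new_K):
    """Return the (x, y) fisheye source pixel sampled by undistorted output pixel (u, v).
    Assumes R = identity and zero skew; D is a flat list [k1, k2, k3, k4]."""
    fx, fy, cx, cy = K[0][0], K[1][1], K[0][2], K[1][2]
    nfx, nfy, ncx, ncy = new_K[0][0], new_K[1][1], new_K[0][2], new_K[1][2]
    k1, k2, k3, k4 = D
    # Back-project the output pixel to a normalized pinhole ray (z = 1).
    x = (u - ncx) / nfx
    y = (v - ncy) / nfy
    r = math.hypot(x, y)
    # Angle between that ray and the optical axis.
    theta = math.atan(r)
    # Fisheye polynomial: theta_d = theta * (1 + k1 θ^2 + k2 θ^4 + k3 θ^6 + k4 θ^8).
    t2 = theta * theta
    theta_d = theta * (1 + k1 * t2 + k2 * t2**2 + k3 * t2**3 + k4 * t2**4)
    scale = theta_d / r if r > 1e-12 else 1.0
    # Project the distorted normalized point with the calibrated intrinsics.
    return fx * x * scale + cx, fy * y * scale + cy

K = [[329.75951163, 0.0, 422.36510555],
     [0.0, 329.84897388, 266.45855056],
     [0.0, 0.0, 1.0]]
D = [0.04004325, 0.00112638, 0.01004722, -0.00593285]
# Source pixel sampled by the top-left corner of the output when K is reused as new_K.
src_x, src_y = fisheye_source_pixel(0, 0, K, D, K)
```

With K reused as the new camera matrix, the output's top-left corner samples a point well inside the fisheye frame. Everything outside that region never reaches the output, which is the cropping described here.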

In my opinion it crops too much: I want to see the whole Rubik's cube.

I fixed it with:

    nk = K.copy()
    nk[0, 0] = K[0, 0] / 2  # halve fx
    nk[1, 1] = K[1, 1] / 2  # halve fy
    # Just by scaling the matrix coefficients!

    # Pass the original K as the 1st argument and the scaled nk as the 4th (new camera matrix)
    map1, map2 = cv2.fisheye.initUndistortRectifyMap(K, D, np.eye(3), nk, (800, 600), cv2.CV_16SC2)
    undistorted = cv2.remap(img, map1, map2, interpolation=cv2.INTER_LINEAR, borderMode=cv2.BORDER_CONSTANT)

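TADA! A smaller focal length in the new camera matrix maps a wider field of view onto the same 800x600 output, so less of the fisheye image is cut off.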
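A quick way to see why halving fx helps is to compute the horizontal field of view that a pinhole output of width w covers: 2·atan((w/2)/fx). The sketch below assumes the principal point is at the image center. Here cx is about 422 rather than 400, so the numbers are approximate. The helper name `horizontal_fov_deg` is hypothetical.

```python
import math

def horizontal_fov_deg(fx, width):
    """Horizontal FOV (degrees) of a pinhole output image of the given width,
    with focal length fx in pixels and the principal point at the image center."""
    return math.degrees(2 * math.atan((width / 2) / fx))

fx = 329.75951163  # K[0, 0] from the calibration above
fov_original = horizontal_fov_deg(fx, 800)    # K reused as the new camera matrix
fov_halved = horizontal_fov_deg(fx / 2, 800)  # nk, with halved focal lengths
print(f"{fov_original:.0f} deg -> {fov_halved:.0f} deg")
```

For these values the visible horizontal FOV grows from roughly 101 degrees to roughly 135 degrees. It can only approach 180 degrees as the focal length approaches zero.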

Solution 2

As Paul Bourke points out:

a fisheye projection is not a "distorted" image, and the process isn't a "dewarping". A fisheye like other projections is one of many ways of mapping a 3D world onto a 2D plane, it is no more or less "distorted" than other projections including a rectangular perspective projection

To get a projection without any cropping (assuming your camera has a FOV of about 180 degrees), you can project the fisheye image onto a square with a program like this one:

Source code:

    // - compile with (OpenCV 4; older installs may use "opencv" instead of "opencv4"):
    //   g++ fist2rect.cpp -o fist2rect $(pkg-config --cflags --libs opencv4)
    // - execute:
    //   ./fist2rect input.jpg output.jpg

    #include <cmath>
    #include <iostream>
    #include <opencv2/core.hpp>
    #include <opencv2/imgcodecs.hpp>

    using namespace std;
    using namespace cv;

    // Map output pixel (x, y) to the corresponding point in the fisheye source image.
    Point2f getInputPoint(int x, int y, int srcwidth, int srcheight)
    {
        Point2f pfish;
        float theta, phi, r, r2;
        Point3f psph;
        float FOV  = (float)CV_PI / 180 * 180;  // horizontal fisheye FOV: 180 degrees in radians
        float FOV2 = (float)CV_PI / 180 * 180;  // vertical fisheye FOV: 180 degrees in radians
        float width  = srcwidth;
        float height = srcheight;

        // Polar angles
        theta = CV_PI * (x / width - 0.5);   // -pi/2 to pi/2
        phi   = CV_PI * (y / height - 0.5);  // -pi/2 to pi/2

        // Vector in 3D space
        psph.x = cos(phi) * sin(theta);
        psph.y = cos(phi) * cos(theta);
        psph.z = sin(phi) * cos(theta);

        // Calculate fisheye angle and radius
        theta = atan2(psph.z, psph.x);
        phi   = atan2(sqrt(psph.x * psph.x + psph.z * psph.z), psph.y);
        r  = width  * phi / FOV;
        r2 = height * phi / FOV2;

        // Pixel in fisheye space
        pfish.x = 0.5 * width  + r  * cos(theta);
        pfish.y = 0.5 * height + r2 * sin(theta);
        return pfish;
    }

    int main(int argc, char **argv)
    {
        if (argc < 3)
        {
            cerr << "Usage: " << argv[0] << " input.jpg output.jpg\n";
            return 1;
        }
        Mat originalImage = imread(argv[1]);  // loaded as 8-bit, 3-channel BGR
        if (originalImage.empty())
        {
            cerr << "Empty image\n";
            return 1;
        }
        // Start from black so output pixels that map outside the source are defined.
        Mat outImage = Mat::zeros(originalImage.rows, originalImage.cols, CV_8UC3);

        for (int i = 0; i < outImage.cols; i++)
        {
            for (int j = 0; j < outImage.rows; j++)
            {
                Point2f inP = getInputPoint(i, j, originalImage.cols, originalImage.rows);
                Point inP2((int)inP.x, (int)inP.y);  // truncate to integer pixel coordinates, no interpolation
                if (inP2.x >= originalImage.cols || inP2.y >= originalImage.rows)
                    continue;
                if (inP2.x < 0 || inP2.y < 0)
                    continue;
                outImage.at<Vec3b>(Point(i, j)) = originalImage.at<Vec3b>(inP2);
            }
        }
        imwrite(argv[2], outImage);
        return 0;
    }


NoShadowKick

Updated on June 04, 2022

Comments

-

NoShadowKick almost 2 years

I am using OpenCV to calibrate images taken using cameras with fish-eye lenses.

The functions I am using are:

- findChessboardCorners(...) to find the corners of the calibration pattern.
- cornerSubPix(...) to refine the found corners.
- fisheye::calibrate(...) to calibrate the camera matrix and the distortion coefficients.
- fisheye::undistortImage(...) to undistort the images using the camera info obtained from calibration.

While the resulting image does appear to look good (straight lines and so on), my issue is that the function cuts away too much of the image.

This is a real problem: I am using four cameras mounted 90 degrees apart, and when this much of the sides is cut off, there is no overlap between neighboring views, which I need in order to stitch the images.

I looked into using fisheye::estimateNewCameraMatrixForUndistortRectify(...), but I could not get it to give good results because I do not know what to pass as the R input: the rotation vector output of fisheye::calibrate is 3xN (where N is the number of calibration images), while fisheye::estimateNewCameraMatrixForUndistortRectify requires a 1x3 or 3x3 input.

The images below show my undistortion result and an example of the kind of result I would ideally want.

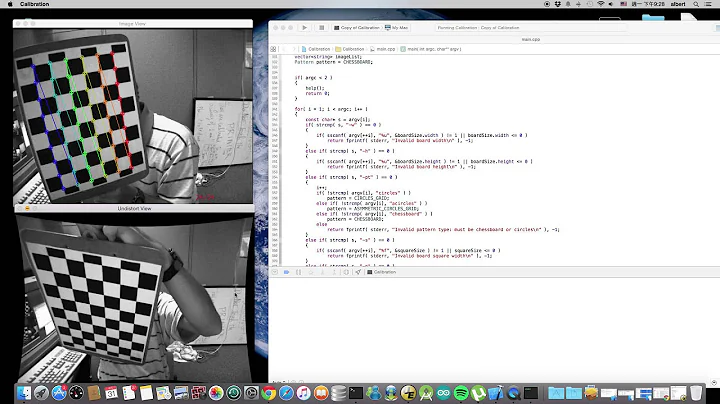

Undistortion:

Example of wanted result:

-

NoShadowKick over 8 years: I tried that, and for regular images (the ones in the OpenCV samples folders) it gave the kind of result I wanted; however, when I use it on my fisheye images, it does not.

-

NoShadowKick over 8 years

-

alexisrozhkov over 8 years: Your images seem to be from a very wide-angle camera (~180 degrees FOV). If you want to include all pixels, you'll have to make an infinitely large image, since pixels at 90 degrees or more from the main camera axis would be projected to infinity. So you'll have to discard some of them in order to get a finite-sized image. By the way, what alpha have you used?
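The infinity argument can be made explicit. A rectilinear (pinhole) output places a ray at angle θ from the optical axis at radius f·tan θ. The fisheye model in OpenCV's documentation instead uses a polynomial in θ itself, with coefficients k1 to k4 from D:

$$
r_{\text{pinhole}} = f \tan\theta,
\qquad
r_{\text{fisheye}} = f\,\theta_d,
\quad
\theta_d = \theta\left(1 + k_1\theta^2 + k_2\theta^4 + k_3\theta^6 + k_4\theta^8\right)
$$

The fisheye radius stays finite for every θ up to 90 degrees and beyond. By contrast, tan θ diverges as θ approaches 90 degrees (a total FOV of 180 degrees); already at θ = 80 degrees, tan θ ≈ 5.67. Any finite undistorted image therefore has to drop the outermost rays.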

-

NoShadowKick over 8 years: Yes, as stated, it is a fisheye lens camera with a very wide FOV. I am using 1.0, as you suggested in your earlier comment.

-

alexisrozhkov over 8 years: Hmm, then maybe you're better off constructing the new camera matrix yourself. It seems that OpenCV has some kind of limit on the scale.
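Following this suggestion, here is a minimal pure-Python sketch of building the new camera matrix by hand. You choose the horizontal FOV the undistorted output should cover and invert the pinhole relation tan(hfov/2) = (w/2)/f. The helper name `camera_matrix_for_fov` is hypothetical, and it assumes square pixels and a centered principal point. The result, converted with np.array, would go in the fourth (new camera matrix) argument of cv2.fisheye.initUndistortRectifyMap.

```python
import math

def camera_matrix_for_fov(width, height, hfov_deg):
    """Pinhole camera matrix (nested lists) whose width x height output spans
    hfov_deg horizontally, with square pixels and a centered principal point."""
    if not 0 < hfov_deg < 180:
        raise ValueError("a pinhole view needs 0 < hfov < 180 degrees")
    f = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    return [[f, 0.0, width / 2],
            [0.0, f, height / 2],
            [0.0, 0.0, 1.0]]

new_K = camera_matrix_for_fov(800, 600, 150)  # e.g. ask for 150 degrees horizontally
```

As hfov approaches 180 degrees, f shrinks toward zero. The image center then collapses to a few pixels while the periphery stretches, so a value comfortably below the lens FOV is the practical compromise.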

-

NoShadowKick over 8 years: These are my inputs to the function: new_cam_matrix = getOptimalNewCameraMatrix(cam_matrix, dist_coeffs, image_size, alpha);

-

NoShadowKick over 8 years: I think I should use fisheye::estimateNewCameraMatrixForUndistortRectify, as it is the function intended for estimating the new camera matrix for wide-FOV lens images, but I can't figure out how to get the R matrix.

-

Papershine about 6 years: This does not provide an answer to the question. You can search for similar questions, or refer to the related and linked questions on the right-hand side of the page to find an answer. If you have a related but different question, ask a new question, and include a link to this one to help provide context. See: Ask questions, get answers, no distractions


Zhengfang Xin over 2 years: Why is the image asymmetric?
