Oracle T4CPreparedStatement memory leaks?

Solution 1

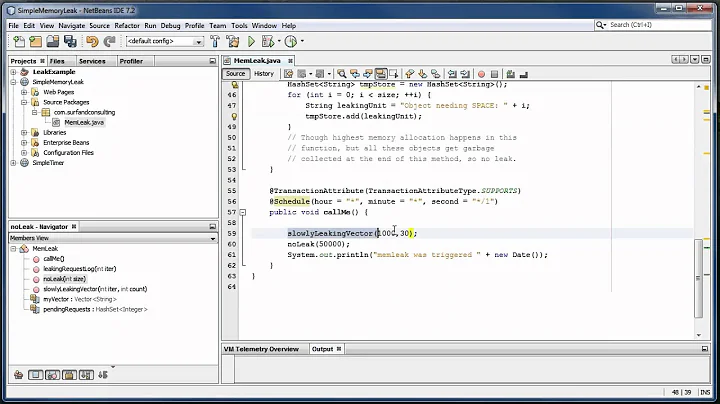

While possible, it seems unlikely you've found a huge memory leak in 11g. I would start by getting the actual SQL from the leaked cursors and looking in code for where that SQL is created. A very common cause of leaked cursors I have found in the past is code like this:

try {

PreparedStatment stmt = null;

stmt = con.prepareStatement("SOME AWESOME SQL");

//lots of lines of code that masks the problem

stmt = con.prepareStatment("DIFFERENT SQL"); //You just leaked "SOME AWESOME SQL"!!!

//lots more code

} finally {

stmt.close() //looks like everything is ok, but only the second one actually got closed

}

Solution 2

I encountered the same issue. Although Affe's leak could be the problem, that wasn't my issue and I found a different answer after some digging:

The Oracle JDBC driver maintains buffers into which data is read as a performance optimisation. The buffer size is computed based on the maximum possible row size (so VARCHAR(2000) would allocate something like 2000 chars), multiplied by the JDBC fetch size. This allows the driver to read data directly into the buffer, rather than allocating on demand which would (apparently) be slower.

Each prepared statement within each connection maintains a buffer of this kind. If you are using a large connection pool with statement caching (or you cache PreparedStatement objects manually, or leak them...) then you can rapidly eat up a lot of heap space. 1.6GB in my case!

This is all explained by Oracle themselves in a PDF here

My experience was based on the 11.2.0.3 driver.

Related videos on Youtube

Comments

-

Jay almost 2 years

A little background on the application that I am gonna talk about in the next few lines:

XYZ is a data masking workbench eclipse RCP application: You give it a source table column, and a target table column, it would apply a trasformation (encryption/shuffling/etc) and copy the row data from source table to target table. Now, when I mask n tables at a time, n threads are launched by this app.

Here is the issue:

I have run into a production issue on first roll out of the above said app. Unfortunately, I don't have any logs to get to the root. However, I tried to run this app in test region and do a stress test.

When I collected .hprof files and ran 'em through an analyzer (yourKit), I noticed that objects of oracle.jdbc.driver.T4CPreparedStatement were retaining heap. The analysis also tells me that one of my classes is holding a reference to this preparedstatement object and thereby, n threads have n such objects. T4CPreparedStatement seemed to have character arrays: lastBoundChars and bindChars each of size char[300000].

So, I researched a bit (google!), obtained ojdbc6.jar and tried decompiling T4CPreparedStatement. I see that T4CPreparedStatement extends OraclePreparedStatement, which dynamically manages array size of lastBoundChars and bindChars.

So, my questions here are:

- Have you ever run into an issue like this?

- Do you know the significance of lastBoundChars / bindChars?

- I am new to profiling, so do you think I am not doing it correct? (I also ran the hprofs through MAT - and this was the main identified issue - so, I don't really think I could be wrong?)

I have found something similar on the web here: http://forums.oracle.com/forums/thread.jspa?messageID=2860681

Appreciate your suggestions / advice.

-

Jay almost 14 yearsAffe, thank you, for the hint. I will scan my code for this and post updates