PyTorch NotImplementedError in forward


Solution 1

Look carefully at the indentation of your `__init__` function: your `forward` is defined inside `__init__`, so it is a local function of `__init__` and not a method of your module.
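To make the effect of the indentation visible, here is a minimal sketch that does not need PyTorch. The `Module` class is a hypothetical stand-in for `nn.Module`, written only to mirror the dispatch shown in the traceback (`__call__` calls `self.forward`, and the base `forward` raises `NotImplementedError`). `Broken` and `Fixed` are illustrative names, not part of the original code.

```python
class Module:
    """Torch-free stand-in for nn.Module (an assumption for illustration)."""

    def __call__(self, *args, **kwargs):
        # Mirrors the dispatch seen in the traceback: __call__ -> self.forward
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError


class Broken(Module):
    def __init__(self):
        super().__init__()
        self.scale = 2

        # Indented one level too deep: this is a local function of __init__
        # and is discarded when __init__ returns. The class gets no forward.
        def forward(self, x):
            return x * self.scale


class Fixed(Module):
    def __init__(self):
        super().__init__()
        self.scale = 2

    # Same indentation as __init__: a real method overriding Module.forward.
    def forward(self, x):
        return x * self.scale


try:
    Broken()(3)
    broken_raised = False
except NotImplementedError:
    broken_raised = True

fixed_result = Fixed()(3)
print(broken_raised, fixed_result)
```

With a real `nn.Module` subclass the fix is the same: dedent `def forward` by one level so it lines up with `def __init__`.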

Solution 2

This error happens when you don't implement a method required by the superclass. In my case, I had a typo in the function name `forward`. I also recommend checking your code indentation.
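A misspelled method name can be caught before the first call by checking whether the class really overrides `forward`. The sketch below uses a hypothetical torch-free `Module` base class and a hypothetical helper, `overrides_forward`. The same comparison against `torch.nn.Module` should behave the same way, but that is an assumption and is not tested here.

```python
class Module:
    """Torch-free stand-in for nn.Module (an assumption for illustration)."""

    def forward(self, *args, **kwargs):
        raise NotImplementedError


class Typo(Module):
    # Misspelled: this defines a new method and overrides nothing.
    def foward(self, x):
        return x + 1


class Correct(Module):
    def forward(self, x):
        return x + 1


def overrides_forward(obj, base=Module):
    """Return True if obj's class defines its own forward (hypothetical helper)."""
    return type(obj).forward is not base.forward


typo_ok = overrides_forward(Typo())
correct_ok = overrides_forward(Correct())
print(typo_ok, correct_ok)
```

A check like this could go in a unit test so that a typo or an indentation slip fails early instead of at the first training step.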

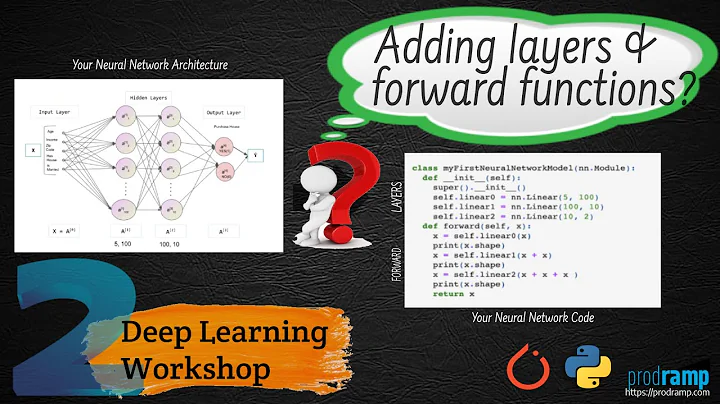

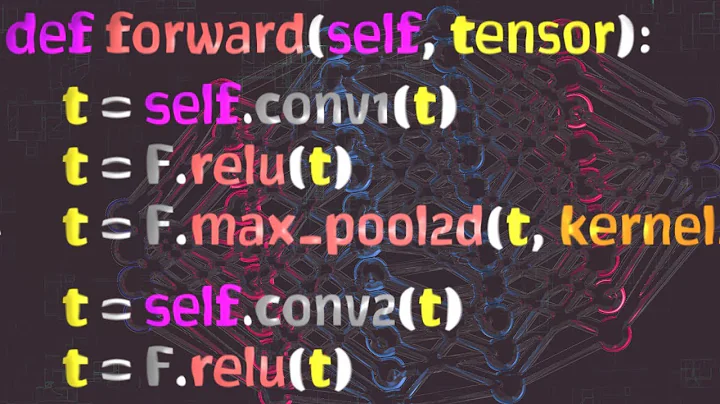


Author by

marlo

Updated on September 14, 2022

Comments


marlo over 1 year

```python
import torch
import torch.nn as nn

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')


class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.layer = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(16),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),  # 16x16x650
            nn.Conv2d(16, 32, kernel_size=3, stride=1, padding=1),  # 32x16x650
            nn.ReLU(),
            nn.Dropout2d(0.5),
            nn.Conv2d(32, 64, kernel_size=3, stride=1, padding=1),  # 64x16x650
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),  # 64x8x325
            nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            nn.ReLU())  # 64x8x325
        self.fc = nn.Sequential(
            nn.Linear(64*8*325, 128),
            nn.ReLU(),
            nn.Linear(128, 256),
            nn.ReLU(),
            nn.Linear(256, 1),
        )

        def forward(self, x):
            out = self.layer1(x)
            out = self.layer2(out)
            out = out.reshape(out.size(0), -1)
            out = self.fc(out)
            return out


# HYPERPARAMETER
learning_rate = 0.0001
num_epochs = 15

import data


def main():
    model = Model().to(device)
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

    total_step = len(data.train_loader)
    for epoch in range(num_epochs):
        for i, (images, labels) in enumerate(data.train_loader):
            images = images.to(device)
            labels = labels.to(device)

            outputs = model(images)
            loss = criterion(outputs, labels)

            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            if (i + 1) % 100 == 0:
                print('Epoch [{}/{}], Step [{}/{}], Loss: {:.4f}'
                      .format(epoch + 1, num_epochs, i + 1, total_step, loss.item()))

    model.eval()
    with torch.no_grad():
        correct = 0
        total = 0
        for images, labels in data.test_loader:
            images = images.to(device)
            labels = labels.to(device)
            outputs = model(images)
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

        print('Test Accuracy of the model on the 10000 test images: {} %'.format(100 * correct / total))


if __name__ == '__main__':
    main()
```

Error:

```
  File "/home/rladhkstn8/Desktop/SWID/tmp/pycharm_project_853/model.py", line 82, in <module>
    main()
  File "/home/rladhkstn8/Desktop/SWID/tmp/pycharm_project_853/model.py", line 56, in main
    outputs = model(images)
  File "/home/rladhkstn8/anaconda3/lib/python3.6/site-packages/torch/nn/modules/module.py", line 477, in __call__
    result = self.forward(*input, **kwargs)
  File "/home/rladhkstn8/anaconda3/lib/python3.6/site-packages/torch/nn/modules/module.py", line 83, in forward
    raise NotImplementedError
NotImplementedError
```

I understand that `NotImplementedError` is raised when some code is left unimplemented, but I do not know where the problem is.

codecubed over 2 years: This can also happen if you try to call forward on a `ModuleList` instead of `Sequential`.


Sameen about 3 years: I don't understand what you mean. A code example would have been helpful.


pydrink almost 3 years: @samisnotinsane If you were to hold a ruler vertically from where you have defined `__init__` and run it straight down your code, `forward` should be defined where that ruler hits its line. Instead, yours is indented one tab in from the ruler, i.e. there is a space of one tab between the ruler and `forward`. You have indented `def forward` with two tabs instead of one like `def __init__`. This means that you've defined `forward` within `__init__`, when it should be its own method, independent of `__init__`.
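The ruler check can also be approximated in code. The sketch below uses the standard-library `ast` module to list any function defined inside an `__init__` method. The helper name `nested_defs_in_init` and the sample `SOURCE` string are hypothetical, and this is one possible check, not something the original posters used.

```python
import ast
import textwrap

# A small sample with the same mistake as the question: forward sits
# one indentation level too deep, inside __init__.
SOURCE = textwrap.dedent('''
    class Model:
        def __init__(self):
            self.scale = 2

            def forward(self, x):
                return x * self.scale
''')


def nested_defs_in_init(source):
    """Return names of functions defined inside any __init__ in `source`."""
    found = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef) and node.name == "__init__":
            for child in ast.walk(node):
                # ast.walk yields the __init__ node itself first; skip it.
                if isinstance(child, ast.FunctionDef) and child is not node:
                    found.append(child.name)
    return found


nested = nested_defs_in_init(SOURCE)
print(nested)
```

To check a real file, pass its text to the helper, for example the result of reading `model.py`. A non-empty list points at definitions that probably need to be dedented.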