Read SAS sas7bdat data with Spark

Solution 1

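It looks like the package is not on Spark's classpath. You have to pass --packages saurfang:spark-sas7bdat:2.0.0-s_2.10 when running spark-submit or pyspark. The -s_2.10 suffix is the Scala version the package was built for, so pick the one that matches your Spark build. See: https://spark-packages.org/package/saurfang/spark-sas7bdat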
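The part after `-s_` is the Scala version the package was built against. Below is a minimal sketch that assembles the command line. It assumes 2.0.0 is the release you want and that you know your Spark build's Scala version (`spark-submit --version` reports it). `sas7bdat_coordinate`, `launcher_args` and `my_job.py` are hypothetical names used only for illustration.

```python
import shlex
from typing import List, Optional


def sas7bdat_coordinate(scala_binary_version: str, version: str = "2.0.0") -> str:
    """Build the spark-packages coordinate, e.g. saurfang:spark-sas7bdat:2.0.0-s_2.11."""
    # The -s_X.Y suffix must match the Scala version your Spark was built with.
    return f"saurfang:spark-sas7bdat:{version}-s_{scala_binary_version}"


def launcher_args(launcher: str, scala_binary_version: str,
                  app: Optional[str] = None) -> List[str]:
    """Return argv for spark-submit or pyspark with the package passed via --packages."""
    args = [launcher, "--packages", sas7bdat_coordinate(scala_binary_version)]
    # Options must come before the application file: anything after it is
    # handed to the application, not to spark-submit.
    if app is not None:
        args.append(app)
    return args


if __name__ == "__main__":
    # my_job.py is a placeholder for your own PySpark script.
    print(shlex.join(launcher_args("spark-submit", "2.11", "my_job.py")))
```

Run as a script, this prints `spark-submit --packages saurfang:spark-sas7bdat:2.0.0-s_2.11 my_job.py`. For an interactive shell, use `launcher_args("pyspark", "2.11")`, which leaves out the application file.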

You could also download the JAR file from that page and run your pyspark or spark-submit command with --jars /path/to/jar.
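If you go the `--jars` route, keep in mind that `--jars` does not resolve transitive dependencies the way `--packages` does. Any library that spark-sas7bdat itself depends on has to be downloaded and listed as well, comma-separated. Below is a small sketch that checks the files exist before it builds the command. `jars_args` is a hypothetical helper, and the jar paths are whatever you downloaded.

```python
import os
from typing import List, Optional, Sequence


def jars_args(jar_paths: Sequence[str], launcher: str = "spark-submit",
              app: Optional[str] = None) -> List[str]:
    """Return argv that ships locally downloaded jars via --jars."""
    missing = [p for p in jar_paths if not os.path.isfile(p)]
    if missing:
        # Fail early; a bad path may otherwise only show up later as
        # "Failed to find data source" when .load() is called.
        raise FileNotFoundError(", ".join(missing))
    # --jars takes one comma-separated list of paths.
    args = [launcher, "--jars", ",".join(os.path.abspath(p) for p in jar_paths)]
    if app is not None:
        args.append(app)
    return args
```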

Solution 2

I've just sorted this issue out in R, and I'd bet my 2 cents it's the same issue here. The problem seems to be that the repository hosting the package that provides com.github.saurfang.sas.spark is unavailable or not specified. It should be https://repos.spark-packages.org/

We're all stumped because the error printed to the console here (and in R) is only slightly informative. It just says:

Failed to find data source: com.github.saurfang.sas.spark

When I ran the R call to spark-submit directly in cmd (I'm using Windows), lo and behold, it showed the attempts to download the package providing com.github.saurfang.sas.spark from various caches and repos, but not from the one referenced above.

Adding that repository fixed everything. This is the resulting call, which downloads the Spark package (using sparklyr in R):

spark-submit2.cmd --driver-memory 32G --name sparklyr --class sparklyr.Shell --packages "saurfang:spark-sas7bdat:2.0.0-s_2.11" --repositories https://repos.spark-packages.org/ "...\sparklyr\java\sparklyr-2.0-2.11.jar" 8880 41823
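The same fix can be expressed from PySpark through the `spark.jars.packages` and `spark.jars.repositories` settings, which are the configuration counterparts of `--packages` and `--repositories`. This is a sketch under a few assumptions: your Spark version supports `spark.jars.repositories`, your Spark uses Scala 2.11 (hence `-s_2.11`), and no SparkContext exists yet in the Python process. In a notebook where `sc` is already running, these settings will not pull the package in, so you would need to restart first. `sas_conf`, `build_session` and `read_sas` are hypothetical names.

```python
from typing import Dict

# Assumed coordinate; change the -s_ suffix to match your Spark's Scala version.
SAS_PACKAGE = "saurfang:spark-sas7bdat:2.0.0-s_2.11"
SPARK_PACKAGES_REPO = "https://repos.spark-packages.org/"


def sas_conf(package: str = SAS_PACKAGE,
             repo: str = SPARK_PACKAGES_REPO) -> Dict[str, str]:
    """Config equivalent to --packages ... --repositories ... on the command line."""
    return {
        "spark.jars.packages": package,
        "spark.jars.repositories": repo,
    }


def build_session(app_name: str = "read-sas7bdat"):
    """Create a SparkSession with the SAS reader on the classpath.

    Only effective if no SparkContext has been started in this process yet.
    """
    # Imported lazily so sas_conf() can be used without pyspark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in sas_conf().items():
        builder = builder.config(key, value)
    return builder.getOrCreate()


def read_sas(spark, path: str):
    """Read a .sas7bdat file through the spark-sas7bdat data source."""
    return spark.read.format("com.github.saurfang.sas.spark").load(path)
```

In a fresh process, usage would be `spark = build_session()` followed by `read_sas(spark, "my_table.sas7bdat").printSchema()`.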


Mateo Rod

Updated on June 04, 2022

Comments

-

Mateo Rod almost 2 years

I have a SAS table and I'm trying to read it with Spark. I've tried to use https://github.com/saurfang/spark-sas7bdat, but I couldn't get it to work.

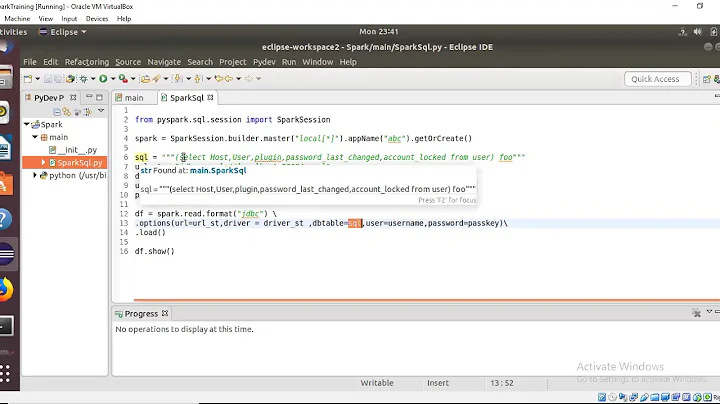

Here is the code:

    from pyspark.sql import SQLContext

    sqlContext = SQLContext(sc)
    df = sqlContext.read.format("com.github.saurfang.sas.spark").load("my_table.sas7bdat")

It returns this error:

    Py4JJavaError: An error occurred while calling o878.load.
    : java.lang.ClassNotFoundException: Failed to find data source: com.github.saurfang.sas.spark. Please find packages at http://spark.apache.org/third-party-projects.html
        at org.apache.spark.sql.execution.datasources.DataSource$.lookupDataSource(DataSource.scala:635)
        at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:190)
        at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:174)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(Unknown Source)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(Unknown Source)
        at java.lang.reflect.Method.invoke(Unknown Source)
        at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)
        at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)
        at py4j.Gateway.invoke(Gateway.java:282)
        at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)
        at py4j.commands.CallCommand.execute(CallCommand.java:79)
        at py4j.GatewayConnection.run(GatewayConnection.java:238)
        at java.lang.Thread.run(Unknown Source)
    Caused by: java.lang.ClassNotFoundException: com.github.saurfang.sas.spark.DefaultSource
        at java.net.URLClassLoader.findClass(Unknown Source)
        at java.lang.ClassLoader.loadClass(Unknown Source)
        at java.lang.ClassLoader.loadClass(Unknown Source)
        at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$23$$anonfun$apply$15.apply(DataSource.scala:618)
        at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$23$$anonfun$apply$15.apply(DataSource.scala:618)
        at scala.util.Try$.apply(Try.scala:192)
        at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$23.apply(DataSource.scala:618)
        at org.apache.spark.sql.execution.datasources.DataSource$$anonfun$23.apply(DataSource.scala:618)
        at scala.util.Try.orElse(Try.scala:84)
        at org.apache.spark.sql.execution.datasources.DataSource$.lookupDataSource(DataSource.scala:618)
        ...

Any ideas?

-

Tom almost 5 years

How is this an answer to the question? It looks more like a new topic to me.

-

Akki almost 5 years

It seems that way only because you're required to create a text version of the corresponding sas7bdat file, but in the end it does solve the problem of reading a sas7bdat file with the same schema. Simply reading the sas7bdat data through saurfang didn't work correctly for me, but this does.

-

Etisha over 3 years

Have you added any package to read a sas7bdat file?

-

Akki over 3 years

Yes, I load the saurfang package when launching spark-submit: spark-submit --packages saurfang:spark-sas7bdat:2.0.0-s_2.10

-

Matthew Son over 2 years

Thanks, this and therinspark.com/contributing.html helped me to solve the issue.