Reading a JSON file into an RDD (not a DataFrame) using PySpark


textFile reads data line by line, and the individual lines of your input are not syntactically valid JSON on their own.
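To see why, the following plain-Python sketch (no Spark required) mimics textFile followed by map(json.loads): it splits a pretty-printed copy of the test.json record into lines and parses each line separately. The multi-line layout is an assumption, since the question shows the object on a single line, and parse_line_by_line is a hypothetical helper.

```python
import json

# Hypothetical pretty-printed test.json content (assumed layout: one object
# spread over several lines, as is typical for hand-written JSON files).
multi_line_json = """{
    "id": 1,
    "name": "A green door",
    "price": 12.50,
    "tags": ["home", "green"]
}"""


def parse_line_by_line(text):
    """Mimic textFile + map(json.loads): parse every line independently.

    Returns a list of (line, parsed_value_or_None) pairs; None marks a line
    that json.loads rejected.
    """
    results = []
    for line in text.splitlines():
        try:
            results.append((line, json.loads(line)))
        except json.JSONDecodeError:
            results.append((line, None))
    return results


line_results = parse_line_by_line(multi_line_json)
failed = [line for line, value in line_results if value is None]

# Parsing the whole text at once succeeds, because it is one complete document.
whole = json.loads(multi_line_json)

print(f"{len(failed)} of {len(line_results)} lines failed to parse")
print(whole["name"])
```

Every line fails: "{" on its own is incomplete, and a fragment such as `"id": 1,` is a string followed by extra characters, not a complete JSON value.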

Just use the JSON reader:

spark.read.json("test.json", multiLine=True)

or, although it is not recommended, read whole text files:

import json
sc.wholeTextFiles("test.json").values().map(json.loads)
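wholeTextFiles produces (path, content) pairs, one per file, so .values() yields the complete text of each file and json.loads sees a whole document. The plain-Python stand-in below replaces the RDD with a list; the file path is made up for illustration.

```python
import json

# Stand-in for sc.wholeTextFiles("test.json"): a list of (path, content)
# pairs, one per file. The path below is hypothetical.
whole_text_files = [
    ("file:/data/test.json",
     '{\n  "id": 1,\n  "name": "A green door",\n'
     '  "price": 12.50,\n  "tags": ["home", "green"]\n}\n'),
]

# Equivalent of .values(): keep only the file contents.
contents = [content for _path, content in whole_text_files]

# Equivalent of .map(json.loads): each element is a complete JSON document,
# so it parses cleanly even though it spans several lines.
records = list(map(json.loads, contents))

print(records[0]["tags"])
```

A likely reason this is "not recommended" is that each file becomes a single record, so one very large file is read as a whole by a single task instead of being split.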

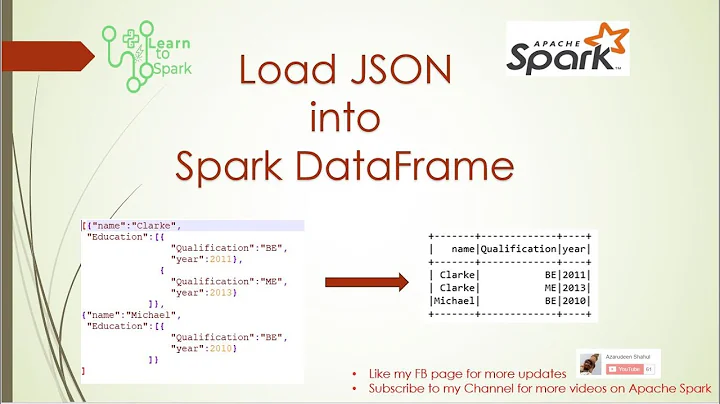


Author: Yash

Updated on June 04, 2022

Comments


Yash almost 2 years

I have the following file, test.json:

{ "id": 1, "name": "A green door", "price": 12.50, "tags": ["home", "green"] }

I want to load this file into an RDD. This is what I tried:

import json

rddj = sc.textFile('test.json')
rdd_res = rddj.map(lambda x: json.loads(x))

I got an error:

Expecting object: line 1 column 1 (char 0)

I don't completely understand what json.loads does. How can I resolve this problem?
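json.loads, from Python's standard json module, takes a string holding one complete JSON document and returns the matching Python object: JSON objects become dicts, arrays become lists, and numbers become int or float. A short illustration using the record from test.json; the exact wording of the error message differs between Python versions, so the sketch only checks that an error is raised.

```python
import json

# One complete JSON document as a Python string.
text = '{ "id": 1, "name": "A green door", "price": 12.50, "tags": ["home", "green"] }'

record = json.loads(text)  # JSON text -> Python objects

print(type(record).__name__)  # dict
print(record["price"])        # 12.5 (a JSON number with a fraction becomes float)
print(record["tags"][0])      # home

# A string that is not a complete JSON document raises an error instead.
error_raised = False
try:
    json.loads('"price": 12.50,')
except json.JSONDecodeError as exc:
    error_raised = True
    print("not valid JSON on its own:", exc.msg)
```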


Alexandre Dupriez over 6 years: Probably a duplicate of stackoverflow.com/questions/39430868/…


ags29 over 6 years: The JSON format is not well suited to Spark's textFile, because textFile processes the input line by line, whereas your JSON objects span multiple lines. If you can get your data in the JSON Lines format (each JSON object "flattened" onto a single line), that will work. Alternatively, you can keep the data in the format above and use sc.wholeTextFiles. This returns a key/value RDD, where the key is the filename and the value is the file content. You can then process it by wrapping the json.loads call above in a function that you apply via mapPartitions.
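Both options from this comment can be sketched in plain Python, without Spark. parse_files is a hypothetical function of the shape mapPartitions expects (it receives an iterator over one partition's elements and returns an iterator), and to_json_lines, also hypothetical, shows what "one flattened object per line" means. The file names and contents are invented for the example.

```python
import json
from typing import Any, Iterable, Iterator, Tuple


def parse_files(partition: Iterable[Tuple[str, str]]) -> Iterator[Any]:
    """Shape of a function for rdd.mapPartitions on a wholeTextFiles RDD.

    Each element of the partition is a (filename, content) pair; the content
    is parsed as one JSON document.
    """
    for _filename, content in partition:
        yield json.loads(content)


def to_json_lines(objects: Iterable[Any]) -> str:
    """Flatten each object onto a single line (the JSON Lines layout)."""
    # json.dumps with default settings emits no newlines, so each record
    # occupies exactly one line.
    return "\n".join(json.dumps(obj) for obj in objects)


# Plain-Python stand-in for one partition of sc.wholeTextFiles(...).
partition = [
    ("a.json", '{\n  "id": 1,\n  "name": "A green door"\n}'),
    ("b.json", '{\n  "id": 2,\n  "name": "A red door"\n}'),
]

parsed = list(parse_files(iter(partition)))

# In JSON Lines form, a line-by-line reader (like textFile + map(json.loads))
# works, because every line is a complete JSON document.
jsonl = to_json_lines(parsed)
reparsed = [json.loads(line) for line in jsonl.splitlines()]

print(jsonl)
```

In Spark the call would look roughly like sc.wholeTextFiles(path).mapPartitions(parse_files). Because parse_files only loops over its input, .values().map(json.loads) does the same work; mapPartitions mainly pays off when there is per-partition setup to share.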


Raúl Reguillo Carmona over 6 years: Possible duplicate of how to read json with schema in spark dataframes/spark sql


eliasah over 6 years: This is actually not a dupe.


Yash over 6 years: Thanks for your answer. It looks like a fair approach. However, I was using Spark 1.6, which does not have the spark (SparkSession) object. What worked for me was: rddj = hiveContext.jsonFile("input file path").


Femn Dharamshi over 2 years: Does spark.read.json load data into an RDD or into a DataFrame? I have a huge JSON file, roughly 1 TB, so it needs to be loaded into an RDD.


Adarsh Kumar almost 2 years: @FemnDharamshi It loads into a DataFrame.