Reading only specific messages from a Kafka topic

In Kafka it's not possible to do something like that. The consumer consumes messages one after the other, starting from the latest committed offset (or from the beginning, or from a specific offset it seeks to). Depending on your use case, you could use a different flow. The message describing the work to do goes into one topic. The application that processes it then writes the result (completed or failed) to one of two different topics, so all completed messages are kept separate from failed ones. Another way is to use a Kafka Streams application to do the filtering. Keep in mind that this is just syntactic sugar: the Streams application still reads all the messages, and only makes filtering them easier.
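Whatever the approach, a plain consumer still receives every message, and the filtering happens in your own code. Below is a minimal sketch of that client-side filter for the kafka-python setup in the question. `completed_only` is a hypothetical helper. It accepts any iterable of records with a `.value` attribute holding raw JSON bytes. That is what iterating a kafka-python `KafkaConsumer` yields when no `value_deserializer` is set.

```python
import json
from typing import Any, Dict, Iterable, Iterator


def completed_only(records: Iterable[Any]) -> Iterator[Dict[str, Any]]:
    """Yield decoded messages whose flow_status is "completed".

    `records` is any iterable of objects with a `.value` attribute holding raw
    JSON bytes, e.g. the ConsumerRecords yielded by a kafka-python KafkaConsumer
    created without a value_deserializer.
    """
    for record in records:
        # Every message is still fetched from the broker and decoded;
        # the filtering happens only here, on the client.
        message = json.loads(record.value)
        if message.get("flow_status") == "completed":
            yield message


# Usage with kafka-python (requires a running broker; topic name is hypothetical):
# from kafka import KafkaConsumer
# consumer = KafkaConsumer("flows", bootstrap_servers="localhost:9092")
# for msg in completed_only(consumer):
#     print(msg)
```

This does not save any network or broker work; it only hides the unwanted messages from the rest of your application.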

Prabhanj

Updated on June 04, 2022

-

Prabhanj almost 2 years

Scenario:

I am writing JSON object data into a Kafka topic. While reading, I want to read only a specific set of messages, based on a value present in the message. I am using the kafka-python library.

Sample messages:

{"flow_status": "completed", "value": 1, "active": "yes"}
{"flow_status": "failure", "value": 2, "active": "yes"}

Here I want to read only the messages whose flow_status is "completed".

-

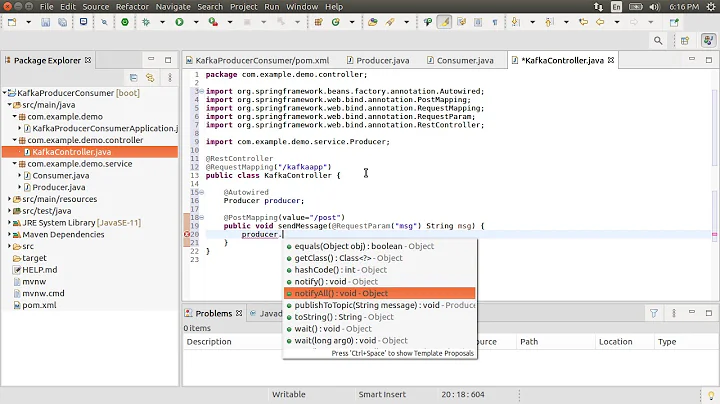

Sivaram Rasathurai about 2 years

I have created a Kafka consumer that can do that with the help of Spring Kafka. You may get some ideas from this blog: rcvaram.medium.com/…

-

Prabhanj about 5 years

So I can have 3 topics: 1 for the whole log, 1 for completed status and 1 for failure status. The job will write to topic 1, and then the data will be filtered by status into the other topics.
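The three-topic flow described here could be sketched as a small router that reads the whole-log topic and forwards each raw message to the topic for its status. The topic names in `TOPIC_BY_STATUS` and the helper `route_by_status` are hypothetical. The `send` function is injected. With kafka-python you could pass a `KafkaProducer`'s `send` method, created without a `value_serializer` because the original bytes are forwarded unchanged.

```python
import json
from typing import Any, Callable, Iterable

# Hypothetical destination topics, one per status.
TOPIC_BY_STATUS = {"completed": "flows-completed", "failure": "flows-failure"}


def route_by_status(records: Iterable[Any], send: Callable[[str, bytes], Any]) -> int:
    """Forward each raw JSON record to the topic for its flow_status.

    Records with an unknown or missing status are skipped.
    Returns the number of records forwarded.
    """
    forwarded = 0
    for record in records:
        status = json.loads(record.value).get("flow_status")
        topic = TOPIC_BY_STATUS.get(status)
        if topic is None:
            continue
        # Forward the original bytes so downstream consumers see the same payload.
        send(topic, record.value)
        forwarded += 1
    return forwarded


# Usage with kafka-python (requires a running broker; names are hypothetical):
# from kafka import KafkaConsumer, KafkaProducer
# producer = KafkaProducer(bootstrap_servers="localhost:9092")
# consumer = KafkaConsumer("flows-all", bootstrap_servers="localhost:9092",
#                          consumer_timeout_ms=10000)  # stop after 10 s idle
# route_by_status(consumer, producer.send)
# producer.flush()
```

Each consumer of `flows-completed` or `flows-failure` then receives only the messages it cares about, at the cost of storing every message twice.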

-

ppatierno about 5 years

Exactly. In this use case the status is effectively the kind of message, and each kind deserves its own topic (one for completed and one for failure).

-

Prabhanj about 5 years

Is it a good approach to have a single topic with two partitions (one for completed, one for failure)? While sending, I would keep logic in the producer to send data to the respective partition. At the consumer end, I would create separate consumer groups: one group to read from the failed partition and the other to read from the completed partition.
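Below is a rough sketch of the producer-side routing described in this comment. kafka-python's `KafkaProducer` accepts a `partitioner` callable, which it calls as `partitioner(key_bytes, all_partitions, available_partitions)` after key serialization. The example makes two hypothetical assumptions: the producer uses the status as the message key, and the topic has exactly two partitions. `status_partitioner`, `make_producer` and the topic name `flows` are illustrative names, not part of any library.

```python
import json


def status_partitioner(key_bytes, all_partitions, available_partitions):
    """Pick a partition from the message key.

    Assumes the key is the flow status (b"completed" or b"failure") and the
    topic has exactly two partitions: the lowest partition id gets
    "completed", the next one gets everything else.
    """
    ordered = sorted(all_partitions)
    if key_bytes == b"completed":
        return ordered[0]
    return ordered[1]


def make_producer(bootstrap_servers="localhost:9092"):
    """Build a kafka-python producer that routes by status (hypothetical helper)."""
    # Imported here so status_partitioner can be used without kafka-python installed.
    from kafka import KafkaProducer

    return KafkaProducer(
        bootstrap_servers=bootstrap_servers,
        key_serializer=str.encode,
        value_serializer=lambda v: json.dumps(v).encode("utf-8"),
        partitioner=status_partitioner,
    )


# Usage (requires a running broker):
# producer = make_producer()
# msg = {"flow_status": "completed", "value": 1, "active": "yes"}
# producer.send("flows", key=msg["flow_status"], value=msg)
# producer.flush()
```

kafka-python's `send()` also takes an explicit `partition=` argument, so for a fixed two-way split you could skip the custom partitioner and pass the partition number directly.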

-

ppatierno about 5 years

The producer side could work, yes, but you need to implement a custom partitioner to do that. On the consumer side it's quite the opposite: the two consumers need to be in the same consumer group so that each is assigned one partition. If they are in different consumer groups, each will get all messages from both partitions. Either way it doesn't work well. If one consumer crashes, the other is assigned its partition as well, and then receives both completed and failed messages. You could avoid consumer groups and use direct partition assignment instead.
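Below is a minimal sketch of the direct partition assignment mentioned here, using kafka-python's `KafkaConsumer.assign()` with a `TopicPartition`. It assumes a hypothetical layout in which partition 0 holds completed messages and partition 1 holds failed ones. The topic name and helper names are illustrative. The consumer has no `group_id` and never calls `subscribe()`, so no group rebalance can hand it the other partition if a peer crashes. The trade-off is that kafka-python disables offset commits when `group_id` is None. This sketch therefore falls back to `auto_offset_reset="earliest"` and re-reads the partition from the beginning on each start.

```python
import json

# Hypothetical layout: must match how the producer routes messages.
STATUS_PARTITION = {"completed": 0, "failure": 1}


def partition_for(status: str) -> int:
    """Return the partition id that holds messages with the given status."""
    return STATUS_PARTITION[status]


def consume_status(topic: str, status: str, bootstrap_servers: str = "localhost:9092"):
    """Yield decoded messages from the single partition holding `status`."""
    # Imported lazily so partition_for works without kafka-python installed.
    from kafka import KafkaConsumer, TopicPartition

    # No group_id and no subscribe(): this consumer only ever reads the
    # partition it assigns to itself below.
    consumer = KafkaConsumer(
        bootstrap_servers=bootstrap_servers,
        group_id=None,
        enable_auto_commit=False,
        auto_offset_reset="earliest",
        value_deserializer=lambda b: json.loads(b.decode("utf-8")),
    )
    consumer.assign([TopicPartition(topic, partition_for(status))])
    for record in consumer:
        yield record.value


# Usage (requires a running broker; topic name is hypothetical):
# for msg in consume_status("flows", "completed"):
#     print(msg)
```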