Redirecting stdout with find -exec and without creating new shell

Solution 1

A simple solution would be to put a wrapper around your script:

#!/bin/sh

myscript "$1" > "$1.stdout"

Call it myscript2 and invoke it with find:

find . -type f -exec myscript2 {} \;

Note that although most implementations of find allow you to do what you have done, technically the behavior of find is unspecified if you use {} more than once in the argument list of -exec.
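One way to stay inside the specified behavior without a separate wrapper file is to pass {} exactly once and let a small inline sh script reuse it as "$1". This is only a minimal sketch: cat stands in for myscript (as in the question), and the scratch directory and file names are made up for the demonstration.

```shell
#!/bin/sh
# Scratch directory with two sample files (names are illustrative only).
demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT
printf 'alpha\n' > "$demo_dir/a.txt"
printf 'beta\n'  > "$demo_dir/b c.txt"   # space in the name on purpose

# {} appears exactly once; the inner sh receives the path as "$1".
# `cat` stands in for myscript. Existing *.stdout files are skipped so
# that output files are never fed back in as input.
find "$demo_dir" -type f ! -name '*.stdout' \
  -exec sh -c 'cat "$1" > "$1.stdout"' sh {} \;

ls "$demo_dir"
```

The trailing sh before {} only fills $0 of the inner shell, so the file name lands in $1.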

Solution 2

You can do it with eval. It may be ugly, but so is having to write a shell script for this. Plus, it's all on one line. For example:

find -type f -exec bash -c "eval md5sum {} > {}.sum " \;
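Because {} is spliced into the string that bash parses, a file name containing shell syntax would be parsed as code. A safer sketch of the same idea keeps the script text fixed and hands the file names over as positional parameters. The scratch directory and the oddly named file below are assumptions made up for the demonstration.

```shell
#!/bin/sh
demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT
cd "$demo_dir" || exit 1

# A file whose name looks like a command substitution.
printf 'data\n' > '$(touch pwned)'

# The script text is constant; file names arrive only as "$@" of the
# inner shell, so they are never parsed as shell code.
find . -type f ! -name '*.sum' -exec sh -c '
  for f; do
    md5sum "$f" > "$f.sum"
  done' _ {} +

# If the name had been parsed as code, a file called "pwned" would exist.
if [ -e pwned ]; then
  echo "name was executed"
else
  echo "name was treated as data"
fi
```

This still starts a shell, but only one per batch of files, and the file name is never evaluated.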

Solution 3

If you export your environment variables, they'll already be present in the child shell. (If you use bash -c instead of sh -c, and your parent shell is itself bash, then you can also export functions in the parent shell and have them usable in the child; see export -f.)
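As a small illustration of the first point, here is a sketch in which a variable exported in the current shell is read by the sh -c child that find starts. LINE_PREFIX, the scratch directory, and the use of sed as a stand-in for myscript are all hypothetical.

```shell
#!/bin/sh
demo_dir=$(mktemp -d)
trap 'rm -rf "$demo_dir"' EXIT
printf 'hello\n' > "$demo_dir/in.txt"

# Hypothetical setting that an init script might have established.
LINE_PREFIX='>> '
export LINE_PREFIX

# The single-quoted script is expanded by the child sh, which sees
# LINE_PREFIX only because it was exported above.
find "$demo_dir" -type f ! -name '*.stdout' \
  -exec sh -c 'for f; do sed "s/^/$LINE_PREFIX/" "$f" > "$f.stdout"; done' _ {} +

cat "$demo_dir/in.txt.stdout"
```

Without the export line, the child shell would see an empty LINE_PREFIX and the output would have no prefix.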

Moreover, by using -exec ... {} +, you can limit the number of shells to the smallest possible number needed to pass all arguments on the command line:

set -a # turn on automatic export of all variables

source initscript1

source initscript2

# pass as many filenames as possible to each sh -c, iterating over them directly

find * -name '*.stdout' -prune -o -type f \
  -exec sh -c 'for arg; do myscript "$arg" > "${arg}.stdout"; done' _ {} +

Alternately, you can just perform the execution in your current shell directly:

while IFS= read -r -d '' filename; do

  myscript "$filename" >"${filename}.stdout"

done < <(find * -name '*.stdout' -prune -o -type f -print0)

See UsingFind for a discussion of safely and correctly performing bulk actions through find, and BashFAQ #24 for a discussion of using process substitution (the <(...) syntax) to ensure that operations are performed in the parent shell.

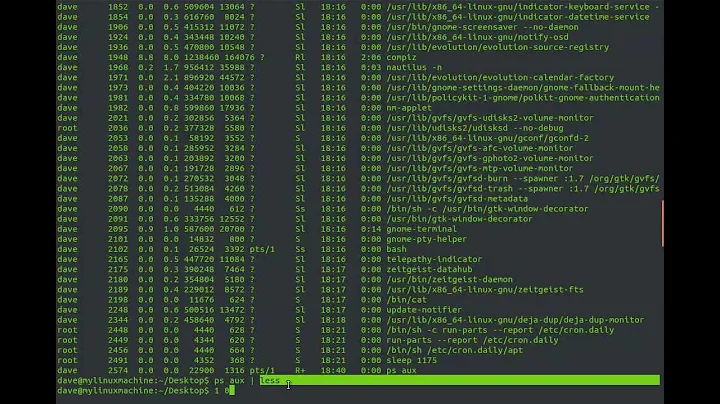

Related videos on Youtube

jserras

Updated on September 19, 2022

Comments

-

jserras over 1 year

I have one script that only writes data to stdout. I need to run it for multiple files and generate a different output file for each input file, and I was wondering how to use find -exec for that. So I basically tried several variants of this (I replaced the script by cat just for testability purposes):

find * -type f -exec cat "{}" > "{}.stdout" \;

but could not make it work, since all the data was being written to a file literally named {}.stdout.

Eventually, I could make it work with:

find * -type f -exec sh -c "cat {} > {}.stdout" \;

But while this latest form works well with cat, my script requires environment variables loaded through several initialization scripts, thus I end up with:

find * -type f -exec sh -c "initscript1; initscript2; ...; myscript {} > {}.stdout" \;

Which seems a waste because I have everything already initialized in my current shell.

Is there a better way of doing this with find? Other one-liners are welcome.

-

William Pursell about 11 years

If they are initialized in your original shell, but not set in the subshell, then they are not environment variables. Write set -a at the top of your initscripts.

-

William Pursell

Is the last example you give correct, or is the command actually:

find . -type f -exec sh -c ". initscript1; . initscript2; ...; myscript {} > {}.stdout" \;

(That is, instead of simply invoking initscript1, are you actually calling . initscript1, i.e. sourcing the file with the dot command?)

-


jserras about 11 years

But the find manual, somewhere under -exec, says: "The string '{}' is replaced by the current file name being processed everywhere it occurs in the arguments to the command, not just in arguments where it is alone, as in some versions of find." (link) Still, thanks for the workaround.

-

William Pursell about 11 years

The manual for your particular implementation of find states that it works, but the standard reads: "If more than one argument containing only the two characters "{}" is present, the behavior is unspecified." It's not a big deal, but it is something that can burn you (at which point it suddenly becomes a big deal!)

-

jilles about 11 years

A more important disadvantage is that things like

-exec sh -c "myscript {} > {}.stdout" \;

can cause arbitrary code execution in the face of hostile file names. It is more secure to do

-exec sh -c 'myscript "$1" > "$1.stdout"' sh {} \;

-

tripleee about 7 years

The bash -c is the beef here; the eval isn't actually doing anything useful. But you are not avoiding the shell.

-

Charles Duffy about 7 years

The eval is actively dangerous here. If you have a file name that contains $(rm -rf $HOME), this is going to be very bad news.

-

William Pursell about 7 years

Using _ as $0 to the invoked sh is a bit obfuscating!

-

Charles Duffy about 7 years

@WilliamPursell, it's a common idiom; I can find links if you like. (_ is also a conventional unused/placeholder value in some other languages, such as Python, but my understanding is that it was common in shell first.)

-

William Pursell about 7 years

I've seen it used in Go and Perl, but never in this setting. I tend to ignore it and set $0 to {}, which is probably a much worse practice!

-

Charles Duffy about 7 years

@tripleee, even without the eval this is still dangerous, because you're running your filenames through bash -c. With the eval, you're evaluating each filename through the shell parser twice; without it, you're evaluating it once. From a security perspective, the only acceptable number of times data is parsed as code is zero.

-

tripleee about 7 years

So a secure rephrase would be

find -type f -exec bash -c 'for f; do md5sum "$f" >"$f.sum"; done' _ {} +

but that won't avoid the shell either, of course (and basically duplicates the existing answer by @CharlesDuffy).