Running shell script in parallel

Solution 1

Check out bash subshells; these can be used to run parts of a script in parallel.

I haven't tested this, but this could be a start:

#!/bin/bash

for i in $(seq 1 1000)

do

( : "placeholder: generate random numbers here, sort, and write to file$i.txt" ) &

if (( $i % 10 == 0 )); then wait; fi # Limit to 10 concurrent subshells.

done

wait
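A runnable version of the loop above might look like the following minimal sketch. The `process_one` function, the `out_dir` temporary directory, the small `total`, and the use of `$RANDOM` as stand-in data are all assumptions for illustration; the real shuffle-and-sort step would go in the function body.

```shell
#!/bin/bash
# Sketch only: process_one is a hypothetical stand-in for the real work.
out_dir=$(mktemp -d)
total=30        # the question uses 1000; kept small here
max_jobs=10     # subshells started per batch

process_one() {
    # Generate 100 random numbers, sort them numerically, write to file<N>.txt
    for n in $(seq 1 100); do echo "$RANDOM"; done | sort -n > "$out_dir/file$1.txt"
}

for i in $(seq 1 "$total"); do
    ( process_one "$i" ) &
    # After every max_jobs launches, wait for the whole batch to finish.
    if (( i % max_jobs == 0 )); then wait; fi
done
wait   # catch any jobs left over from a final partial batch

echo "wrote $(ls "$out_dir" | wc -l | tr -d ' ') files to $out_dir"
```

The final `wait` matters whenever `total` is not a multiple of `max_jobs`, since the last batch would otherwise still be running when the script exits.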

Solution 2

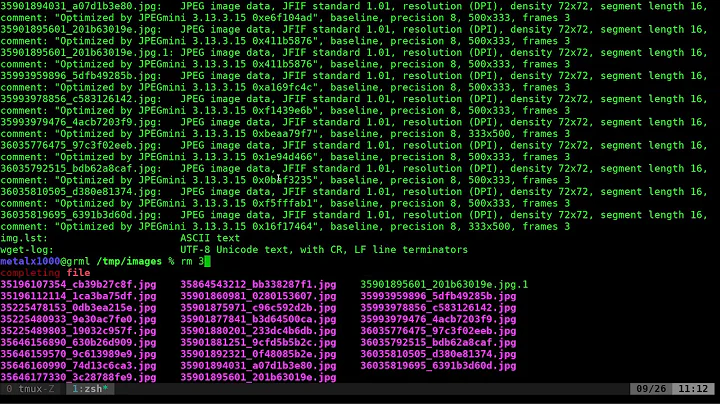

Another very handy way to do this is with GNU parallel, which is well worth installing if you don't already have it; it is invaluable when the tasks don't all take the same amount of time.

seq 1000 | parallel -j 8 --workdir "$PWD" ./myrun {}

will launch ./myrun 1, ./myrun 2, etc., keeping 8 jobs running at a time. It can also take lists of nodes if you want to run on several nodes at once, e.g. in a PBS job; our instructions to our users for how to do that on our system are here.

Updated to add: make sure you're using GNU parallel, not the more limited utility of the same name that comes in the moreutils package (the divergent history of the two is described at gnu.org/software/parallel/history.html).
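Since two different programs can be installed under the name `parallel`, a quick check along these lines may help before relying on GNU-specific options. This is a sketch that assumes GNU parallel names itself on the first line of its `--version` output; the `parallel_kind` variable is made up for the example.

```shell
#!/bin/bash
# Sketch: report which 'parallel' (if any) is first on PATH.
# Assumption: GNU parallel identifies itself in its --version output.
if ! command -v parallel >/dev/null 2>&1; then
    parallel_kind="none"
elif parallel --version </dev/null 2>/dev/null | head -n 1 | grep -q "GNU parallel"; then
    parallel_kind="gnu"
else
    parallel_kind="other"   # e.g. the moreutils implementation
fi
echo "parallel found: $parallel_kind"
```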

Solution 3

To run things in parallel, put '&' at the end of a shell command to run it in the background; wait, called without arguments, then blocks until all background processes have finished. So you could kick off 10 in parallel, wait, then do another ten. You can do this easily with two nested loops.
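The two nested loops could be sketched as follows. The `run_task` function, the `log_file` temporary file, and the batch counts are hypothetical placeholders, not part of the original script.

```shell
#!/bin/bash
# Sketch only: run_task is a hypothetical placeholder for one unit of work.
log_file=$(mktemp)
batches=3       # outer loop: number of batches
batch_size=10   # inner loop: jobs started per batch

run_task() {
    echo "task $1 done" >> "$log_file"
}

for (( b = 0; b < batches; b++ )); do
    for (( j = 1; j <= batch_size; j++ )); do
        run_task $(( b * batch_size + j )) &   # '&' runs the task in the background
    done
    wait   # no arguments: block until every job in this batch has exited
done

echo "completed $(wc -l < "$log_file" | tr -d ' ') tasks"
```

Each batch runs only as fast as its slowest task, so this works best when the tasks take roughly the same time.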

Solution 4

There is a whole list of programs that can run jobs in parallel from a shell, including comparisons between them, in the documentation for GNU parallel. There are many, many solutions out there. More good news: they are probably quite efficient at scheduling jobs so that all the cores/processors are kept busy at all times.

Solution 5

There is a simple, portable program that does just this for you: PPSS. PPSS automatically schedules jobs for you by checking how many cores are available and launching a new job each time one finishes.
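The scheduling idea described here (start a new job as soon as any running one finishes) can be approximated in plain bash. The following is a sketch of that idea only, not PPSS itself; it assumes bash 4.3 or later for `wait -n`, and `work`, `result_dir`, and the job counts are invented for the example.

```shell
#!/bin/bash
# Sketch only, not PPSS: keep up to max_jobs tasks running, starting a new
# one as soon as any finishes. Requires bash 4.3+ for 'wait -n'.
result_dir=$(mktemp -d)
total=20
max_jobs=$(getconf _NPROCESSORS_ONLN 2>/dev/null || echo 4)  # core count, or 4 if unknown

work() {
    sleep "0.$(( $1 % 3 ))"     # simulate jobs of uneven length
    echo "$1" > "$result_dir/job$1.out"
}

for i in $(seq 1 "$total"); do
    # If the pool is full, wait for any single job to exit before launching.
    while (( $(jobs -rp | wc -l) >= max_jobs )); do
        wait -n
    done
    work "$i" &
done
wait   # wait for the remaining jobs

echo "finished $(ls "$result_dir" | wc -l | tr -d ' ') jobs"
```

Unlike fixed batches, this keeps the pool full even when some jobs take much longer than others.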


Tony

Updated on January 05, 2020

Comments

-

Tony over 4 years

I have a shell script which

- shuffles a large text file (6 million rows and 6 columns)

- sorts the file based on the first column

- outputs 1000 files

So the pseudocode looks like this

file1.sh

#!/bin/bash

for i in $(seq 1 1000)

do

Generating random numbers here , sorting and outputting to file$i.txt

done

Is there a way to run this shell script in parallel to make full use of multi-core CPUs?

At the moment, ./file1.sh executes in sequence 1 to 1000 runs and it is very slow.

Thanks for your help.

-

Noufal Ibrahim about 13 years

If you find yourself needing to do anything non-trivial (e.g. multiprocessing etc.) in a shell script, it's time to rewrite it in a proper programming language.

-

Tony Delroy about 13 years

That will kick off all the thousand tasks in parallel, which might lead to too much swapping / contention for optimal work throughput, but it's certainly a reasonable and easy way to get started.

-

Anders Lindahl about 13 years

Good point! The simplest solution would be to have an outer loop that limits the number of started subshells and `wait` between them.

-

Tony Delroy about 13 years

@Anders: or just slip an "if (( $i % 10 == 0 )); then wait; fi" before the "done" in your loop above...

-

Tony about 13 years

@Anders Thanks. It works quite well together with Tony's suggestions.

-

Anders Lindahl about 13 years

I've added Tony's suggestion to the answer above.

-

Tony Delroy about 13 years

Cool... +1 from me too then :-)

-

Anders Lindahl about 13 years

@Tony: I think it makes sense to leave it in. `wait` with no subshells running seems to do nothing, and if we choose a number of concurrent subshells that isn't a factor of the number of tasks to run, we might get active subshells still running when the loop ends.

-

Tony about 13 years

@Anders: Thanks for the clarification.

-

Jonathan Dursi about 13 years

For the PBS question and across nodes, see stackoverflow.com/questions/5453427/… .

-

Tony about 13 years

@Jonathan: Thanks for the pointer. I will ask my system administrator to install GNU parallel. It seems a useful utility to have on the system. Actually I was going to post the question on PBS, but you have already answered it. Cheers

-

Ole Tange about 13 years

This solution works best if all the jobs take exactly the same time. If the jobs do not take the same time you will waste CPU time waiting for one of the long jobs to finish. In other words: It will not keep 10 jobs running at the same time at all times.

-

Ole Tange about 13 years

If your sysadmin will not install it, it is easy to install yourself: simply copy the perl script 'parallel' to a dir in your path and you are done. No compilation or installation of libraries needed.

-

Tony about 13 years

@Ole: Thanks for the tip. My sysadmin has agreed to install it on the system.

-

Tony about 13 years

@Jonathan: When you refer to ./myrun, is it the modified script with "&" and "wait", or without them, that is, the original shell script? Cheers

-

Jonathan Dursi about 13 years

It's just the unmodified script. parallel does the bookkeeping work of spawning the jobs off and waiting until they're all done. Having said that, test and make sure everything works on small numbers of tasks before running 1000 at once... (I'm sure you would have done that, as it's obvious, but you'd be amazed how many people don't and go straight for the full-scale run as their "test".)

-

t0mm13b over 11 years

Do you speak English? Please refrain from text speak (u, coz, ...). You certainly typed out other words fine but not the little words; clear laziness, obviously!

-

Roman Newaza almost 11 years

@Jonathan Dursi, strange, but I have no -W option in my parallel version. I have installed it from the `moreutils` package.

-


Jonathan Dursi almost 11 years

@RomanNewaza, it looks like in recent versions `-W` is gone and you have to use `--workdir`; I'll update my answer accordingly, thanks for pointing this out.

-

Roman Newaza almost 11 years

In my version of `parallel` I have `[-j maxjobs] [-l maxload] [-i] [-n]` in the man `OPTIONS` section. `[-c]` is present only in the `EXAMPLES`. Seems like its man page is lousy.

-

Jonathan Dursi almost 11 years

It turns out the moreutils package includes not GNU parallel but Tollef's; the history of the evolution of the tools is at gnu.org/software/parallel/history.html

-

jrw32982 almost 8 years

Plus the link is broken.

-

Tony Delroy about 6 years

@d-b `wait` waits for background processes to finish, not threads. For example, `for FILE in huge.txt massive.log enormous.xml; do scp "$FILE" someuser@somehost:/tmp/ & done; wait; echo "finished"` would run three `scp` (secure copy) processes to copy three files in parallel to a remote host's `/tmp` directory, and only output `"finished"` after all three copies were completed.

-

Lewis Chan almost 6 years

It is good if different subshells handle different tasks. What if they operate on a single file? Task allocation may be needed, but how to do that decently?

-

Daniel Alder about 5 years

Installing on Debian/Ubuntu: `apt install parallel`