skip some rows in read.csv in R

14,020

Solution 1

It is possible using sqldf package, using read.csv.sql

Lets say the contents of sample.csv looks like this:

id,name,age

1,"a",23

2,"b",24

3,"c",23

Now to read only rows where age=23:

require(sqldf)

df <- read.csv.sql("sample.csv", "select * from file where age=23")

df

id name age

1 1 "a" 23

2 3 "c" 23

It is possible to select necessary columns:

df <- read.csv.sql("sample.csv", "select id, name from file where age=23")

df

id name

1 1 "a"

2 3 "c"

Solution 2

It is better to read all and subset later like suggested in the comment :

csvData [!csvData$ticker %in% c('ADCT','ABT'),]

EDIT

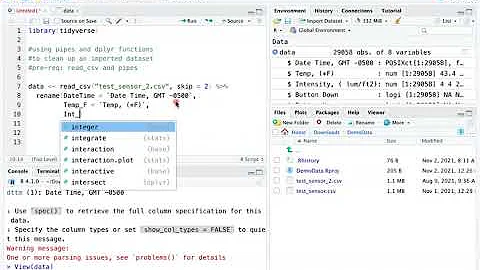

You can use fread from data.table package for more efficient method to read your file.

library(read.table)

fread(file="pf.csv")

Related videos on Youtube

Comments

-

Dinoop Nair over 1 year

Dinoop Nair over 1 yearI have a csv file which I read using the following function:

csvData <- read.csv(file="pf.csv", colClasses=c(NA, NA,"NULL",NA,"NULL",NA,"NULL","NULL","NULL")) dimnames(csvData)[[2]]<- c("portfolio", "date", "ticker", "quantity")It reads all lines from that file. But i want to skip some rows from reading. The row should not read if the value of the

ticker-column is:ABTorADCT. Is it possible?sample of my csv file is as follows:

RUS1000,01/29/1999,21st Centy Ins Group,TW.Z,90130N10,72096,1527.534,0.01,21.188 RUS1000,01/29/1999,3com Corp,COMS,88553510,358764,16861.908,0.16,47.000 RUS1000,01/29/1999,3m Co,MMM,88579Y10,401346,31154.482,0.29,77.625 RUS1000,01/29/1999,A D C Telecommunicat,ADCT,00088630,135114,5379.226,0.05,39.813 RUS1000,01/29/1999,Abbott Labs,ABT,00282410,1517621,70474.523,0.66,46.438 RUS1000,02/26/1999,21st Centy Ins Group,TW.Z,90130N10,72096,1378.836,0.01,19.125 RUS1000,02/26/1999,3com Corp,COMS,88553510,358764,11278.644,0.11,31.438 RUS1000,02/26/1999,3m Co,MMM,88579Y10,402146,29783.938,0.29,74.063-

Roman Luštrik about 11 yearsUse

Roman Luštrik about 11 yearsUsereadLinesand use regular expressions to filter out undesirable rows. -

topchef about 11 yearsbefore using R run:

topchef about 11 yearsbefore using R run:sed '/(,ADCT,|,ABT,)/d' > newfile -

A5C1D2H2I1M1N2O1R2T1Why not read in the whole file and subset later?

A5C1D2H2I1M1N2O1R2T1Why not read in the whole file and subset later?

-

-

Roman Luštrik about 11 yearsAssuming file was too big to read into memory, what would be OP's choices then?

Roman Luštrik about 11 yearsAssuming file was too big to read into memory, what would be OP's choices then? -

Dinoop Nair about 11 yearsactually the file having 200mb+ and majority of the data contains these values.So i think it is not efficient.

Dinoop Nair about 11 yearsactually the file having 200mb+ and majority of the data contains these values.So i think it is not efficient. -

CHP about 11 yearsCan you elaborate the answer? Then it will be more likely to help your answer gain attention.

-

Nishanth about 11 yearsI have updated my answer with a simple, self-contained example.

Nishanth about 11 yearsI have updated my answer with a simple, self-contained example. -

G. Grothendieck about 8 years

read.csv.sqlis based on SQLite facilities, not onread.tableso one can't really expect that it works exactly the same; however, thefilter=andsql=arguments ofread.csv.sqlcan be used for arbitrary preprocessing so depending on what your data looks like arbitrary missing value situations can usually be handled. For example,filter = "sed -e s/NULL//g"would remove all occurrences of the string NULL. (On Windows it will assume you have Rtools installed and get sed from there.) -

Chris about 8 yearsI tried swapping NULLs with empty strings but it wasn't interpreted as NA. Your trick AFAIK would not make it treat the values as NA.

Chris about 8 yearsI tried swapping NULLs with empty strings but it wasn't interpreted as NA. Your trick AFAIK would not make it treat the values as NA. -

G. Grothendieck about 8 yearsIf its a numeric field, say, then they will be treated as 0 and you can replace them on the R end. Or replace NULL with -99, say, and replace them on the R end.

-

Chris about 8 yearsSo a bit of a hack. I'd have to choose a magic numeric and hope it doesn't exist. 0, -1 and -99 are far too common. My function is speedy especially with larger values of n

Chris about 8 yearsSo a bit of a hack. I'd have to choose a magic numeric and hope it doesn't exist. 0, -1 and -99 are far too common. My function is speedy especially with larger values of n