Spark SQL to explode array of structure

13,022

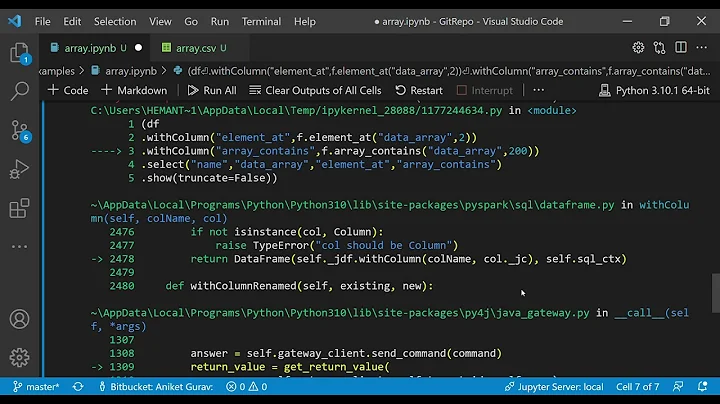

Solution 1

In addition to Paul Leclercq's answer, here is what works: explode the array, then expand the resulting struct into one column per field.

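import org.apache.spark.sql.functions.explode

import spark.implicits._ // enables the $"..." column syntax; assumes the SparkSession is named spark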

df.select(explode($"control").as("control")).select("control.*")
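A minimal, self-contained sketch of the approach above, assuming Spark 2.2 or later and a local `SparkSession`. The object name `ExplodeControlSketch`, the helper `flattenControl`, and the inline JSON string are illustrative and not part of the original answer. JSON schema inference generally orders struct fields alphabetically, so the sketch selects the columns explicitly to match the order asked for in the question.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, explode}

object ExplodeControlSketch {
  // Hypothetical helper: one row per array element, one column per struct field.
  def flattenControl(df: DataFrame): DataFrame =
    df.select(explode(col("control")).as("control"))
      .select("control.*")

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("explode-control-sketch")
      .getOrCreate()
    import spark.implicits._

    // The input document from the question, as a single JSON line.
    val json =
      """{"control":[{"controlIndex":0,"customerValue":100.0,"guid":"abcd","defaultValue":50.0,"type":"discrete"},{"controlIndex":1,"customerValue":50.0,"guid":"pqrs","defaultValue":50.0,"type":"discrete"}]}"""
    val df = spark.read.json(Seq(json).toDS())

    // Pick the columns explicitly so the order does not depend on schema inference.
    flattenControl(df)
      .select("controlIndex", "customerValue", "guid", "defaultValue", "type")
      .show()

    spark.stop()
  }
}
```

Using `col("control")` instead of `$"control"` keeps the helper independent of `spark.implicits._`, which is only needed here for `toDS()`.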

Solution 2

`explode` creates a new row for each element in the given array or map column.

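import org.apache.spark.sql.functions.explode

import spark.implicits._ // enables the $"..." column syntax; assumes the SparkSession is named spark

df.select(explode($"control"))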
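To illustrate what `explode` alone returns, here is a small sketch on made-up data. The object name `ExplodeShapeSketch`, the helper `explodeColumn`, and the columns `xs` and `m` are hypothetical. It shows that an array column explodes into a single column (named `col` by default) and a map column into `key` and `value` columns. For an array of structs such as `control`, that single column holds the whole struct, which is why Solution 1 follows up with `select("control.*")`.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, explode}

object ExplodeShapeSketch {
  // Hypothetical helper: explode one column and keep nothing else.
  def explodeColumn(df: DataFrame, name: String): DataFrame =
    df.select(explode(col(name)))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("explode-shape-sketch")
      .getOrCreate()
    import spark.implicits._

    // One input row holding an array column and a map column.
    val df = Seq((Seq(10, 20, 30), Map("a" -> 1, "b" -> 2))).toDF("xs", "m")

    // Array: one output row per element, in a single column named "col".
    explodeColumn(df, "xs").printSchema()

    // Map: one output row per entry, in two columns named "key" and "value".
    explodeColumn(df, "m").printSchema()

    spark.stop()
  }
}
```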

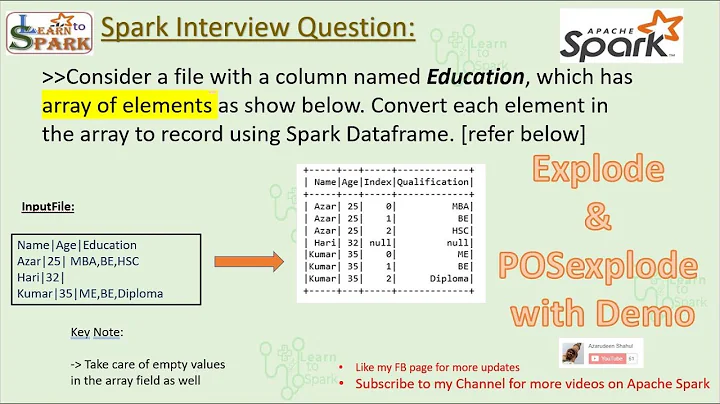

Related videos on Youtube

Author: abiswas

Updated on June 04, 2022

Comments


abiswas almost 2 years

I have the JSON structure below, which I am trying to convert into a table with each field of the struct as a column, as shown below, using Spark SQL. `explode(control)` is not working. Can someone please suggest a way to do this?

Input:

{
  "control": [
    { "controlIndex": 0, "customerValue": 100.0, "guid": "abcd", "defaultValue": 50.0, "type": "discrete" },
    { "controlIndex": 1, "customerValue": 50.0, "guid": "pqrs", "defaultValue": 50.0, "type": "discrete" }
  ]
}

Desired output:

| controlIndex | customerValue | guid | defaultValue | type |
|---|---|---|---|---|
| 0 | 100.0 | abcd | 50.0 | discrete |
| 1 | 50.0 | pqrs | 50.0 | discrete |

mrsrinivas over 6 years: Can you add the code you tried?


abiswas over 6 years: I tried this: `select explode(control) from myview`. It produces a single column and puts each struct inside it.
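For the pure SQL route mentioned in this comment, here is a hedged sketch of two queries that should expand the structs into columns. It assumes `myview` is a temporary view over the JSON from the question, and the object name `ExplodeSqlSketch` and the aliases `t` and `c` are illustrative. `inline` is a Spark SQL generator that turns an array of structs into rows and columns in one step; the `LATERAL VIEW` form explodes first and then expands the struct with `c.*`.

```scala
import org.apache.spark.sql.SparkSession

object ExplodeSqlSketch {
  // One step: inline() explodes an array of structs into one column per field.
  val inlineQuery: String = "SELECT inline(control) FROM myview"

  // Two steps: explode into a struct column c, then expand its fields with c.*.
  val lateralViewQuery: String =
    "SELECT c.* FROM myview LATERAL VIEW explode(control) t AS c"

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("explode-sql-sketch")
      .getOrCreate()
    import spark.implicits._

    // Register the input from the question under the view name used in the comment.
    val json =
      """{"control":[{"controlIndex":0,"customerValue":100.0,"guid":"abcd","defaultValue":50.0,"type":"discrete"},{"controlIndex":1,"customerValue":50.0,"guid":"pqrs","defaultValue":50.0,"type":"discrete"}]}"""
    spark.read.json(Seq(json).toDS()).createOrReplaceTempView("myview")

    spark.sql(inlineQuery).show()
    spark.sql(lateralViewQuery).show()

    spark.stop()
  }
}
```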
