Use WebRTC/GetUserMedia stream as input for FFMPEG

Solution 1

This is quite a widespread requirement these days (2020), so I will give you my take on the problem:

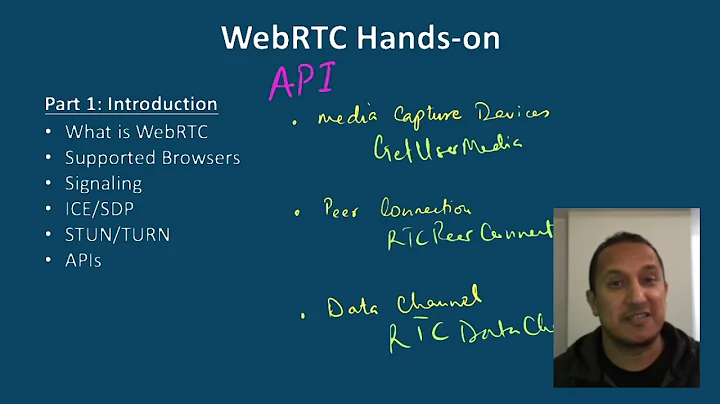

First of all, you are running into a perfect example of dramatic incompatibility (in codecs and streaming protocols) between two different multimedia domains, WebRTC and ffmpeg. To make them interoperate, you will need some tricky techniques and third-party software.

ffmpeg by itself cannot support WebRTC because WebRTC is not a completely defined protocol. Its signaling mechanism (exchanging SDPs and ICE candidates) is not specified: it is left to the application to implement. In this very first step of a WebRTC session, the application has to connect to a signaling server over some protocol (usually WebSocket or HTTP requests). So, in order to support WebRTC (RTCPeerConnection), ffmpeg would need to interoperate with some third-party signaling server. The alternative would be to implement a signaling server inside ffmpeg itself, but then ffmpeg would need to listen on some port, and that port would need to be open in firewalls (which is what a signaling server does). That is not really a practical idea for ffmpeg.
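To make the signaling point concrete: signaling is just an application-defined exchange of SDP offers/answers and ICE candidates between two peers. The Python sketch below is a hypothetical in-memory relay (the `SignalingRelay` class, the peer ids and the message shape are all assumptions, not any real server's API); a real deployment would put the same logic behind a WebSocket or HTTP endpoint, which is exactly the listening port ffmpeg would otherwise have to open.

```python
import json
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class SignalingRelay:
    """Hypothetical in-memory stand-in for an application signaling server.

    WebRTC leaves the transport for offers, answers and ICE candidates to
    the application; a real server would expose this over WebSocket/HTTP.
    """

    def __init__(self) -> None:
        # One FIFO mailbox of JSON strings per peer id.
        self._mailboxes: Dict[str, Deque[str]] = defaultdict(deque)

    def send(self, to_peer: str, message: dict) -> None:
        # Serialize to JSON, as the message would travel on the wire.
        self._mailboxes[to_peer].append(json.dumps(message))

    def receive(self, peer: str) -> Optional[dict]:
        # Return the oldest pending message for this peer, or None.
        box = self._mailboxes[peer]
        return json.loads(box.popleft()) if box else None


if __name__ == "__main__":
    relay = SignalingRelay()
    # Browser -> receiver: SDP offer and one trickled ICE candidate
    # (the SDP and candidate strings are elided placeholders).
    relay.send("receiver", {"type": "offer", "sdp": "v=0 ..."})
    relay.send("receiver", {"type": "candidate", "candidate": "candidate:..."})
    # Receiver -> browser: SDP answer.
    relay.send("browser", {"type": "answer", "sdp": "v=0 ..."})

    print(relay.receive("receiver")["type"])  # offer
    print(relay.receive("receiver")["type"])  # candidate
    print(relay.receive("browser")["type"])   # answer
```

Nothing in WebRTC mandates this message format; any two applications that agree on one can exchange the same information.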


Therefore, the practical solution is for ffmpeg to receive the stream from a third-party WebRTC gateway/server: your webpage publishes via WebRTC to that gateway/server, and ffmpeg then pulls a stream from it.

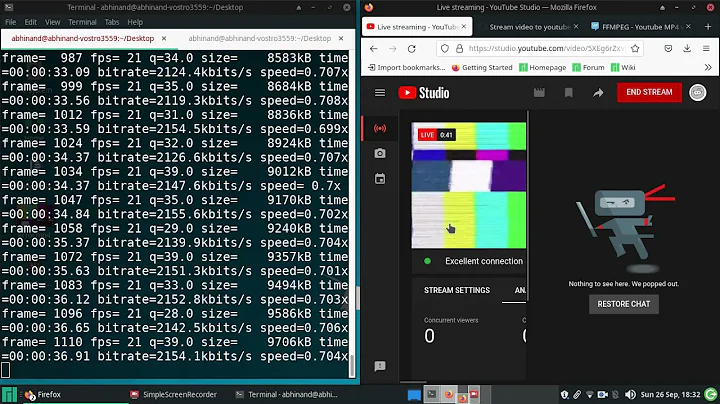

a. If your WebRTC webpage encodes H264 video + Opus audio, your life is relatively easy. You can install Wowza or Unreal Media Server and publish your stream via WebRTC to one of these servers. Then start MPEG2-TS broadcasting of that stream in Wowza/Unreal, and receive that MPEG2-TS stream with ffmpeg. Mission accomplished: no transcoding or decoding has been done to the stream, just transmuxing (unpacking the media from the RTP packets used in WebRTC and repackaging it into the MPEG2-TS container), which costs very little CPU.
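On the ffmpeg side of option (a), the job is just to pull the gateway's MPEG2-TS output and keep the H264/Opus streams as they are. The Python sketch below builds such a command line; the address `udp://127.0.0.1:5000` and the output file name are placeholder assumptions (use whatever URL your Wowza/Unreal instance actually exposes), and `-c copy` is what keeps this a pure remux with no re-encoding. It also includes a quick sanity check for MPEG-TS data, which consists of 188-byte packets that each start with the sync byte 0x47.

```python
from typing import List

TS_PACKET_SIZE = 188  # every MPEG-TS packet is 188 bytes long
TS_SYNC_BYTE = 0x47   # ...and starts with this sync byte


def build_remux_command(source_url: str, output_path: str) -> List[str]:
    """Build an ffmpeg argv that reads MPEG-TS and copies the streams as-is."""
    return [
        "ffmpeg",
        "-f", "mpegts",    # input format: MPEG-TS from the gateway
        "-i", source_url,
        "-c", "copy",      # no decode/encode: H264 and Opus are only remuxed
        output_path,
    ]


def looks_like_mpegts(data: bytes, packets_to_check: int = 3) -> bool:
    """Return True if the first few 188-byte packets begin with 0x47."""
    if len(data) < TS_PACKET_SIZE * packets_to_check:
        return False
    return all(data[i * TS_PACKET_SIZE] == TS_SYNC_BYTE
               for i in range(packets_to_check))


if __name__ == "__main__":
    # Placeholder URL: substitute the MPEG2-TS endpoint your gateway publishes.
    cmd = build_remux_command("udp://127.0.0.1:5000", "recording.mkv")
    print(" ".join(cmd))
    # To actually start ffmpeg: import subprocess; subprocess.run(cmd, check=True)
```

Matroska is used as the output here only because it holds both H264 and Opus; any container that accepts both codecs would do.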

b. The real "beauty" comes when you need to use VP8/VP9 codecs in your WebRTC publishing webpage. You cannot use the procedure from point (a), because there is no streaming protocol supported by ffmpeg that can carry VP8/VP9-encoded video. The mission can still be accomplished with ffmpeg on Windows, but in a very awkward way, using two DirectShow source filters from Unreal: the WebRTC source filter and the Video Mixer source filter. You cannot use the WebRTC source filter by itself, because ffmpeg cannot receive compressed video from DirectShow source filters (a big deficiency in ffmpeg).

So, configure the Video Mixer source filter to get video from the WebRTC source filter (which, in turn, receives your published stream from Unreal Media Server). The Video Mixer source filter decompresses the stream into RGB24 video and PCM audio, and ffmpeg can then get this content using

`ffmpeg -f dshow -i video="Unreal Video Mixer Source"`
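Because the Video Mixer source filter hands ffmpeg decompressed RGB24 frames, ffmpeg has to encode them again before storing or restreaming them. A hedged Python sketch of that step follows: the device name comes from the answer above, while the output settings (`libx264`, `recording.mp4`) are illustrative assumptions. `-list_devices true -f dshow -i dummy` is ffmpeg's way of printing DirectShow device names on Windows, so you can confirm the exact spelling. The helper also estimates the raw RGB24 data rate, which shows why this decode-then-re-encode path is far more expensive than the transmuxing in point (a).

```python
from typing import List

DEVICE = "Unreal Video Mixer Source"  # DirectShow filter name from the answer


def list_devices_command() -> List[str]:
    # Prints the available DirectShow devices (Windows only);
    # "dummy" is a placeholder input that ffmpeg requires here.
    return ["ffmpeg", "-list_devices", "true", "-f", "dshow", "-i", "dummy"]


def capture_command(device: str, output_path: str) -> List[str]:
    # As an argv list (no shell), the device name needs no extra quotes.
    # dshow delivers decoded RGB24 video, so it is encoded again here;
    # libx264 is an illustrative choice, not a requirement.
    return ["ffmpeg", "-f", "dshow", "-i", f"video={device}",
            "-c:v", "libx264", output_path]


def rgb24_bytes_per_second(width: int, height: int, fps: int) -> int:
    # Uncompressed RGB24 uses 3 bytes per pixel.
    return width * height * 3 * fps


if __name__ == "__main__":
    print(capture_command(DEVICE, "recording.mp4"))
    print(rgb24_bytes_per_second(1280, 720, 30))  # 82944000 bytes/s, about 83 MB/s
```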

Solution 2

ffmpeg doesn't have WebRTC support (yet), but there are a couple of other options that I know of:

- Pion WebRTC (Go), which has an example of saving video to disk

- Amazon Kinesis, which has an open-source, pure-C WebRTC client

- aiortc, a Python implementation of WebRTC

- GStreamer, which has the webrtcbin element
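Libraries like these hand you compressed frames rather than an ffmpeg input, so one simple bridge for VP8 is to write those frames to an IVF file, a minimal container that ffmpeg can read with `-i file.ivf`. The Python sketch below assumes you already have complete, depacketized VP8 frames with timestamps in 90 kHz RTP clock units (extracting them is the WebRTC library's job); the header layout follows the IVF format used by libvpx, and the function name `write_ivf` is hypothetical.

```python
import io
import struct
from typing import BinaryIO, List, Tuple

# IVF file header (32 bytes, little-endian): signature, version, header size,
# FourCC, width, height, time base denominator, time base numerator,
# frame count, unused.
IVF_FILE_HEADER = struct.Struct("<4sHH4sHHIIII")
# Per-frame header (12 bytes): payload size + 64-bit timestamp.
IVF_FRAME_HEADER = struct.Struct("<IQ")


def write_ivf(out: BinaryIO, frames: List[Tuple[int, bytes]],
              width: int, height: int, fourcc: bytes = b"VP80",
              timebase_den: int = 90000, timebase_num: int = 1) -> None:
    """Write (timestamp, frame_bytes) pairs as an IVF file.

    Timestamps are in units of timebase_num/timebase_den seconds; the
    default 1/90000 matches the RTP video clock.
    """
    out.write(IVF_FILE_HEADER.pack(
        b"DKIF", 0, IVF_FILE_HEADER.size, fourcc, width, height,
        timebase_den, timebase_num, len(frames), 0))
    for timestamp, payload in frames:
        out.write(IVF_FRAME_HEADER.pack(len(payload), timestamp))
        out.write(payload)


if __name__ == "__main__":
    buf = io.BytesIO()
    # Placeholder payloads: real data would be encoded VP8 frames,
    # so this particular file would not decode.
    write_ivf(buf, [(0, b"\x00" * 10), (3000, b"\x00" * 8)], 640, 480)
    print(len(buf.getvalue()))  # 32 + (12 + 10) + (12 + 8) = 74
```

A timestamp step of 3000 ticks at 90 kHz corresponds to 30 frames per second.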


ApplowPi

Updated on June 04, 2022


ApplowPi, almost 2 years ago: I'm recording my screen with getUserMedia and getting my video and audio stream. I'm then using WebRTC to send/receive this stream on another device. Is there any way I can use this incoming WebRTC stream as an input for ffmpeg by converting it somehow?

Everything I'm working with is in javascript.

Thanks in advance.


Sean DuBois, about 4 years ago: ffmpeg could support WebRTC easily. It could take an offer on stdin and return an answer on stdout. FFmpeg could provide a W3C-like C API and wouldn't require any signaling integration. I would be careful with the 'WebRTC gateway server' solution: if you don't have congestion control and error correction (NACK, PLI), you aren't going to have a great experience.
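For readers unfamiliar with the feedback mentioned here: PLI (Picture Loss Indication) and generic NACK are small RTCP feedback messages defined in RFC 4585 that a receiver sends so the sender can retransmit lost packets or produce a fresh keyframe. A minimal Python sketch of their wire format follows; the function names are hypothetical, and the SSRC values would come from your RTP session.

```python
import struct
from typing import Iterable, List, Tuple

RTCP_VERSION = 2
PT_RTPFB = 205  # transport-layer feedback (carries generic NACK, FMT=1)
PT_PSFB = 206   # payload-specific feedback (carries PLI, FMT=1)


def _feedback_header(fmt: int, pt: int, fci_words: int) -> bytes:
    # First byte: V=2 (2 bits), P=0 (1 bit), FMT (5 bits).
    first = (RTCP_VERSION << 6) | fmt
    # Length = packet size in 32-bit words minus one:
    # two SSRC words plus the FCI words.
    return struct.pack("!BBH", first, pt, 2 + fci_words)


def build_pli(sender_ssrc: int, media_ssrc: int) -> bytes:
    """Picture Loss Indication: no FCI, 12 bytes in total."""
    return (_feedback_header(1, PT_PSFB, 0)
            + struct.pack("!II", sender_ssrc, media_ssrc))


def nack_fci(lost: Iterable[int]) -> List[Tuple[int, int]]:
    """Pack lost RTP sequence numbers into (PID, BLP) pairs.

    Bit i of BLP marks PID + i + 1 as lost. Plain sorting ignores
    sequence-number wraparound, which only makes the output less compact.
    """
    pairs: List[Tuple[int, int]] = []
    for seq in sorted({s & 0xFFFF for s in lost}):
        if pairs:
            pid, blp = pairs[-1]
            offset = (seq - pid) & 0xFFFF
            if 1 <= offset <= 16:
                pairs[-1] = (pid, blp | (1 << (offset - 1)))
                continue
        pairs.append((seq, 0))
    return pairs


def build_generic_nack(sender_ssrc: int, media_ssrc: int,
                       lost: Iterable[int]) -> bytes:
    fci = nack_fci(lost)
    body = b"".join(struct.pack("!HH", pid, blp) for pid, blp in fci)
    return (_feedback_header(1, PT_RTPFB, len(fci))
            + struct.pack("!II", sender_ssrc, media_ssrc) + body)


if __name__ == "__main__":
    print(nack_fci([100, 101, 105, 117, 118]))  # [(100, 17), (117, 1)]
    print(build_pli(1, 2).hex())
```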


user1390208, about 4 years ago: @SeanDuBois WebRTC gateways/servers are normally based on Google's WebRTC implementation, so they inherently have these features. And, of course, you need to run ffmpeg on the same machine or LAN as the WebRTC gateway, so packet loss or congestion is not an issue.


Sean DuBois, about 4 years ago: AFAIK no open-source MCUs/SFUs use libwebrtc. Maybe some proprietary ones do, but it seems unlikely! Your suggested topology does make sense to me in that case. I was thinking you would want viewer feedback to make it back to the broadcaster, but if you don't want the media server to re-encode, I could see that working!