using pyodbc on linux to insert unicode or utf-8 chars in a nvarchar mssql field

Solution 1

I can remember having this kind of stupid problems using odbc drivers, even if that time it was a java+oracle combination.

The core thing is that odbc driver apparently encodes the query string when sending it to the DB. Even if the field is Unicode, and if you provide Unicode, in some cases it does not seem to matter.

You need to ensure that what is sent by the driver has the same encoding as your Database (not only server, but also database). Otherwise, of course you get funky characters because either the client or the server is mixing things up when encoding/or decoding. Do you have any idea of the charset (codepoint as MS like to say) that your server is using as a default for decoding data?

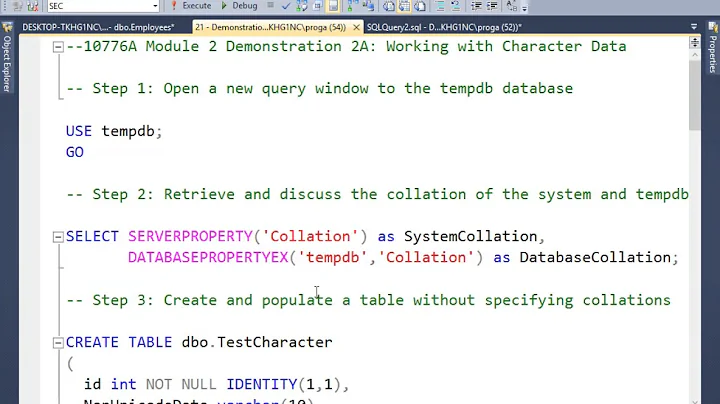

Collation has nothing to do with this problem :)

See that MS page for example. For Unicode fields, collation is used only to define the sort order in the column, not to specify how the data is stored.

If you store your data as Unicode, there is an Unique way to represent it, that's the purpose of Unicode: no need to define a charset that is compatible with all the languages that you are going to use :)

The question here is "what happens when I give data to the server that is not Unicode?". For example:

- When I send an UTF-8 string to the server, how does it understand it?

- When I send an UTF-16 string to the server, how does it understand it?

- When I send a Latin1 string to the server, how does it understand it?

From the server perspective, all these 3 strings are only a stream of bytes. The server cannot guess the encoding in which you encoded them. Which means that you will get troubles if your odbc client ends up sending bytestrings (an encoded string) to the server instead of sending unicode data: if you do so, the server will use a predefined encoding (that was my question: what encoding the server will use? Since it is not guessing, it must be a parameter value), and if the string had been encoded using a different encoding, dzing, data will get corrupted.

It's exactly similar as doing in Python:

uni = u'Hey my name is André'

in_utf8 = uni.encode('utf-8')

# send the utf-8 data to server

# send(in_utf8)

# on server side

# server receives it. But server is Japanese.

# So the server treats the data with the National charset, shift-jis:

some_string = in_utf8 # some_string = receive()

decoded = some_string.decode('sjis')

Just try it. It's fun. The decoded string is supposed to be "Hey my name is André", but is "Hey my name is Andrテゥ". é gets replaced by Japanese テゥ

Hence my suggestion: you need to ensure that pyodbc is able to send directly the data as Unicode. If pyodbc fails to do this, you will get unexpected results.

And I described the problem in the Client to Server way. But the same sort of issues can arise when communicating back from the Server to the Client. If the Client cannot understand Unicode data, you'll likely get into troubles.

FreeTDS handles Unicode for you.

Actually, FreeTDS takes care of things for you and translates all the data to UCS2 unicode. (Source).

- Server <--> FreeTDS : UCS2 data

- FreeTDS <--> pyodbc : encoded strings, encoded in UTF-8 (from

/etc/freetds/freetds.conf)

So I would expect your application to work correctly if you pass UTF-8 data to pyodbc. In fact, as this django-pyodbc ticket states, django-pyodbc communicates in UTF-8 with pyodbc, so you should be fine.

FreeTDS 0.82

However, cramm0 says that FreeTDS 0.82 is not completely bugfree, and that there are significant differences between 0.82 and the official patched 0.82 version that can be found here. You should probably try using the patched FreeTDS

Edited: removed old data, which had nothing to do with FreeTDS but was only relevant to Easysoft commercial odbc driver. Sorry.

Solution 2

I use UCS-2 to interact with SQL Server, not UTF-8.

Correction: I changed the .freetds.conf entry so that the client uses UTF-8

tds version = 8.0

client charset = UTF-8

text size = 32768

Now, bind values work fine for UTF-8 encoded strings. The driver converts transparently between the UCS-2 used for storage on the dataserver side and the UTF-8 encoded strings given to/taken from the client.

This is with pyodbc 2.0 on Solaris 10 running Python 2.5 and FreeTDS freetds-0.82.1.dev.20081111 and SQL Server 2008

import pyodbc

test_string = u"""Comment ça va ? Très bien ?"""

print type(test_string),repr(test_string)

utf8 = 'utf8:' + test_string.encode('UTF-8')

print type(utf8), repr(utf8)

c = pyodbc.connect('DSN=SA_SQL_SERVER_TEST;UID=XXX;PWD=XXX')

cur = c.cursor()

# This does not work as test_string is not UTF-encoded

try:

cur.execute('INSERT unicode_test(t) VALUES(?)', test_string)

c.commit()

except pyodbc.Error,e:

print e

# This one does:

try:

cur.execute('INSERT unicode_test(t) VALUES(?)', utf8)

c.commit()

except pyodbc.Error,e:

print e

Here is the output from the test table (I had manually put in a bunch of test data via Management Studio)

In [41]: for i in cur.execute('SELECT t FROM unicode_test'):

....: print i

....:

....:

('this is not a banana', )

('\xc3\x85kergatan 24', )

('\xc3\x85kergatan 24', )

('\xe6\xb0\xb4 this is code-point 63CF', )

('Mich\xc3\xa9l', )

('Comment a va ? Trs bien ?', )

('utf8:Comment \xc3\xa7a va ? Tr\xc3\xa8s bien ?', )

I was able to put in some in unicode code points directly into the table from Management Studio by the 'Edit Top 200 rows' dialog and entering the hex digits for the unicode code point and then pressing Alt-X

Solution 3

I had the same problem when trying to bind unicode parameter: '[HY004] [FreeTDS][SQL Server]Invalid data type (0) (SQLBindParameter)'

I solved it by upgrading freetds to version 0.91.

I use pyodbc 2.1.11. I had to apply this patch to make it work with unicode, otherwise I was getting memory corruption errors occasionally.

Related videos on Youtube

Comments

-

nosklo over 3 years

I am using Ubuntu 9.04

I have installed the following package versions:

unixodbc and unixodbc-dev: 2.2.11-16build3 tdsodbc: 0.82-4 libsybdb5: 0.82-4 freetds-common and freetds-dev: 0.82-4I have configured

/etc/unixodbc.inilike this:[FreeTDS] Description = TDS driver (Sybase/MS SQL) Driver = /usr/lib/odbc/libtdsodbc.so Setup = /usr/lib/odbc/libtdsS.so CPTimeout = CPReuse = UsageCount = 2I have configured

/etc/freetds/freetds.conflike this:[global] tds version = 8.0 client charset = UTF-8I have grabbed pyodbc revision

31e2fae4adbf1b2af1726e5668a3414cf46b454ffromhttp://github.com/mkleehammer/pyodbcand installed it using "python setup.py install"I have a windows machine with Microsoft SQL Server 2000 installed on my local network, up and listening on the local ip address 10.32.42.69. I have an empty database created with name "Common". I have the user "sa" with password "secret" with full priviledges.

I am using the following python code to setup the connection:

import pyodbc odbcstring = "SERVER=10.32.42.69;UID=sa;PWD=secret;DATABASE=Common;DRIVER=FreeTDS" con = pyodbc.connect(s) cur = con.cursor() cur.execute(''' CREATE TABLE testing ( id INTEGER NOT NULL IDENTITY(1,1), name NVARCHAR(200) NULL, PRIMARY KEY (id) ) ''') con.commit()Everything WORKS up to this point. I have used SQLServer's Enterprise Manager on the server and the new table is there. Now I want to insert some data on the table.

cur = con.cursor() cur.execute('INSERT INTO testing (name) VALUES (?)', (u'something',))That fails!! Here's the error I get:

pyodbc.Error: ('HY004', '[HY004] [FreeTDS][SQL Server]Invalid data type (0) (SQLBindParameter)'Since my client is configured to use UTF-8 I thought I could solve by encoding data to UTF-8. That works, but then I get back strange data:

cur = con.cursor() cur.execute('DELETE FROM testing') cur.execute('INSERT INTO testing (name) VALUES (?)', (u'somé string'.encode('utf-8'),)) con.commit() # fetching data back cur = con.cursor() cur.execute('SELECT name FROM testing') data = cur.fetchone() print type(data[0]), data[0]That gives no error, but the data returned is not the same data sent! I get:

<type 'unicode'> somé stringThat is, pyodbc won't accept an unicode object directly, but it returns unicode objects back to me! And the encoding is being mixed up!

Now for the question:

I want code to insert unicode data in a NVARCHAR and/or NTEXT field. When I query back, I want the same data I inserted back.

That can be by configuring the system differently, or by using a wrapper function able to convert the data correctly to/from unicode when inserting or retrieving

That's not asking much, is it?

-

nosklo almost 15 yearsI tried to do it with UCS-2. I changed freetds.conf to UCS-2 and would encode the string with UCS-2 before sending it to the database. However python doesn't support UCS2 codec so I've tried utf16, since they seem similar enough for this test's purpose. But then I still get unicode objects back, and even stranger ones! Now even ascii chars don't come back right. If I store u'ABC'.encode('utf16') I get a wierd 8-chars unicode string that when encoded to utf-8 doesn't make any sense. Can you share your configuration?

-

CiscoIPPhone almost 15 yearsnosklo, it must be inserting the byte order mark before the chars. So 8-octets is correct (en.wikipedia.org/wiki/Byte-order_mark).

-

nosklo almost 15 years@CiscoIPPhone, if it returned 8 bytes, yes, but it is returning 8 unicode chars.

-

Paul Harrington almost 15 yearsI checked my .freetds.conf and noticed: tds version = 8.0 client charset = CP1252 I believe this is similar to ISO8559. I also noticed that my application does all its inserts server-side: this means that I have not actually checked insertion of data by the client. What needs to be checked is sucessful parameter binding to a nchar,nvarchar column. I will try to do this today.

-

Nicolas Dumazet almost 15 yearsand... I edited out the data relevant to Easysoft odbc drivers. Sorry for the confusion. At least now there is a proper explanation of what's collation/what happens behind the pyodbc box.

-

nosklo almost 15 yearswow, that's... great! I'll review today and if it works you get 550 rep!

-

Paul Harrington almost 15 yearsThe UTF-8 encoding of the bind value is critical other iconv will not correctly translate the value to the SQL Server encoding.

-

nosklo almost 15 yearsI see your config is working, but I fail to see what is wrong on mine... I will try different pyodbc/freetds version combinations.

-

nosklo almost 15 yearsno, that's not the issue. I can't reproduce that. using N'Ű' doesn't even work on SQL SERVER 2000.