What does it mean when train and validation loss diverge from epoch 1?

Possible explanations

- Coding error

- Overfitting due to differences in the training / validation data

- Skewed classes (and differences in the training / validation data)

Things I would try

- Swapping the training and the validation set. Does the problem still occur?

- Plot the curves in more detail for the first ~10 epochs (e.g. directly after initialization; each few training iterations, not only per epoch). Do you still start at > 75%? Then your classes might be skewed and you might also want to check if your training-validation split is stratified.

Code

- This is useless:

np.concatenate(X_train) - Make your code as readable as possible when you post it here. This includes removing lines which are commented out.

This looks suspicious for a coding error to me:

if test:

X_test.append(input)

y_test.append(output)

else:

#if((output == 0 and np.average(y_train) > 0.5) or output == 1):

X_train.append(input)

y_train.append(output)

use sklearn.model_selection.train_test_split instead. Do all transformations to the data before, then make the split with this method.

Related videos on Youtube

Bren077s

Updated on June 27, 2022Comments

-

Bren077s almost 2 years

I was recently working on a deep learning model in Keras and it gave me very perplexing results. The model is capable of mastering the training data over time, but it consistently gets worse results on the validation data.

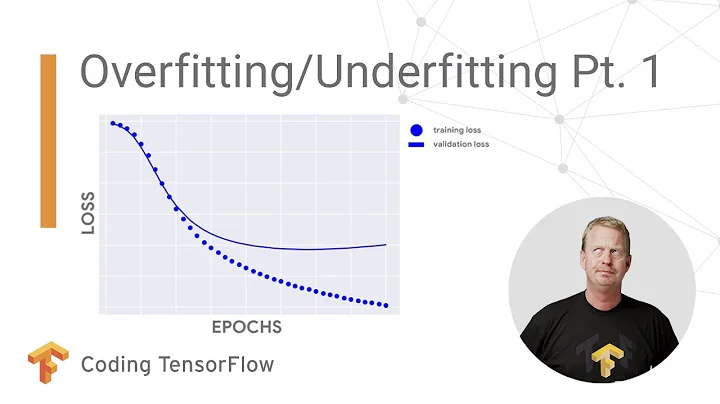

I know that if the validation accuracy goes up for a while and then starts to decrease that you are over-fitting to the training data, but in this case, the validation accuracy only ever decreases. I am really confused why this happens. Does anyone have any intuition as to what could cause this to happen? Or any suggestions on things to test to potentially fix it?

Edit to add more info and code

Ok. So I am making a model that is trying to do some basic stock predictions. By looking at the open, high, low, close, and volume of the last 40 days, the model tries to predict whether or not the price will go up two average true ranges without going down one average true range. As input, I took CSVs from Yahoo Finance that include this information for the last 30 years for all of the stocks in the Dow Jones Industrial Average. The model trains on 70% of the stocks and validates on the other 20%. This leads to about 150,000 training samples. I am currently using a 1d Convolutional Neural Network, but I have also tried other smaller models (logistic regression and small Feed Forward NN) and I always get the same either diverging train and validation loss or nothing learned at all because the model is too simple.

Here is the code:

import numpy as np from sklearn import preprocessing from sklearn.metrics import auc, roc_curve, roc_auc_score from keras.layers import Input, Dense, Flatten, Conv1D, Activation, MaxPooling1D, Dropout, Concatenate from keras.models import Model from keras.callbacks import ModelCheckpoint, EarlyStopping, Callback from keras import backend as K import matplotlib.pyplot as plt from random import seed, shuffle from os import listdir class roc_auc(Callback): def on_train_begin(self, logs={}): self.aucs = [] def on_train_end(self, logs={}): return def on_epoch_begin(self, epoch, logs={}): return def on_epoch_end(self, epoch, logs={}): y_pred = self.model.predict(self.validation_data[0]) self.aucs.append(roc_auc_score(self.validation_data[1], y_pred)) if max(self.aucs) == self.aucs[-1]: model.save_weights("weights.roc_auc.hdf5") print(" - auc: %0.4f" % self.aucs[-1]) return def on_batch_begin(self, batch, logs={}): return def on_batch_end(self, batch, logs={}): return rrr = 2 epochs = 200 batch_size = 64 days_input = 40 seed(42) X_train = [] X_test = [] y_train = [] y_test = [] files = listdir("Stocks") total_stocks = len(files) shuffle(files) for x, file in enumerate(files): test = False if (x+1.0)/total_stocks > 0.7: test = True if test: print("Test -> Stocks/%s" % file) else: print("Train -> Stocks/%s" % file) stock = np.loadtxt(open("Stocks/"+file, "r"), delimiter=",", skiprows=1, usecols = (1,2,3,5,6)) atr = [] last = None for day in stock: if last is None: tr = abs(day[1] - day[2]) atr.append(tr) else: tr = max(day[1] - day[2], abs(last[3] - day[1]), abs(last[3] - day[2])) atr.append((13*atr[-1]+tr)/14) last = day.copy() stock = np.insert(stock, 5, atr, axis=1) for i in range(days_input,stock.shape[0]-1): input = stock[i-days_input:i, 0:5].copy() for j, day in enumerate(input): input[j][1] = (day[1]-day[0])/day[0] input[j][2] = (day[2]-day[0])/day[0] input[j][3] = (day[3]-day[0])/day[0] input[:,0] = input[:,0] / np.linalg.norm(input[:,0]) input[:,1] = input[:,1] / np.linalg.norm(input[:,1]) input[:,2] = input[:,2] / np.linalg.norm(input[:,2]) input[:,3] = input[:,3] / np.linalg.norm(input[:,3]) input[:,4] = input[:,4] / np.linalg.norm(input[:,4]) preprocessing.scale(input, copy=False) output = -1 buy = stock[i][1] stoploss = buy - stock[i][5] target = buy + rrr*stock[i][5] for j in range(i+1, stock.shape[0]): if stock[j][0] < stoploss or stock[j][2] < stoploss: output = 0 break elif stock[j][1] > target: output = 1 break if output != -1: if test: X_test.append(input) y_test.append(output) else: X_train.append(input) y_train.append(output) shape = list(X_train[0].shape) shape[:0] = [len(X_train)] X_train = np.concatenate(X_train).reshape(shape) y_train = np.array(y_train) shape = list(X_test[0].shape) shape[:0] = [len(X_test)] X_test = np.concatenate(X_test).reshape(shape) y_test = np.array(y_test) print("Train class split is %0.2f" % (100*np.average(y_train))) print("Test class split is %0.2f" % (100*np.average(y_test))) inputs = Input(shape=(days_input,5)) x = Conv1D(32, 5, padding='same')(inputs) x = Activation('relu')(x) x = MaxPooling1D()(x) x = Conv1D(64, 5, padding='same')(x) x = Activation('relu')(x) x = MaxPooling1D()(x) x = Conv1D(128, 5, padding='same')(x) x = Activation('relu')(x) x = MaxPooling1D()(x) x = Flatten()(x) x = Dense(128, activation="relu")(x) x = Dense(64, activation="relu")(x) output = Dense(1, activation="sigmoid")(x) model = Model(inputs=inputs,outputs=output) model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy']) filepath="weights.best.hdf5" checkpoint = ModelCheckpoint(filepath, monitor='val_acc', verbose=0, save_best_only=True, mode='max') auc_hist = roc_auc() callbacks_list = [checkpoint, auc_hist] history = model.fit(X_train, y_train, validation_data=(X_test,y_test) , epochs=epochs, callbacks=callbacks_list, batch_size=batch_size, class_weight ='balanced').history model_json = model.to_json() with open("model.json", "w") as json_file: json_file.write(model_json) model.save_weights("weights.latest.hdf5") model.load_weights("weights.roc_auc.hdf5") plt.plot(history['acc']) plt.plot(history['val_acc']) plt.title('model accuracy') plt.ylabel('accuracy') plt.xlabel('epoch') plt.legend(['train', 'test'], loc='upper left') plt.show() plt.plot(history['loss']) plt.plot(history['val_loss']) plt.title('model loss') plt.ylabel('loss') plt.xlabel('epoch') plt.legend(['train', 'test'], loc='upper left') plt.show() plt.plot(auc_hist.aucs) plt.title('model ROC AUC') plt.ylabel('AUC') plt.xlabel('epoch') plt.show() y_pred = model.predict(X_train) fpr, tpr, _ = roc_curve(y_train, y_pred) roc_auc = auc(fpr, tpr) plt.subplot(1, 2, 1) plt.plot(fpr, tpr, label='ROC curve (area = %0.2f)' % roc_auc) plt.plot([0, 1], [0, 1], color='navy',linestyle='--') plt.xlim([0.0, 1.0]) plt.ylim([0.0, 1.0]) plt.xlabel('False Positive Rate') plt.ylabel('True Positive Rate') plt.title('Train ROC') plt.legend(loc="lower right") y_pred = model.predict(X_test) fpr, tpr, thresholds = roc_curve(y_test, y_pred) roc_auc = auc(fpr, tpr) plt.subplot(1, 2, 2) plt.plot(fpr, tpr, label='ROC curve (area = %0.2f)' % roc_auc) plt.plot([0, 1], [0, 1], color='navy',linestyle='--') plt.xlim([0.0, 1.0]) plt.ylim([0.0, 1.0]) plt.xlabel('False Positive Rate') plt.ylabel('True Positive Rate') plt.title('Test ROC') plt.legend(loc="lower right") plt.show() with open('roc.csv','w+') as file: for i in range(len(thresholds)): file.write("%f,%f,%f\n" % (fpr[i], tpr[i], thresholds[i]))Results by 100 batches instead of by epoch

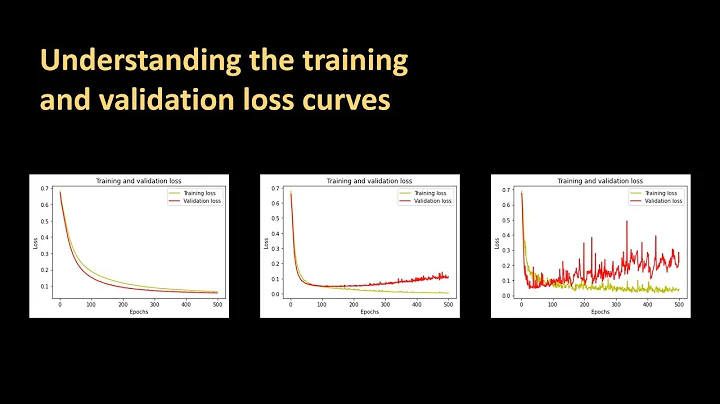

I listened to suggestions and made a few updates. The classes are now balanced 50% to 50% instead of 25% to 75%. Also, the validation data is randomly selected now instead of being a specific set of stocks. By graphing the loss and accuracy at a finer resolution(100 batches vs 1 epoch), the over-fitting can clearly be seen. The model does actually start to learn at the very beginning before it starts to diverge. I am surprised at how fast it starts to over-fit, but now that I can see the issue hopefully I can debug it.

-

Bren077s almost 7 yearsHere is what I currently know. The data is imbalanced about 0.75 to 0.25 split across both the training ad the validation set. Switching the validation set and the training set creates similar output. The part in the code that you pointed out is not a mistake. Instead of randomly splitting the training and the testing data, I made it so that some of the stocks where used as training and some as testing. Overall, the trends in all of the stocks are similar, so that should not have an adverse affect. New test data of future stock prices would be similar to the validation set.

-

Bren077s almost 7 yearsI will working on testing your possible issues and see if any of them fix my problem.

-

Bren077s almost 7 yearsSo looking at the loss and accuracy a few batches at a time showed how the model was over-fitting. It generally was over-fitting to the training data by the end of the first few epochs. Also, balancing the data helped quite a bit. Thank you!

-

Martin Thoma almost 7 yearsYou're welcome. Would you mind sharing those new curves with us?