Why are tar archive formats switching to xz compression to replace bzip2 and what about gzip?

Solution 1

For distributing archives over the Internet, the following things are generally a priority:

- Compression ratio (i.e., how small the compressor makes the data);

- Decompression time (CPU requirements);

- Decompression memory requirements; and

- Compatibility (how wide-spread the decompression program is)

Compression memory & CPU requirements aren't very important, because you can use a large fast machine for that, and you only have to do it once.

Compared to bzip2, xz has a better compression ratio and lower (better) decompression time. However, at the compression settings typically used, xz requires more memory to decompress[1] and is somewhat less widespread. Gzip uses less memory than either.

So, both gzip and xz format archives are posted, allowing you to pick:

- Need to decompress on a machine with very limited memory (<32 MB): gzip. Granted, this is not very likely when talking about kernel sources.

- Need to decompress with only minimal tools available: gzip

- Want to save download time and/or bandwidth: xz

There isn't really a realistic combination of factors that'd get you to pick bzip2, so it's being phased out.

I looked at compression comparisons in a blog post. I didn't attempt to replicate the results, and I suspect some of it has changed (mostly, I expect xz has improved, as it's the newest).
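For anyone who does want to replicate a comparison like this, here is a minimal sketch using Python's standard-library gzip, bz2 and lzma modules. The sample data and the helper name compare_codecs are illustrative assumptions, not taken from the blog post. The library bindings may not match the command-line tools byte for byte, and the numbers depend heavily on the input, so treat it as a starting point rather than a benchmark.

```python
import bz2
import gzip
import lzma
import time


def compare_codecs(data):
    """Return {name: (compressed_size, decompress_seconds)} for each codec."""
    codecs = {
        "gzip -9": (lambda d: gzip.compress(d, compresslevel=9), gzip.decompress),
        "bzip2 -9": (lambda d: bz2.compress(d, compresslevel=9), bz2.decompress),
        "xz -6": (lambda d: lzma.compress(d, preset=6), lzma.decompress),
    }
    results = {}
    for name, (compress, decompress) in codecs.items():
        blob = compress(data)
        # Only decompression is timed, since that is what downloaders pay for.
        start = time.perf_counter()
        restored = decompress(blob)
        elapsed = time.perf_counter() - start
        if restored != data:
            raise ValueError(f"{name} did not round-trip")
        results[name] = (len(blob), elapsed)
    return results


# Hypothetical sample input; real source trees will behave differently.
sample = b"static int answer(void) { return 42; }\n" * 50_000
for name, (size, seconds) in compare_codecs(sample).items():
    print(f"{name:9s} {size:8d} bytes  {seconds * 1000:7.2f} ms to decompress")
```

To test something closer to the kernel case, feed it the bytes of an uncompressed tarball instead of the synthetic sample.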

(There are some specific scenarios where a good bzip2 implementation may be preferable to xz: bzip2 can compress a file with lots of zeros, or genome DNA sequences, better than xz. Newer versions of xz now have an (optional) block mode which allows data recovery after the point of corruption and parallel compression and [in theory] decompression. Previously, only bzip2 offered these.[2] However, none of these are relevant for kernel distribution.)

1: In archive size, xz -3 is around bzip2 -9, and at that setting xz uses less memory to decompress. But xz -9 (as used, e.g., for Linux kernel tarballs) uses much more than bzip2 -9. (And even xz -0 needs more than gzip -9.)

2: F21 System Wide Change: lbzip2 as default bzip2 implementation
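Footnote 1 can be illustrated with a small sketch: the memory an xz decoder needs is set by the preset chosen at compression time, not by the size of the file. The example uses the memlimit parameter of Python's lzma.LZMADecompressor. The 4 MiB budget and the helper name fits_in_memory are assumptions chosen for illustration, and presets 0 and 6 stand in for the low and high ends so the example stays cheap to run.

```python
import lzma


def fits_in_memory(xz_blob, limit_bytes):
    """Return True if xz_blob decompresses within limit_bytes of decoder memory."""
    decoder = lzma.LZMADecompressor(memlimit=limit_bytes)
    try:
        decoder.decompress(xz_blob)
    except lzma.LZMAError:
        # Raised when the memory limit is too low (and also for corrupt input).
        return False
    return True


payload = b"the same small payload\n" * 1000
low_preset = lzma.compress(payload, preset=0)   # small dictionary
high_preset = lzma.compress(payload, preset=6)  # larger dictionary

budget = 4 * 1024 * 1024  # hypothetical 4 MiB decoder budget
print("preset 0 fits:", fits_in_memory(low_preset, budget))
print("preset 6 fits:", fits_in_memory(high_preset, budget))
```

The payload is identical in both cases. Only the preset recorded in the stream differs, which is why a tarball compressed with xz -9 needs far more decoder memory than one compressed at a low preset.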

Solution 2

First of all, this question is not directly related to tar. Tar just creates an uncompressed archive; the compression is applied afterwards.
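The two layers can be seen separately in a short sketch using Python's tarfile and lzma modules. The file names and contents are made up for illustration, and build_tar is a hypothetical helper, not part of any tool mentioned here.

```python
import io
import lzma
import tarfile


def build_tar(files):
    """Pack a {name: bytes} mapping into an uncompressed tar archive in memory."""
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w") as archive:
        for name, payload in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(payload)
            archive.addfile(info, io.BytesIO(payload))
    return buffer.getvalue()


# Step 1: tar only bundles the files; nothing is compressed yet.
plain_tar = build_tar({
    "README": b"hello\n",
    "src/main.c": b"int main(void) { return 0; }\n",
})

# Step 2: the compressor is applied to the finished tar stream.
tar_xz = lzma.compress(plain_tar)

# tarfile can undo both layers in one go with mode "r:xz".
with tarfile.open(fileobj=io.BytesIO(tar_xz), mode="r:xz") as archive:
    member_names = archive.getnames()
print(member_names)
```

Swapping lzma.compress for gzip.compress or bz2.compress changes only the second step, which is why the choice of compressor is independent of tar itself.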

Gzip is known to be relatively fast when compared to LZMA2 and bzip2. If speed matters, gzip (especially the multithreaded implementation pigz) is often a good compromise between compression speed and compression ratio, although there are alternatives if speed is an issue (e.g. LZ4).

However, if a high compression ratio is desired, LZMA2 beats bzip2 in almost every aspect. The compression speed is often slower, but it decompresses much faster and provides a much better compression ratio at the cost of higher memory usage.

There is not much reason to use bzip2 any more, except for backwards compatibility. Furthermore, LZMA2 was designed with multithreading in mind, and many implementations make use of multicore CPUs by default (unfortunately, xz on Linux does not do this yet). This makes sense since the clock speeds won't increase any more but the number of cores will.

There are multithreaded bzip2 implementations (e.g. pbzip2), but they are often not installed by default. Also note that multithreaded bzip2 only really pays off while compressing: in contrast to LZMA2, decompression uses a single thread if the file was compressed using a single-threaded bzip2. Parallel bzip2 variants can only leverage multicore CPUs if the file was compressed using a parallel bzip2 version, which is often not the case.
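A rough sketch of why the compressor matters for parallel decompression: if the input is split into chunks and each chunk is written as its own bzip2 stream, the streams can be decoded independently. This mimics the general idea only and is not how pbzip2 is actually implemented. The chunk size and both helper names are assumptions for illustration.

```python
import bz2
from concurrent.futures import ThreadPoolExecutor


def compress_as_streams(data, chunk_size=900_000):
    """Compress each chunk as its own bzip2 stream, in parallel."""
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    with ThreadPoolExecutor() as pool:
        return list(pool.map(bz2.compress, chunks))


def decompress_streams(streams):
    """Decompress independent bzip2 streams in parallel and reassemble them."""
    with ThreadPoolExecutor() as pool:
        return b"".join(pool.map(bz2.decompress, streams))


data = bytes(range(256)) * 20_000
streams = compress_as_streams(data)

# Concatenated streams still form a file that a serial decoder can read.
assert bz2.decompress(b"".join(streams)) == data
# Because the stream boundaries are known here, they can be decoded concurrently.
assert decompress_streams(streams) == data
```

A file written as one single stream gives this simple approach nothing to split on, which is the limitation described above.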

Solution 3

LZMA2 is a block compression system whereas gzip is not. This means that LZMA2 lends itself to multi-threading. Also, if corruption occurs in an archive, you can generally recover data from subsequent blocks with LZMA2 but you cannot do this with gzip. In practice, you lose the entire archive with gzip subsequent to the corrupted block. With an LZMA2 archive, you only lose the file(s) affected by the corrupted block(s). This can be important in larger archives with multiple files.

Solution 4

Short answer: xz is more efficient in terms of compression ratio, so it saves disk space and reduces transfer time over the network.

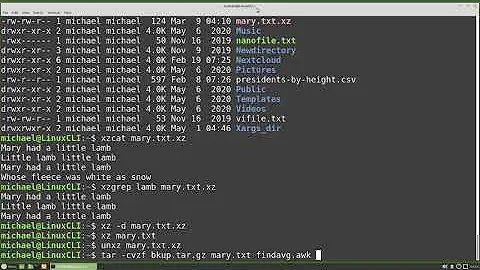

See this Quick Benchmark for practical tests that show the difference.

Admin

Updated on September 18, 2022

Comments

-

Admin over 1 year

More and more tar archives use the xz format based on LZMA2 for compression instead of the traditional bzip2 (bz2) compression. In fact kernel.org made a late "Good-bye bzip2" announcement, 27th Dec. 2013, indicating kernel sources would from this point on be released in both tar.gz and tar.xz format - and on the main page of the website what's directly offered is in tar.xz.

Are there any specific reasons explaining why this is happening and what is the relevance of gzip in this context?

-

Todd Allen over 10 years

Well some tars grok a z option.

-

christopher_bincom over 10 years

Any comment on the topic of fault tolerance or is that something that's always implemented completely outside of compression algorithms?

-

Tobu over 10 years

"speed" makes for a muddled answer, you should refer to compression speed or decompression speed. Neither pixz, pbzip2 or pigz are installed by default (or used by tar without the -I flag), but pixz and pbzip2 speed up compression and decompression and pigz is just for compression.

-

Tobu over 10 years

@illuminÉ resiliency can't be provided without sacrificing compression ratio. It's an orthogonal problem, and while tools like Parchive exist, for distributing the kernel TCP's error handling does the job just as well.

-

Marco over 10 years

@Tobu xz will be multithreaded by default so no pixz installation will be required in the future. On some platforms xz threading is supported already. Whereas bzip2 will unlikely ever be multithreaded since the format wasn't designed with multithreading in mind. Furthermore, pbzip2 only speeds up decompression if the file has been compressed using pbzip2 which is often not the case.

-

derobert over 10 years

@illuminÉ Fault tolerance (assuming you mean something similar to par2) isn't normally a concern with distributing archives over the Internet. Downloads are assumed reliable enough (and you can just redownload if it was corrupted). Cryptographic hashes and signatures are often used, and they detect corruption as well as tampering. There are compressors that give greater fault tolerance, though at the cost of compression ratio. No one seems to find the tradeoff worth it for HTTP or FTP downloads.

-

RaveTheTadpole over 9 years

@Marco I believe lbzip2 allows for parallel decompression of files even if they were compressed with a non-parallel implementation (e.g. stock bzip2). That's why I use lbzip2 over pbzip2. (It's possible this has evolved since your comment.)

-

New Atech over 8 years

xz uses LESS memory to decompress.

-

derobert over 8 years

@Mike Has it changed since I wrote this? In particular, footnote one explains memory usage.

-

New Atech over 8 years

@derobert I don't know, but it seems quite misleading to me, because for example (from the blog post) xz-2 compressed file has nearly the same size as bzip2-3 but xz-2 uses 1300 KB of memory and bzip2-3 uses 1700 KB when decompressing. (Plus compress time of xz-2 is faster.) However it uses more memory when compressing.

-

derobert over 8 years

@Mike right, for same file size xz uses less than bzip, but it seems people prefer the higher compression ratio instead (so real world use requires more memory). I'll think of a way to make that clearer.

-

derobert over 8 years

@Mike does that make it clearer? I checked and confirmed that kernel sources are compressed with xz -9 (xz -vvl will tell you).

-

derobert over 7 years

@StéphaneChazelas I've updated the post based on xz's parallel compression support. According to the docs at least, it doesn't do parallel decompression yet, but it sounds like it's possible in theory.

-

derobert over 7 years

@Joshua true, even less than that according to the docs. I've changed it to 32MB, as of course much of that RAM will be used for other things already running (kernel, init, shell, services, etc.). I think this is almost entirely irrelevant for kernel sources, though. (gcc will surely use more memory!)

-

leden over 7 years

This is a very useful and important distinction, indeed!

-

flarn2006 almost 5 years

Link is broken.

-

nyov over 4 years

Can you back up these claims with sources? I have yet to see an XZ recovery tool, and my known source claims otherwise: nongnu.org/lzip/xz_inadequate.html

-

Craig Younkins about 4 years

New link: catchchallenger.first-world.info/wiki/…

-

Rashini Gamalath about 4 years

"This makes sense since the clock speeds won't increase any more" - what? that's not quite true. the post was made in 2014, when Intel released the i3-4370 at 3.8GHz. in 2017, Intel released the i7-8700K at 4.7GHz. in 2018 they released the i9-9900K at 5GHz - and there's probably cpus in 2015 & 2016 that's missing on this list too

-

Marco about 4 years

@hanshenrik Look at the charts in 42 Years of Microprocessor Trend Data. You'll notice the CPU frequency reaching a plateau in the mid 2000's. That's what I'm talking about.

-

Rashini Gamalath about 4 years

it's not raising as fast as it used to, sure, but it's still raising: Intel's upcoming 10th gen 2020 desktop chips will supposedly reach 5.3GHz (10gen intel cpus are out already, but only the low-power laptop-variants, the desktop gen10's aren't out yet)

-

Chris L. Barnes about 3 years

This answer should include why LZMA2 is relevant to the question, which asks about the xz format. From wikipedia "The .xz format, which can contain LZMA2 data" en.wikipedia.org/wiki/…

-

HCSF almost 3 years

I came across this article, suggesting xz should not be used as the compressed data might be corrupted. Thought?

-

Admin almost 2 years"It, however—at the compression settings typically used—requires more memory to decompress[1] and is somewhat less widespread." that sentence exceeds my brain's stack size
