Why were old games programmed in assembly when higher level languages existed?

Solution 1

Those games had 8-bit CPU chips and microscopic memories, like, 2kB. This answer would take up over half the RAM.

Compiled code was out of the question. Even on 8-bit CPUs with "large" memories, like "64K" (whee!), compiled code was difficult to use; it was not routinely seen until 16-bit microprocessors appeared.

Also, the only potentially useful language was C and it had not yet taken over the world. There were few if any C compilers for 8-bit micros at that time.

But C wouldn't have helped that much. Those games had horribly cheap hacks in them that pretty much required timing-specific instruction loops ... e.g., a sprite's Y-coordinate might depend on WHEN (in the video scan) its control register was written. (Shudder...)
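To make the "WHEN in the video scan" point concrete, here is a toy model in Python. It is only a sketch: the function names are hypothetical, and the default of 76 CPU cycles per scanline is an assumption borrowed from commonly cited NTSC Atari 2600 timing, not something stated in this answer.

```python
def scanline_for_write(cycles_since_frame_start, cycles_per_scanline=76):
    """Scanline the beam is on when a register write lands (toy model).

    cycles_per_scanline=76 is an assumed, commonly cited NTSC Atari 2600 figure.
    """
    return cycles_since_frame_start // cycles_per_scanline


def cycles_to_wait(target_scanline, cycles_already_spent, cycles_per_scanline=76):
    """CPU cycles the code must still burn so its write lands at the start
    of target_scanline."""
    target_cycle = target_scanline * cycles_per_scanline
    if cycles_already_spent > target_cycle:
        raise ValueError("too late: the beam has already passed that scanline")
    return target_cycle - cycles_already_spent
```

In this model a write that lands one cycle early puts the sprite a full line higher, which is why the game code had to count the cycles of every instruction in the loop. A compiler that is free to pick its own instruction sequence gives the programmer no such guarantee.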

Now there was a nice interpreted-bytecode language around that time or perhaps a little bit later: UCSD Pascal running on the UCSD P-System. Although I'm not a big Pascal fan, it was way ahead of everything else for those early processors. It wouldn't fit on a game or run fast enough for game play, though.
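As a rough illustration of why an interpreted bytecode costs speed, here is a minimal stack-machine interpreter in Python. The opcodes are hypothetical and are not the real UCSD p-code set; the sketch only shows that every bytecode pays for a fetch-and-dispatch step on top of the work it actually does.

```python
# Hypothetical opcodes for illustration only; NOT the real UCSD p-code set.
PUSH, ADD, MUL, HALT = range(4)


def run(program):
    """Interpret a list of bytecodes.

    Returns (result on top of the stack, number of dispatch steps taken).
    """
    stack = []
    pc = 0
    steps = 0
    while True:
        op = program[pc]      # fetch
        pc += 1
        steps += 1            # every opcode costs one trip through this loop
        if op == PUSH:        # decode and execute
            stack.append(program[pc])
            pc += 1
        elif op == ADD:
            b = stack.pop()
            a = stack.pop()
            stack.append(a + b)
        elif op == MUL:
            b = stack.pop()
            a = stack.pop()
            stack.append(a * b)
        elif op == HALT:
            return stack.pop(), steps
        else:
            raise ValueError(f"unknown opcode {op}")


# (2 + 3) * 4 expressed as bytecode
program = [PUSH, 2, PUSH, 3, ADD, PUSH, 4, MUL, HALT]
result, steps = run(program)  # result == 20, steps == 6
```

On a real 8-bit CPU each of those dispatch steps would itself be a handful of machine instructions, and the interpreter's own code would also need a share of the ROM.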

Solution 2

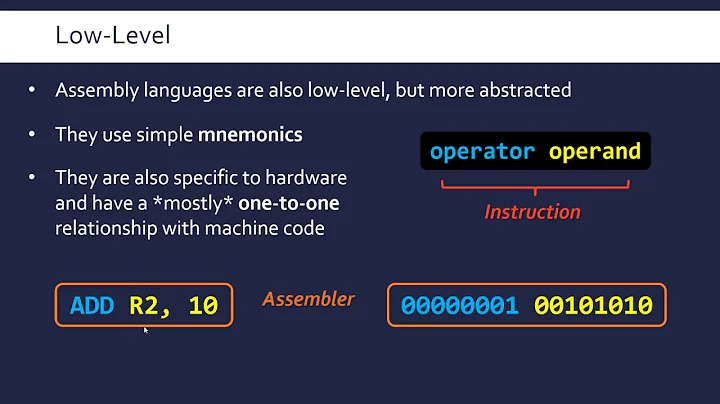

Performance, performance, performance. Games have always been all about getting the most out of the hardware. Even the best C, COBOL or FORTRAN compilers today can't compete with skillfully crafted assembly code.

As DigitalRoss has pointed out, there were also severe memory constraints, and assembly was the only tool that allowed for the fine grained control needed.

The same thing sort of applies today, although the old-days' assembler has been more or less replaced with C++. Even though programming languages such as Python exist (that are very easy to use), the low-level programming languages are still preferred for the most demanding applications.

Solution 3

"make it easier to code right"

Didn't matter.

High performance, minimal memory use was essential.

Easy to code was irrelevant.

Remember, early games had microscopic amounts of memory on very, very slow processors with no actual devices. Stuff was connected -- essentially -- directly to the CPU chip.

Solution 4

I would assume there was a much larger percentage of programmers who both knew assembler and wouldn't think twice about programming in it. Even into the IBM PC and AT days, folks who had never really programmed in anything but assembler were still around and could easily program circles around the C and Pascal programmers.

Pascal and C were wonderful (for desktop computers) once they got a foothold and you could afford a compiler. You were happy just to be programming in some new language, had never heard of an optimizer, and assumed the high-level language turned into the same machine code with every compiler. Your programs still fit easily on a 5.25" floppy, with plenty of that 640K to spare.

I think we need to bring back, or have more, 4K programming contests. Write a game for the GBA or NDS, but the binary (data, code, everything) cannot be larger than 4K. Or perhaps reinvent the gameplay of Asteroids: rocks, ship, bad guys, missiles. Don't worry about the video pixels because, first, it wasn't pixels, and second, drawing was handled by a second processor (well, a hardware state machine); just generate the vector drawing commands. There are free 6502 C compilers out there now, and p-code-based Pascal compilers whose output would be easy to run on a 6502. See if you can get the frame rate up to real time at 1.5 MHz or whatever it was running, and fit it in the PROM. I think the exercise will answer your question. Or just write a runtime p-code interpreter and see if that fits (standardpascal.org, look for the P5 compiler).

A handful of Kbytes is like a thousand lines of code or less. There just wasn't that much to the program, and assembler isn't difficult at all, certainly not on a 6502 or similar systems. You didn't have caches, MMUs, virtualization and multiple cores, or at least not the headaches we have today. You had the freedom to choose whatever register you wanted, and each subroutine could use its own custom calling convention; you didn't throw away huge quantities of instructions juggling registers, stack or memory just so you could call functions in a standard way. You had but one interrupt and used it to time the polling and management of the hardware (video updates, evenly timed polling of buttons and other user input, polling the quarter-slot detector even while the game is being played).
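A back-of-the-envelope check of the "handful of Kbytes" figure, as a Python sketch. It assumes 6502-style instructions of 1 to 3 bytes each and, unrealistically, a ROM that holds nothing but code; the function name is hypothetical.

```python
MIN_INSTRUCTION_BYTES = 1  # e.g. single-byte implied-operand instructions
MAX_INSTRUCTION_BYTES = 3  # opcode plus a 16-bit address


def instruction_count_range(rom_bytes):
    """Fewest and most instructions that fit in rom_bytes of pure code."""
    fewest = rom_bytes // MAX_INSTRUCTION_BYTES
    most = rom_bytes // MIN_INSTRUCTION_BYTES
    return fewest, most


print(instruction_count_range(4 * 1024))  # (1365, 4096)
```

Since real ROMs also hold tables, graphics and text, the number of actual instructions lands toward the low end of that range, in the neighborhood of the line counts discussed here.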

Asteroids looks to be roughly 3000 lines of assembler. With the efficiency of compilers now or then, I would say you would need to write the whole game in about 500 lines of C to beat that. For Pascal using p-code I will give you 100 lines, okay, 200. (For Pascal that is not p-code but optimized for the target, I will give you more lines than C.)

The Atari VCS (a.k.a. 2600) didn't even have memory for a framebuffer for the video. The program had to generate the pixels just in time as well as perform all the game tasks. Sure, not a lot of pixels, but think about the programming task and the limited size and speed of the ROM. For something like that you start getting into how many instructions per pixel, and we are talking about a small number. High-level compiled code is going to run in fits and spurts and is not going to be smooth enough to guarantee the timing.
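The instructions-per-pixel budget can be estimated with a short Python sketch. The constants are commonly cited NTSC Atari 2600 timing figures and 6502-family cycle counts, not numbers taken from this answer, so treat them as assumptions.

```python
# Commonly cited NTSC Atari 2600 figures (assumptions, not from the answer).
COLOR_CLOCKS_PER_SCANLINE = 228   # includes horizontal blanking
COLOR_CLOCKS_PER_CPU_CYCLE = 3    # CPU runs at one third of the color clock
MIN_CYCLES_PER_INSTRUCTION = 2    # fastest 6502-family instructions
MAX_CYCLES_PER_INSTRUCTION = 7    # slowest 6502-family instructions


def cpu_cycles_per_scanline():
    """CPU cycles available while the beam draws one full scanline."""
    return COLOR_CLOCKS_PER_SCANLINE // COLOR_CLOCKS_PER_CPU_CYCLE


def instruction_budget_per_scanline():
    """(worst case, best case) number of instructions per scanline."""
    cycles = cpu_cycles_per_scanline()
    return (cycles // MAX_CYCLES_PER_INSTRUCTION,
            cycles // MIN_CYCLES_PER_INSTRUCTION)


print(cpu_cycles_per_scanline())          # 76
print(instruction_budget_per_scanline())  # (10, 38)
```

With one pixel per color clock in the visible part of the line, the beam moves three pixels during every CPU cycle, so even the fastest instruction spans several pixels. Under these assumptions the budget is a few dozen instructions per line at best, which leaves no slack for unpredictable compiler output.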

If you have the chance, you should disassemble some of those old game ROMs. It is very educational; the instruction sequences were often very elegant. Optimized compiled high-level code has cool tricks, but it just isn't the same.

Supposedly better languages and compilers don't automatically make you better, faster, more efficient or reliable. Just like a fancy car doesn't make someone a better driver.

Solution 5

Compilers back then were not as good as the compilers we have now. Therefore many people felt, rightly or wrongly, that they could out-optimize the compiler.

Comments

jmasterx almost 4 years

I noticed that most, if not all, NES, Atari, etc. games were coded in assembly. However, at that time C, COBOL, and FORTRAN existed, which I would think would have made it easier to code, right? So why did they choose assembly over these available higher-level languages?

Crashworks over 12 years

We still program chunks of many games in assembly -- or more typically, by using C++ compiler intrinsics that look like functions, but actually compile into single hardware opcodes. The reason, then and now, is performance.

btilly about 13 years

There are very, very few people today who can actually beat C, COBOL or FORTRAN compilers.

Admin about 13 years

@btilly: That is only because there are very, very few people today who bother acquiring the necessary knowledge to do that. It is not worth it anymore.

Oystein about 13 years

"640K ought to be enough for anybody." - Bill Gates. Done.

user1066101 about 13 years

@btilly: Back in the day, we modified the output from the Fortran compiler to hand-optimize performance-critical sections. Fortran was required by the contract but didn't meet the performance requirements.

dmckee --- ex-moderator kitten about 13 years

Ah! The good(?) old days... ::stares off into the distance::

Gordon Gustafson about 13 years

Fortunately most of those who can are writing the compilers. :D

Peter Cordes almost 6 years

Also, compilers sucked compared to modern gcc/clang because writing an optimizing compiler is hard. (And it needs a lot of memory and time to run, so a good optimizing compiler for Z80 or whatever can't run on a Z80.) It used to be a lot easier to beat a C compiler with hand-written asm, compared to now, where it's still possible on a small scale (for certain loops: "Why is this C++ code faster than my hand-written assembly for testing the Collatz conjecture?") but totally impractical to beat the compiler for large-scale constant-propagation.

Peter Cordes almost 6 years

@btilly: Compilers back then sucked compared to modern optimizing compilers. They were much easier to beat with hand-written asm, e.g. "Why do C to Z80 compilers produce poor code?" (Z80 was a particularly poor compiler target, as well as old compilers sucking.) Modern optimizing compilers are the product of millions of dev hours of effort, and wouldn't run in the cramped memory of an old system anyway. See also "Why are there so few C compilers?"

btilly almost 6 years

@PeterCordes Not only that, but also modern hardware is a much more complex optimization target.

Peter Cordes almost 6 years

@btilly: only if you consider auto-vectorization. x86-64 is a pretty nice compiler target, having 15 (mostly) orthogonal integer registers + a stack pointer. Pointers fit in a single register, and any register can be a base and/or index in an addressing mode (major diff from Z80, or 8086 segments). Powerful out-of-order execution capabilities make instruction-scheduling / ordering mostly unimportant even though implementations are highly pipelined superscalar. Intel/AMD design CPUs to be good at plowing through compiler-generated code, even when it's less optimal than hand-written.

Peter Cordes almost 6 years

@btilly: Sandybridge-family has less weird stuff than P6-family, e.g. no more register-read stalls so compilers don't have to worry about reading too many "cold" registers in a loop. (Although gcc still likes to increment a register before reading it as a pointer, probably a heuristic left over from tuning for P6... I don't think compilers ever did really model the pipeline in enough detail to know when they'd get register-read stalls, and clang still creates partial-register stalls for P6-family.) Pipelined in-order CPUs like some ARM are a tricky compiler target, though.

btilly almost 6 years

@PeterCordes You probably know this a lot better than I do. My last memory of the complications had to do with register-read stalls...

Peter Cordes almost 6 years

@btilly: yeah, Intel has steadily made their architectures easier compiler targets, removing more and more tricky stalls. Modern x86 has even fewer "glass jaws". Skylake even made page-split loads nearly as cheap as cache-line splits (~10 cycle penalty, down from ~100), so auto-vectorizing over maybe-unaligned data, or coalescing stores/loads of adjacent data, is less risky. AMD's Ryzen is pretty robust, too, AFAIK, with basically no weird scheduling rules. (uop caches help a lot.) Low-power Silvermont and Xeon Phi (Knights Landing) have some stuff to avoid; multi-uop instructions stall decode.
