Write-locked file sometimes can't find contents (when opening a pickled pandas DataFrame) - EOFError: Ran out of input

It was perhaps a bit of a vague question, but since I managed to fix it and now understand the situation better, I think it is worth posting what I found, in the hope that others might find it useful. It seems like a problem that could come up for others as well.

Credit to the `portalocker` GitHub page for the help and answer: https://github.com/WoLpH/portalocker/issues/40

The issue seems to have been that I was doing this on a cluster. As a result, the filesystem is a bit complicated, as the processes are operating on multiple nodes. It can take longer than expected for the saved files to "sync" up and be seen by the different running processes.
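To illustrate why a reader that does not yet see the saved contents fails with this particular error, here is a minimal sketch. It assumes the reader catches the file while it still appears empty (for example, right after the `truncate()` and before the new bytes are visible on that node). It uses the standard-library `pickle` module on in-memory bytes instead of `pd.read_pickle` on a cluster file, and `try_load` is a hypothetical helper name used only for this illustration.

```python
import io
import pickle


def try_load(raw: bytes):
    """Unpickle raw bytes; return (obj, None) or (None, error text) on EOFError."""
    try:
        return pickle.load(io.BytesIO(raw)), None
    except EOFError as exc:
        # An empty stream has nothing to unpickle, so pickle raises EOFError.
        return None, repr(exc)


complete = pickle.dumps({"rows": [1, 2, 3]})

print(try_load(complete))  # the fully written payload loads normally
print(try_load(b""))       # an empty "file" fails with EOFError
```

On a standard CPython build, the second call reports the same "Ran out of input" message as in the question, which is consistent with the reading process seeing a file whose contents have not arrived yet.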

What seems to have worked for me is to flush the file and force a system sync, and additionally (I'm not sure yet whether this is optional) to add a longer `time.sleep()` after that.

According to the `portalocker` developer, it can take an unpredictable amount of time for files to sync up on a cluster, so you might need to vary the sleep time.

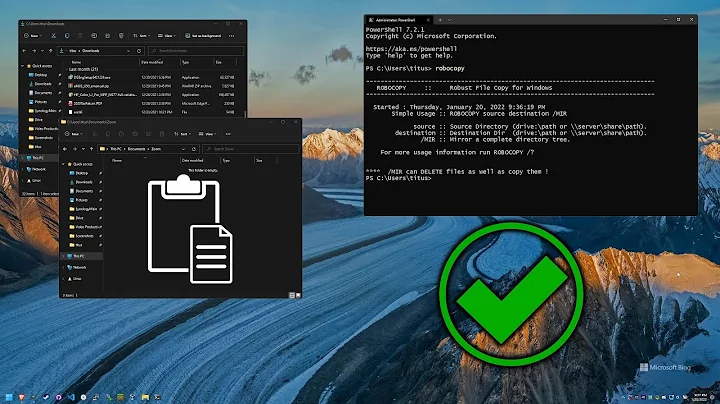

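In other words, add this after saving the file:

    import os
    import time

    df.to_pickle(file)
    file.flush()              # push Python's buffered data to the OS
    os.fsync(file.fileno())   # ask the OS to write the file to disk
    time.sleep(1)             # give the cluster filesystem time to sync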
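For a version that can be run anywhere, the sketch below wraps the same steps in a helper. It is only an illustration under stated assumptions: the standard-library `pickle.dump` stands in for `df.to_pickle`, a plain `open(...)` stands in for the `portalocker.Lock(...)` handle, and `save_and_sync` and `settle_seconds` are hypothetical names, not part of either library.

```python
import os
import pickle
import tempfile
import time


def save_and_sync(obj, fh, settle_seconds=1.0):
    """Overwrite the open binary file fh with pickled obj and force it to disk."""
    fh.seek(0)
    fh.truncate()            # drop the old contents so we overwrite, not append
    pickle.dump(obj, fh)
    fh.flush()               # push Python's buffer to the OS
    os.fsync(fh.fileno())    # ask the OS to write the data to disk
    time.sleep(settle_seconds)  # assumed grace period; tune it for your cluster


# Create a small pickle file to stand in for the shared DataFrame file.
with tempfile.NamedTemporaryFile(suffix=".pickle", delete=False) as tmp:
    path = tmp.name
    pickle.dump([], tmp)

# Read, modify, and write back through the same handle, as in the question.
with open(path, "rb+") as fh:
    rows = pickle.load(fh)
    rows.append({"job": 1})
    save_and_sync(rows, fh, settle_seconds=0.1)

with open(path, "rb") as fh:
    result = pickle.load(fh)
print(result)
os.remove(path)
```

In the real setup the helper would be called inside the `with portalocker.Lock(...)` block, so that the flush, sync, and sleep all happen before the lock is released.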

Hopefully this helps out someone else out there.


Marses

Updated on December 07, 2022

Comments

-

Marses over 1 year

Here's my situation: I need to run 300 independent processes on a cluster that all add a portion of their data to the same DataFrame (so they also need to read the file before writing). They may need to do this multiple times throughout their runtime.

So I tried using write-locked files, with the `portalocker` package. However, I'm getting a type of bug and I don't understand where it's coming from.

Here's the skeleton code where each process will write to the same file:

    with portalocker.Lock('/path/to/file.pickle', 'rb+', timeout=120) as file:
        file.seek(0)
        df = pd.read_pickle(file)

        # ADD A ROW TO THE DATAFRAME

        # The following part might not be great,
        # I'm trying to remove the old contents of the file first so I overwrite
        # and not append, not sure if this is required or if there's
        # a better way to do this.
        file.seek(0)
        file.truncate()
        df.to_pickle(file)

The above works, most of the time. However, the more simultaneous processes I have write-locking, the more I get an EOFError bug on the `pd.read_pickle(file)` stage.

    EOFError: Ran out of input

The traceback is very long and convoluted.

Anyway, my thoughts so far are that since it works sometimes, the code above must be fine *(though it might be messy and I wouldn't mind hearing of a better way to do the same thing).

However, when I have too many processes trying to write-lock, I suspect the file doesn't have time to save or something, or at least somehow the next process doesn't yet see the contents that have been saved by the previous process.

Would there be a way around that? I tried adding in `time.sleep(0.5)` statements around my code (before the `read_pickle`, after the `to_pickle`) and I don't think it helped. Does anyone understand what could be happening or know a better way to do this?

Also note, I don't think the write-lock times out. I tried timing the process, and I also added a flag in there to record whether the write-lock times out. While there are 300 processes and they might be trying to write at varying rates, in general I'd estimate there are about 2.5 writes per second, which doesn't seem like it should overload the system, no?*

*The pickled DataFrame has a size of a few hundred KB.

-


Darkonaut over 5 years

Just for the record, which OS are you on? Yesterday I tried to replicate this and also watched your discussion on GitHub, but I failed to replicate your issue on my Ubuntu machine, so it really seems like a cluster-thingy.

-

Marses over 5 years

Yeah, it's most definitely a cluster thing. It's CentOS 7.5, running jobs with a SLURM scheduler.
