AccessDenied for ListObjectsV2 operation for S3 bucket

Solution 1

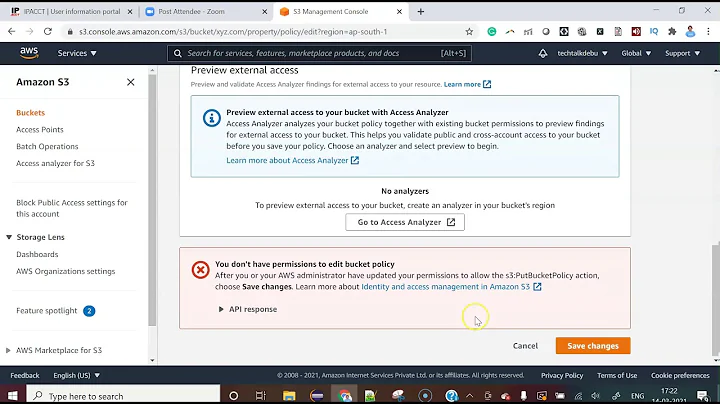

Try to update your bucket policy to:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AllowPublicRead",
          "Effect": "Allow",
          "Action": [
            "s3:*"
          ],
          "Resource": [
            "arn:aws:s3:::BUCKET-NAME",
            "arn:aws:s3:::BUCKET-NAME/*"
          ]
        }
      ]
    }

I hope you understand that this is very insecure: s3:* allows every S3 action on the bucket and on all of its objects.

Solution 2

I'm not sure the accepted answer is actually acceptable, as it simply allows all operations on the bucket. Also the Sid is misleading... ;-)

This AWS article mentions the required permissions for aws s3 sync.

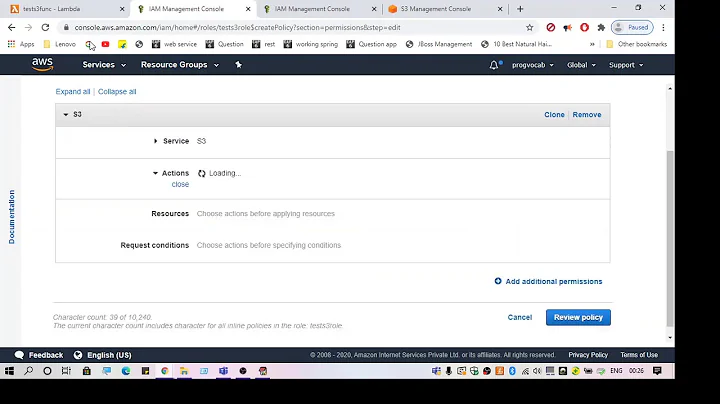

This is what a corresponding policy looks like:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AllowBucketSync",
          "Effect": "Allow",
          "Action": [
            "s3:GetObject",
            "s3:PutObject",
            "s3:ListBucket"
          ],
          "Resource": [
            "arn:aws:s3:::BUCKET-NAME",
            "arn:aws:s3:::BUCKET-NAME/*"
          ]
        }
      ]
    }

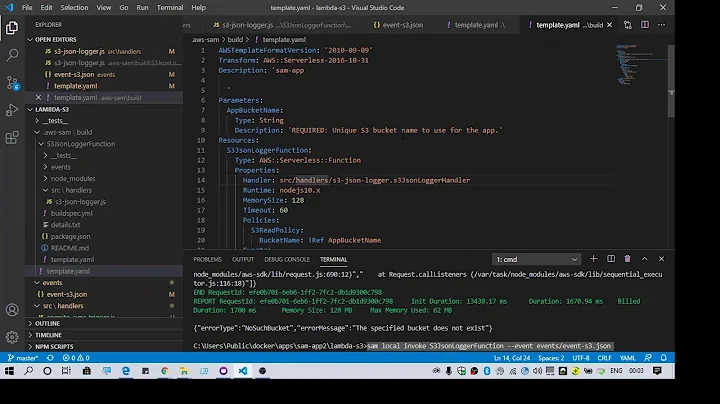

Solution 3

I had this problem recently. No matter what I did, no matter what permissions I provided, I kept getting "An error occurred (AccessDenied) when calling the ListObjectsV2 operation: Access Denied" when running aws s3 ls <bucket>.

I had forgotten that I have multiple AWS profiles configured in my environment. The aws command was using the default profile, which has a different set of access keys. I had to pass the --profile flag to the command:

aws s3 ls <bucket> --profile <correct profile>

That worked. It's a niche situation, but maybe it'll help someone out.
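To see which profile a plain aws command would pick up, running aws configure list prints the active profile and where its credentials come from. The selection order can also be sketched in a few lines of Python. This is a simplified model, not the CLI's actual code: the function names, the sample credentials text and the key values are made up for illustration, and only the profile name is resolved (the --profile flag first, then the AWS_PROFILE environment variable, then default).

```python
import configparser
import os

# Hypothetical credentials-file content with two profiles (values are fake).
SAMPLE_CREDENTIALS = """
[default]
aws_access_key_id = EXAMPLE-KEY-ID-1
aws_secret_access_key = example-secret-1

[deploy]
aws_access_key_id = EXAMPLE-KEY-ID-2
aws_secret_access_key = example-secret-2
"""


def profiles_in_credentials(text):
    """Return the profile names (section headers) found in credentials-file text."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    return parser.sections()


def selected_profile(cli_profile=None, environ=None):
    """Resolve the profile name: --profile flag, then AWS_PROFILE, then 'default'."""
    environ = os.environ if environ is None else environ
    return cli_profile or environ.get("AWS_PROFILE") or "default"


print(profiles_in_credentials(SAMPLE_CREDENTIALS))           # ['default', 'deploy']
print(selected_profile(environ={}))                          # default
print(selected_profile(environ={"AWS_PROFILE": "deploy"}))   # deploy
print(selected_profile("deploy", {"AWS_PROFILE": "other"}))  # deploy
```

If the resolved profile is not the one whose keys have access to the bucket, the AccessDenied error is expected regardless of how the bucket policy looks.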

Solution 4

I got "AccessDenied" errors, too, even though the policy was correct. I gave mrbranden's solution a try, even though I have only one set of credentials (the default) configured. And lo and behold,

$ aws s3 ls <bucket> --profile=default

made it work!

My aws --version is aws-cli/1.18.69 Python/3.8.5 Linux/5.4.0-1035-aws botocore/1.16.19


tbone

Updated on July 29, 2022

Comments


tbone 4 months

During a GitLab CI run I got: "fatal error: An error occurred (AccessDenied) when calling the ListObjectsV2 operation: Access Denied"

My bucket policy:

    {
      "Version": "2008-10-17",
      "Statement": [
        {
          "Sid": "AllowPublicRead",
          "Effect": "Allow",
          "Principal": {
            "AWS": "*"
          },
          "Action": "s3:*",
          "Resource": "arn:aws:s3:::BUCKET-NAME/*"
        }
      ]
    }

In the GitLab CI settings I set:

- AWS_ACCESS_KEY_ID: YOUR-AWS-ACCESS-KEY-ID

- AWS_SECRET_ACCESS_KEY: YOUR-AWS-SECRET-ACCESS-KEY

- S3_BUCKET_NAME: YOUR-S3-BUCKET-NAME

- DISTRIBUTION_ID: CLOUDFRONT-DISTRIBUTION-ID

My .gitlab-ci.yml:

    image: docker:latest

    stages:
      - build
      - deploy

    build:
      stage: build
      image: node:8.11.3
      script:
        - export API_URL="d144iew37xsh40.cloudfront.net"
        - npm install
        - npm run build
        - echo "BUILD SUCCESSFULLY"
      artifacts:
        paths:
          - public/
        expire_in: 20 mins
      environment:
        name: production
      only:
        - master

    deploy:
      stage: deploy
      image: python:3.5
      dependencies:
        - build
      script:
        - export AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID
        - export AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY
        - export S3_BUCKET_NAME=$S3_BUCKET_NAME
        - export DISTRIBUTION_ID=$DISTRIBUTION_ID
        - pip install awscli --upgrade --user
        - export PATH=~/.local/bin:$PATH
        - aws s3 sync --acl public-read --delete public $S3_BUCKET_NAME
        - aws cloudfront create-invalidation --distribution-id $DISTRIBUTION_ID --paths '/*'
        - echo "DEPLOYED SUCCESSFULLY"
      environment:
        name: production
      only:
        - master
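The sync command in the deploy job uses two flags that, as far as the S3 permission model goes, need more than listing and uploading: --delete removes objects (s3:DeleteObject) and --acl sets an object ACL (s3:PutObjectAcl). The Python sketch below derives a likely permission set from the command line. It is an assumption-laden illustration, not an AWS tool: the function name required_sync_actions is hypothetical, it only models a local-to-S3 upload, and it looks at nothing beyond these two flags.

```python
import shlex


def required_sync_actions(command):
    """Return the S3 actions a local-to-S3 `aws s3 sync` likely needs.

    Simplified model: listing and uploading are always needed; --delete and
    --acl each add one further action.
    """
    args = shlex.split(command)
    actions = {"s3:ListBucket", "s3:PutObject"}
    if "--delete" in args:
        actions.add("s3:DeleteObject")
    if "--acl" in args:
        actions.add("s3:PutObjectAcl")
    return actions


# The command from the deploy job, kept verbatim.
DEPLOY_COMMAND = "aws s3 sync --acl public-read --delete public $S3_BUCKET_NAME"

# Actions granted by the narrower sync policy suggested in Solution 2.
SYNC_POLICY_ACTIONS = {"s3:GetObject", "s3:PutObject", "s3:ListBucket"}

missing = required_sync_actions(DEPLOY_COMMAND) - SYNC_POLICY_ACTIONS
print(sorted(missing))  # ['s3:DeleteObject', 's3:PutObjectAcl']
```

Under these assumptions, a policy limited to the three actions from Solution 2 would still need the two extra actions for this particular command.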

daevski over 1 year

It looks like s3:ListBucket is deprecated and one should use s3:ListObjectsV2? ListBucket within the actions table on Actions defined by Amazon S3 now links to the ListObjects page, and that page now encourages the use of ListObjectsV2.
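On the naming question: as far as the S3 documentation describes it, ListObjects and ListObjectsV2 are API operations, while the policy action that authorizes both is still s3:ListBucket. There is no s3:ListObjectsV2 policy action. The small Python lookup below only illustrates that distinction. The table is hand-written for this thread, covers a handful of operations, and the function name is hypothetical.

```python
# API operation name -> policy action that authorizes it (partial, hand-written).
API_TO_POLICY_ACTION = {
    "ListObjects": "s3:ListBucket",
    "ListObjectsV2": "s3:ListBucket",
    "GetObject": "s3:GetObject",
    "PutObject": "s3:PutObject",
    "DeleteObject": "s3:DeleteObject",
}


def policy_action_for(operation):
    """Look up the policy action for an API operation named in an error message."""
    return API_TO_POLICY_ACTION[operation]


# The operation named in the AccessDenied error from the question.
print(policy_action_for("ListObjectsV2"))  # s3:ListBucket
```

So an AccessDenied error that names ListObjectsV2 points at a missing s3:ListBucket permission on the bucket ARN.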