Alpha-beta move ordering

Solution 1

-

Node reordering with shallow search is trivial: calculate the heuristic value for each child of the state before recursively checking them. Then, sort the values of these states [descending for max vertex, and ascending for min vertex], and recursively invoke the algorithm on the sorted list. The idea is - if a state is good at shallow depth, it is more likely to be good at deep state as well, and if it is true - you will get more prunnings.

The sorting should be done before this [in both

ifandelseclauses]for (Move move : children) { -

storing moves is also trivial - many states are calculated twice, when you finish calculating any state, store it [with the depth of the calculation! it is improtant!] in a

HashMap. First thing you do when you start calculation on a vertex - is check if it is already calculated - and if it is, returned the cached value. The idea behind it is that many states are reachable from different paths, so this way - you can eliminate redundant calculations.The changes should be done both in the first line of the method [something like

if (cache.contains((new State(board,depth,player)) return cache.get(new State(board,depth,player))] [excuse me for lack of elegance and efficiency - just explaining an idea here].

You should also addcache.put(...)before eachreturnstatement.

Solution 2

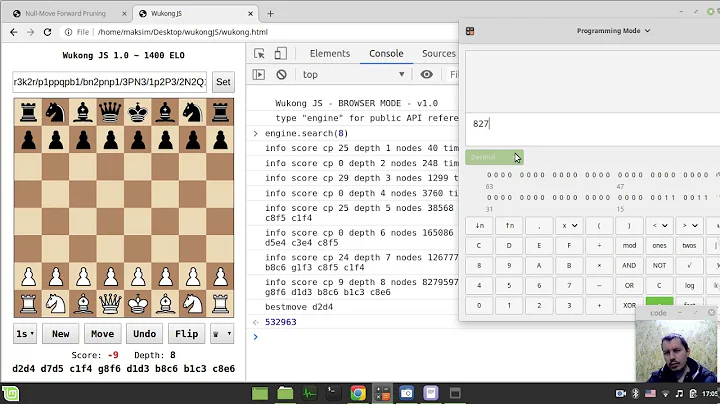

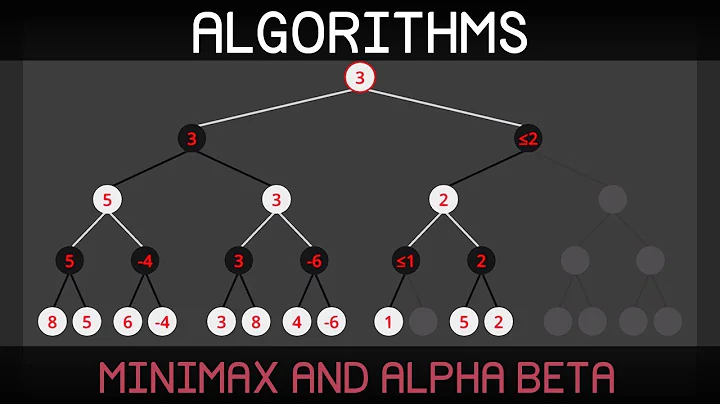

First of all one has to understand the reasoning behind the move ordering in an alpha-beta pruning algorithm. Alpha-beta produces the same result as a minimax but in a lot of cases can do it faster because it does not search through the irrelevant branches.

It is not always faster, because it does not guarantee to prune, if fact in the worse case it will not prune at all and search absolutely the same tree as minimax and will be slower because of a/b values book-keeping. In the best case (maximum pruning) it allows to search a tree 2 times deep at the same time. For a random tree it can search 4/3 times deeper for the same time.

Move ordering can be implemented in a couple of ways:

- you have a domain expert who gives you suggestion of what moves are better. For example in chess promotion of a pawn, capturing high value pieces with lower value piece are on average good moves. In checkers it is better to kill more checkers in a move then less checker and it is better to create a queen. So your move generation function return better moves before

- you get the heuristic of how good is the move from evaluating the position at the 1 level of depth smaller (your shallow search / iterative deepening). You calculated the evaluation at the depth n-1, sorted the moves and then evaluate at the depth n.

The second approach you mentioned has nothing to do with a move ordering. It has to do with a fact that evaluation function can be expensive and many positions are evaluated many time. To bypass this you can store the values of the position in hash once you calculated it and reuse it later.

Related videos on Youtube

Salvador Dali

I am a Software Engineer in the Google Search Growth team. I use Tensorflow and TFX to analyze search data and Go to write data pipelines. This is my personal profile which has absolutely nothing to do with my employer.

Updated on June 04, 2022Comments

-

Salvador Dali almost 2 years

I have a basic implementation of alpha-beta pruning but I have no idea how to improve the move ordering. I have read that it can be done with a shallow search, iterative deepening or storing the bestMoves to transition table.

Any suggestions how to implement one of these improvements in this algorithm?

public double alphaBetaPruning(Board board, int depth, double alpha, double beta, int player) { if (depth == 0) { return board.evaluateBoard(); } Collection<Move> children = board.generatePossibleMoves(player); if (player == 0) { for (Move move : children) { Board tempBoard = new Board(board); tempBoard.makeMove(move); int nextPlayer = next(player); double result = alphaBetaPruning(tempBoard, depth - 1, alpha,beta,nextPlayer); if ((result > alpha)) { alpha = result; if (depth == this.origDepth) { this.bestMove = move; } } if (alpha >= beta) { break; } } return alpha; } else { for (Move move : children) { Board tempBoard = new Board(board); tempBoard.makeMove(move); int nextPlayer = next(player); double result = alphaBetaPruning(tempBoard, depth - 1, alpha,beta,nextPlayer); if ((result < beta)) { beta = result; if (depth == this.origDepth) { this.bestMove = move; } } if (beta <= alpha) { break; } } return beta; } } public int next(int player) { if (player == 0) { return 4; } else { return 0; } } -

FedericoCapaldo over 7 yearsgiven the code sample in the question, could you please provide a possible implementation or the sorting please (therefore both sorting and calling recursively on the sorted list)? I am confused on how to implement that.

FedericoCapaldo over 7 yearsgiven the code sample in the question, could you please provide a possible implementation or the sorting please (therefore both sorting and calling recursively on the sorted list)? I am confused on how to implement that. -

mLstudent33 almost 3 yearsGreat intuitive answer on the theory although I'll have to learn hash map for the second part. For Python, I'm thinking of using a nested dictionary something like ` {depth:{node:score}}`

![[AI] Thuật toán Minimax, alpha-beta](https://i.ytimg.com/vi/CAEI_J50B18/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLBVkmN8johtO1k0nNpAe5CcXr0qUw)