Approach for REST request with long execution time?

Solution 1

Assuming that you can configure HTTP timeouts using whatever framework you choose, you could issue the request via a GET and just hang for 5 minutes.

However, it may be more flexible to initiate the execution via a POST, get a receipt (a number, an ID, whatever), and then perform a GET using that receipt 5 minutes later (retrying as needed, given that your procedure won't take exactly 5 minutes every time). If the request is still in progress, return an appropriate HTTP status code (404 perhaps, but then what would you return for a GET with a nonexistent receipt?); otherwise, return the results.
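The POST-then-poll flow described above can be sketched on the client side roughly as follows. This is a minimal sketch, not a definitive implementation: `run_long_job`, `submit`, and `fetch` are hypothetical names, the two callables stand in for the real HTTP POST and GET calls, and the "still in progress" status is left as a parameter (defaulting to 202 as an assumption) because the answer leaves that choice open.

```python
import time
from typing import Callable, Tuple


def run_long_job(
    submit: Callable[[], str],
    fetch: Callable[[str], Tuple[int, str]],
    pending_status: int = 202,  # assumption: the answer does not fix this code
    interval_s: float = 30.0,
    max_attempts: int = 20,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Start a job, then poll with its receipt until a result is available.

    `submit` performs the POST and returns the receipt; `fetch` performs the
    GET for that receipt and returns (status_code, body).
    """
    receipt = submit()
    for _ in range(max_attempts):
        status, body = fetch(receipt)
        if status == 200:
            return body  # the result is ready
        if status != pending_status:
            raise RuntimeError(f"unexpected status {status} for receipt {receipt}")
        sleep(interval_s)  # still running: wait before asking again
    raise TimeoutError(f"no result for receipt {receipt} after {max_attempts} attempts")
```

Passing `sleep` and the transport functions as arguments keeps the retry logic independent of any particular HTTP library and makes it easy to exercise without a network.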

Solution 2

This is one approach.

Create a new request to perform ProcessXYZ

POST /ProcessXYZRequests

201-Created

Location: /ProcessXYZRequest/987

If you want to see the current status of the request:

GET /ProcessXYZRequest/987

<ProcessXYZRequest Id="987">

<Status>In progress</Status>

<Cancel method="DELETE" href="/ProcessXYZRequest/987"/>

</ProcessXYZRequest>

When the request is finished, you would see something like:

GET /ProcessXYZRequest/987

<ProcessXYZRequest>

<Status>Completed</Status>

<Results href="/ProcessXYZRequest/Results"/>

</ProcessXYZRequest>

Using this approach, you can easily imagine what the following requests would give:

GET /ProcessXYZRequests/Pending

GET /ProcessXYZRequests/Completed

GET /ProcessXYZRequests/Failed

GET /ProcessXYZRequests/Today
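On the server side, the request resource above could be backed by something like the following. This is a rough in-memory sketch under stated assumptions: `RequestStore` and its methods are hypothetical names, the methods return status codes and paths rather than speaking real HTTP, and the `"Failed"` state is inferred from the `/Failed` listing rather than shown in the answer.

```python
import itertools
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List, Optional, Tuple


class RequestStore:
    """In-memory stand-in for the ProcessXYZRequest resources (hypothetical)."""

    def __init__(self, work: Callable[[Any], Any], max_workers: int = 2) -> None:
        self._work = work
        self._ids = itertools.count(1)
        self._jobs: Dict[int, Dict[str, Any]] = {}
        self._lock = threading.Lock()
        self._pool = ThreadPoolExecutor(max_workers=max_workers)

    def create(self, payload: Any) -> Tuple[int, str]:
        """Models POST /ProcessXYZRequests: returns (201, Location path)."""
        with self._lock:
            job_id = next(self._ids)
            self._jobs[job_id] = {"status": "In progress", "result": None}
        self._pool.submit(self._run, job_id, payload)  # run in the background
        return 201, f"/ProcessXYZRequest/{job_id}"

    def _run(self, job_id: int, payload: Any) -> None:
        try:
            update = {"status": "Completed", "result": self._work(payload)}
        except Exception as exc:  # record the failure instead of losing it
            update = {"status": "Failed", "result": str(exc)}
        with self._lock:
            self._jobs[job_id] = update

    def get(self, job_id: int) -> Tuple[int, Optional[Dict[str, Any]]]:
        """Models GET /ProcessXYZRequest/{id}: (200, state) or (404, None)."""
        with self._lock:
            job = self._jobs.get(job_id)
        return (200, dict(job)) if job is not None else (404, None)

    def list_by_status(self, status: str) -> List[int]:
        """Models listings such as GET /ProcessXYZRequests/Completed."""
        with self._lock:
            return sorted(i for i, j in self._jobs.items() if j["status"] == status)

    def close(self) -> None:
        """Wait for running jobs to finish and release the worker threads."""
        self._pool.shutdown(wait=True)
```

Because the job state lives behind an identifier, a GET for an unknown identifier can return 404 without being confused with a request that is merely still running.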

Solution 3

As Brian Agnew points out, 5 minutes is entirely manageable, if somewhat wasteful of resources, if one can control timeout settings. Otherwise, at least two requests must be made: The first to get the result-producing process rolling, and the second (and third, fourth, etc., if the result takes longer than expected to compile) to poll for the result.

Brian Agnew and Darrel Miller both suggest similar approaches for the two(+)-step approach: POST a request to a factory endpoint, starting a job on the server, and later GET the result from the returned result endpoint.

While the above is a very common solution, and indeed adheres to the letter of the REST constraints, it smells very much of RPC. That is, rather than saying, "provide me a representation of this resource", it says "run this job" (RPC) and then "provide me a representation of the resource that is the result of running the job" (REST). EDIT: I'm speaking very loosely here. To be clear, none of this explicitly defies the REST constraints, but it does very much resemble dressing up a non-RESTful approach in REST's clothing, losing out on its benefits (e.g. caching, idempotency) in the process.

As such, I would rather suggest that when the client first attempts to GET the resource, the server should respond with 202 "Accepted" (http://www.w3.org/Protocols/rfc2616/rfc2616-sec10.html#sec10.2.3), perhaps with "try back in 5 minutes" somewhere in the response entity. Thereafter, the client can poll the same endpoint to GET the result, if available (otherwise return another 202, and try again later).

Some additional benefits of this approach: single-use resources (such as jobs) are not created unnecessarily; two separate endpoints (factory and result) need not be queried; and the second endpoint need not be determined by parsing the response from the first, which keeps the client simpler. Moreover, results can be cached "for free" (code-wise). Set the cache expiration time in the response header according to how long the results are "valid", in some sense, for your problem domain.
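A single-endpoint handler along these lines might look like the sketch below. It is only an illustration under assumptions: `LazyResource` is a hypothetical name, `get()` returns a `(status, headers, body)` tuple instead of writing a real HTTP response, and `Cache-Control: max-age` is one way (assumed here) to express the cache expiration mentioned above.

```python
import threading
from typing import Callable, Dict, Optional, Tuple


class LazyResource:
    """One endpoint: 202 while the result is being computed, 200 afterwards."""

    def __init__(self, compute: Callable[[], str], max_age_s: int = 3600) -> None:
        self._compute = compute
        self._max_age_s = max_age_s
        self._lock = threading.Lock()
        self._state = "idle"  # idle -> running -> done
        self._result: Optional[str] = None
        self._thread: Optional[threading.Thread] = None

    def get(self) -> Tuple[int, Dict[str, str], str]:
        """Models GET on the resource; the first call starts the computation."""
        with self._lock:
            if self._state == "done":
                headers = {"Cache-Control": f"max-age={self._max_age_s}"}
                return 200, headers, self._result or ""
            if self._state == "idle":
                self._state = "running"
                self._thread = threading.Thread(target=self._run, daemon=True)
                self._thread.start()
            return 202, {}, "try back in 5 minutes"

    def _run(self) -> None:
        # Failure handling is omitted: if compute() raises, the resource
        # stays in the "running" state in this sketch.
        result = self._compute()
        with self._lock:
            self._result = result
            self._state = "done"

    def wait_until_done(self, timeout: Optional[float] = None) -> None:
        """Testing helper: block until the background computation ends."""
        if self._thread is not None:
            self._thread.join(timeout)
```

Repeated GETs while the computation is running do not start a second computation; they simply receive another 202.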

I wish I could call this a textbook example of a "resource-oriented" approach, but, perhaps ironically, Chapter 8 of "RESTful Web Services" suggests the two-endpoint, factory approach. Go figure.

Solution 4

If you control both ends, then you can do whatever you want. E.g. browsers tend to launch HTTP requests with "connection close" headers so you are left with fewer options ;-)

Bear in mind that if there are NATs or firewalls in between, connections may be dropped if they stay inactive for some time.

Could I suggest registering a "callback" procedure? The client issues the request to the server along with a "callback endpoint" and gets a "ticket". Once the server finishes, it calls back the client; alternatively, the client can check the request's status using the ticket identifier.
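The ticket-plus-callback idea can be sketched as below. This is a minimal in-process illustration, not the answer's own implementation: `TicketService` is a hypothetical name, and the `callback` argument is a plain Python callable standing in for what would, over HTTP, be a request from the server to the client's callback endpoint.

```python
import threading
import uuid
from typing import Any, Callable, Dict


class TicketService:
    """Accepts work, hands back a ticket, and calls the client back when done."""

    def __init__(self, work: Callable[[Any], Any]) -> None:
        self._work = work
        self._lock = threading.Lock()
        self._status: Dict[str, str] = {}

    def submit(self, payload: Any, callback: Callable[[str, Any], None]) -> str:
        """Register the request and return a ticket immediately."""
        ticket = uuid.uuid4().hex
        with self._lock:
            self._status[ticket] = "pending"  # recorded before the worker starts
        worker = threading.Thread(
            target=self._run, args=(ticket, payload, callback), daemon=True
        )
        worker.start()
        return ticket

    def _run(self, ticket: str, payload: Any, callback: Callable[[str, Any], None]) -> None:
        result = self._work(payload)
        with self._lock:
            self._status[ticket] = "completed"
        callback(ticket, result)  # stand-in for calling the client's endpoint

    def status(self, ticket: str) -> str:
        """Lets the client check progress by ticket instead of waiting."""
        with self._lock:
            return self._status.get(ticket, "unknown")
```

The status lookup covers the fallback mentioned above: a client that cannot receive callbacks, for example because it sits behind a firewall, can still check on its request by ticket.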

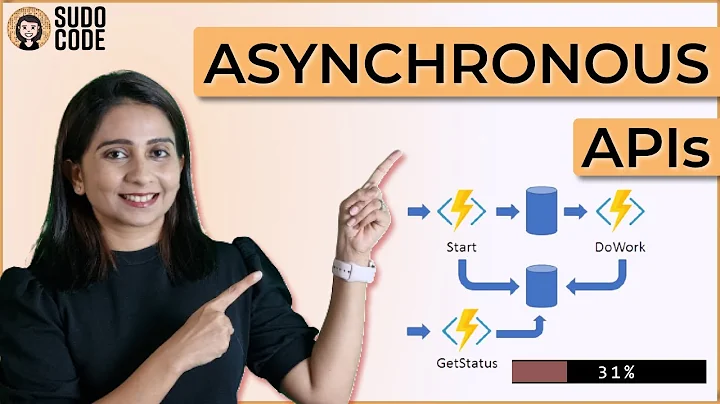

Related videos on Youtube

Marcus Leon

Director Clearing Technology, Intercontinental Exchange. Develop the clearing systems that power ICE/NYSE's derivatives markets.

Updated on November 05, 2020

Comments

-

Marcus Leon over 3 years

We are building a REST service that will take about 5 minutes to execute. It will only be called a few times a day by an internal app. Is there an issue with using a REST (i.e., HTTP) request that takes 5 minutes to complete?

Do we have to worry about timeouts? Should we be starting the request in a separate thread on the server and have the client poll for the status?

-

Merlyn Morgan-Graham about 13 years

Isn't this stateful (on the server), and isn't stateful behavior against REST ideals?

-

Steven Huwig over 12 years

@Merlyn Morgan-Graham: it's not statefulness that's "against REST ideals," it's hidden state. Since the state is available as a resource at a given URL, this is fine.

-

Vic Seedoubleyew over 4 years

This is interesting, thanks again for sharing. I might need to think more about it, but off the top of my head, one downside I see to the single-endpoint approach would be that one would have to define a fixed-time cache duration, which would mean that "retrying" the whole operation before the cache expires would not result in a real retry. In some use cases this might confuse users.
