Ask Google to remove thousands of pages from its index after cleaning up a hacked site

Solution 1

Google will automatically remove pages that now return a 404 status. They will get removed 24 hours after Googlebot next tries to crawl them. If you want to speed up the process slightly, return a "410 Gone" status for those URLs instead. Then they will be removed as soon as they are next crawled, without the one-day grace period.
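Before waiting on Googlebot, it can help to confirm which status the cleaned-up URLs really return. The following is a minimal sketch, assuming curl is installed; the function names `http_status` and `report_statuses` are made up for illustration.

```shell
# Hypothetical helper: print the HTTP status code a URL currently returns.
# -s silences progress output, -o /dev/null discards the body,
# -w '%{http_code}' prints only the numeric status.
http_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Hypothetical helper: read URLs on stdin (one per line) and print
# "<status> <url>" for each, so 404 and 410 responses are easy to spot.
report_statuses() {
  while IFS= read -r url; do
    # Skip blank lines.
    [ -n "$url" ] || continue
    printf '%s %s\n' "$(http_status "$url")" "$url"
  done
}
```

For example, `report_statuses < dead-urls.txt` would print one line per URL, where `dead-urls.txt` is an assumed file holding one full URL per line.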

The only problem is that it may take Googlebot months to get around to crawling all those dead pages. If you want to speed the crawling up, you have two options:

- Submit each URL individually to the Google Search Console URL removal tool.

- Create a temporary sitemap of all the dead URLs and add that sitemap to Google Search Console. (reference)
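For the sitemap option, a small script can turn a list of dead paths into a sitemap file. This is a rough sketch rather than a definitive implementation: the function name `make_dead_url_sitemap` is hypothetical, and it assumes the paths contain only letters, digits, and dashes, so no XML escaping is needed.

```shell
# Hypothetical helper: read URL paths (one per line) on stdin and print a
# minimal XML sitemap. $1 is the site origin, e.g. https://example.com
make_dead_url_sitemap() {
  origin=$1
  printf '%s\n' '<?xml version="1.0" encoding="UTF-8"?>'
  printf '%s\n' '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
  while IFS= read -r url_path; do
    # Skip blank lines; paths are assumed to need no XML escaping.
    [ -n "$url_path" ] || continue
    printf '  <url><loc>%s%s</loc></url>\n' "$origin" "$url_path"
  done
  printf '%s\n' '</urlset>'
}
```

Usage could look like `make_dead_url_sitemap https://example.com < dead-paths.txt > dead-sitemap.xml`, where both file names are placeholders. The sitemap protocol limits a single file to 50,000 URLs, so a list of around 11,000 fits in one file.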

To get a list of all the URLs, I would suggest using your server logs. They will have a more complete record of the URLs than a site: search or Google Search Console. I would use grep on the command line. If all the URLs are similar to the URL you posted, you could come up with a regular expression pattern for them. That URL is 31 characters long with letters, dashes, and digits, and it ends in a number. Maybe something like this, which looks for 15 to 30 of those characters followed by a dash and 4 to 10 digits:

grep -oE '/[0-9a-z-]{15,30}-[0-9]{4,10}' /var/log/apache2/example.com.log
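The same URL will usually appear in the log many times, so the matches are worth deduplicating. A minimal sketch, assuming the request path appears as plain text on each log line; the function name `extract_dead_paths` is made up for illustration.

```shell
# Hypothetical helper: read access-log lines on stdin and print each
# distinct path that matches the hacked-URL pattern, one per line.
extract_dead_paths() {
  # -o prints only the matching part, -E enables extended regexes.
  # The trailing "-" inside the brackets is a literal dash.
  grep -oE '/[0-9a-z-]{15,30}-[0-9]{4,10}' | sort -u
}

# Usage (log path taken from the example above):
#   extract_dead_paths < /var/log/apache2/example.com.log > dead-paths.txt
```

The pattern can also match legitimate pages that happen to have a similar shape, so the resulting list is worth reviewing by hand before submitting anything to Google.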

Solution 2

This problem won't be solved by pinging Google to recrawl your site or by resubmitting the sitemap, because that only gets the new URLs indexed and does not delete the old/dummy ones.

The Webmaster tool used for URL removal is the only way to ask Google to remove links from its index. However, it only allows one link at a time to be submitted for removal.

To overcome this, you can use a Chrome extension to automate the process. It is a paid tool (about $9) on the Chrome extensions store, but you can get it for free on GitHub.

- Go to this link.

- Download the .zip file.

- Extract it and import it into Chrome's extensions.

Now reload your URL removal tab and you will see an option to upload a .csv or .xls file.

Download the list of URLs that you need to delete from Search Console and upload the file here. (These links will be excluded from your sitemap, so you should be able to find the list of these URLs easily.)

Let the tool do its job. It will take a while, depending on the number of links you have.

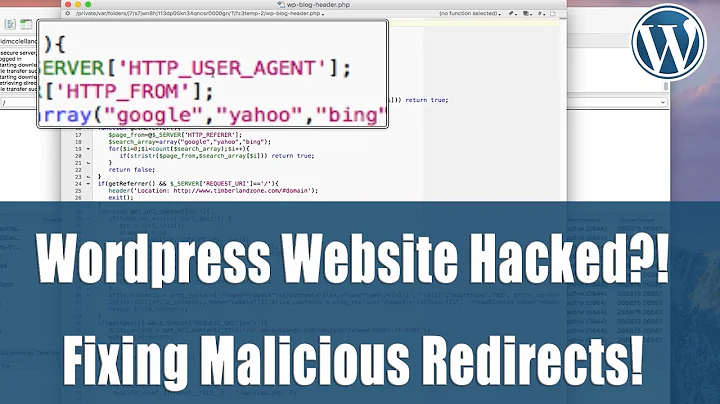

Rad

Updated on September 18, 2022

Comments

Rad over 1 year

I've had my website hacked. I have cleaned it, searched Google for site:example.com, and made a list of all the results. I removed the links that are OK and made a list of the ones in the format of example.com/ad0-b1fermarte54eb17chb-1244425. I've submitted these links to Google for removal, and Google removed them.

The problem is that after my initial search, which returned 200 URLs that we've since removed, other new links appeared out of nowhere. These links don't actually work anymore and return a 404 page, but Google still has them in its results.

We've tried deleting our sitemap and re-submitting it. Google has crawled it, but again, it has not removed the dummy search results.

Looking in our Google webmaster console, we saw in the coverage tab that the number of indexed links spiked from 230 (normal usage) to 10,900 links. I'm guessing these are all dummy links that were infected and have now been cleaned.

Is there any magic way of having Google remove dead links automatically? Or a way to force it to recrawl the entire website?

Stephen Ostermiller over 4 years

Beda Schmid almost 3 years

You can do nothing. Google will decide if and when to recrawl or remove your links. If you are lucky and your site is very busy and considered authoritative by garbage like Google, it will do that fast and thoroughly. If you are not so lucky and belong to the 99% of site owners, then your site is doomed to be ignored, because all links will be considered garbage by Google, triggering a spiral effect of not indexing (because it is considered garbage) and so forth. You are better off killing the site. Unfortunately, that is what happens when we give 99% market share to one big giant: Google.

Rad over 4 years

Hey @Anuvesh, thanks so much for your fast reply. I'm actually in the process of doing this now. The only problem I've found is that I can't download more than 1000 links from Google Search Console. Is there any workaround for that? Best regards

Anuvesh over 4 years

Use this plugin for Excel: analyticsedge.com/product.

Anuvesh over 4 years

Follow these steps: searchengineland.com/…

Rad over 4 years

That's actually exactly what I did before posting here, how strange is that? The problem is that I got stuck at step #8: I could not find the option to "Write worksheet", and the results were only 1.3k or so out of 11k (using the Basic version of Analytics Edge).

Ferrybig over 4 years

The download link on that website downloads a zip file, not a rar file.

Beda Schmid almost 3 years

Both this answer and the answer below are wrong. Neither "Submit each URL individually to the Google Search Console URL removal tool." nor "The Webmaster tool used for URL removal", which links to google.com/webmasters/tools/removals, removes the URL. It only HIDES it, and it is back a day or two later because the URLs are not even recrawled. When this happens, you are 100% subject to Google's will, and your unwanted URLs may still pop up on the net years later, because if Google decides your site is "not worth indexing" it will simply not crawl it anymore.

Stephen Ostermiller almost 3 years

@BedaSchmid I haven't used it in several years, but my experience with the URL removal tool was that it quickly removes a URL from the search results for about six months, which matches what Google's documentation says. Googlebot recrawled the pages within 6 months and permanently removed them. Have you tried this recently and gotten different results?

Beda Schmid almost 3 years

Yes, I made a mistake 8 months ago and added a testing subdomain, which was indexed by Google. The subdomain has now been deleted for approximately 7 months, and Google still indexes it. I removed hundreds of URLs through that tool and it removed them, only to add them back a few days later. Google's community support called that expected and said that “I have to wait, maybe more than a year, depending on how eager Google's bots are to visit my site”. Of course, with a ton of subsite 404s, Google probably simply doesn't visit anymore. At least, I barely see Googlebot in the logs anymore.

Beda Schmid almost 3 years

Btw, thanks for that doc. Indeed, it states six months! This seems to be a bug then? My removals get approved and pop right back a few days later, even though the site has been 100% gone for months! Where would we report bugs to Google? 😩

Stephen Ostermiller almost 3 years

There isn't a great way to report bugs to Google.

Beda Schmid almost 3 years

I discovered that Search Console also offers a remove-from-cache option. That seems to work better, at least so far. The other tool (accessible without a Search Console property) doesn't even allow wildcard removal and seems to be pretty much the buggy component in this game. I will update again in a few weeks with results.
