Bash command line and input limit

Solution 1

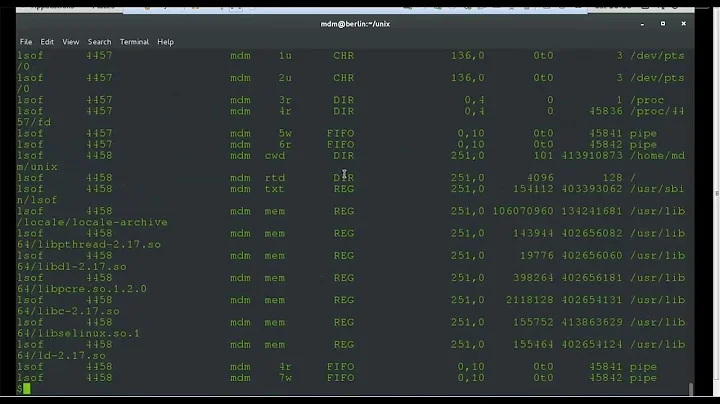

The limit on the length of a command line is not imposed by the shell, but by the operating system. This limit is usually in the range of a few hundred kilobytes. POSIX calls this limit ARG_MAX, and on POSIX-conformant systems you can query it with

$ getconf ARG_MAX # Get argument limit in bytes

E.g. on Cygwin this is 32000, and on the different BSDs and Linux systems I use it is anywhere from 131072 to 2621440.

If you need to process a list of files exceeding this limit, you might want to look at the xargs utility, which calls a program repeatedly with a subset of arguments not exceeding ARG_MAX.
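As a minimal sketch of the xargs batching described above, the function below prints how many file names each xargs invocation receives. The name batch_sizes is hypothetical, and the sketch assumes a find that supports -print0 and an xargs that supports -0 (both are available on GNU and BSD systems, though historically not required by POSIX).

```shell
#!/usr/bin/env bash
# Hypothetical helper: show how xargs splits a long file list into batches.
# find emits NUL-terminated names so unusual file names survive intact;
# xargs -0 groups them into batches that fit within its size limit and
# runs the given command once per batch.
batch_sizes() {
    # "$#" inside the inner sh is the number of names in that batch.
    find "$1" -type f -print0 | xargs -0 sh -c 'echo "$#"' sh
}

# Usage: batch_sizes /path/to/directory
```

A small directory produces a single line of output; a directory whose names add up to more than one batch produces several lines, one per invocation.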

To answer your specific question: yes, it is possible to attempt to run a command with too long an argument list. The shell will fail with a message along the lines of "argument list too long".

Note that the input to a program (as read on stdin or any other file descriptor) is not limited, other than by available program resources. So if your shell script reads a string into a variable, you are not restricted by ARG_MAX. The restriction also does not apply to shell builtins.
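The point about stdin can be checked with a short sketch. It assumes bash (for printf -v and here-strings) and an ARG_MAX of a few megabytes at most, so that building the string is cheap; the variable names are illustrative only.

```shell
#!/usr/bin/env bash
arg_max=$(getconf ARG_MAX)

# Build a string one byte longer than ARG_MAX using only the printf builtin.
printf -v big '%*s' "$(( arg_max + 1 ))" ''

# The string travels over stdin, so the exec() of wc sees only a short
# argument list and the ARG_MAX check never involves the large string.
bytes=$(wc -c <<< "$big")

# The here-string appends one newline, hence ARG_MAX + 2 bytes in total.
echo "ARG_MAX is $arg_max; wc read $(( bytes )) bytes from stdin"
```

The variable is deliberately not exported: an exported variable of that size would become part of the environment and count against the limit.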

Solution 2

OK, denizens. I have accepted the command line length limits as gospel for quite some time. So, what to do with one's assumptions? Naturally, check them.

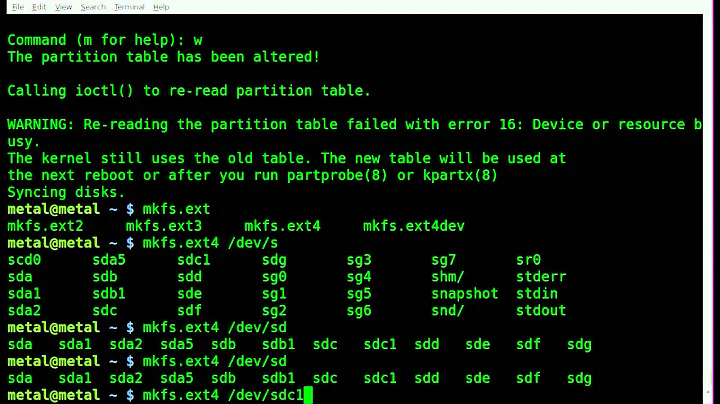

I have a Fedora 22 machine at my disposal (meaning: Linux with bash 4). I have created a directory with 500,000 inodes (files) in it, each with an 18-character name. The resulting command line is 9,500,000 characters long. The files were created like this:

seq 1 500000 | while read -r digit; do
    touch "$(printf 'abigfilename%06d' "$digit")"
done

And we note:

$ getconf ARG_MAX

2097152

Note however I can do this:

$ echo * > /dev/null

But this fails:

$ /bin/echo * > /dev/null

bash: /bin/echo: Argument list too long

I can run a for loop:

$ for f in *; do :; done

which is also handled entirely inside the shell (for is a shell keyword, so no exec is involved).
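The same comparison can be sketched without creating 500,000 files, by generating the words in memory. This is only an illustration under stated assumptions: compare_echo is a hypothetical name, seq is assumed to be installed, /bin/echo is assumed to exist, and the guard value of 8 MiB is an arbitrary choice to avoid building an impractically large list on systems that report a very large ARG_MAX.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run builtin echo and /bin/echo on a list > ARG_MAX.
compare_echo() {
    local arg_max n words
    arg_max=$(getconf ARG_MAX)
    # Guard (an assumption of this sketch, not a system rule).
    if [ "$arg_max" -gt 8388608 ]; then
        echo "ARG_MAX is $arg_max; skipping demonstration"
        return 0
    fi
    # 19 bytes per word: 18 characters plus a separator, as in the answer.
    n=$(( arg_max / 19 + 1000 ))
    words=$(printf 'abigfilename%06d\n' $(seq 1 "$n"))

    # Unquoted on purpose: word splitting turns $words into n arguments.
    if echo $words > /dev/null; then
        echo "builtin echo: ok"
    fi
    if /bin/echo $words > /dev/null 2>&1; then
        echo "/bin/echo: ok"
    else
        echo "/bin/echo: failed (exit status $?)"
    fi
}

result=$(compare_echo)
echo "$result"
```

On a system that behaves like the Fedora machine above, the builtin is expected to succeed and the external command to fail, but the sketch reports whichever outcome it observes.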

A careful reading of the documentation for ARG_MAX shows that it is defined as the "Maximum length of argument to the exec functions." This means that without a call to exec, there is no ARG_MAX limitation, which explains why shell builtins are not restricted by ARG_MAX.

And indeed, I can ls my directory if my argument list is 109948 files long, or about 2,089,000 characters (give or take). Once I add one more file with an 18-character name, though, I get an Argument list too long error. So ARG_MAX is working as advertised: the exec fails with more than ARG_MAX characters on the argument list, including, it should be noted, the environment data.
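Because the environment shares the same budget, a rough estimate of the space left for arguments can be sketched as follows. The figure is only approximate: the exact accounting (pointers, alignment, per-string limits) varies by operating system, and the variable names here are illustrative.

```shell
#!/usr/bin/env bash
arg_max=$(getconf ARG_MAX)

# Approximate environment size: env prints one NAME=value entry per line,
# so the newline stands in for the NUL terminator of each string.
env_bytes=$(( $(env | wc -c) ))

headroom=$(( arg_max - env_bytes ))

echo "ARG_MAX:                     $arg_max bytes"
echo "environment (approx):        $env_bytes bytes"
echo "left for arguments (approx): $headroom bytes"
```

Exporting a large variable and rerunning the script should shrink the last figure by about the size of that variable.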

Solution 3

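There is a buffer limit on line input of something like 1024 characters (comments below report roughly 4,000 to 5,000 without Readline). The read will simply hang partway through a paste or long input. To solve this, use the -e option.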

http://linuxcommand.org/lc3_man_pages/readh.html

-e use Readline to obtain the line in an interactive shell

Change your read to read -e and the annoying line-input hang goes away.


Derek Halden

I enjoy long walks on the beach, and by walks, I mean nights, and by beach, I mean web.

Updated on April 29, 2022

Comments

-

Derek Halden about 2 years

Is there some sort of character limit imposed in bash (or other shells) for how long an input can be? If so, what is that character limit?

I.e. Is it possible to write a command in bash that is too long for the command line to execute? If there is not a required limit, is there a suggested limit?

-

Charles Duffy over 8 years

The input limit is very different from the OS-level argument limit (note that some things other than arguments, such as environment variables, also count towards that one). The generated command passed to the operating system can have more or fewer characters than the shell command that generated it.

-

-

Krzysztof Jabłoński about 9 years

Fine answer, yet I'd like a clarification. If I had a construct cmd <<< "$LONG_VAR" and the LONG_VAR value exceeded the limit, would it blow up my command?

-

Jens about 9 years

@KrzysztofJabłoński Unlikely, because the contents of LONG_VAR are passed on stdin, and that is done entirely in the shell; it is not expanded as an argument to cmd, so the ARG_MAX limit for fork()/exec() does not come into play. It is easy to try yourself: create a variable with contents exceeding ARG_MAX and run your command.

-

Mike S over 8 years

Here's the clarification, for the record: for an 8 megabyte m4a file, I did: blah="$(cat /home/schwager/Music/Recordings/20090420\ 131623.m4a)"; cat <<< $blah >/dev/null. Note no error.

-

Charles Duffy over 8 years

Hmm. I hadn't read the existing answer to imply that builtins were subject to the constraint in question, but can certainly see how someone could.

-

Mike S over 8 years

Yes, I think it's difficult to remember, especially for newer command-line aficionados, that the situation of calling a bash builtin vs. fork/exec'ing a command is different in non-obvious ways. I wanted to make that clear. One question that I invariably get in a job interview (as a Linux Sysadmin) is, "So I got a bunch of files in a directory. How do I loop over all of them..." The questioner is invariably driving towards the line length limit and wants a find/while or xargs solution. In the future I'm going to say, "ah hell, just use a for loop. It can handle it!" :-)

-

Lester Cheung over 8 years

@MikeS while you could do a for loop, if you can use a find-xargs combo you will fork a lot less and will be faster. ;-)

-

Mike S over 8 years

@LesterCheung for f in *; do echo $f; done won't fork at all (all builtins). So I don't know that a find-xargs combo will be faster; it hasn't been tested. Indeed, I don't know what the OP's problem set is. Maybe find /path/to/directory won't be useful to him because it will return the file's pathname. Maybe he likes the simplicity of a for f in * loop. Regardless, the conversation is about line input limit, not efficiency. So let's stay on topic, which pertains to command line length.

-

Derek Halden almost 7 years

FWIW, the problem, as I recall, was just trying to write a shell in C, and determine how long I should allow inputs to be.

-

Gerrit over 6 years

Little caveat. The environment variables also count. From the sysconf manpage: "It is difficult to use ARG_MAX because it is not specified how much of the argument space for exec(3) is consumed by the user's environment variables."

-

neuralmer over 6 years

@user188737 I feel like it is a pretty big caveat in BUGS. For example, xargs on macOS 10.12.6 limits how much it tries to put in one exec() to ARG_MAX - 4096. So scripts using xargs might work, until one day when someone puts too much stuff in the environment. Running into this now (work around it with: xargs -s ???).

-

Chai T. Rex almost 6 years

This isn't about read: "I.e. Is it possible to write a command in bash that is too long for the command line to execute?"

-

Robert Siemer about 5 years

@Jens Your answer talks about fork()/exec() limitations, not about how much a shell can handle on an input line (interactive or not). So this is not answering the question. (I do see that some commands to the shell invoke other programs and thus arguments get passed there, but this is a different story.)

-

Jens about 5 years

@RobertSiemer I don't understand your request. Only the person who asked can un-accept. I also disagree with you that this is not answering the question. Shell input limits are a consequence of fork()/exec() limits since the shell is mostly using these system calls (unless handling builtins) to execute commands.

-

Jens about 5 years

@RobertSiemer In a comment to another answer, the person asking also states that he was writing a shell in C and wondering how much input for a command line should be allocated. I'd appreciate it if you considered taking back the downvote, thanks!

-

Robert Siemer about 5 years

@Jens Exactly! And how does your answer help with that? His shell has to establish its own limit (or be limited by hardware) on how long a line can be. And that contradicts your first sentence. There is no relation to ARG_MAX unless the shell author establishes one artificially. I'm aware of the fact that some lines will be calls to other programs and those exec() calls are limited by ARG_MAX, but not the line containing that command. With bash you can use lines longer than ARG_MAX and they even work if shell built-ins are used, for example.

-

Jens about 5 years

@RobertSiemer The shell simply queries ARG_MAX, either at run-time, or better at compile time in a "configure" type script. How does my answer not help? What answer would you give?

-

Robert Siemer about 5 years

@jens Why should the shell limit itself to ARG_MAX? I like the exercise Mike does in his “answer” (which shows that bash is not limited by ARG_MAX), even though it doesn’t answer the OP’s questions either.

-

Robert Siemer about 5 years

@Derek Could you un-accept this answer? It is not accurate, if I’m allowed to say. (The OS does not limit the length of an input line in a shell. The shell can/could read as much input as the memory can handle.)

-

Richard over 4 years

ARG_MAX on my OpenBSD was greater than ARG_MAX on my Linux machine, and in spite of that the EXACT code works on Linux but not OpenBSD.

-

Amir about 4 years

@ChaiT.Rex you are sort of correct, but here is the thing: try running Bash interactively without Readline, i.e. bash --noediting, and at the new prompt try running the command echo somereallylongword, where somereallylongword is longer than 4090 characters. Tried on Ubuntu 18.04, the word got truncated, so obviously it does have something to do with Readline not being enabled.

-

Mike S over 3 years

@Amir Interesting! You are correct! I attempted to edit the answer, but then I realized that the -e option doesn't apply to bash in this context (in bash, it exits the shell immediately on error). And I'm not sure why Paul pivoted to read. Anyway, there is a buffer limit of between 4,000 and 5,000 characters when bash is started with --noediting. That's a side effect that I didn't know about or expect.

-

user5359531 over 3 years

Is ARG_MAX the size in bytes or the number of characters?

-

Jens over 3 years

@user5359531 It's stated in the shaded part of my answer :-)

-

keen over 3 years

Upvote because read was the problem child I was chasing around the room and this answer got me to the right place. Thanks!

-

Maëlan almost 3 years

Fascinating! But then, does Bash impose a limit at all on the length of a command line, before or after expansion? The fact that “there is no maximum limit on the size of an array” (from man bash), and the fact that ulimit -a does not report anything related to line length, lead me to suspect that it does not.

-

Mike S almost 3 years

@Maëlan No, the limit is on the exec system call, so this would be a kernel limitation, not a Bash limitation.