Best sysctl.conf configuration for high load - extremely busy content streaming server

Performance tuning and identifying bottlenecks like this is a hard problem, and it frequently requires a lot of information to diagnose. The key is to walk through how a request is served and find which resource is being exhausted. You said the server becomes unresponsive for PHP while HTML is still served; that is an interesting data point. What is different between how those two are served? It might be subtle network buffer overruns, or it might be more basic than that: you might simply have exhausted the limit of 20 FastCGI child processes, so they are all busy serving data while new requests pile up in the listen queue (and eventually time out) waiting for a PHP FastCGI process to become free.
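To make the worker-exhaustion theory concrete, here is a rough back-of-the-envelope sketch. It assumes the layout described in the question (4 sockets with 20 PHP children each, so 80 in total if all of them are running) and that each streamed download holds one PHP child for the whole transfer. The request rate and transfer time are made-up illustrative numbers, not measurements from this server.

```shell
#!/bin/sh
# Rough estimate: how many PHP workers would be needed to keep up?
# Little's law: requests in flight = arrival rate * time each request takes.

# workers_needed RATE_PER_SEC SECONDS_PER_REQUEST
workers_needed() {
    echo $(( $1 * $2 ))
}

# Layout described in the question: 4 unix sockets, 20 children each.
total_workers=$(( 4 * 20 ))

# Hypothetical load: 10 new downloads per second, 30 seconds per download.
needed=$(workers_needed 10 30)

echo "workers available: $total_workers, workers needed: $needed"
if [ "$needed" -gt "$total_workers" ]; then
    echo "requests will queue (and may time out) waiting for a free PHP child"
fi
```

If the estimate lands well above the number of children, queueing in front of PHP would explain why static HTML stays fast while PHP requests stall.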

The real trick when trying to get visibility on the box is to log into the box when problems are occurring and start gathering information.

To find out how many PHP processes are running, you should be able to run something like this (the [p]hp pattern keeps grep from matching its own process):

ps auxgmww | grep '[p]hp'

And if you would like a count rather than counting them yourself, you can do something like this:

ps auxgmww | grep '[p]hp' | wc -l

Back to your original question about performance tuning: before changing sysctl.conf, look at what your server is telling you while the problem is occurring. You can capture that by running:

sysctl -a > sysctl.txt
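One way to narrow down that output is to take one snapshot while the server is healthy and another during a slowdown, then compare the two. The sketch below assumes both files were produced by sysctl -a in its usual "key = value" format. The function name and file names are placeholders of mine, not part of any tool.

```shell
#!/bin/sh
# sysctl_changes BEFORE AFTER
# Print every key whose value differs between two `sysctl -a` snapshots.
sysctl_changes() {
    awk -F ' = ' '
        NR == FNR { before[$1] = $2; next }          # first file: remember values
        ($1 in before) && before[$1] != $2 {         # second file: report changes
            printf "%s: %s -> %s\n", $1, before[$1], $2
        }
    ' "$1" "$2"
}

# Example (file names are placeholders):
#   sysctl -a > sysctl-healthy.txt     # taken while the server behaves
#   sysctl -a > sysctl-problem.txt     # taken during a slowdown
#   sysctl_changes sysctl-healthy.txt sysctl-problem.txt
```

Only the entries that report live state (fs.file-nr, for example) should change between snapshots, which leaves a much shorter list to read.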

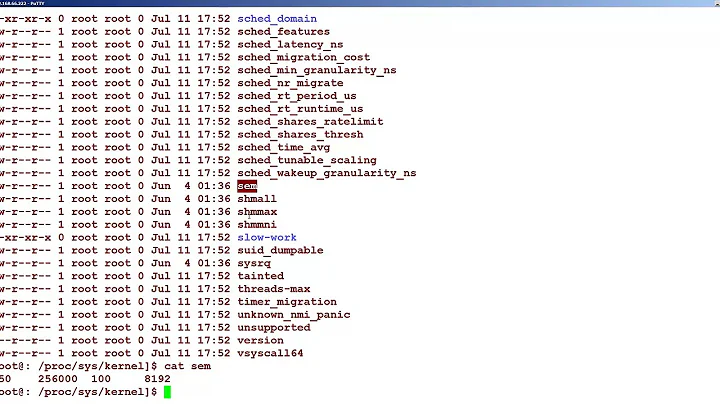

Then view your text file. It's a whole lot of data, but before tuning any given value, check whether the sysctl output reports what the system is currently using for that tunable. Open files are one example; here is a sample output:

fs.file-nr = 3456 0 102295

That tells us we are using 3456 file descriptors against a limit of 102295, so we're nowhere near the limit. If the first number had been in the 100000 range, that would tell you that you are running out of file descriptors and that this is what you need to tune.
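The same comparison can be scripted so it is quick to repeat during an incident. This is a minimal sketch, assuming the three-field format shown above (allocated, unused, maximum). The function name is my own, and on a live Linux box the input would come from /proc/sys/fs/file-nr.

```shell
#!/bin/sh
# fd_usage_percent "ALLOCATED UNUSED MAX"
# Print allocated file handles as a whole-number percentage of the limit.
fd_usage_percent() {
    set -- $1                 # split the three fields into $1 $2 $3
    echo $(( $1 * 100 / $3 ))
}

# Sample values from above; on a live box use:
#   fd_usage_percent "$(cat /proc/sys/fs/file-nr)"
fd_usage_percent "3456 0 102295"
```

With the sample values this prints 3, meaning about 3% of the limit is in use.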


Daniel Johnson

Updated on September 17, 2022

Comments

-

Daniel Johnson over 1 year

What is the best sysctl.conf configuration for a high-load, extremely busy content streaming server? The server fetches content from remote servers (Amazon, S3, etc.), then uses PHP to stream it dynamically to the user without saving it to the hard drive. PHP uses cURL to fetch the file and flush() to stream it at the same time, so there is not much hard drive work, only network and bandwidth.

The server is a quad-core Xeon with a 1 Gbit full-duplex NIC, 8 GB RAM, and 2 x 500 GB disks in RAID. Server memory usage and CPU load are pretty low.

We're running Debian Lenny and lighttpd2 on it (yes, I know it's not released yet :-) ) with PHP 5.3.6 and PHP FastCGI, with spawn-fcgi bound to 4 different Unix sockets with 20 children each. Max fcgi requests is 20, and the mod_balancer module in the lighttpd2 configuration balances the FastCGI requests among these 4 sockets in SQF (short queue first) mode.

Our servers use a lot of bandwidth, i.e. the network connection is busy all the time. After just 100 to 200 parallel connections, the server starts to slow down, eventually becomes unresponsive, and starts giving connection timeout errors. When we had cPanel, we never got timeout errors, so it cannot be a script issue. It must be a network configuration issue.

lighttpd2 configuration: worker processes = 8, keep alive requests is 32, keep alive idle timeout is 10 seconds, and max connections is 8192.

Our current sysctl.conf contents are:

    net.ipv4.tcp_fin_timeout = 1
    net.ipv4.tcp_tw_recycle = 1
    # Increase maximum amount of memory allocated to shm
    kernel.shmmax = 1073741824
    # This will increase the amount of memory available for socket input/output queues
    net.ipv4.tcp_rmem = 4096 25165824 25165824
    net.core.rmem_max = 25165824
    net.core.rmem_default = 25165824
    net.ipv4.tcp_wmem = 4096 65536 25165824
    net.core.wmem_max = 25165824
    net.core.wmem_default = 65536
    net.core.optmem_max = 25165824
    net.ipv4.tcp_syncookies = 1
    net.ipv4.tcp_max_orphans = 262144
    net.ipv4.tcp_max_syn_backlog = 262144
    net.ipv4.tcp_synack_retries = 2
    net.ipv4.tcp_syn_retries = 2
    # you shouldn't be using conntrack on a heavily loaded server anyway, but these are
    # suitably high for our uses, insuring that if conntrack gets turned on, the box doesn't die
    # net.ipv4.netfilter.ip_conntrack_max = 1048576
    # net.nf_conntrack_max = 1048576
    # For Large File Hosting Servers
    net.core.wmem_max = 1048576
    net.ipv4.tcp_wmem = 4096 87380 524288
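Note that the settings above assign net.core.wmem_max and net.ipv4.tcp_wmem twice. Since sysctl applies a file from top to bottom, the later, smaller values should be the ones in effect. A small sketch for spotting such repeats, assuming a file with one "key = value" setting per line (the function name is hypothetical):

```shell
#!/bin/sh
# duplicate_sysctl_keys FILE
# Print each key that is assigned more than once in a sysctl.conf-style file.
duplicate_sysctl_keys() {
    awk -F '=' '
        /^[ \t]*[#;]/ || /^[ \t]*$/ { next }      # skip comments and blank lines
        {
            key = $1
            gsub(/[ \t]/, "", key)                # strip whitespace around the key
            if (++seen[key] == 2) print key       # report on the second sighting
        }
    ' "$1"
}

# Example:
#   duplicate_sysctl_keys /etc/sysctl.conf
```

Commented-out lines such as the conntrack entries are ignored, so only active assignments are compared.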

- coredump (about 13 years ago): You first have to find what exactly is causing the unresponsiveness. It may not be anything related to sysctls. Check if there are processes choking, memory lacking, etc. strace the processes and see why/where they hang.

- Daniel Johnson (about 13 years ago): They don't hang. As I said, only .php files become dead; the server status page works fine.

- coredump (about 13 years ago): So you have to discover why the PHP files 'die'. Are you serving those from lighttpd?

- Daniel Johnson (about 13 years ago): And sometimes they don't die even after 200 connections, but take a hell of a lot of time to load the PHP file. Right now, for example, there are 225 connections and it is still serving the PHP files, but really slowly, around 30 seconds to load the phpinfo page. Check: dlserv6.linksnappy.com/info.php

- Daniel Johnson (about 13 years ago): @coredump, yes, from lighttpd with FastCGI, but how do I find out why they're dying? Oh wait, I just ran a benchmark: I flooded index.php from a different server at 500 requests per second for 600 seconds, and it got a bit slow, but not that slow. But when a user downloads a file, with just 100 reqs per sec, it almost kills the server. So I guess it's a network config issue now, because it can serve normal PHP files with almost no problem?

- coredump (about 13 years ago): @bilal, you must check how everything works together. It can be a locking issue or a shared resource (memory/IRQ) problem. It's not trivial to find the solution to a problem like this.

- SiXoS (almost 13 years ago): Could it not be that all PHP children are busy serving requests, so new requests are queued up and sometimes time out? Do you run FastCGI? If so, show us 10-fastcgi.conf

-