Bjoern is 4x faster than Nginx - how to tweak Nginx to handle Bjoern proxy_requests without performance loss?

I think that the answer is given in the article below.

https://news.ycombinator.com/item?id=2036661

For example, let's consider this thought experiment: Someone here mentioned Mongrel2 getting 4000 req/sec. Let's replace the name "Mongrel2" with "Server A" because this thought experiment is not limited to Mongrel2, but all servers. I assume he's benchmarking a hello world app on his laptop. Suppose that a hypothetical Server B gets "only" 2000 req/sec. One might now (mistakenly) conclude that:

Server B is a lot slower.

One should use Server A instead of Server B in high-traffic production environments.

Now put Server A behind HAProxy. HAProxy is known as a high-performance HTTP proxy server with minimal overhead. Benchmark this setup, and watch req/sec drop to about 2000-3000 (when benchmarked on a typical dual core laptop).

What just happened? Server B appears to be very slow. But the reality is that both Server A and Server B are so fast that doing even a minimum amount of extra work will have a significant effect on the req/sec number. In this case, the overhead of an extra context switch and a read()/write() call to the kernel is already enough to make the req/sec number drop by half. Any reasonably complex web app logic will make the number drop so much that the performance difference between the different servers becomes negligible.
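To make the arithmetic in the thought experiment concrete, here is a minimal sketch that models throughput as the inverse of the time spent per request. The per-request costs are hypothetical values derived from the 4000 and 2000 req/sec figures in the quote; the 0.25 ms proxy overhead and the 20 ms of application logic are assumptions chosen only for illustration, and the model ignores concurrency entirely.

```python
def requests_per_second(server_ms, overhead_ms=0.0, app_ms=0.0):
    """Throughput of a single serial worker, given per-request costs in ms."""
    return 1000.0 / (server_ms + overhead_ms + app_ms)

# Per-request cost implied by the quoted hello-world numbers.
server_a_ms = 1000.0 / 4000  # 0.25 ms per request
server_b_ms = 1000.0 / 2000  # 0.50 ms per request

# Assumed values, not measurements.
proxy_ms = 0.25  # extra context switch plus read()/write() for the proxy hop
app_ms = 20.0    # a "reasonably complex" request handler

direct_a = requests_per_second(server_a_ms)
proxied_a = requests_per_second(server_a_ms, overhead_ms=proxy_ms)
app_a = requests_per_second(server_a_ms, app_ms=app_ms)
app_b = requests_per_second(server_b_ms, app_ms=app_ms)

print(f"Server A direct:       {direct_a:.0f} req/s")
print(f"Server A behind proxy: {proxied_a:.0f} req/s")
print(f"Server A with app:     {app_a:.1f} req/s")
print(f"Server B with app:     {app_b:.1f} req/s")
```

Under these assumptions a quarter of a millisecond of extra work halves the hello-world number, while the same two servers end up within about one percent of each other once the handler itself takes 20 ms.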


user965091


Updated on September 18, 2022

Comments

user965091 over 1 year

I was trying to put Bjoern behind Nginx for easy load-balancing and DoS/DDoS attack mitigation.

To my dismay I not only discovered that it drops connections like chips (it varies between 20% and 50% of total connections), but it seems actually faster when not put behind it.

This was tested on a machine with 6 GB of RAM and a dual-core 2 GHz CPU.

My app is this:

```python
import bjoern, redis

r = redis.StrictRedis(host='localhost', port=6379, db=0)
val = r.get('test:7')

def hello_world(environ, start_response):
    status = '200 OK'
    res = val
    response_headers = [
        ('Content-type', 'text/plain'),
        ('Content-Length', str(len(res)))]
    start_response(status, response_headers)
    return [res]

# despite the name this is not a hello world as you can see
bjoern.run(hello_world, 'unix:/tmp/bjoern.sock')
```

Nginx configuration:

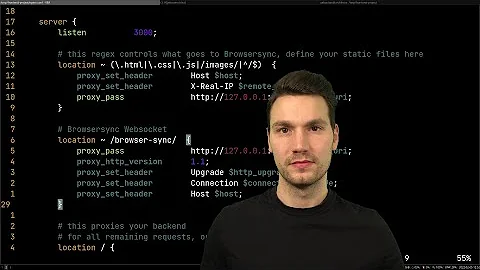

```nginx
user www-data;
worker_processes 2;
worker_rlimit_nofile 52000; # worker_connections * 2
pid /run/nginx.pid;

events {
    multi_accept on;
    worker_connections 18000;
    use epoll;
}

http {
    charset utf-8;
    client_body_timeout 65;
    client_header_timeout 65;
    client_max_body_size 10m;
    default_type application/octet-stream;
    keepalive_timeout 20;
    reset_timedout_connection on;
    send_timeout 65;
    server_tokens off;
    sendfile on;
    server_names_hash_bucket_size 64;
    tcp_nodelay off;
    tcp_nopush on;
    error_log /var/log/nginx/error.log;
    include /etc/nginx/conf.d/*.conf;
    include /etc/nginx/sites-enabled/*;
}
```

and virtual host:

```nginx
upstream backend {
    server unix:/tmp/bjoern.sock;
}

server {
    listen 80;
    server_name _;
    error_log /var/log/nginx/error.log;

    location / {
        proxy_buffering off;
        proxy_redirect off;
        proxy_pass http://backend;
    }
}
```

The benchmark I get with Bjoern behind Nginx through the unix socket is this:

```
Benchmarking 127.0.0.1 (be patient)
Completed 1000 requests
Completed 2000 requests
Completed 3000 requests
Completed 4000 requests
Completed 5000 requests
Completed 6000 requests
Completed 7000 requests
Completed 8000 requests
Completed 9000 requests
Completed 10000 requests
Finished 10000 requests

Server Software:        nginx
Server Hostname:        127.0.0.1
Server Port:            80

Document Path:          /
Document Length:        148 bytes

Concurrency Level:      1000
Time taken for tests:   0.983 seconds
Complete requests:      10000
Failed requests:        3
   (Connect: 0, Receive: 0, Length: 3, Exceptions: 0)
Non-2xx responses:      3
Total transferred:      3000078 bytes
HTML transferred:       1480054 bytes
Requests per second:    10170.24 [#/sec] (mean)
Time per request:       98.326 [ms] (mean)
Time per request:       0.098 [ms] (mean, across all concurrent requests)
Transfer rate:          2979.64 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0   15   4.8     15      35
Processing:    11   28  19.2     19     223
Waiting:        7   24  20.4     16     218
Total:         16   43  20.0     35     225

Percentage of the requests served within a certain time (ms)
  50%     35
  66%     38
  75%     40
  80%     40
  90%     79
  95%     97
  98%    109
  99%    115
 100%    225 (longest request)
```

10k requests per second, fewer failed requests this time, but still...

When Bjoern is hit directly, the benchmark results are the following.

After changing `bjoern.run(hello_world, 'unix:/tmp/bjoern.sock')` to `bjoern.run(hello_world, "127.0.0.1", 8000)`:

```
Benchmarking 127.0.0.1 (be patient)
Completed 1000 requests
Completed 2000 requests
Completed 3000 requests
Completed 4000 requests
Completed 5000 requests
Completed 6000 requests
Completed 7000 requests
Completed 8000 requests
Completed 9000 requests
Completed 10000 requests
Finished 10000 requests

Server Software:
Server Hostname:        127.0.0.1
Server Port:            8000

Document Path:          /
Document Length:        148 bytes

Concurrency Level:      100
Time taken for tests:   0.193 seconds
Complete requests:      10000
Failed requests:        0
Keep-Alive requests:    10000
Total transferred:      2380000 bytes
HTML transferred:       1480000 bytes
Requests per second:    51904.64 [#/sec] (mean)
Time per request:       1.927 [ms] (mean)
Time per request:       0.019 [ms] (mean, across all concurrent requests)
Transfer rate:          12063.77 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.3      0       4
Processing:     1    2   0.4      2       5
Waiting:        0    2   0.4      2       5
Total:          1    2   0.5      2       5

Percentage of the requests served within a certain time (ms)
  50%      2
  66%      2
  75%      2
  80%      2
  90%      2
  95%      3
  98%      4
  99%      4
 100%      5 (longest request)
```

50k requests per second and not even a failed request in this case.
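Before comparing the two req/sec figures, it may help to check whether both runs used the same ab settings. The sketch below copies the numbers from the two reports above into a hypothetical `RUNS` dictionary, recomputes ab's mean time per request (concurrency × time taken ÷ completed requests), and lists the settings that differ. The `keepalive` value for the Nginx run is an inference from the missing "Keep-Alive requests" line, not something the report states.

```python
def mean_time_per_request_ms(concurrency, seconds, requests):
    """ab's 'Time per request (mean)': concurrency * time taken / requests, in ms."""
    return concurrency * seconds * 1000.0 / requests

def requests_per_second(seconds, requests):
    """ab's 'Requests per second': completed requests divided by wall time."""
    return requests / seconds

# Values copied from the two ab reports; the dictionary layout is illustrative.
RUNS = {
    "nginx -> bjoern": {
        "concurrency": 1000, "seconds": 0.983, "requests": 10000,
        "keepalive": False,  # inferred: no "Keep-Alive requests" line in the output
    },
    "bjoern direct": {
        "concurrency": 100, "seconds": 0.193, "requests": 10000,
        "keepalive": True,   # "Keep-Alive requests: 10000"
    },
}

def differing_settings(a, b, keys=("concurrency", "keepalive")):
    """Return the benchmark settings that are not identical between two runs."""
    return [key for key in keys if a[key] != b[key]]

proxied = RUNS["nginx -> bjoern"]
direct = RUNS["bjoern direct"]

for name, run in RUNS.items():
    per_request = mean_time_per_request_ms(run["concurrency"], run["seconds"], run["requests"])
    rate = requests_per_second(run["seconds"], run["requests"])
    print(f"{name}: {per_request:.2f} ms per request, {rate:.0f} req/s")

print("settings that differ:", differing_settings(proxied, direct))
```

The recomputed values match the reports to within rounding of the "Time taken" field. Because the concurrency level differs by a factor of ten and only one run appears to reuse connections, part of the gap may come from the benchmark settings rather than from Nginx itself; rerunning both with identical ab options would separate the two effects.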

I have extensively tweaked system variables such as somaxconn; otherwise I don't think I would get that many requests with Bjoern alone anyway.

How is it possible that Bjoern is so massively faster than Nginx?

I'm really concerned about not being able to use Nginx and benefit from the things outlined in the first line and hope you can help me find where the culprit is.

The short and concise question is: How to proxy_pass Bjoern to Nginx without losing in terms of performance? Should I just stay with Bjoern and achieve load-balancing and DoS/DDoS attack mitigation another way?

Michael Hampton almost 10 years: These tests are not comparable; they are testing two completely different things. One is simply serving a static piece of text. The other is actually doing work.

user965091 almost 10 years: @MichaelHampton Can you pinpoint precisely where? They are both executing the same script. Shouldn't Bjoern be slower then, as it's the one "doing the work" while Nginx is "proxying" it?

Michael Hampton almost 10 years: Bjoern isn't doing anything more than serving "hello world". If you have nginx do the same thing, then it will be faster too.

user965091 almost 10 years: @MichaelHampton And what is Nginx doing then, sitting idle?

Michael Hampton almost 10 years: You are having nginx proxy all its connections! You already knew this, why did you ask?

user965091 almost 10 years: @MichaelHampton My thought was that something like this was happening: client -> nginx -> bjoern -> nginx -> client. So the conclusion is not to put Bjoern behind Nginx, as it will degrade performance, and just decide between Bjoern and Nginx?

Michael Hampton almost 10 years: Effort is wasted when you are going in the wrong direction!

user965091 almost 10 years: @MichaelHampton Thank you for the tip! I'm already on the right path after the answer, which clarified the issue.