Blocking yandex.ru bot

Solution 1

My current solution is this (for the nginx web server):

    # goes inside a server { } or location { } block
    if ($http_user_agent ~* (Yandex)) {
        return 444;
    }

This is case-insensitive (that is what the ~* operator means), and it returns response 444.

The directive looks at the User-Agent string, and if "Yandex" is detected, the connection is closed without sending any headers. 444 is a non-standard code understood by the nginx daemon itself; it is never actually sent to the client.
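
Before deploying the rule, you can check which User-Agent strings it would catch. A rough Python equivalent of nginx's ~* test is an unanchored, case-insensitive regex search. This is only a sketch: the sample User-Agent strings are illustrative assumptions, and Python's re module is not the PCRE engine nginx uses (for a plain literal like Yandex they behave the same).

```python
import re

# nginx's "~*" is a case-insensitive regex match anywhere in the value.
YANDEX_UA = re.compile(r"Yandex", re.IGNORECASE)


def would_return_444(user_agent: str) -> bool:
    """True if `if ($http_user_agent ~* (Yandex))` would fire for this User-Agent."""
    return YANDEX_UA.search(user_agent) is not None


if __name__ == "__main__":
    samples = [
        "Mozilla/5.0 (compatible; YandexBot/3.0; +http://yandex.com/bots)",  # assumed sample
        "Mozilla/5.0 (compatible; yandeximages)",                            # assumed sample
        "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    ]
    for ua in samples:
        print(would_return_444(ua), ua)
```

Because the match is unanchored, any User-Agent that merely contains "yandex" is blocked, while a bot that sends a fake User-Agent is not blocked at all; that is why the other answers focus on IP ranges.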

Solution 2

I don't have enough reputation here to post all the URLs I need as hyperlinks, so please pardon my parenthesized URLs.

The forum link from Dan Andreatta, and this other one, have some but not all of what you need. You'll want to use their method of finding the IP addresses, and script something to keep your lists fresh. Then you want something like this, to show you some known values, including the subdomain naming schemes they have been using. Keep a cron-scheduled eye on their IP ranges, and maybe automate something to estimate a reasonable CIDR block (I didn't find any mention of their actual allocation; that could just be a failed Google search on my part).
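
One way to "estimate a reasonable CIDR" from the crawler addresses you have collected is to compute the smallest single network that contains all of them. Below is a minimal sketch using Python's standard ipaddress module; covering_network and estimate_ranges are hypothetical helper names, and grouping by /16 first is an assumption to keep unrelated blocks (such as 77.88.x.x and 95.108.x.x) from merging into one huge network. The result is only a lower bound on the real allocation: for the two 77.88.x.x addresses in this thread it yields 77.88.16.0/20, which sits inside the 77.88.0.0/18 route Daniel found.

```python
import ipaddress
from collections import defaultdict


def covering_network(ips):
    """Smallest IPv4 network containing every address in `ips`."""
    addrs = [int(ipaddress.IPv4Address(ip)) for ip in ips]
    lo, hi = min(addrs), max(addrs)
    # Every bit at or below the highest bit where lo and hi differ must be a host bit.
    prefix = 32 - (lo ^ hi).bit_length()
    return ipaddress.IPv4Network((lo, prefix), strict=False)


def estimate_ranges(ips, group_prefix=16):
    """Group addresses by their /group_prefix, then cover each group separately."""
    groups = defaultdict(list)
    for ip in ips:
        key = ipaddress.IPv4Network(f"{ip}/{group_prefix}", strict=False)
        groups[key].append(ip)
    return sorted(covering_network(members) for members in groups.values())


if __name__ == "__main__":
    # Spider addresses mentioned in this thread.
    seen = ["77.88.26.27", "77.88.19.60", "95.108.155.251", "213.180.199.34"]
    for net in estimate_ranges(seen):
        print(net)
```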

Find their IP range(s) as accurately as possible, so you don't have to waste time doing a reverse DNS lookup while users are waiting for (http://yourdomain/notpornipromise), and instead you only do a quick comparison against known ranges. Google just showed me grepcidr, which looks highly relevant. From the linked page: "grepcidr can be used to filter a list of IP addresses against one or more Classless Inter-Domain Routing (CIDR) specifications, or arbitrary networks specified by an address range." It's nice that it's purpose-built code with known I/O, but you can reproduce its function in a billion different ways.
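
As an example of reproducing grepcidr's core function, here is a rough Python sketch using the standard ipaddress module. It keeps only the lines whose first field (the client IP in a Combined Log Format access log) falls inside the given networks. This is not grepcidr's actual interface; the network list is taken from Daniel's whois excerpt in the comments and is assumed to be incomplete (95.108.155.251, one of the question's spider addresses, falls outside it).

```python
import ipaddress
import sys

# Yandex routes quoted in the comments on this question; treat the list as incomplete.
# (213.180.199.0/24 from the same comment lies inside the /19, so it is omitted.)
YANDEX_NETS = [ipaddress.ip_network(n) for n in ("213.180.192.0/19", "77.88.0.0/18")]


def in_networks(ip, networks):
    """True if `ip` falls inside any network in `networks`."""
    try:
        addr = ipaddress.ip_address(ip)
    except ValueError:
        return False  # not an IP address (e.g. a resolved hostname): skip it
    return any(addr in net for net in networks)


def grep_cidr(lines, networks):
    """Yield lines whose first whitespace-separated field is inside `networks`."""
    for line in lines:
        fields = line.split()
        if fields and in_networks(fields[0], networks):
            yield line


if __name__ == "__main__":
    # Usage: python grep_cidr.py < access.log
    for line in grep_cidr(sys.stdin, YANDEX_NETS):
        sys.stdout.write(line)
```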

The most "general solution" I can think of for this, and actually wish to share (speaking things into existence and all that), is for you to start building a database of such offenders at your location(s), and to spend some off-hours thinking about and researching ways to defend against and counter the behavior. This takes you deeper into intrusion detection, pattern analysis, and honeynets than the scope of this specific question truly warrants. However, within the scope of that research are countless answers to the question you have asked.
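
Such an offender database can start very small. A minimal sketch using Python's built-in sqlite3 module; the hits table layout, the helper names and the "most 404s" ranking are hypothetical choices for illustration, not a standard schema. Pass a file path to open_db instead of the default in-memory database to keep the data between runs.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS hits (
    ip         TEXT NOT NULL,
    user_agent TEXT,
    path       TEXT,
    status     INTEGER,
    seen_at    TEXT      -- timestamp as written in the access log
)
"""


def open_db(path=":memory:"):
    """Open (or create) the offender database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def record_hit(conn, ip, user_agent, path, status, seen_at):
    """Store one request, using placeholders so log data cannot inject SQL."""
    conn.execute(
        "INSERT INTO hits (ip, user_agent, path, status, seen_at) VALUES (?, ?, ?, ?, ?)",
        (ip, user_agent, path, status, seen_at),
    )


def top_404_offenders(conn, limit=10):
    """IPs ranked by how many requests for missing pages they made."""
    return conn.execute(
        "SELECT ip, COUNT(*) AS misses FROM hits WHERE status = 404 "
        "GROUP BY ip ORDER BY misses DESC, ip LIMIT ?",
        (limit,),
    ).fetchall()
```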

I found this because of Yandex's interesting behavior on one of my own sites. I wouldn't call what I see in my own log abusive, but spider50.yandex.ru consumed 2% of my visit count and 1% of my bandwidth. I can see how the bot could be truly abusive toward large files, forums and such, neither of which is available for abuse on the server I'm looking at today. What was interesting enough to warrant investigation was the pattern: the bot fetched /robots.txt, waited 4 to 9 hours and requested a /directory/ not listed in it, waited another 4 to 9 hours and requested /another_directory/, then maybe a few more, then /robots.txt again, repeating ad infinitum. As far as frequency goes, I suppose they're well behaved enough, and the spider50.yandex.ru machine appeared to respect /robots.txt.

I'm not planning to block them from this server today, but I would if I shared Ross' experience.

For reference, here are the tiny numbers we're dealing with on my server today:

Top 10 of 1315 Total Sites By KBytes

| # | Hits | Files | KBytes | Visits | Hostname |
|---|---|---|---|---|---|
| 1 | 247 (1.20%) | 247 (1.26%) | 1990 (1.64%) | 4 (0.19%) | ip98-169-142-12.dc.dc.cox.net |
| 2 | 141 (0.69%) | 140 (0.72%) | 1873 (1.54%) | 1 (0.05%) | 178.160.129.173 |
| 3 | 142 (0.69%) | 140 (0.72%) | 1352 (1.11%) | 1 (0.05%) | 162.136.192.1 |
| 4 | 85 (0.41%) | 59 (0.30%) | 1145 (0.94%) | 46 (2.19%) | spider50.yandex.ru |
| 5 | 231 (1.12%) | 192 (0.98%) | 1105 (0.91%) | 4 (0.19%) | cpe-69-135-214-191.woh.res.rr.com |
| 6 | 16 (0.08%) | 16 (0.08%) | 1066 (0.88%) | 11 (0.52%) | rate-limited-proxy-72-14-199-198.google.com |
| 7 | 63 (0.31%) | 50 (0.26%) | 1017 (0.84%) | 25 (1.19%) | b3090791.crawl.yahoo.net |
| 8 | 144 (0.70%) | 143 (0.73%) | 941 (0.77%) | 1 (0.05%) | user10.hcc-care.com |
| 9 | 70 (0.34%) | 70 (0.36%) | 938 (0.77%) | 1 (0.05%) | cpe-075-177-135-148.nc.res.rr.com |
| 10 | 205 (1.00%) | 203 (1.04%) | 920 (0.76%) | 3 (0.14%) | 92.red-83-54-7.dynamicip.rima-tde.net |

That's on a shared host that doesn't even bother capping bandwidth anymore, and if the crawl took some DDoS-like form, they would probably notice and block it before I would. So I'm not angry about it. In fact, I much prefer having the data they write to my logs to play with.

Ross, if you really are angry about the 2 GB/day you're losing to Yandex, you might spampoison them. That's what it's there for! Redirect them away from what you don't want them downloading, either with an HTTP 301 straight to a spampoison subdomain, or roll your own so you can control the logic and have more fun with it. That sort of solution gives you a tool to reuse later, when it's even more necessary.
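
If you roll your own instead of configuring it in the web server, the logic can be as simple as a WSGI middleware that answers matching User-Agents with a 301. A minimal sketch: POISON_URL is a placeholder for wherever you choose to send the bot, redirect_bots and hello_app are hypothetical names, and matching "yandex" in the User-Agent is an assumption carried over from Solution 1, so it will not catch a bot that spoofs its User-Agent.

```python
from urllib.parse import quote

POISON_URL = "http://poison.example.com/"  # placeholder: your spampoison/honeypot URL


def redirect_bots(app, needle="yandex", target=POISON_URL):
    """Wrap a WSGI app so requests whose User-Agent contains `needle` get a 301."""
    def middleware(environ, start_response):
        ua = environ.get("HTTP_USER_AGENT", "")
        if needle in ua.lower():
            # Keep the requested path so the target can log what the bot asked for.
            location = target.rstrip("/") + quote(environ.get("PATH_INFO") or "/")
            start_response("301 Moved Permanently",
                           [("Location", location), ("Content-Length", "0")])
            return [b""]
        return app(environ, start_response)
    return middleware


def hello_app(environ, start_response):
    """Stand-in for your real site."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


application = redirect_bots(hello_app)
```

To try it locally, you could serve application with the standard library's wsgiref.simple_server.make_server.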

Then start looking deeper into your logs for funny ones like this:

217.41.13.233 - - [31/Mar/2010:23:33:52 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:33:54 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:33:58 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:00 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:01 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:03 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:04 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:05 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:06 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:09 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:14 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:16 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:17 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:18 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:21 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:23 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:24 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:26 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:27 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

217.41.13.233 - - [31/Mar/2010:23:34:28 -0500] "GET /user/ HTTP/1.1" 404 15088 "http://www.google.com/" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; MRA 5.1 (build 02228); .NET CLR 1.1.4322; InfoPath.2; .NET CLR 2.0.50727)"

Hint: No /user/ directory, nor a hyperlink to such, exists on the server.
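
To spot bursts like this one automatically, a sketch that parses Combined Log Format lines and flags any (IP, path) pair that drew at least a threshold number of 404s inside a sliding time window. The regex, the threshold of 10 hits and the 60-second window are assumptions chosen so the /user/ burst above (20 requests in 36 seconds) would be flagged; it also assumes the lines arrive in chronological order. Tune all of that for your own traffic.

```python
import re
from collections import defaultdict, deque
from datetime import datetime

# Combined format: ip ident user [time] "request" status bytes "referer" "user-agent"
LINE_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+'
)


def repeated_404s(lines, threshold=10, window_seconds=60):
    """Return {(ip, path): largest 404 count seen in one window} for bursts >= threshold."""
    recent = defaultdict(deque)  # (ip, path) -> timestamps inside the current window
    flagged = {}
    for line in lines:
        m = LINE_RE.match(line)
        if not m or m.group("status") != "404":
            continue
        ts = datetime.strptime(m.group("time"), "%d/%b/%Y:%H:%M:%S %z")
        key = (m.group("ip"), m.group("path"))
        q = recent[key]
        q.append(ts)
        # Drop timestamps that have fallen out of the sliding window.
        while (ts - q[0]).total_seconds() > window_seconds:
            q.popleft()
        if len(q) >= threshold:
            flagged[key] = max(flagged.get(key, 0), len(q))
    return flagged
```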

Solution 3

According to this forum, the Yandex bot is well behaved and respects robots.txt.

In particular, they say:

The behaviour of Yandex is quite a lot like that of Google with regard to robots.txt .. The bot doesn't look at the robots.txt every single time it enters the domain.

Bots like Yandex, Baidu, and Sohu have all been fairly well behaved and as a result, are allowed. None of them have ever gone places I didn't want them to go, and parse rates don't break the bank with regard to bandwidth.

Personally, I don't have issues with it; Googlebot is by far the most aggressive crawler on the sites I have.

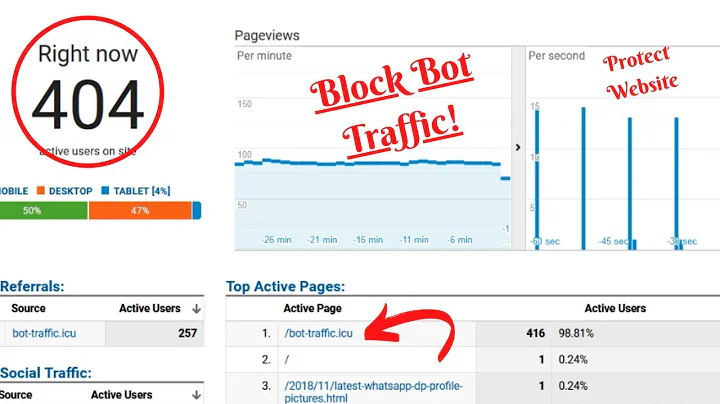

Ross

Updated on September 17, 2022

Ross, over 1 year ago: I want to block all requests from the yandex.ru search bot. It is very traffic-intensive (2 GB/day). I first blocked one Class C IP range, but it seems this bot comes from different IP ranges.

For example:

spider31.yandex.ru -> 77.88.26.27

spider79.yandex.ru -> 95.108.155.251

etc.

I could add a Disallow rule to robots.txt, but I'm not sure the bot respects it. I am thinking of blocking a list of IP ranges.

Can somebody suggest a general solution?

Admin, about 14 years ago: What sort of platform/firewall is available in your environment?

Admin, about 14 years ago: .htaccess on shared hosting

Admin, almost 14 years ago: Please stop referring to /24-sized netblocks as "Class C networks". Classful routing is long dead.

Ross, about 14 years ago: It is definitely not well behaved; it works more like a DDoS attack. I do not want to rely on its mercy to respect the rules. On some of my websites I have no problems with it, but on two of them it requests a single page multiple times per second.
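
When the problem is one client hitting a single page several times per second, per-client rate limiting is an alternative to an outright block. A minimal sliding-window sketch in Python; SlidingWindowLimiter and its default of 5 requests per second are hypothetical values for illustration, and the caller passes the current time (for example time.monotonic()) so the logic is easy to test. In practice this is usually done in the web server itself, for example with nginx's limit_req_zone and limit_req directives.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each client key."""

    def __init__(self, limit=5, window=1.0):
        self.limit = limit
        self.window = window
        self.hits = defaultdict(deque)  # client key -> timestamps of allowed requests

    def allow(self, key, now):
        q = self.hits[key]
        # Forget allowed requests that are older than the window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) < self.limit:
            q.append(now)
            return True
        return False  # over the limit: reject (e.g. answer 429 or 503)
```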

Dan Andreatta, about 14 years ago: It could be someone just pretending to be the Yandex bot. Do the IP addresses the requests come from actually belong to Yandex servers?
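
One way to answer that is a forward-confirmed reverse DNS check: look up the hostname for the IP, check that it is under a Yandex domain, then resolve that hostname forward and confirm it maps back to the same IP. A sketch with Python's socket module; the accepted suffix list is an assumption based on the spiderNN.yandex.ru hostnames in this thread (Yandex may use other domains too), and the resolver functions are parameters only so the logic can be tested with fakes. Because it makes blocking DNS queries, it belongs in an offline job that builds your IP list, not in the request path, as Solution 2 points out.

```python
import socket

YANDEX_SUFFIXES = (".yandex.ru",)  # assumption: extend if Yandex uses other domains


def is_really_yandex(ip, reverse=socket.gethostbyaddr, forward=socket.gethostbyname_ex):
    """Forward-confirmed reverse DNS check for a claimed Yandex crawler IP."""
    try:
        hostname = reverse(ip)[0]
    except OSError:  # socket.herror / gaierror / timeout: no usable PTR record
        return False
    if not hostname.lower().rstrip(".").endswith(YANDEX_SUFFIXES):
        return False  # PTR exists but points outside Yandex
    try:
        _, _, addresses = forward(hostname)
    except OSError:
        return False
    # Anyone can set a PTR record, so require the name to resolve back to the same IP.
    return ip in addresses
```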

Daniel, about 14 years ago: Here is some of their IP information:

cqcounter.com/whois/index.php?query=213.180.199.34

cqcounter.com/whois/index.php?query=77.88.19.60

route: 213.180.192.0/19 (descr: Yandex network)

route: 213.180.199.0/24 (descr: Yandex enterprise network)

route: 77.88.0.0/18 (descr: Yandex enterprise network)

Ross, about 14 years ago: Thanks, a lot of useful information. I will try some of this. Meanwhile, on some of my websites I put up a whitelist robots.txt allowing only Google, Yahoo and MSN.
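
A whitelist robots.txt of that kind names the allowed crawlers with an empty Disallow line and shuts everyone else out with a catch-all entry. The sketch below checks such a file with Python's standard urllib.robotparser. The user-agent tokens Googlebot, Slurp (Yahoo) and msnbot (MSN) are assumptions about what that file contained, and robots.txt is purely advisory, so it only stops crawlers that choose to obey it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical whitelist robots.txt: named crawlers may fetch everything, all others nothing.
WHITELIST_ROBOTS_TXT = """\
User-agent: Googlebot
Disallow:

User-agent: Slurp
Disallow:

User-agent: msnbot
Disallow:

User-agent: *
Disallow: /
"""


def make_parser(text):
    rp = RobotFileParser()
    rp.parse(text.splitlines())
    rp.modified()  # record a fetch time; can_fetch() refuses everything until one is set
    return rp


if __name__ == "__main__":
    rp = make_parser(WHITELIST_ROBOTS_TXT)
    for agent in ("Googlebot/2.1", "msnbot/2.0b", "YandexBot/3.0"):
        print(agent, rp.can_fetch(agent, "/some/page.html"))
```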

Daniel, about 14 years ago: Ah yes. Good call on the whitelist. You're welcome.

Admin, almost 14 years ago: Thanks. I will try this. I currently block only two IP ranges, but who knows what the future holds.

Admin, almost 14 years ago: I use the same rules in my Apache configurations to stop Yandex as well. I tried robots.txt, but they seemed to ignore it.

bigduke5, about 12 years ago: Yeah, you could say so ;). These bots got on my last nerve. I bet Baidu China has more crawling servers than Google would ever use. This looks more like flooding and spying to me.
