Bulk rename (or correctly display) files with special characters

Solution 1

You probably see the � replacement character because the name contains a byte sequence that isn't valid UTF-8. File names on typical Unix filesystems (including yours) are byte strings, and it's up to applications to decide which encoding to use. Nowadays there is a trend toward UTF-8, but it's not universal, especially in locales that could never make do with plain ASCII and were using other encodings before UTF-8 even existed.

Try LC_CTYPE=en_US.iso88591 ls to see if the file name makes sense in ISO-8859-1 (latin-1). If it doesn't, try other locales. Note that only the LC_CTYPE locale setting matters here.

In a UTF-8 locale, the following command will show you all files whose name is not valid UTF-8:

grep-invalid-utf8 () {
  perl -l -ne '/^([\000-\177]|[\300-\337][\200-\277]|[\340-\357][\200-\277]{2}|[\360-\367][\200-\277]{3}|[\370-\373][\200-\277]{4}|[\374-\375][\200-\277]{5})*$/ or print'
}

find | grep-invalid-utf8
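To see what the filter does, here is a small self-contained check. It is only a sketch: the function is spelled grep_invalid_utf8 (with underscores, so the name is also accepted by strict POSIX shells), and the three sample names are made up for illustration, with the byte \323 borrowed from the find output in the question.

```shell
# Same filter as above, under a name that any POSIX shell accepts.
grep_invalid_utf8() {
  perl -l -ne '/^([\000-\177]|[\300-\337][\200-\277]|[\340-\357][\200-\277]{2}|[\360-\367][\200-\277]{3}|[\370-\373][\200-\277]{4}|[\374-\375][\200-\277]{5})*$/ or print'
}

# Three made-up names: plain ASCII, a stray Latin-1 byte (\323),
# and a valid two-byte UTF-8 sequence (\303\251).
# od -c shows the raw bytes instead of confusing the terminal.
printf 'plain.txt\ntest\323sktest.txt\ncaf\303\251.txt\n' |
  grep_invalid_utf8 | od -c
```

Only the second name should come out of the filter. Note that the pattern checks the lead-byte/continuation-byte structure only, and it also accepts the 5- and 6-byte forms from the older UTF-8 definition.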

You can check if they make more sense in another locale with recode or iconv:

find | grep-invalid-utf8 | recode latin1..utf8

find | grep-invalid-utf8 | iconv -f latin1 -t utf8
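If you are not sure which encoding to try, it can help to look at one suspicious name under several candidates side by side. The helper below is a hypothetical sketch, not part of the commands above: try_encodings is an invented name, and the list of candidate encodings is just an assumption about what is plausible for Western European file names.

```shell
# Hypothetical helper: print one raw name as decoded from several encodings.
# Usage: try_encodings RAW_NAME
try_encodings() {
  for enc in ISO-8859-1 ISO-8859-15 CP1252; do
    printf '%s: ' "$enc"
    # Decode the raw bytes from the candidate encoding into UTF-8.
    printf '%s\n' "$1" | iconv -f "$enc" -t UTF-8
  done
}

# The name from the question, with its raw byte \323.
try_encodings "$(printf 'test\323sktest.txt')"
```

Whichever line reads like a sensible file name points at the likely original encoding.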

Once you've determined that a bunch of file names are in a certain encoding (e.g. latin1), one way to rename them is

find | grep-invalid-utf8 |
rename 'BEGIN {binmode STDIN, ":encoding(latin1)"; use Encode;}
  $_=encode("utf8", $_)'

This uses the perl rename command available on Debian and Ubuntu. You can pass it -n to show what it would be doing without actually renaming the files.
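Where the Perl rename command is not available, roughly the same conversion can be sketched with find, iconv and mv. This is a different technique from the rename one-liner, shown under stated assumptions: fix_names, FROM_ENC and DRY_RUN are names invented for this example, the source encoding defaults to ISO-8859-1, and "not valid UTF-8" is decided by whether iconv can read the name as UTF-8.

```shell
# Sketch: convert names that are not valid UTF-8 from another encoding.
# Usage: fix_names [DIR] [SOURCE_ENCODING]; set DRY_RUN=1 to only print.
fix_names() {
  FROM_ENC=${2:-ISO-8859-1} DRY_RUN=${DRY_RUN:-} \
  find "${1:-.}" -depth -exec sh -c '
    for path do
      dir=$(dirname -- "$path")
      base=$(basename -- "$path")
      # Names that already decode as UTF-8 are left alone.
      if printf "%s" "$base" | iconv -f UTF-8 -t UTF-8 >/dev/null 2>&1; then
        continue
      fi
      new=$(printf "%s" "$base" | iconv -f "$FROM_ENC" -t UTF-8) || continue
      if [ -e "$dir/$new" ]; then
        # Never overwrite an existing entry.
        printf "skipping, target exists: %s\n" "$dir/$new" >&2
      elif [ -n "$DRY_RUN" ]; then
        printf "%s -> %s\n" "$path" "$dir/$new"
      else
        mv -- "$path" "$dir/$new"
      fi
    done' sh {} +
}
```

A dry run such as DRY_RUN=1 fix_names . latin1 prints the planned renames first. The -depth option makes find visit a directory's contents before the directory itself, so renaming a directory does not invalidate paths that are still to be processed.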

Solution 2

I know this is an old question, but I have been searching all night for a similar solution. I found a few helpful tips, but they did not do exactly what I needed, so I had to mix and match a few to get the outcome I was looking for.

To simply replace every special character with a dot (.):

for f in *.txt; do mv "$f" `echo $f | sed "s/[^a-zA-Z0-9.]/./g"`; done

To use it in a cron job that runs every minute, I did the following:

*/1 * * * * cd /path/to/files/ && for f in *.txt; do mv "$f" `echo $f | sed "s/[^a-zA-Z0-9.]/./g"`; done >/dev/null 2>&1
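A more defensive variant of the same loop might look like the sketch below. It is not the command above but a rewrite under stated assumptions: sanitize_txt_names is an invented name, every variable is quoted, mv gets -- so that names starting with - are not taken as options, an existing target is never overwritten, and sed runs under LC_ALL=C so that bytes which are not valid in the current locale are replaced too (one dot per byte, so a multi-byte character becomes several dots).

```shell
# Sketch: replace every byte outside [a-zA-Z0-9.] in *.txt names with a dot.
# Run it from the directory that holds the files.
sanitize_txt_names() {
  for f in *.txt; do
    [ -e "$f" ] || continue          # the glob matched nothing
    new=$(printf '%s\n' "$f" | LC_ALL=C sed 's/[^a-zA-Z0-9.]/./g')
    [ "$f" = "$new" ] && continue    # name is already clean
    if [ -e "$new" ]; then
      # Two different names can map to the same result; keep both files.
      printf 'skipping %s: %s already exists\n' "$f" "$new" >&2
      continue
    fi
    mv -- "$f" "$new"
  done
}
```

When two names collapse to the same result, the second file is left untouched and reported on standard error instead of replacing the first.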

I hope someone finds this helpful as it has made my day :)


RobbieV

Updated on September 17, 2022

Comments

-

RobbieV over 1 year

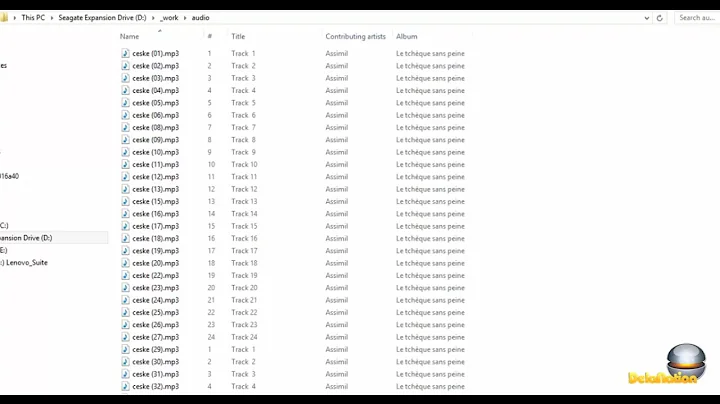

I have a bunch of directories and subdirectories that contain files with special characters, like this file:

robbie@phil:~$ ls test�sktest.txt
test?sktest.txt

Find reveals an escape sequence:

robbie@phil:~$ find test�sktest.txt -ls
424512 4000 -rwxr--r-x 1 robbie robbie 4091743 Jan 26 00:34 test\323sktest.txt

The only reason I can even type their names on the console is because of tab completion. This also means I can rename them manually (and strip the special character).

I've set LC_ALL to UTF-8, which does not seem to help (also not on a new shell):

robbie@phil:~$ echo $LC_ALL
en_US.UTF-8

I'm connecting to the machine using ssh from my Mac. It's an Ubuntu install:

robbie@phil:~$ cat /etc/lsb-release
DISTRIB_ID=Ubuntu
DISTRIB_RELEASE=7.10
DISTRIB_CODENAME=gutsy
DISTRIB_DESCRIPTION="Ubuntu 7.10"

Shell is Bash, TERM is set to xterm-color.

These files have been there for quite a while, and they have not been created using that install of Ubuntu. So I don't know what the system encoding settings used to be.

I've tried things along the lines of:

find . -type f -ls | sed 's/[^a-zA-Z0-9]//g'

But I can't find a solution that does everything I want:

- Identify all files that have undisplayable characters (the above ignores way too much)

- For all those files in a directory tree (recursively), execute mv oldname newname

- Optionally, the ability to transliterate special characters such as ä to a (not required, but would be awesome)

OR

- Correctly display all these files (and no errors in applications when trying to open them)

I have bits and pieces, like iterating over all files and moving them, but identifying the files and formatting them correctly for the mv command seems to be the hard part.

Any extra information as to why they do not display correctly, or how to "guess" the correct encoding are also welcome. (I've tried convmv but it doesn't seem to do exactly what I want: http://j3e.de/linux/convmv/)

-

RobbieV over 13 years

Thanks, I will try some of these things later today! Looks like this will be the accepted answer :)

-

RobbieV over 13 years

The find | grep '[[:print:]]' command seems to simply return all files. Shouldn't UTF-8 be compatible with many other encodings with "normal" characters?

-

Gilles 'SO- stop being evil' over 13 years

@RobbieV: I typoed and meant grep [^[:print:]] to search for unprintable characters. But I've just tested with GNU grep and invalid UTF-8 sequences aren't caught by [^[:print:]] (which makes sense as they're not unprintable characters, they aren't characters at all). I've edited my post with a longer way of grepping lines with invalid UTF-8 sequences. Note that I've also fixed the direction of the recode and iconv examples.

-

RobbieV over 13 years

That worked perfectly. Tried all the commands except the iconv one, and they all work as expected. Pure magic!

-

RobbieV over 13 years

Even the suggested latin1 encoding was the correct one :)

-

Scott - Слава Україні almost 8 years

(1) For clarity, you might want to change `…` to $(…); see this, this, and this. (2) You should always quote your shell variable references (e.g., "$f") unless you have a good reason not to, and you’re sure you know what you’re doing. This applies even to echo "$f" | sed …. It also applies to the entire $(…) (or `…`) expression; i.e., mv "$f" "$(echo "$f" | sed "…")". … (Cont’d)

-

Scott - Слава Україні almost 8 years

(Cont’d) … (3) You should say mv -- "$f" …, to protect against filenames beginning with -. (4) If you have files named “foo♥bar.txt” and “foo♠bar.txt”, this will (try to) rename both of them to “foo.bar.txt”, possibly causing all but one of the files to be destroyed. (5) Why on earth would you want to do this once every minute?

-

Topps70 almost 8 years

I have a torrent script that automatically downloads files, and sometimes the file names contain characters that throw the uploader off. By simply renaming files with special characters, my cron job fixed all my problems, and the uploader now does its job smoothly.

-

Topps70 almost 8 years

So (this fi'le tha,t was - down_loaded.ext) turns into (this.fi.le.tha.t.was.down.loaded.ext)

-

Marc.2377 over 5 years

On Arch Linux, you need perl-rename.