Can I copy large files faster without using the file cache?

Solution 1

There is the nocache utility, which can be prepended to a command in the same way as ionice and nice. It works by preloading a library which adds posix_fadvise with the POSIX_FADV_DONTNEED flag to any open calls.

In simple terms, it advises the kernel that caching is not needed for that particular file; the kernel will then normally not cache the file. See here for the technical details.

It does wonders for any huge copy job. For example, if you want to back up a multi-terabyte disk in the background with the least possible impact on your running system, you can do something along the lines of nice -n19 ionice -c3 nocache cp -a /vol /vol2.
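The command above can be wrapped so that it still runs on machines where one of the helpers is missing or not permitted. This is only a sketch: the function name low_impact_copy is made up for illustration, and it assumes that nice, ionice and nocache all work as plain command prefixes, as described above.

```shell
#!/bin/sh
# Hypothetical helper (not part of nocache): run "cp -a" behind every
# low-impact wrapper that is installed and usable on this system.
low_impact_copy() {
    src=$1
    dst=$2
    # Keep the command being built in the positional parameters.
    set -- cp -a -- "$src" "$dst"
    # Probe each wrapper with a harmless command before prepending it.
    if nocache true >/dev/null 2>&1; then
        set -- nocache "$@"
    fi
    if ionice -c3 true >/dev/null 2>&1; then
        set -- ionice -c3 "$@"
    fi
    if nice -n19 true >/dev/null 2>&1; then
        set -- nice -n19 "$@"
    fi
    # With all three available this runs:
    #   nice -n19 ionice -c3 nocache cp -a -- SRC DST
    "$@"
}
```

Calling low_impact_copy /vol /vol2 then behaves like the one-liner above, and degrades to a plain cp -a when none of the wrappers is present.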

A package will be available in Ubuntu 13.10 and up. If you are on a previous release you can either install the 13.10 package or opt for this 12.04 backport by François Marier.

Solution 2

For single large files, use dd with direct I/O to bypass the file cache. Transferring one (or a few) large multi-gigabyte files this way is easy:

dd if=/path/to/source of=/path/to/destination bs=4M iflag=direct oflag=direct

- The direct flags tell dd to use the kernel's direct I/O option (O_DIRECT) while reading and writing, thus completely bypassing the file cache.
- The bs blocksize option must be set to a reasonably large value to minimize the number of physical disk operations dd must perform, since reads/writes are no longer cached and too many small direct operations can result in a serious slowdown.
  - Feel free to experiment with values from 1 to 32 MB; the setting above is 4 MB (4M).
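Direct I/O is not accepted by every filesystem, so a script may want a fallback. The sketch below is an illustration rather than a definitive recipe: the name copy_uncached is hypothetical, and the middle step assumes GNU dd, whose iflag=nocache and oflag=nocache flags ask the kernel to drop the cached pages instead of bypassing the cache entirely.

```shell
#!/bin/sh
# Hypothetical helper: copy one file, trying the least cache-polluting
# method first and falling back if the system refuses it.
copy_uncached() {
    src=$1
    dst=$2
    bs=${3:-4M}   # block size; 4M matches the dd example above

    # 1. Direct I/O (O_DIRECT): bypasses the file cache completely.
    if dd if="$src" of="$dst" bs="$bs" iflag=direct oflag=direct 2>/dev/null; then
        return 0
    fi
    # 2. GNU dd nocache flags: buffered I/O, but the kernel is advised
    #    to discard the cached data for both files.
    if dd if="$src" of="$dst" bs="$bs" iflag=nocache oflag=nocache 2>/dev/null; then
        return 0
    fi
    # 3. Last resort: an ordinary cached copy.
    dd if="$src" of="$dst" bs="$bs"
}
```

The optional third argument makes it easy to try other block sizes, for example copy_uncached /path/to/source /path/to/destination 16M.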

For multiple/recursive directory copies, unfortunately, there are no easily available tools; the usual tools such as cp do not support direct I/O.

Edit: iflags & oflags changed to the correct iflag & oflag.

Solution 3

You can copy a directory recursively with dd using find and mkdir.

We need to work around two problems:

- dd doesn't know what to do with directories
- dd can only copy one file at a time

First let's define input and output directories:

SOURCE="/media/source-dir"

TARGET="/media/target-dir"

Now let's cd into the source directory so find will report relative directories we can easily manipulate:

cd "$SOURCE"

Duplicate the directory tree from $SOURCE to $TARGET

find . -type d -exec mkdir -p "$TARGET/{}" \;

Duplicate files from $SOURCE to $TARGET, omitting the write cache (but utilising the read cache!):

find . -type f -exec dd if={} of="$TARGET/{}" bs=8M oflag=direct \;

Please note that this won't preserve file modification times, ownership and other attributes.
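The steps above can be combined into one function that also copies modification times and permissions back afterwards. This is a sketch under assumptions, not the answer's original method: the name copy_tree_uncached is hypothetical, touch -r and chmod --reference are taken from GNU coreutils, and ownership is left alone because changing it generally needs root.

```shell
#!/bin/sh
# Hypothetical helper: recursive copy with dd, then restore mtime and mode.
copy_tree_uncached() {
    src=$1
    mkdir -p "$2" || return 1
    dst=$(cd "$2" && pwd) || return 1   # absolute path, still valid after cd
    (
        # Subshell: the caller's working directory is left untouched.
        cd "$src" || exit 1
        # 1. Recreate the directory tree.
        find . -type d -exec sh -c '
            dst=$1; shift
            for d do mkdir -p "$dst/$d" || exit 1; done' sh "$dst" {} + &&
        # 2. Copy every regular file, skipping the write cache if possible.
        find . -type f -exec sh -c '
            dst=$1; shift
            for f do
                dd if="$f" of="$dst/$f" bs=8M oflag=direct 2>/dev/null ||
                    dd if="$f" of="$dst/$f" bs=8M 2>/dev/null || exit 1
                # Copy timestamps and permission bits from the source file.
                touch -r "$f" "$dst/$f"
                chmod --reference="$f" "$dst/$f"
            done' sh "$dst" {} + &&
        # 3. Fix up directories last, because step 2 changed their mtime.
        find . -depth -type d -exec sh -c '
            dst=$1; shift
            for d do
                touch -r "$d" "$dst/$d"
                chmod --reference="$d" "$dst/$d"
            done' sh "$dst" {} +
    )
}
```

With the variables defined earlier it would be called as copy_tree_uncached "$SOURCE" "$TARGET". Passing file names to sh as arguments, rather than embedding {} inside a longer string, keeps names with spaces intact.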

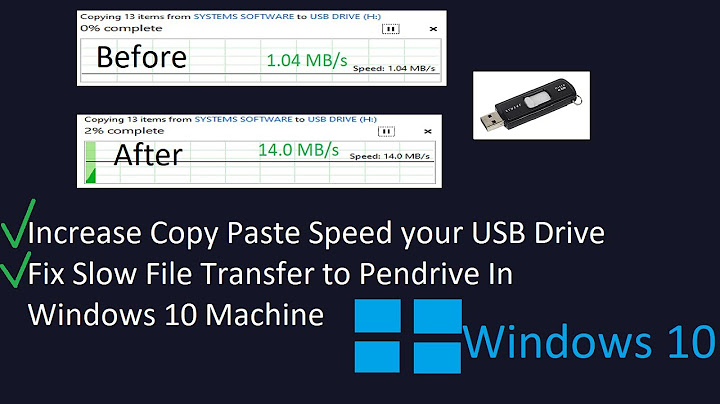

Related videos on Youtube

Veazer

Updated on September 18, 2022

Comments

- Veazer over 1 year

After adding the preload package, my applications seem to speed up, but if I copy a large file, the file cache grows by more than double the size of the file.

By transferring a single 3-4 GB VirtualBox image or video file to an external drive, this huge cache seems to remove all the preloaded applications from memory, leading to increased load times and general performance drops.

Is there a way to copy large, multi-gigabyte files without caching them (i.e. bypassing the file cache)? Or a way to whitelist or blacklist specific folders from being cached?

- nanofarad almost 12 years

Recursive could be done with zsh's ** operator. zsh needs to be installed manually from the repos.

- nanofarad almost 12 years

Actually, no. dd's weird syntax fouls up the ** operator. You could still use a shell script that takes arguments normally (dd.sh in.file out.file, with ** in the filenames) and passes the filenames to dd using $1, $2, etc., which should not be fouled by dd's weird syntax.

- Veazer almost 12 years

I was hoping for something that could be done via the GUI, as well as a way to simply blacklist the 'no cache' folders, but this will have to do for now.

- stolsvik over 11 years

Direct makes it very slow, since it AFAIK also disables the readahead caches, which is probably not what you want, and is not realistic in a benchmarking scenario either. Use "iflag=nocache oflag=nocache" instead, which says exactly to the OS that you don't need the in-file or the out-file cached.