Can Linux "run out of RAM"?

Solution 1

What might be happening if a process is "killed due to low RAM"?

It's sometimes said that Linux by default never denies requests for more memory from application code -- e.g. malloc().1 This is not in fact true; the default uses a heuristic whereby

Obvious overcommits of address space are refused. Used for a typical system. It ensures a seriously wild allocation fails while allowing overcommit to reduce swap usage.

From [linux_src]/Documentation/vm/overcommit-accounting (all quotes are from the 3.11 tree). Exactly what counts as a "seriously wild allocation" isn't made explicit, so we would have to go through the source to determine the details.

We could also use the experimental method in footnote 2 (below) to try and get some reflection of the heuristic -- based on that, my initial empirical observation is that under ideal circumstances (== the system is idle), if you don't have any swap, you'll be allowed to allocate about half your RAM, and if you do have swap, you'll get about half your RAM plus all of your swap. That is more or less per process (but note this limit is dynamic and subject to change because of state, see some observations in footnote 5).

Half your RAM plus swap is explicitly the default for the "CommitLimit" field in /proc/meminfo. Here's what it means -- and note it actually has nothing to do with the limit just discussed (from [src]/Documentation/filesystems/proc.txt):

CommitLimit: Based on the overcommit ratio ('vm.overcommit_ratio'), this is the total amount of memory currently available to be allocated on the system. This limit is only adhered to if strict overcommit accounting is enabled (mode 2 in 'vm.overcommit_memory'). The CommitLimit is calculated with the following formula: CommitLimit = ('vm.overcommit_ratio' * Physical RAM) + Swap For example, on a system with 1G of physical RAM and 7G of swap with a 'vm.overcommit_ratio' of 30 it would yield a CommitLimit of 7.3G.
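As a rough illustration of the formula quoted above, here is a minimal sketch that computes CommitLimit in kB. The function name commit_limit_kb and its parameters are made up for this example, and it only reproduces the documented formula with integer arithmetic; the kernel's own calculation may take more into account.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper, not a kernel or libc function.
   ratio_percent mirrors vm.overcommit_ratio, which is a percentage. */
uint64_t commit_limit_kb(uint64_t ram_kb, uint64_t swap_kb, unsigned ratio_percent)
{
    /* Guard the multiplication below against overflow. */
    assert(ratio_percent == 0 || ram_kb <= UINT64_MAX / ratio_percent);

    /* CommitLimit = (overcommit_ratio * Physical RAM) + Swap */
    return ram_kb * ratio_percent / 100 + swap_kb;
}
```

With the documentation's own example (1G of RAM, 7G of swap, ratio 30), commit_limit_kb(1048576, 7340032, 30) gives 7654604 kB, which is about 7.3G.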

The previously quoted overcommit-accounting doc states that the default vm.overcommit_ratio is 50. So if you sysctl vm.overcommit_memory=2, you can then adjust vm.overcommit_ratio (with sysctl) and see the consequences.3 The default mode, when CommitLimit is not enforced and only "obvious overcommits of address space are refused", is when vm.overcommit_memory=0.

While the default strategy does have a heuristic per-process limit preventing the "seriously wild allocation", it does leave the system as a whole free to get seriously wild, allocation wise.4 This means at some point it can run out of memory and have to declare bankruptcy to some process(es) via the OOM killer.

What does the OOM killer kill? Not necessarily the process that asked for memory when there was none, since that's not necessarily the truly guilty process, and more importantly, not necessarily the one that will most quickly get the system out of the problem it is in.

This is cited from here which probably cites a 2.6.x source:

    /*
     * oom_badness - calculate a numeric value for how bad this task has been
     *
     * The formula used is relatively simple and documented inline in the
     * function. The main rationale is that we want to select a good task
     * to kill when we run out of memory.
     *
     * Good in this context means that:
     * 1) we lose the minimum amount of work done
     * 2) we recover a large amount of memory
     * 3) we don't kill anything innocent of eating tons of memory
     * 4) we want to kill the minimum amount of processes (one)
     * 5) we try to kill the process the user expects us to kill, this
     *    algorithm has been meticulously tuned to meet the principle
     *    of least surprise ... (be careful when you change it)
     */

Which seems like a decent rationale. However, without getting forensic, #5 (which is redundant of #1) seems like a tough sell implementation wise, and #3 is redundant of #2. So it might make sense to consider this pared down to #2/3 and #4.

I grepped through a recent source (3.11) and noticed that this comment has changed in the interim:

    /**
     * oom_badness - heuristic function to determine which candidate task to kill
     *
     * The heuristic for determining which task to kill is made to be as simple and
     * predictable as possible. The goal is to return the highest value for the
     * task consuming the most memory to avoid subsequent oom failures.
     */

This is a little more explicitly about #2: "The goal is to [kill] the task consuming the most memory to avoid subsequent oom failures," and by implication #4 ("we want to kill the minimum amount of processes (one)").
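To make the "highest value for the task consuming the most memory" idea concrete, here is a toy model of the selection step. It is only a sketch: struct task_info and pick_victim are invented for this example, and the real oom_badness() considers more than resident and swapped pages.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified task record; not a kernel structure. */
struct task_info {
    int pid;
    unsigned long rss_pages;   /* pages currently resident in RAM */
    unsigned long swap_pages;  /* pages currently held in swap */
};

/* Return the index of the task using the most memory, or -1 if n == 0. */
int pick_victim(const struct task_info *tasks, size_t n)
{
    assert(tasks != NULL || n == 0);

    int victim = -1;
    unsigned long worst = 0;
    for (size_t i = 0; i < n; i++) {
        /* Toy "badness": total pages the task is holding. */
        unsigned long badness = tasks[i].rss_pages + tasks[i].swap_pages;
        if (victim < 0 || badness > worst) {
            worst = badness;
            victim = (int)i;
        }
    }
    return victim;
}
```

Killing the single largest consumer serves both goals at once: it recovers the most memory (#2) and makes it likely that one kill is enough (#4).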

If you want to see the OOM killer in action, see footnote 5.

1 A delusion Gilles thankfully rid me of, see comments.

2 Here's a straightforward bit of C which asks for increasingly large chunks of memory to determine when a request for more will fail:

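    #include <inttypes.h>
    #include <stdint.h>
    #include <stdio.h>
    #include <stdlib.h>

    /* Parenthesized so the macro stays safe inside larger expressions. */
    #define MB (1 << 20)

    int main (void) {
        uint64_t bytes = MB;
        void *p = malloc(bytes);

        /* Ask for 1 MB more each round until malloc() refuses. */
        while (p) {
            /* PRIu64 keeps the format correct on 32-bit systems too. */
            fprintf (stderr, "%" PRIu64 " kB allocated.\n", bytes / 1024);
            free(p);
            bytes += MB;
            p = malloc(bytes);
        }
        fprintf (stderr, "Failed at %" PRIu64 " kB.\n", bytes / 1024);
        return 0;
    }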

If you don't know C, you can compile this with gcc virtlimitcheck.c -o virtlimitcheck, then run ./virtlimitcheck. It is completely harmless, as the process doesn't use any of the space it asks for -- i.e., it never really uses any RAM.

On a 3.11 x86_64 system with 4 GB of RAM and 6 GB of swap, I failed at ~7400000 kB; the number fluctuates, so perhaps state is a factor. This is coincidentally close to the CommitLimit in /proc/meminfo, but modifying this via vm.overcommit_ratio does not make any difference. On a 3.6.11 32-bit ARM 448 MB system with 64 MB of swap, however, I fail at ~230 MB. This is interesting since in the first case the amount is almost double the amount of RAM, whereas in the second it is about 1/4 that -- strongly implying the amount of swap is a factor. This was confirmed by turning swap off on the first system, when the failure threshold went down to ~1.95 GB, a very similar ratio to the little ARM box.

But is this really per process? It appears to be. The short program below asks for a user defined chunk of memory, and if it succeeds, waits for you to hit return -- this way you can try multiple simultaneous instances:

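    #include <stdio.h>
    #include <stdlib.h>

    /* Parenthesized, and unsigned long so large sizes don't overflow int. */
    #define MB (1UL << 20)

    int main (int argc, const char *argv[]) {
        /* Require the size argument instead of reading a NULL argv[1]. */
        if (argc < 2) {
            fprintf(stderr, "Usage: %s <megabytes>\n", argv[0]);
            return 1;
        }
        unsigned long int megabytes = strtoul(argv[1], NULL, 10);
        void *p = malloc(megabytes * MB);
        fprintf(stderr,"Allocating %lu MB...", megabytes);

        if (!p) fprintf(stderr,"fail.");
        else {
            fprintf(stderr,"success.");
            getchar();  /* hold the allocation until return is pressed */
            free(p);
        }
        return 0;
    }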

Beware, however, that it is not strictly about the amount of RAM and swap regardless of use -- see footnote 5 for observations about the effects of system state.

3 CommitLimit refers to the amount of address space allowed for the system when vm.overcommit_memory = 2. Presumably then, the amount you can allocate should be that minus what's already committed, which is apparently the Committed_AS field.

A potentially interesting experiment demonstrating this is to add #include <unistd.h> to the top of virtlimitcheck.c (see footnote 2), and a fork() right before the while() loop. That is not guaranteed to work as described here without some tedious synchronization, but there is a decent chance it will, YMMV:

    > sysctl vm.overcommit_memory=2
    vm.overcommit_memory = 2
    > cat /proc/meminfo | grep Commit
    CommitLimit: 9231660 kB
    Committed_AS: 3141440 kB
    > ./virtlimitcheck 2> tmp.txt
    > cat tmp.txt | grep Failed
    Failed at 3051520 kB.
    Failed at 6099968 kB.

This makes sense -- looking at tmp.txt in detail you can see the processes alternate their bigger and bigger allocations (this is easier if you throw the pid into the output) until one, evidently, has claimed enough that the other one fails. The winner is then free to grab everything up to CommitLimit minus Committed_AS.
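The "CommitLimit minus Committed_AS" figure can be computed from /proc/meminfo-style text. The following is a minimal sketch; meminfo_kb and commit_headroom_kb are names invented for this example, and the parser assumes the "Key:   value kB" layout shown in the transcript above.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Find "Key:  12345 kB" in meminfo-style text.
   Returns the value in kB, or -1 if the key is missing or malformed. */
long long meminfo_kb(const char *text, const char *key)
{
    assert(text != NULL && key != NULL);

    size_t klen = strlen(key);
    const char *line = text;
    while (line && *line) {
        if (strncmp(line, key, klen) == 0 && line[klen] == ':') {
            long long kb;
            /* %lld skips the padding spaces after the colon. */
            return sscanf(line + klen + 1, "%lld", &kb) == 1 ? kb : -1;
        }
        line = strchr(line, '\n');  /* advance to the next line, if any */
        if (line) line++;
    }
    return -1;
}

/* Address space still available under strict accounting (mode 2), in kB. */
long long commit_headroom_kb(const char *meminfo_text)
{
    long long limit = meminfo_kb(meminfo_text, "CommitLimit");
    long long used  = meminfo_kb(meminfo_text, "Committed_AS");
    if (limit < 0 || used < 0) return -1;
    return limit - used;
}
```

Fed the two lines from the transcript, this yields 9231660 - 3141440 = 6090220 kB, which is close to the 6099968 kB at which the second process failed; Committed_AS moves while the test runs, so an exact match is not expected.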

4 It's worth mentioning, at this point, if you do not already understand virtual addressing and demand paging, that what makes over commitment possible in the first place is that what the kernel allocates to userland processes isn't physical memory at all -- it's virtual address space. For example, if a process reserves 10 MB for something, that's laid out as a sequence of (virtual) addresses, but those addresses do not yet correspond to physical memory. When such an address is accessed, this results in a page fault and then the kernel attempts to map it onto real memory so that it can store a real value. Processes usually reserve much more virtual space than they actually access, which allows the kernel to make the most efficient use of RAM. However, physical memory is still a finite resource and when all of it has been mapped to virtual address space, some virtual address space has to be eliminated to free up some RAM.
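The difference between reserving address space and actually using it can be sketched as follows. The helper reserve_and_touch is invented for this example; it takes the page size as a parameter (4096 is a common value, and sysconf(_SC_PAGESIZE) reports the real one), and since malloc() does not return page-aligned memory the "one write per page" is only approximate.

```c
#include <assert.h>
#include <stdlib.h>

/* Reserve `bytes` with malloc(), then write one byte per page so that each
   page has to be backed by real memory. Returns the number of writes made,
   or 0 if the reservation itself was refused. */
size_t reserve_and_touch(size_t bytes, size_t page_size)
{
    assert(page_size > 0);

    /* volatile keeps the compiler from discarding the writes. */
    volatile unsigned char *p = malloc(bytes);
    if (!p) return 0;

    size_t touched = 0;
    for (size_t off = 0; off < bytes; off += page_size) {
        p[off] = 1;  /* first access to a page triggers the page fault */
        touched++;
    }
    free((void *)p);
    return touched;
}
```

Until the loop runs, the process has only been promised addresses; it is the writes that make the kernel find physical pages, which is why the programs in footnote 2 are harmless and the one in footnote 5 is not.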

5 First a warning: If you try this with vm.overcommit_memory=0, make sure you save your work first and close any critical applications, because the system will be frozen for ~90 seconds and some process will die!

The idea is to run a fork bomb that times out after 90 seconds, with the forks allocating space and some of them writing large amounts of data to RAM, all the while reporting to stderr.

    #include <stdio.h>
    #include <unistd.h>
    #include <stdlib.h>
    #include <sys/time.h>
    #include <errno.h>
    #include <string.h>

    /* 90 second "Verbose hungry fork bomb".
    Verbose -> It jabbers.
    Hungry -> It grabs address space, and it tries to eat memory.

    BEWARE: ON A SYSTEM WITH 'vm.overcommit_memory=0', THIS WILL FREEZE EVERYTHING
    FOR THE DURATION AND CAUSE THE OOM KILLER TO BE INVOKED. CLOSE THINGS YOU CARE
    ABOUT BEFORE RUNNING THIS. */

    #define STEP (1 << 30) // 1 GB
    #define DURATION 90

    time_t now () {
        struct timeval t;
        if (gettimeofday(&t, NULL) == -1) {
            fprintf(stderr,"gettimeofday() fail: %s\n", strerror(errno));
            return 0;
        }
        return t.tv_sec;
    }

    int main (void) {
        int forks = 0;
        int i;
        unsigned char *p;
        pid_t pid, self;
        time_t check;
        const time_t start = now();
        if (!start) return 1;

        while (1) {
            // Get our pid and check the elapsed time.
            self = getpid();
            check = now();
            if (!check || check - start > DURATION) return 0;
            fprintf(stderr,"%d says %d forks\n", self, forks++);

            // Fork; the child should get its correct pid.
            pid = fork();
            if (!pid) self = getpid();

            // Allocate a big chunk of space.
            p = malloc(STEP);
            if (!p) {
                fprintf(stderr, "%d Allocation failed!\n", self);
                return 0;
            }
            fprintf(stderr,"%d Allocation succeeded.\n", self);

            // The child will attempt to use the allocated space. Using only
            // the child allows the fork bomb to proceed properly.
            if (!pid) {
                for (i = 0; i < STEP; i++) p[i] = i % 256;
                fprintf(stderr,"%d WROTE 1 GB\n", self);
            }
        }
    }

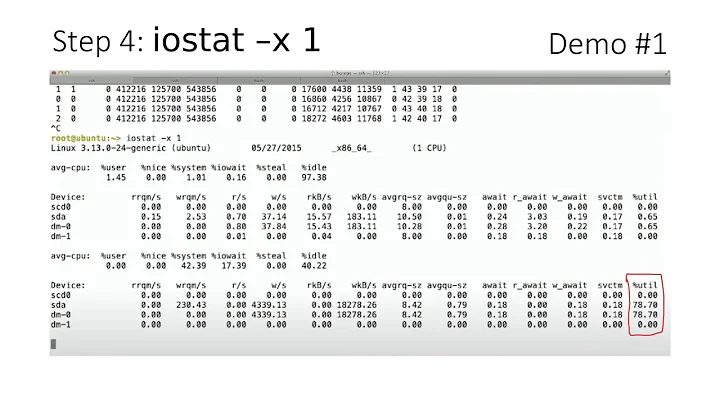

Compile this with gcc forkbomb.c -o forkbomb. First, try it with sysctl vm.overcommit_memory=2 -- you'll probably get something like:

    6520 says 0 forks
    6520 Allocation succeeded.
    6520 says 1 forks
    6520 Allocation succeeded.
    6520 says 2 forks
    6521 Allocation succeeded.
    6520 Allocation succeeded.
    6520 says 3 forks
    6520 Allocation failed!
    6522 Allocation succeeded.

In this environment, this kind of fork bomb doesn't get very far. Note that the number in "says N forks" is not the total number of processes, it is the number of processes in the chain/branch leading up to that one.

Now try it with vm.overcommit_memory=0. If you redirect stderr to a file, you can do some crude analysis afterward, e.g.:

    > cat tmp.txt | grep failed
    4641 Allocation failed!
    4646 Allocation failed!
    4642 Allocation failed!
    4647 Allocation failed!
    4649 Allocation failed!
    4644 Allocation failed!
    4643 Allocation failed!
    4648 Allocation failed!
    4669 Allocation failed!
    4696 Allocation failed!
    4695 Allocation failed!
    4716 Allocation failed!
    4721 Allocation failed!

Only 15 processes failed to allocate 1 GB -- demonstrating that the heuristic for overcommit_memory = 0 is affected by state. How many processes were there? Looking at the end of tmp.txt, probably > 100,000. Now how many actually got to use the 1 GB?

    > cat tmp.txt | grep WROTE
    4646 WROTE 1 GB
    4648 WROTE 1 GB
    4671 WROTE 1 GB
    4687 WROTE 1 GB
    4694 WROTE 1 GB
    4696 WROTE 1 GB
    4716 WROTE 1 GB
    4721 WROTE 1 GB

Eight -- which again makes sense, since at the time I had ~3 GB RAM free and 6 GB of swap.

Have a look at your system logs after you do this. You should see the OOM killer reporting scores (amongst other things); presumably this relates to oom_badness.

Solution 2

This won't happen to you if you only ever load 1G of data into memory. What if you load much much more? For example, I often work with huge files containing millions of probabilities which need to be loaded into R. This takes about 16GB of RAM.

Running the above process on my laptop will cause it to start swapping like crazy as soon as my 8GB of RAM have been filled. That, in turn, will slow everything down because reading from disk is much slower than reading from the RAM. What if I have a laptop with 2GB of RAM and only 10GB of free space? Once the process has taken all the RAM, it will also fill up the disk because it is writing to swap and I am left with no more RAM and no more space to swap into (people tend to limit swap to a dedicated partition rather than a swapfile for exactly that reason). That's where the OOM killer comes in and starts killing processes.

So, the system can indeed run out of memory. Furthermore, heavily swapping systems can become unusable long before this happens simply because of the slow I/O operations due to swapping. One generally wants to avoid swapping as much as possible. Even on high end servers with fast SSDs there is a clear decrease in performance. On my laptop, which has a classic 7200RPM drive, any significant swapping essentially renders the system unusable. The more it swaps, the slower it gets. If I don't kill the offending process quickly everything hangs until the OOM killer steps in.

Solution 3

Processes aren't killed when there is no more RAM, they are killed when they have been cheated this way:

- The Linux kernel commonly allows processes to allocate (i.e. reserve) an amount of virtual memory which is larger than what is really available (part of RAM + all the swap area).

- As long as the processes only access a subset of the pages they have reserved, everything runs fine.

- If, after some time, a process tries to access a page it owns but no more pages are free, an out of memory situation occurs.

- The OOM killer selects one of the processes, not necessarily the one that requested a new page, and just kills it to recover virtual memory.

This might happen even while the system is not actively swapping, for example if the swap area is filled with sleeping daemons' memory pages.

This never happens on OSes that do not overcommit memory. With them no random process is killed; instead, the first process asking for virtual memory while it is exhausted gets an error back from malloc (or similar). It is thus given a chance to properly handle the situation. However, on these OSes, it might also happen that the system runs out of virtual memory while there is still free RAM, which is quite confusing and generally misunderstood.
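"A chance to properly handle the situation" means the application checks the result of malloc and reacts. One possible reaction, sketched below, is to retry with a smaller request; largest_allocatable is a name invented for this example, and halving is just one policy among many.

```c
#include <assert.h>
#include <stdlib.h>

/* Try to allocate `want` bytes; on failure keep halving the request until
   it succeeds or drops below `min_bytes`. Returns the size that worked
   (the probe allocation is freed again), or 0 if nothing did. */
size_t largest_allocatable(size_t want, size_t min_bytes)
{
    assert(min_bytes > 0);  /* also guarantees the loop terminates */

    for (size_t n = want; n >= min_bytes; n /= 2) {
        void *p = malloc(n);
        if (p) {            /* the allocator said yes */
            free(p);
            return n;
        }
        /* NULL: the request was refused, so fall back to a smaller one. */
    }
    return 0;
}
```

On a system that overcommits, a non-NULL return is only a promise of address space, so a check like this cannot protect a process from the OOM killer; it is useful where the allocator refuses up front.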

Solution 4

When the available RAM is exhausted, the kernel starts swapping out parts of process memory to disk. Actually, the kernel starts swapping when the RAM is near exhaustion: it starts swapping proactively when it has an idle moment, so as to be more responsive if an application suddenly requires more memory.

Note that the RAM isn't used only to store the memory of processes. On a typical healthy system, only about half the RAM is used by processes, and the other half is used for disk cache and buffers. This provides a good balance between running processes and file input/output.

The swap space is not infinite. At some point, if processes keep allocating more and more memory, the spillover data from RAM will fill the swap. When that happens, processes that try to request more memory see their request denied.

By default, Linux overcommits memory. This means that it will sometimes allow a process to run with memory that it has reserved, but not used. The main reason for having overcommitment is the way forking works. When a process launches a subprocess, the child process conceptually operates in a replica of the memory of the parent — the two processes initially have memory with the same content, but that content will diverge as the processes make changes each in their own space. To implement this fully, the kernel would have to copy all the memory of the parent. This would make forking slow, so the kernel practices copy-on-write: initially, the child shares all of its memory with the parent; whenever either process writes to a shared page, the kernel creates a copy of that page to break the sharing.

Often a child will leave many pages untouched. If the kernel allocated enough memory to replicate the parent's memory space on each fork, a lot of memory would be wasted in reservations that child processes aren't ever going to use. Hence overcommitting: the kernel only reserves part of that memory, based on an estimate of how many pages the child will need.
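The semantics that copy-on-write has to preserve can be observed from user space: after a fork, a write in the child must not be visible in the parent. The sketch below shows only that visible behaviour, not the kernel's page handling, and parent_value_after_child_write is a name invented for this example.

```c
#include <assert.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Fork, let the child overwrite its copy of `value`, and return what the
   parent sees afterwards. Returns -1 if fork() or waitpid() fails. */
int parent_value_after_child_write(void)
{
    volatile int value = 1;

    pid_t pid = fork();
    if (pid < 0) return -1;

    if (pid == 0) {
        value = 2;  /* lands in the child's private copy of the page */
        _exit(0);   /* leave without running the parent's cleanup */
    }

    int status;
    if (waitpid(pid, &status, 0) < 0) return -1;
    assert(WIFEXITED(status));

    return value;   /* the parent's copy is unchanged */
}
```

The parent still sees 1 here. Pages that neither process writes to never need a second physical copy, which is the saving that overcommitting on fork relies on.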

If a process tries to allocate some memory and there is not enough memory left, the process receives an error response and deals with it as it sees fit. If a process indirectly requests memory by writing to a shared page that must be unshared, it's a different story. There is no way to report this situation to the application: it believes that it has writable data there, and could even read it -- it's just that writing involves some slightly more elaborate operation under the hood. If the kernel is unable to provide a new memory page, all it can do is kill the requesting process, or kill some other process to free up memory.

You might think at this point that killing the requesting process is the obvious solution. But in practice, this is not so good. The process may be an important one that happens to only need to access one of its pages now, while there may be other, less important processes running. So the kernel includes complex heuristics to choose which processes to kill — the (in)famous OOM killer.

Solution 5

Just to add another viewpoint to the other answers: many VPS providers host several virtual machines on any given server. Each VM has a specified amount of RAM for its own use. Many providers offer "burst RAM", which lets a VM use RAM beyond its designated amount. This is meant for short-term use only, and those who exceed their allotment for an extended amount of time may be penalized by the host killing off processes to bring down the amount of RAM in use, so that others do not suffer from the host machine being overloaded.


themirror

Updated on September 18, 2022

Comments

-

themirror over 1 year

I saw several posts around the web of people apparently complaining about a hosted VPS unexpectedly killing processes because they used too much RAM.

How is this possible? I thought all modern OSes provide "infinite RAM" by just using disk swap for whatever goes over the physical RAM. Is this correct?

What might be happening if a process is "killed due to low RAM"?

-

Admin over 10 years

No OS has infinite RAM. In addition to the physical RAM chips in the machine, OSes can -- usually, optionally -- use a so-called 'swap file' which is on disk. When a computer needs more memory than it has in RAM sticks, it swaps some stuff out to the swap file. But as the swap file reaches its capacity -- either because you set a maximum size (typical) or the disk fills up -- you run out of virtual memory.

Admin over 10 years

@JohnDibling; so is there any reason one would want to limit the swap size other than to save disk space for the filesystem? In other words if I have a 20GB disk and only 1GB of files, is there any reason not to set my swap size to 19GB?

Admin over 10 years

To oversimplify things, I would say the two reasons to limit swap size are 1) to reduce disk consumption and 2) to increase performance. The latter might be more true under Windows than /*NIX, but then again, if you are using the swap space on your disk, your performance is degraded. Disk access is either slower than RAM, or much slower than RAM, depending on the system.

Admin over 10 years

Swap is not RAM. en.wikipedia.org/wiki/Random-access_memory The amount of RAM in your system is the amount of RAM in your system -- period. It is not an ambiguous or dynamic volume. It is absolutely fixed. "Memory" is a more ambiguous concept, but the distinction between RAM and other forms of storage is, as terdon (+1) points out, pretty significant. Disk swap cannot substitute for the performance of RAM by many orders of magnitude. A system which depends excessively on swap is at best temporary and in general: garbage.

Admin over 10 years

So disk space is infinite now?

-

-

jlliagre over 10 years

swapping out is not a solution (or even related) to memory over commitment. Memory allocation (eg: malloc) is about requesting virtual memory to be reserved, not physical one.

goldilocks over 10 years

@jillagre : "swapping out is not a solution (or even related) to memory over commitment" -> Yes, actually it is. Infrequently used pages are swapped out of RAM, leaving more RAM available to deal with page faults caused by demand paging/allocation (which is the mechanism that makes over commitment possible). The swapped out pages may also have to be demand paged back into RAM at some point.

goldilocks over 10 years

"Memory allocation (eg: malloc) is about requesting virtual memory to be reserved, not physical one." -> Right, but the kernel could (and optionally, will) say no when there are no physical mappings left. It certainly would not be because a process has run out of virtual address space (or at least not usually, since that is possible too, at least on 32-bit systems).

Thomas Nyman over 10 years

No process is run from swap. The virtual memory is divided into equally sized distinct units called pages. When physical memory is freed, low priority pages are evicted from RAM. While pages in the file cache have file system backing, anonymous pages must be stored in swap. The priority of a page is not directly related to the priority of the process it belongs to, but how often it is used. If a running process tries to access a page not in physical memory, a page fault is generated and process is preempted in favor of another process while the needed page(s) are fetched from disk.

-

jlliagre over 10 years

Demand paging is not what makes memory over commitment possible. Linux certainly over commit memory on systems with no swap area at all. You might be confusing memory over commitment and demand paging. If Linux says "no" to malloc with a 64 bit process, i.e. if it is not configured to always overcommit, that would be either because of memory corruption or because the sum of all memory reservations (whether mapped or not to RAM or disk) is over a threshold threshold depending on the configuration. This has nothing to do with RAM usage as it might happen even while there is still free RAM.

goldilocks over 10 years

"Demand paging is not what makes memory over commitment possible." -> Perhaps it would be better to say that it is virtual addressing which makes both demand paging and over commitment possible. "Linux certainly over commit memory on systems with no swap area at all." -> Obviously, since demand paging does not require swap; demand paging from swap is just a special instance of demand paging. Once again, swap is a solution to over commitment, not in the sense that it solves the problem, but in the sense that will help to prevent potential OOM events that might result from over commitment.

goldilocks over 10 years

"over a threshold threshold depending on the configuration" Correct, and that threshold is the total swap space (!) plus a percentage of the system RAM. In other words, when overcommit is disabled, when the address space committed reaches that threshold it is an indication of RAM usage -- or at least, RAM commitment (see src/Documentation/vm/overcommit-accounting). Again, it is out of (potential) physical mappings. So when overcommitment is discouraged, swap space increases the amount of space that can be committed. That's a solution to a problem.

jlliagre over 10 years

Usage != Commitment. Most of the RAM can still be unused while the threshold is hit. Please clarify what you mean with "memory" in your first and second sentence. As you rightly wrote in an earlier comment, "memory" is ambiguous. You should state whether it is RAM or virtual memory what you are talking about.

goldilocks over 10 years

If overcommit is disabled, the allocation of virtual space leads to a commitment of RAM space -- because the maximum commitment is directly proportional to the amount of available RAM. So I (explicitly) equated usage with commitment. If you write an appointment on your calendar, you've committed yourself and that date is used up. If you want to define "usage" to mean "that day has actually passed", then by analogy RAM "usage" would mean physical pages that are actually mapped to virtual pages. But that is not what I was discussing.

jlliagre over 10 years

A RAM page containing data is used. If its content is irrelevant and can be overwritten, it is free. Same definition apply to the swap space. When a process call malloc, it asks for an amount of virtual memory. This virtual memory has no predefined physical "location", unlike your calendar analogy, it is just an amount (one day vs that day). The maximum memory that can be committed with no risk is equal to the size of disk space that can be used to paginate VM plus the size of non locked, non kernel RAM. It is then not directly proportional to the RAM size, just partially related.

goldilocks (over 10 years ago): I know what virtual address space is, jlliagre. My point was, with overcommit disabled -- which is NOT the default scenario -- the total amount of virtual address space that the system will allocate is explicitly limited to a fixed percentage of the total amount of physical RAM (plus swap) -- again, see src/Documentation/vm/overcommit-accounting. That IS DIRECTLY PROPORTIONAL. "A fixed percent of something" is pretty much the definition of the term directly proportional. But this really no longer has anything to do with my answer...

jlliagre (over 10 years ago): If you know what I mean, why don't you correct the ambiguities that are present in your first two paragraphs? By the way, "directly proportional to a*x+b" is different from "directly proportional to a" unless b is zero or b is itself proportional to a, which might be the case for the OP but is not the usual one. Moreover, despite being documented that way, this setting doesn't really "disable overcommit" but actually "cap commitment". There is no way to be sure Linux will never overcommit unless you choose a very low (ideally null) overcommit_ratio, but then you waste swap or even RAM.
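
The two positions above can be checked against the CommitLimit formula quoted from proc.txt in the answer. The following is a minimal sketch, not kernel code: `commit_limit` is a hypothetical helper, and it assumes the simple formula from that quote (ratio times RAM, plus swap). It suggests the limit is directly proportional to RAM only when there is no swap; with swap it has the a*x+b shape, where x is RAM, a is the ratio, and b is swap.

```python
def commit_limit(ram, swap, overcommit_ratio=50):
    """Return (overcommit_ratio / 100) * ram + swap, in the same unit as the inputs.

    Assumption: this mirrors only the simple formula quoted from proc.txt;
    it is an illustration, not what the kernel computes in every version.
    """
    return ram * overcommit_ratio / 100 + swap


if __name__ == "__main__":
    # The documentation's example: 1G of RAM, 7G of swap, ratio 30.
    print("1G RAM, 7G swap:", commit_limit(1, 7, 30))

    # Doubling RAM with swap held fixed does not double the limit.
    print("2G RAM, 7G swap:", commit_limit(2, 7, 30))

    # With no swap, the limit scales exactly with RAM.
    print("1G vs 2G RAM, no swap:", commit_limit(1, 0, 30), commit_limit(2, 0, 30))
```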

goldilocks (over 10 years ago): Because I was not intending to write the definitive guide to linux mm. Ambiguities in other people's prose bug me too, but I understand the need for brevity. That the term memory can be colloquial and ambiguous does not make it useless or meaningless. For example, in the first paragraph, the distinction between virtual space and physical memory is not very important in context...

goldilocks (over 10 years ago): ...I could have written a preliminary paragraph introducing that distinction and gone from there, but the general point is that OOM killing happens on a default system because the kernel has overcommitted itself by not saying no. That's the general point. You don't actually need to understand demand paging to understand that -- although it does leave a bit of ambiguity about the details of how the kernel accomplishes this. Think about an infinite regress and the difference between clear and confusing.

goldilocks (over 10 years ago): @jlliagre: Okay, I gave in and added a footnote. TBH I think I did a great job of avoiding the topic of virtual addressing as that's normally something I jump on -- to the point of distraction, perhaps ;) If you have issues with the footnote, lol, I am available to chat...

Gilles 'SO- stop being evil' (over 10 years ago): “Linux by default never denies requests for more memory from application code”. No, this is wrong. By default, Linux applies an intermediate policy where it overcommits some memory but denies allocations that cannot be specified. There is a mode to never deny requests (except some ridiculously large requests), but it is not the default mode and it is only recommended for some niche use cases.

goldilocks (over 10 years ago): @Gilles By "cannot be specified" do you mean the amount? I'm not denying you are correct, since I notice that on this 4 GB, 64-bit system I get turned down somewhere between 7 and 8 GB. So I'm happy to edit this, but any details you can provide would be appreciated.

Gilles 'SO- stop being evil' (over 10 years ago): s/specified/satisfied/. You can check the kernel documentation or the source: the default for the sysctl vm.overcommit_memory is 0, meaning “use heuristics” (and not 1, meaning “(almost) always say yes”).
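
To see which of these modes a given machine is using, the sysctl can be read from /proc/sys/vm/overcommit_memory. Here is a minimal sketch; the helper name `describe_overcommit_mode` and the wording of the descriptions are illustrative assumptions, while the mode numbers follow the comments above and the overcommit-accounting doc cited in the answer.

```python
from pathlib import Path

# Mode numbers as discussed above; the description strings are paraphrases.
MODES = {
    0: "heuristic overcommit (the default)",
    1: "always overcommit ((almost) always say yes)",
    2: "strict accounting (commitment capped at CommitLimit)",
}


def describe_overcommit_mode(raw):
    """Map the raw text of the overcommit_memory sysctl to a description."""
    value = int(raw.strip())
    return MODES.get(value, f"unknown mode {value}")


if __name__ == "__main__":
    path = Path("/proc/sys/vm/overcommit_memory")
    if path.exists():
        print(describe_overcommit_mode(path.read_text()))
    else:
        # Not a Linux system, or /proc is not mounted.
        print("vm.overcommit_memory is not available here")
```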

goldilocks (over 10 years ago): @Gilles Cheers, and thanks again. Ironic that I actually cited that doc earlier. Anyway, I took some time to do this right -- if you have time, have a look at the (revised) first few paragraphs.

jlliagre (over 10 years ago): Good job goldilocks, you did your homework ;-)! Thanks for finally removing your debatable first paragraphs.

SoreDakeNoKoto (almost 5 years ago): Great answer, but could you clarify one point: when vm.overcommit_memory = 2, is the CommitLimit a system-wide value or is it per-process? That is, the sum of all the memory requested by and granted to all the running processes must not exceed CommitLimit, right? It makes more sense that it should be system-wide, but I saw "per process" in your answer so I'm a bit confused. Thanks.
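
The proc.txt passage quoted in the answer describes CommitLimit as the total amount of memory available to be allocated "on the system", which reads as a system-wide figure. One way to look at it is to compare the CommitLimit and Committed_AS fields of /proc/meminfo. The sketch below is only an illustration: `parse_meminfo` and `commit_headroom_kb` are hypothetical helper names, and the sample numbers are made up.

```python
from pathlib import Path


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into {field name: integer value}.

    Most fields are reported in kB; the unit suffix is ignored here.
    """
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields


def commit_headroom_kb(fields):
    """Return CommitLimit minus Committed_AS, both system-wide totals in kB."""
    return fields["CommitLimit"] - fields["Committed_AS"]


if __name__ == "__main__":
    path = Path("/proc/meminfo")
    if path.exists():
        info = parse_meminfo(path.read_text())
    else:
        # Made-up sample so the sketch also runs where /proc is absent.
        info = parse_meminfo("CommitLimit: 3000000 kB\nCommitted_AS: 1200000 kB\n")
    print("CommitLimit  (kB):", info["CommitLimit"])
    print("Committed_AS (kB):", info["Committed_AS"])
    print("Headroom     (kB):", commit_headroom_kb(info))
```

As the quoted documentation notes, the limit is only adhered to in mode 2, so in the other modes the headroom figure can be negative.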