Can we load Parquet file into Hive directly?

Solution 1

Get schema of the parquet file using parquet tools, for details check link http://kitesdk.org/docs/0.17.1/labs/4-using-parquet-tools-solution.html

and build table using the schema on the top of the file, for details check Create Hive table to read parquet files from parquet/avro schema

Solution 2

Getting the schema is crucial, as you will have to create the table with the appropriate schema first in Hive and then point it to the parquet files.

I had a similar problem, where I had data in one VM and had to move it to another. Here is my walkthrough:

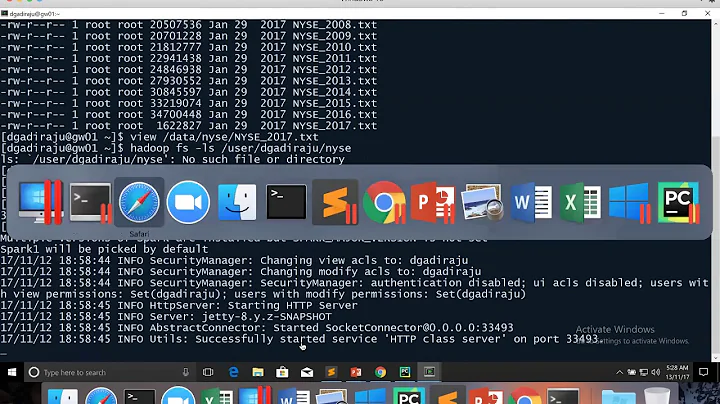

Find out about original Parquet files are (location and schema):

describe formatted users;andshow create table users;The latter will get you the schema right away and also point you to the location of HDFShdfs://hostname:port/apps/hive/warehouse/usersFind out about the partitioning of your table

show partitions users;-

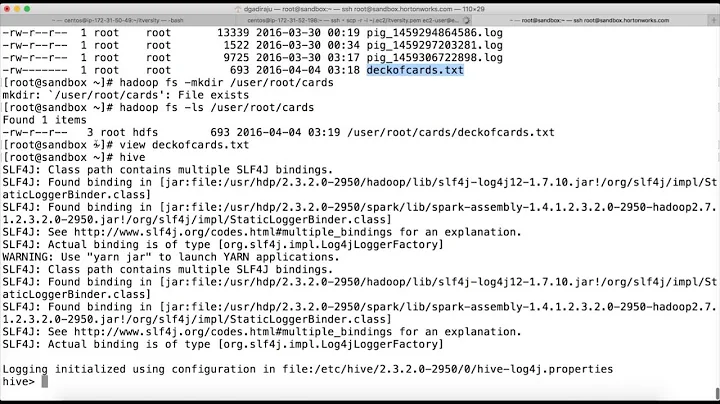

Copy the table's Parquet files from HDFS to a local directory

hdfs dfs -copyToLocal /apps/hive/warehouse/users Move them across to the other cluster/VM or where you want them to go

-

Create the users table on your destination

CREATE USERS ...by using the same schemaCREATE TABLE users ( name string, ... ) PARTITIONED BY (...) STORED AS PARQUET; -

Now, move the Parquet files in the respective folder (if needed find out about the location of the table you've just created)

hdfs dfs -ls /apps/hive/warehouse/users/ hdfs dfs -copyFromLocal ../temp/* /apps/hive/warehouse/ For each partition, you'll have to point Hive to the respective subdirectory:

alter table users add partition (sign_up_date='19991231') location '/apps/hive/warehouse/users/sign_up_date=19991231';(you might want to do this with a bash script)

That worked for me, hope it helps.

Solution 3

Don't know if it's a bit "hacky" but I use zeppelin (shipped with ambari). You can simply do the following in combination with spark2:

%spark2

import org.apache.spark.sql.SaveMode

var df = spark.read.parquet("hdfs:///my_parquet_files/*.parquet");

df.write.mode(SaveMode.Overwrite).saveAsTable("imported_table")

The advantage of this way is that you can also import many parquet files even if they have a different schema.

Related videos on Youtube

annunarcist

I enjoy learning/reading/watching anything that is new - be it about computer science concepts or history or politics or movies etc.

Updated on November 11, 2020Comments

-

annunarcist over 3 years

I know we can load parquet file using Spark SQL and using Impala but wondering if we can do the same using Hive. I have been reading many articles but I am still confused.

Simply put, I have a parquet file - say users.parquet. Now I am struck here on how to load/insert/import data from the users.parquet into hive (obviously into a table).

Please advise or point me in right direction if I am missing something obvious.

Creating hive table using parquet file metadata

https://phdata.io/examples-using-textfile-and-parquet-with-hive-and-impala/

-

Samson Scharfrichter over 8 yearsA trick I have done with ORC files (to clone a Prod table into a Test cluster, actually): create an non-partitioned table with the same exact structure; copy the data file(s) to the directory used by the new table; voila, the table is populated. Might work with Parquet too.

Samson Scharfrichter over 8 yearsA trick I have done with ORC files (to clone a Prod table into a Test cluster, actually): create an non-partitioned table with the same exact structure; copy the data file(s) to the directory used by the new table; voila, the table is populated. Might work with Parquet too.

-

-

soMuchToLearnAndShare over 4 yearsi believe, above way is creating hive managed table instead of external table.

soMuchToLearnAndShare over 4 yearsi believe, above way is creating hive managed table instead of external table. -

yuzhen almost 4 yearscould you show us the complete code? I have no idea where to set up hive connection in this code

-

Fabian almost 4 yearsMy answer js from 2017 and meanwhile it could be that lot of stuff changed in spark, but If I remember correctly you don't need to set up your connection if you use zeppelin like I wrote (you can use the interpreter settings etc.) .. if you dont use zeppelin please search for setting up hive connection because I'm not really up to date with the lastest spark/hive version

Fabian almost 4 yearsMy answer js from 2017 and meanwhile it could be that lot of stuff changed in spark, but If I remember correctly you don't need to set up your connection if you use zeppelin like I wrote (you can use the interpreter settings etc.) .. if you dont use zeppelin please search for setting up hive connection because I'm not really up to date with the lastest spark/hive version -

Vitalis over 3 yearsI used this same pipeline coded as a python ELT pipeline.

Vitalis over 3 yearsI used this same pipeline coded as a python ELT pipeline.