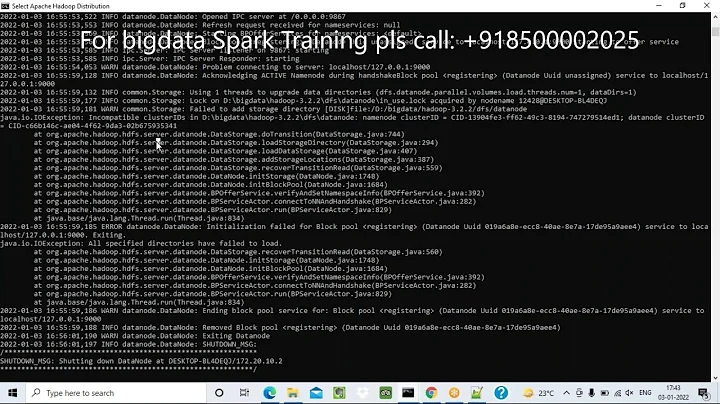

Cannot initialize cluster exception while running job on Hadoop 2

Solution 1

You have capitalized `Yarn`, which is probably why Hadoop cannot resolve it. Try the lowercase value `yarn`, as given in the official documentation:

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>
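
To catch this kind of typo before submitting a job, here is a minimal sketch (plain JDK, no Hadoop dependency) that reads a `mapred-site.xml` snippet and checks whether `mapreduce.framework.name` holds one of the values listed in Hadoop 2's `mapred-default.xml`: `local`, `classic` or `yarn`. It assumes Hadoop compares the value as an exact, case-sensitive string, which fits the symptom in the question. `FrameworkNameCheck`, `frameworkName` and `isRecognized` are hypothetical helpers for illustration, not part of Hadoop.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.Set;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

public class FrameworkNameCheck {
    // Values listed for mapreduce.framework.name in Hadoop 2's mapred-default.xml.
    // Assumption: Hadoop matches them case-sensitively, so "Yarn" is not "yarn".
    static final Set<String> KNOWN_VALUES = Set.of("local", "classic", "yarn");

    // Text of the first <tag> element under parent, whitespace-trimmed, or null if absent.
    static String childText(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    // Returns the configured mapreduce.framework.name value, or null if it is not set.
    static String frameworkName(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            NodeList props = doc.getElementsByTagName("property");
            for (int i = 0; i < props.getLength(); i++) {
                Element prop = (Element) props.item(i);
                if ("mapreduce.framework.name".equals(childText(prop, "name"))) {
                    return childText(prop, "value");
                }
            }
            return null;
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse configuration XML", e);
        }
    }

    // True only for an exact (case-sensitive) match against a known value.
    static boolean isRecognized(String value) {
        return value != null && KNOWN_VALUES.contains(value);
    }

    public static void main(String[] args) {
        // The mapred-site.xml from the question, and the same file with the fix applied.
        String fromQuestion = "<configuration><property>"
                + "<name>mapreduce.framework.name</name><value>Yarn</value>"
                + "</property></configuration>";
        String fixed = fromQuestion.replace("<value>Yarn</value>", "<value>yarn</value>");
        for (String xml : new String[] {fromQuestion, fixed}) {
            String value = frameworkName(xml);
            System.out.println(value + " -> " + (isRecognized(value) ? "ok" : "not recognized"));
        }
    }
}
```

Running `main` prints `Yarn -> not recognized` followed by `yarn -> ok`. Pointing `frameworkName` at the real file contents (for example read with `Files.readString`) would give the same check against your own configuration.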

Solution 2

I was having a similar issue, but YARN was not the cause. After adding the following jars to my classpath, the issue was resolved:

- hadoop-mapreduce-client-jobclient-2.2.0.2.0.6.0-76

- hadoop-mapreduce-client-common-2.2.0.2.0.6.0-76

- hadoop-mapreduce-client-shuffle-2.2.0.2.0.6.0-76
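
A quick way to confirm whether the job-client jar is actually visible to your client is to try loading one of its classes. The sketch below is a hypothetical helper (`ClasspathCheck` is not part of Hadoop) that calls `Class.forName` without initializing the class. It assumes that `org.apache.hadoop.mapred.YarnClientProtocolProvider`, the provider that, as far as I know, `Cluster` discovers through `java.util.ServiceLoader` for the `yarn` framework, is packaged in `hadoop-mapreduce-client-jobclient`; verify that against the jars of your distribution.

```java
public class ClasspathCheck {
    // True if className can be loaded; initialize=false skips static initializers.
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            // LinkageError covers NoClassDefFoundError, e.g. when a dependency jar is missing.
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: this class ships in hadoop-mapreduce-client-jobclient.
        String[] required = {"org.apache.hadoop.mapred.YarnClientProtocolProvider"};
        for (String name : required) {
            System.out.println(name + " -> " + (isOnClasspath(name) ? "found" : "MISSING"));
        }
    }
}
```

Run it with the same classpath your job uses, for example `java -cp "$(hadoop classpath):." ClasspathCheck`; a `MISSING` line suggests the client cannot load the YARN provider and will fall back to the "Cannot initialize Cluster" error.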

Solution 3

Looks like I had a lucky day and ran into this exception for 'all' of those causes. Summary:

- wrong mapreduce.framework.name (see above)

- missing mapreduce job-client jars (see above)

- wrong version (see the question "Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the correspond server addresses-submiting job2remoteClustr")

- the class I configured in `yarn.ipc.client.factory.class` was not on the YARN servers' classpath (only on the client's)

Solution 4

In my case I was trying to use Sqoop and ran into this error. It turned out that I was pointing to the latest Hadoop 2.0 version available from the CDH repository, which Sqoop did not support. The Cloudera version was 2.0.0-cdh4.4.0, which had YARN support built in.

When I used 2.0.0-cdh4.4.0 under hadoop-0.20, the problem went away.

Hope this helps.


SpeedBirdNine


Updated on June 04, 2022

Comments

-


SpeedBirdNine almost 2 years: The question is linked to my previous question. All the daemons are running; `jps` shows:

    6663 JobHistoryServer
    7213 ResourceManager
    9235 Jps
    6289 DataNode
    6200 NameNode
    7420 NodeManager

but the `wordcount` example keeps failing with the following exception:

    ERROR security.UserGroupInformation: PriviledgedActionException as:root (auth:SIMPLE) cause:java.io.IOException: Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the correspond server addresses.
    Exception in thread "main" java.io.IOException: Cannot initialize Cluster. Please check your configuration for mapreduce.framework.name and the correspond server addresses.
        at org.apache.hadoop.mapreduce.Cluster.initialize(Cluster.java:120)
        at org.apache.hadoop.mapreduce.Cluster.<init>(Cluster.java:82)
        at org.apache.hadoop.mapreduce.Cluster.<init>(Cluster.java:75)
        at org.apache.hadoop.mapreduce.Job$9.run(Job.java:1238)
        at org.apache.hadoop.mapreduce.Job$9.run(Job.java:1234)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1491)
        at org.apache.hadoop.mapreduce.Job.connect(Job.java:1233)
        at org.apache.hadoop.mapreduce.Job.submit(Job.java:1262)
        at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1286)
        at WordCount.main(WordCount.java:80)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)
        at org.apache.hadoop.util.RunJar.main(RunJar.java:212)

Since the message says the problem is in the configuration, I am posting the configuration files here. The intention is to create a single-node cluster.

yarn-site.xml

    <?xml version="1.0"?>
    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
      </property>
    </configuration>

core-site.xml

    <configuration>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://localhost:9000</value>
      </property>
    </configuration>

hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/home/hduser/yarn/yarn_data/hdfs/namenode</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/home/hduser/yarn/yarn_data/hdfs/datanode</value>
      </property>
    </configuration>

mapred-site.xml

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>Yarn</value>
      </property>
    </configuration>

Please tell me what is missing or what I am doing wrong.

-

Pratik Khadloya over 9 years: Did you add the jars using the CLASSPATH variable or `-libjars`?

-

neeraj over 9 years: Just add those libraries to your build path, i.e. the classpath.

-


falconepl over 9 years: If you are using Maven or some other build tool, it's sufficient to specify `hadoop-mapreduce-client-jobclient` as the only dependency from this list. Both `common` and `shuffle` are `jobclient`'s dependencies, so you don't have to specify them as your project's dependencies.

-

Spike over 7 years: @bat_rock, I had the same issue. Your solution worked for me :-)

-

Jon Andrews almost 7 years: @neeraj How do I add jar files to the classpath?

