Clustering text in Python

Solution 1

The quality of text-clustering depends mainly on two factors:

Some notion of similarity between the documents you want to cluster. For example, it's easy to distinguish between news articles about sports and politics in vector space via tf-idf cosine distance. It's a lot harder to cluster product reviews into "good" and "bad" based on this measure.

The clustering method itself. Do you know how many clusters there will be? Then use k-means. You don't care about accuracy but want to show a nice tree structure for navigating search results? Use some kind of hierarchical clustering.
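
As a minimal sketch of the first point, the snippet below computes cosine similarity over plain term-frequency vectors (no idf weighting, to keep it short). The helper names and the sample sentences are made up for illustration. The two sports sentences share more vocabulary than the sports/politics pair, so they score higher. Two product reviews, one positive and one negative, would mostly share product vocabulary too, which is why this measure struggles to separate them.

```python
from collections import Counter
from math import sqrt


def tf_vector(text):
    """Hypothetical helper: lowercase whitespace tokens mapped to counts."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = sqrt(sum(c * c for c in a.values()))
    norm_b = sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty document is similar to nothing
    return dot / (norm_a * norm_b)


# Made-up sample sentences, not taken from any real corpus.
sports_1 = tf_vector("the team won the match with a late goal")
sports_2 = tf_vector("a late goal decided the match for the home team")
politics = tf_vector("parliament passed the budget after a long debate")

same_topic = cosine_similarity(sports_1, sports_2)
cross_topic = cosine_similarity(sports_1, politics)
print(same_topic > cross_topic)
```

Note that common words such as "the" and "a" still contribute to both scores here; idf weighting, as used in the code further down, reduces their influence.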

There is no text-clustering solution that works well under all circumstances. It's therefore probably not enough to take some clustering software out of the box and throw your data at it.

Having said that, here's some experimental code I used some time ago to play around with text clustering. The documents are represented as normalized tf-idf vectors, and the similarity is measured as cosine similarity (the dot product of the normalized vectors). The clustering method itself is MajorClust.

    import sys
    from itertools import combinations
    from math import log, sqrt


    def cosine_distance(a, b):
        # Despite the name, this returns the cosine *similarity*: the dot
        # product of two tf-idf vectors already normalized to unit length.
        cos = 0.0
        a_tfidf = a["tfidf"]
        for token, tfidf in b["tfidf"].items():
            if token in a_tfidf:
                cos += tfidf * a_tfidf[token]
        return cos


    def normalize(features):
        # Scale the vector to unit length; an empty vector is returned as is.
        length = sqrt(sum(v ** 2 for v in features.values()))
        if length == 0:
            return features
        return {k: v / length for k, v in features.items()}


    def add_tfidf_to(documents):
        # token -> list of (document id, relative term frequency)
        tokens = {}
        for doc_id, doc in enumerate(documents):
            tf = {}
            doc["tfidf"] = {}
            doc_tokens = doc.get("tokens", [])
            for token in doc_tokens:
                tf[token] = tf.get(token, 0) + 1
            num_tokens = len(doc_tokens)
            if num_tokens > 0:
                for token, freq in tf.items():
                    tokens.setdefault(token, []).append(
                        (doc_id, freq / num_tokens))

        doc_count = len(documents)
        for token, docs in tokens.items():
            idf = log(doc_count / len(docs))
            for doc_id, tf in docs:
                tfidf = tf * idf
                # Tokens that occur in every document have idf 0; skip them.
                if tfidf > 0:
                    documents[doc_id]["tfidf"][token] = tfidf

        for doc in documents:
            doc["tfidf"] = normalize(doc["tfidf"])


    def choose_cluster(node, cluster_lookup, edges):
        # Pick the cluster with the largest summed edge weight to this node.
        new = cluster_lookup[node]
        if node in edges:
            seen, num_seen = {}, {}
            for target, weight in edges[node]:
                seen[cluster_lookup[target]] = seen.get(
                    cluster_lookup[target], 0.0) + weight
            for k, v in seen.items():
                num_seen.setdefault(v, []).append(k)
            new = num_seen[max(num_seen)][0]
        return new


    def majorclust(graph):
        # Start with every node in its own cluster.
        cluster_lookup = dict((node, i) for i, node in enumerate(graph.nodes))

        movements = set()
        finished = False
        while not finished:
            finished = True
            for node in graph.nodes:
                new = choose_cluster(node, cluster_lookup, graph.edges)
                move = (node, cluster_lookup[node], new)
                # Remember past moves so nodes cannot oscillate forever.
                if new != cluster_lookup[node] and move not in movements:
                    movements.add(move)
                    cluster_lookup[node] = new
                    finished = False

        clusters = {}
        for k, v in cluster_lookup.items():
            clusters.setdefault(v, []).append(k)

        return list(clusters.values())


    def get_distance_graph(documents):
        class Graph(object):
            def __init__(self):
                self.edges = {}

            def add_edge(self, n1, n2, w):
                self.edges.setdefault(n1, []).append((n2, w))
                self.edges.setdefault(n2, []).append((n1, w))

        graph = Graph()
        doc_ids = range(len(documents))
        graph.nodes = set(doc_ids)
        # One edge per document pair: O(n^2) similarity computations.
        for a, b in combinations(doc_ids, 2):
            graph.add_edge(a, b, cosine_distance(documents[a], documents[b]))
        return graph


    def get_documents():
        texts = [
            "foo blub baz",
            "foo bar baz",
            "asdf bsdf csdf",
            "foo bab blub",
            "csdf hddf kjtz",
            "123 456 890",
            "321 890 456 foo",
            "123 890 uiop",
        ]
        return [{"text": text, "tokens": text.split()} for text in texts]


    def main(args):
        documents = get_documents()
        add_tfidf_to(documents)
        dist_graph = get_distance_graph(documents)

        for cluster in majorclust(dist_graph):
            print("=========")
            for doc_id in cluster:
                print(documents[doc_id]["text"])


    if __name__ == '__main__':
        main(sys.argv)

For real applications, you would use a decent tokenizer, use integers instead of token strings, and avoid computing an O(n^2) distance matrix.
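
One way to act on the last two points, sketched under assumptions: map each token string to an integer id once, and use an inverted index so that similarities are only computed for document pairs that share at least one token. The names `build_vocabulary` and `candidate_pairs` are hypothetical and not part of the code above. In the worst case (a token that occurs in every document) this is still quadratic, so it helps most when very frequent tokens are dropped first.

```python
from itertools import combinations


def build_vocabulary(token_lists):
    """Assign an integer id to every distinct token string (hypothetical helper)."""
    vocab = {}
    encoded = []
    for tokens in token_lists:
        # setdefault returns the existing id, or stores the next free one.
        encoded.append([vocab.setdefault(tok, len(vocab)) for tok in tokens])
    return vocab, encoded


def candidate_pairs(encoded_docs):
    """Return the set of document pairs that share at least one token id."""
    index = {}  # token id -> ids of the documents containing it, ascending
    for doc_id, token_ids in enumerate(encoded_docs):
        for token_id in set(token_ids):
            index.setdefault(token_id, []).append(doc_id)
    pairs = set()
    for doc_ids in index.values():
        pairs.update(combinations(doc_ids, 2))
    return pairs


texts = ["foo blub baz", "foo bar baz", "asdf bsdf csdf", "123 456 890"]
vocab, encoded = build_vocabulary([t.split() for t in texts])
pairs = candidate_pairs(encoded)
print(sorted(pairs))  # → [(0, 1)]
```

Only the first two sample texts share tokens, so a single pair is left to score instead of all six.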

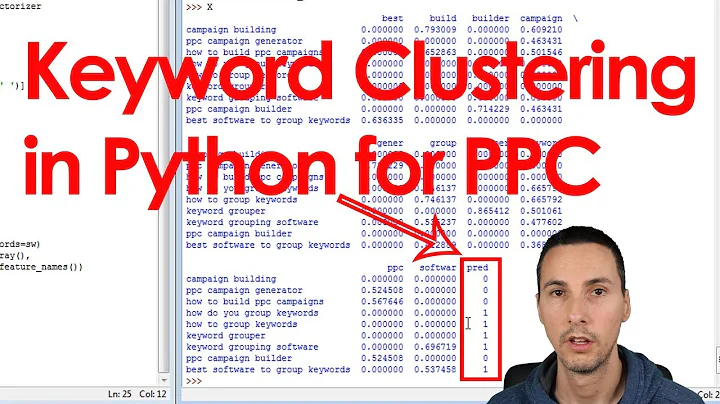

Solution 2

This seems to be possible by using simple UNIX command-line tools to extract the text contents of those documents into text files, then using a pure Python solution for the actual clustering.
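
A minimal sketch of the second half of that pipeline, assuming the extraction step has already written one plain-text file per document into a single directory. The function name `load_documents`, the `.txt` suffix, and the lowercase whitespace tokenization are assumptions for illustration. The returned dictionaries use the same `text` and `tokens` keys as the code in Solution 1, plus a `name` key for the file name.

```python
import os
import tempfile


def load_documents(directory, suffix=".txt"):
    """Read every file ending in `suffix` into a name/text/tokens dict."""
    documents = []
    for name in sorted(os.listdir(directory)):
        if not name.endswith(suffix):
            continue
        path = os.path.join(directory, name)
        # errors="replace" keeps going if a file is not valid UTF-8.
        with open(path, encoding="utf-8", errors="replace") as handle:
            text = handle.read()
        documents.append({"name": name, "text": text,
                          "tokens": text.lower().split()})
    return documents


# Usage example with throwaway files standing in for extracted documents.
samples = [("a.txt", "Foo bar baz"), ("b.txt", "foo blub"),
           ("notes.md", "skip me")]
with tempfile.TemporaryDirectory() as tmp:
    for name, body in samples:
        with open(os.path.join(tmp, name), "w", encoding="utf-8") as handle:
            handle.write(body)
    docs = load_documents(tmp)

print([d["name"] for d in docs])  # → ['a.txt', 'b.txt']
```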

I found a code snippet for clustering data in general:

http://www.daniweb.com/code/snippet216641.html

A Python package for this:

http://python-cluster.sourceforge.net/

Another Python package (used mainly for bioinformatics):

http://bonsai.ims.u-tokyo.ac.jp/~mdehoon/software/cluster/software.htm#pycluster

Dan

Updated on April 23, 2020

Comments

- Dan about 4 years

  I need to cluster some text documents and have been researching various options. It looks like LingPipe can cluster plain text without prior conversion (to vector space etc), but it's the only tool I've seen that explicitly claims to work on strings.

  Are there any Python tools that can cluster text directly? If not, what's the best way to handle this?

- fviktor over 14 years

  Information on what "text clustering" is: www2.parc.com/istl/projects/ia/sg-clustering.html

- fviktor over 14 years

  If you need help on extracting the text contents from various document types, then please add another question, since that's another part of the task, I think. It'd allow for better separation of issues IMHO.

- ealdent over 14 years

  Mallet also will work on text files without you having to do anything, assuming you have a directory full of them (with one doc per file). I recommend just using nltk, a python library. You'll need to do a little pre-processing on the files, but it's not painful.