Comparing floating-point 0

Solution 1

Well, first off, it isn't really a matter of the C++ standard. Rather, what is at issue is your floating-point model standard (most likely IEEE 754).

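For IEEE floats, that is probably safe, as float(0) should result in the same number as 0.0f, and that multiplied by any other finite number should also be 0.0f (or -0.0f, which compares equal to 0.0f). The exceptions are infinity and NaN: multiplying either by zero gives NaN, so the comparison is false.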

What isn't really safe is doing other floating-point operations (e.g. additions and subtractions with non-whole numbers) and checking the results against 0.0f.

Solution 2

AFAIK, it won't necessarily be exactly 0; it could also end up very close to 0.

It is generally best to compare against an epsilon. I use a function like this for doing such comparisons:

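```cpp
#include <cmath>

// Absolute-tolerance comparison: true when a and b differ by less than epsilon.
bool EpsilonEqual( float a, float b, float epsilon )
{
    return std::fabs( a - b ) < epsilon;
}
```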

Solution 3

NaNs and infinities can screw up such comparisons, as others have already mentioned.

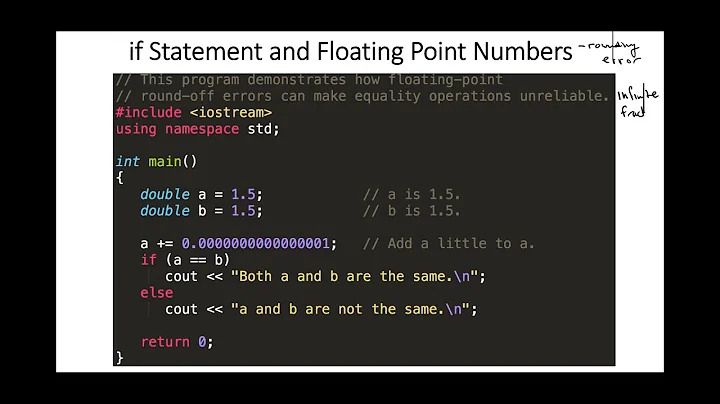

However, there is a further pitfall: in C++ you cannot rely on a compile-time expression of floating-point type comparing equal to the same expression evaluated at run time.

The reason is that C++ allows floating-point computations to be carried out in extended precision, in any willy-nilly way. Example:

```cpp
#include <iostream>

// This provides sufficient obfuscation so that g++ doesn't just inline results.
bool obfuscatedTrue() { return true; }

int main()
{
    using namespace std;
    double const a = (obfuscatedTrue()? 3.0 : 0.3);
    double const b = (obfuscatedTrue()? 7.0 : 0.7);
    double const c = a/b;

    cout << (c == a/b? "OK." : "\"Wrong\" comparision result.") << endl;
}
```

Results with one particular compiler:

```
C:\test> g++ --version | find "++"
g++ (TDM-2 mingw32) 4.4.1

C:\test> g++ fp_comparision_problem.cpp & a
"Wrong" comparision result.

C:\test> g++ -O fp_comparision_problem.cpp & a
OK.

C:\test> _
```

Cheers & hth.,

– Alf

Solution 4

With that particular statement, you can be pretty sure the result will be 0 and the comparison will be true. I don't think the C++ standard actually prescribes it, but any reasonable implementation of floating-point types will make 0 work like that.

However, for most other calculations, the result cannot be expected to be exactly equal to a literal of the mathematically correct result:

Why don’t my numbers, like 0.1 + 0.2 add up to a nice round 0.3, and instead I get a weird result like 0.30000000000000004?

Because internally, computers use a format (binary floating-point) that cannot accurately represent a number like 0.1, 0.2 or 0.3 at all.

When the code is compiled or interpreted, your “0.1” is already rounded to the nearest number in that format, which results in a small rounding error even before the calculation happens.

Read The Floating-Point Guide for detailed explanations and for how to compare against expected values correctly.


Comments

NEO_FRESHMAN almost 2 years: If foo is of float type, is the following expression valid/recommended?

(0.0f == foo * float(0))

Will it have the expected (mathematical) value regardless of foo's value?

Does the C++ standard define the behavior, or is it implementation-specific?

NEO_FRESHMAN over 13 years: @rursw1 More interested in whether there's a guarantee from the standard.

John Dibling over 13 years: @rursw1: That's not gonna work.

Martin York over 13 years: So the above will not hold where foo is NaN or infinity!

Michael Borgwardt over 13 years: IEEE 754 mandates that -0.0f == +0.0f, even though the bit values are different.

Mike Seymour over 13 years: Multiplying any finite IEEE value by zero will give zero. If foo is infinity or NaN, then the multiplication result is NaN, and the comparison result is false.

Goz over 13 years: @Michael: Fair enough. Doesn't detract from the point though. I'll remove the reference however.

hobbs over 13 years: I'm guessing that the comment here is only referring to the fact that x*0 is NaN if x is NaN.

T.E.D. over 13 years: @Mike Seymour: Good point. Perhaps that line was meant to be a check that foo is a valid float value.

Pascal Cuoq about 10 years: At what higher precision do you fear that the result of foo * float(0) might be different from the result of the multiplication done at the precision of the type?

supercat about 10 years: Is there any usefulness to allowing compilers to produce results other than those which would be produced by coercing the operands of comparison operators to their compile-time types (e.g. allowing someFloat == otherFloat / 3.0f to be evaluated as though it were (double)someFloat == (double)otherFloat / 3.0)? It seems to me that in the vast majority of cases where the latter is "faster", it would also yield useless results.

Cheers and hth. - Alf about 10 years: @PascalCuoq: the answer contains a complete example, with output, and so the idea that this is something that I "fear" "might" happen appears to indicate that you have not read the answer. Hence, I suggest that you do.

Cheers and hth. - Alf about 10 years: @supercat: I only know of one way it is useful, namely an architecture where floating-point operations are computed asynchronously and at higher precision than the platform's common double type. That might sound esoteric, but it's how ordinary PCs work. They were originally based on a trio of "co-processors": the 8086 main processor, or its 8088 incarnation with 8-bit multiplexed bus; the 8087 "math" co-processor, dealing asynchronously with floating-point operations; and the 8089 "i/o" co-processor, which AFAIK was never used. The physical packaging has changed, but not the logical design.

supercat about 10 years: @Cheersandhth.-Alf: I certainly recognize that allowing such freedom may make code "faster"; I fail to see, though, how the expression (phaseOfMoon() ? (float)f1 == (float)(f2 / 3.0f) : (double)f1 == (double)(f2 / 3.0)) could be useful for much of anything even if it could be computed a thousand times as fast as (float)f1 == (float)(f2 / 3.0f).

Cheers and hth. - Alf about 10 years: @supercat: Your response comment is actively misleading readers, yielding the impression that you are responding to things I have written. But your alleged quote "faster" is not a quote of me. And your example is not an example I have given, nor is it meaningful in itself. Nor is the emphasized "useful" meaningful in this context, and as it applies to your own invented example it is not relevant to anything (only that you can construct non-useful examples, perhaps?). In short your comment is misleading, argumentative and noisy. Consequently I have marked your comment for mod attention.

supercat about 10 years: @Cheersandhth.-Alf: I'm obviously not making myself clear. I understand that there are architectures where rounding higher-precision types to float precision before a comparison would make things slower (implying that omitting such conversion could make them faster). What I was wondering is when it's useful to compare things for equality without any guarantee that they are rounded to some particular precision. Presumably the standards committee thought that allowing C compilers to omit the rounding step would be a useful optimization in some contexts; I wonder when those would be.

Cheers and hth. - Alf about 10 years: @supercat: re "omit the rounding step", I suspect that rounding to less precision after each operation may not always produce the same result as performing the calculation directly in less precision. Coupled with the practical need to support cross-compilation, where the target fp unit is not available at compile time, this is then a reasonable rationale for not requiring such (most likely not practically achievable) exact correspondence between compile-time and run-time operations. However, this is just educated, informed guesswork. But it's possible that the rationale is discussed in D&E.