Conditional aggregation using PySpark


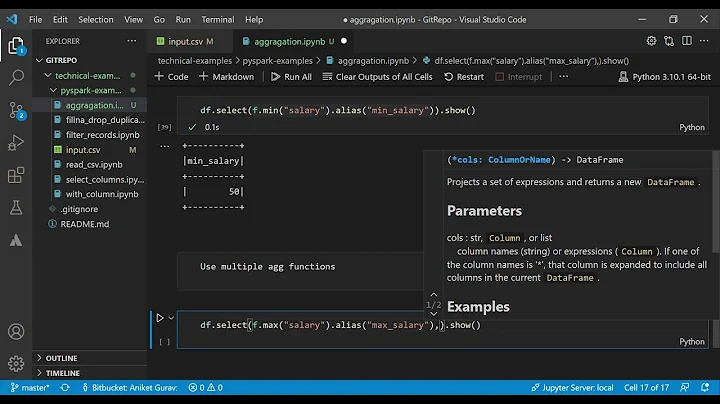

You can translate your SQL code directly into DataFrame primitives:

```python
from pyspark.sql import functions as F  # avoids shadowing Python's built-in sum

(df
    .groupBy("a", "b", "c", "d")                        # group by a, b, c, d
    .agg(                                               # select
        F.when(F.col("c") <= 10, F.sum("e"))            # when c <= 10 then sum(e)
         .when(F.col("c").between(10, 20), F.avg("e"))  # when c between 10 and 20 then avg(e)
         .otherwise(0.0)                                # else 0.00
         .alias("result")))
```


Author: Reddy

Updated on June 04, 2022

Comments

Reddy, almost 2 years ago:

Consider the following DataFrame:

| a | b | c | d | e |
|---|---|---|---|---|
| africa | 123 | 1 | 10 | 121.2 |
| africa | 123 | 1 | 10 | 321.98 |
| africa | 123 | 2 | 12 | 43.92 |
| africa | 124 | 2 | 12 | 43.92 |
| usa | 121 | 1 | 12 | 825.32 |
| usa | 121 | 1 | 12 | 89.78 |
| usa | 123 | 2 | 10 | 32.24 |
| usa | 123 | 5 | 21 | 43.92 |
| canada | 132 | 2 | 13 | 63.21 |
| canada | 132 | 2 | 13 | 89.23 |
| canada | 132 | 3 | 21 | 85.32 |
| canada | 131 | 3 | 10 | 43.92 |

Now I want to convert the CASE statement below into an equivalent PySpark DataFrame expression.

I know I could run this CASE statement directly through HiveContext/SQLContext, but I am looking for the equivalent written with the PySpark DataFrame API.

```sql
select case when c <= 10 then sum(e)
            when c between 10 and 20 then avg(e)
            else 0.00
       end
from table
group by a, b, c, d
```

Regards,
Anvesh
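The CASE expression is evaluated once per group, not once per row. The following plain-Python model (no Spark needed) is a sketch that assumes rows are `(a, b, c, d, e)` tuples and ignores SQL NULL handling. `case_aggregate` and the demo rows are hypothetical and do not come from any library or from the question. The model illustrates two points the translation relies on. First, c is constant within each group because it is a grouping column. Second, the first matching WHEN wins, so a group with c = 10 gets `sum(e)` even though 10 also satisfies `BETWEEN 10 AND 20`.

```python
from collections import defaultdict

def case_aggregate(rows):
    """Hypothetical plain-Python model of:
       SELECT CASE WHEN c <= 10 THEN sum(e)
                   WHEN c BETWEEN 10 AND 20 THEN avg(e)
                   ELSE 0.00 END
       FROM table GROUP BY a, b, c, d
    """
    groups = defaultdict(list)
    for a, b, c, d, e in rows:
        groups[(a, b, c, d)].append(e)   # collect e values per (a, b, c, d)

    out = {}
    for key, es in groups.items():
        c = key[2]                       # grouping column: one value per group
        if c <= 10:                      # first matching branch wins, so c == 10 lands here
            out[key] = sum(es)
        elif 10 <= c <= 20:              # BETWEEN is inclusive on both ends
            out[key] = sum(es) / len(es)
        else:
            out[key] = 0.0
    return out

# Hypothetical rows chosen to hit each branch once.
demo = [("x", 1, 10, 0, 2.0), ("x", 1, 10, 0, 4.0),
        ("y", 1, 15, 0, 2.0), ("y", 1, 15, 0, 4.0),
        ("z", 1, 25, 0, 7.0)]
result = case_aggregate(demo)
print(result)
```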