Convolution Vs Correlation

Solution 1

You will likely get a much better answer on dsp stack exchange but... for starters I have found a number of similar terms and they can be tricky to pin down definitions.

- Correlation

- Cross correlation

- Convolution

- Correlation coefficient

- Sliding dot product

- Pearson correlation

1, 2, 3, and 5 are very similar

4,6 are similar

Note that all of these terms have dot products rearing their heads

You asked about Correlation and Convolution - these are conceptually the same except that the output is flipped in convolution. I suspect that you may have been asking about the difference between correlation coefficient (such as Pearson) and convolution/correlation.

Prerequisites

I am assuming that you know how to compute the dot-product. Given two equal sized vectors v and w each with three elements, the algebraic dot product is v[0]*w[0]+v[1]*w[1]+v[2]*w[2]

There is a lot of theory behind the dot product in terms of what it represents etc....

Notice the dot product is a single number (scalar) representing the mapping between these two vectors/points v,w In geometry frequently one computes the cosine of the angle between two vectors which uses the dot product. The cosine of the angle between two vectors is between -1 and 1 and can be thought of as a measure of similarity.

Correlation coefficient (Pearson)

Correlation coefficient between equal length v,w is simply the dot product of two zero mean signals (subtract mean v from v to get zmv and mean w from w to get zmw - here zm is shorthand for zero mean) divided by the magnitudes of zmv and zmw.

to produce a number between -1 and 1. Close to zero means little correlation, close to +/- 1 is high correlation. it measures the similarity between these two vectors.

See http://en.wikipedia.org/wiki/Pearson_product-moment_correlation_coefficient for a better definition.

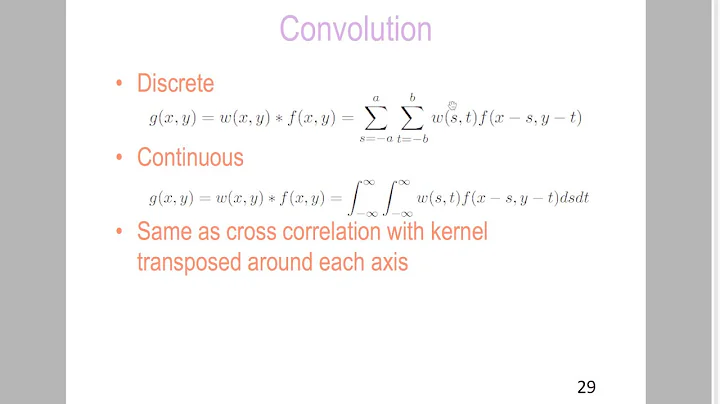

Convolution and Correlation

When we want to correlate/convolve v1 and v2 we basically are computing a series of dot-products and putting them into an output vector. Let's say that v1 is three elements and v2 is 10 elements. The dot products we compute are as follows:

output[0] = v1[0]*v2[0]+v1[1]*v2[1]+v1[2]*v2[2]

output[1] = v1[0]*v2[1]+v1[1]*v2[2]+v1[2]*v2[3]

output[2] = v1[0]*v2[2]+v1[1]*v2[3]+v1[2]*v2[4]

output[3] = v1[0]*v2[3]+v1[1]*v2[4]+v1[2]*v2[5]

output[4] = v1[0]*v2[4]+v1[1]*v2[5]+v1[2]*v2[6]

output[5] = v1[0]*v2[7]+v1[1]*v2[8]+v1[2]*v2[9]

output[6] = v1[0]*v2[8]+v1[1]*v2[9]+v1[2]*v2[10] #note this is

#mathematically valid but might give you a run time error in a computer implementation

The output can be flipped if a true convolution is needed.

output[5] = v1[0]*v2[0]+v1[1]*v2[1]+v1[2]*v2[2]

output[4] = v1[0]*v2[1]+v1[1]*v2[2]+v1[2]*v2[3]

output[3] = v1[0]*v2[2]+v1[1]*v2[3]+v1[2]*v2[4]

output[2] = v1[0]*v2[3]+v1[1]*v2[4]+v1[2]*v2[5]

output[1] = v1[0]*v2[4]+v1[1]*v2[5]+v1[2]*v2[6]

output[0] = v1[0]*v2[7]+v1[1]*v2[8]+v1[2]*v2[9]

Notice that we have less than 10 elements in the output as for simplicity I am computing the convolution only where both v1 and v2 are defined

Notice also that the convolution is simply a number of dot products. There has been considerable work over the years to be able to speed up convolutions. The sweeping dot products are slow and can be sped up by first transforming the vectors into the fourier basis space and then computing a single vector multiplication then inverting the result, though I won't go into that here...

You might want to look at these resources as well as googling: Calculating Pearson correlation and significance in Python

Solution 2

The best answer I got were from this document:http://www.cs.umd.edu/~djacobs/CMSC426/Convolution.pdf

I'm just going to copy the excerpt from the doc:

"The key difference between the two is that convolution is associative. That is, if F and G are filters, then F*(GI) = (FG)*I. If you don’t believe this, try a simple example, using F=G=(-1 0 1), for example. It is very convenient to have convolution be associative. Suppose, for example, we want to smooth an image and then take its derivative. We could do this by convolving the image with a Gaussian filter, and then convolving it with a derivative filter. But we could alternatively convolve the derivative filter with the Gaussian to produce a filter called a Difference of Gaussian (DOG), and then convolve this with our image. The nice thing about this is that the DOG filter can be precomputed, and we only have to convolve one filter with our image.

In general, people use convolution for image processing operations such as smoothing, and they use correlation to match a template to an image. Then, we don’t mind that correlation isn’t associative, because it doesn’t really make sense to combine two templates into one with correlation, whereas we might often want to combine two filter together for convolution."

Solution 3

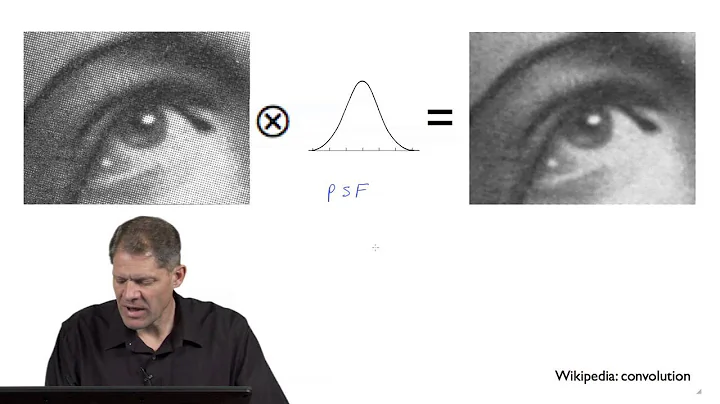

Convolution is just like correlation, except that we flip over the filter before correlating

Related videos on Youtube

Varo

Updated on July 09, 2022Comments

-

Varo almost 2 years

Can anyone explain me the similarities and differences, of the Correlation and Convolution ? Please explain the intuition behind that, not the mathematical equation(i.e, flipping the kernel/impulse).. Application examples in the image processing domain for each category would be appreciated too

-

Paul R over 10 yearsThis question appears to be off-topic because it is about DSP theory and belongs on dsp.stackexchange.com

-

-

Ali almost 7 yearsThis answer adds nothing new to the answers above!

Ali almost 7 yearsThis answer adds nothing new to the answers above! -

lucidbrot over 6 yearsbut it's short and pretty much what I was looking for. What this answer lacks is how to flip it. I believe it's both vertically and horizontally flipping

lucidbrot over 6 yearsbut it's short and pretty much what I was looking for. What this answer lacks is how to flip it. I believe it's both vertically and horizontally flipping

![Convolution vs. Correlation in Signal Processing and Deep Learning [DSP #10]](https://i.ytimg.com/vi/R7cn9b7BNyk/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLAH70hP1oH-UoSONbtXsor-GbhAHA)