Correct xargs parallel usage

Solution 1

I'd be willing to bet that your problem is Python. You didn't say what kind of processing is being done on each file, but assuming you are just doing in-memory processing of the data, the running time will be dominated by starting up 30 million Python virtual machines (interpreters).

If you can restructure your Python program to take a list of files instead of just one (with -I{}, xargs starts a separate python process for every single file), you will get a huge improvement in performance. You can then still use xargs to further improve performance. For example, 40 processes, each processing 1000 files:

find ./data -name "*.json" -print0 | xargs -0 -L1000 -P 40 python Convert.py
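To make the batching concrete, here is a minimal sketch of what a batch-mode Convert.py could look like, assuming it simply receives any number of file paths as arguments. The question doesn't say what the processing is, so convert_one and its output format (a .out file next to each input, wrapping the parsed data) are hypothetical placeholders; the point is only the loop over all paths within one interpreter.

```python
#!/usr/bin/env python3
"""Hypothetical batch-mode Convert.py: handles every path passed on the command line."""
import json
import sys
from pathlib import Path
from typing import Sequence


def convert_one(src: Path) -> Path:
    # Placeholder processing (the real work isn't described): wrap the parsed
    # JSON and write it next to the input as <name>.out.
    data = json.loads(src.read_text(encoding="utf-8"))
    dst = src.with_suffix(".out")
    dst.write_text(json.dumps({"source": src.name, "payload": data}), encoding="utf-8")
    return dst


def main(paths: Sequence[str]) -> int:
    failures = 0
    # One interpreter startup is shared by every path in this batch.
    for arg in paths:
        try:
            convert_one(Path(arg))
        except (OSError, ValueError) as exc:
            # Report and keep going, so one bad file doesn't abort the whole batch.
            print(f"{arg}: {exc}", file=sys.stderr)
            failures += 1
    return 1 if failures else 0


if __name__ == "__main__":
    # xargs can run us with no arguments (empty input without -r); that's a no-op.
    status = main(sys.argv[1:])
    if status:
        sys.exit(status)
```

Writing the outputs with a suffix other than .json (or into a separate directory) keeps a re-run of the same find -name "*.json" from picking up the output files as new input.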

This isn't to say that Python is a bad or slow language; it's just not optimized for startup time. You'll see this with any interpreted or virtual-machine-based language; Java, for example, would be even worse. If your program were written in C, there would still be the cost of starting a separate operating system process for each file, but it would be much smaller.
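A rough way to check the startup argument on your own machine is to time a do-nothing interpreter launch and multiply it out. This sketch assumes every launch costs the same and that the 40 jobs overlap perfectly; mean_startup_seconds and startup_hours are made-up helper names, and the figures they produce depend entirely on the machine.

```python
import subprocess
import sys
import time


def mean_startup_seconds(runs: int = 20) -> float:
    """Average wall time to start and exit a Python interpreter that does nothing."""
    start = time.perf_counter()
    for _ in range(runs):
        # Same interpreter as this script; "-c pass" does no work after startup.
        subprocess.run([sys.executable, "-c", "pass"], check=True)
    return (time.perf_counter() - start) / runs


def startup_hours(n_files: int, per_start: float, files_per_call: int = 1, jobs: int = 1) -> float:
    """Wall-clock hours spent only on startup, assuming `jobs` launches overlap perfectly."""
    calls = -(-n_files // files_per_call)  # ceiling division: one launch per batch
    return calls * per_start / jobs / 3600


if __name__ == "__main__":
    t = mean_startup_seconds()
    print(f"measured startup: {t * 1000:.1f} ms")
    for batch in (1, 1000):
        h = startup_hours(30_000_000, t, files_per_call=batch, jobs=40)
        print(f"30M files, {batch} per call, 40 jobs: {h:.3f} h of startup")
```

For instance, if a launch took 20 ms (an assumed figure), one file per call would cost 30,000,000 × 0.02 s / 40 = 15,000 s, about 4.2 hours of wall-clock time on startup alone, while 1000 files per call needs only 30,000 launches, about 15 seconds.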

From there you can fiddle with -P to see if you can squeeze out a bit more speed, perhaps by increasing the number of processes to take advantage of idle processors while data is being read/written.
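Rather than guessing a -P value, you could time the same pipeline with a few different values. This is a minimal harness, assuming a Unix shell with find and xargs on the PATH; time_shell and sweep_jobs are hypothetical helpers, and the command template needs a {jobs} placeholder (so it cannot also contain literal braces such as -I{}).

```python
import subprocess
import time
from typing import Dict, Iterable


def time_shell(cmd: str) -> float:
    """Run one shell command line and return its wall-clock time in seconds."""
    start = time.perf_counter()
    # Raises if the last command in the pipeline (here xargs) exits non-zero.
    subprocess.run(cmd, shell=True, check=True)
    return time.perf_counter() - start


def sweep_jobs(template: str, job_counts: Iterable[int]) -> Dict[int, float]:
    """Time `template` once per -P value; `template` must contain '{jobs}'."""
    return {jobs: time_shell(template.format(jobs=jobs)) for jobs in job_counts}


# Example (not run here; use a scratch copy of the data, since Convert.py writes files):
# sweep_jobs('find ./data -name "*.json" -print0 | xargs -0 -L1000 -P {jobs} python Convert.py',
#            [20, 40, 60, 80])
```

Keep in mind that the first run may look slower simply because later runs find the small files already in the page cache, so repeating each value or dropping the first measurement gives a fairer comparison.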

Solution 2

So firstly, consider the constraints:

What is the constraint on each job? If it's I/O-bound, you can probably get away with multiple jobs per CPU core until you hit the limit of your I/O, but if it's CPU-intensive, it's going to be worse than pointless to run more jobs concurrently than you have CPU cores.
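The I/O-bound case can be illustrated with a small simulation: each task just sleeps, standing in for a job waiting on disk, and since a waiting task needs no CPU, adding workers keeps shrinking the wall time. This is a thread-based sketch with made-up task counts and durations; it models only waiting, not CPU-bound work, where workers beyond the core count cannot actually run at the same time.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io_task(wait: float) -> None:
    # Stands in for a job that mostly waits on disk or network: it sleeps
    # instead of computing, so it occupies no core while waiting.
    time.sleep(wait)


def wall_time(workers: int, tasks: int = 16, wait: float = 0.05) -> float:
    """Wall time to finish `tasks` waits of `wait` seconds with `workers` concurrent workers."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(fake_io_task, [wait] * tasks))
    return time.perf_counter() - start


if __name__ == "__main__":
    for workers in (2, 4, 8, 16):
        print(f"{workers:>2} workers: {wall_time(workers):.2f} s")
```

With these defaults the wall time should roughly halve each time the worker count doubles, since the tasks spend all their time waiting rather than competing for a core.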

My understanding is that GNU Parallel gives you better control over the job queue, among other things.

See GNU parallel vs & (I mean background) vs xargs -P for a more detailed explanation of how the two differ.


Yan Zhu

Updated on September 18, 2022

Comments

-

Yan Zhu over 1 year

I am using

xargsto call a python script to process about 30 million small files. I hope to usexargsto parallelize the process. The command I am using is:find ./data -name "*.json" -print0 | xargs -0 -I{} -P 40 python Convert.py {} > log.txtBasically,

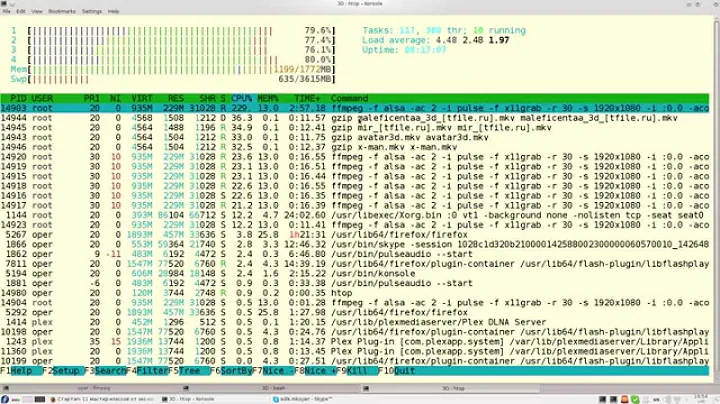

Convert.pywill read in a small json file (4kb), do some processing and write to another 4kb file. I am running on a server with 40 CPU cores. And no other CPU-intense process is running on this server.By monitoring htop (btw, is there any other good way to monitor the CPU performance?), I find that

-P 40is not as fast as expected. Sometimes all cores will freeze and decrease almost to zero for 3-4 seconds, then will recover to 60-70%. Then I try to decrease the number of parallel processes to-P 20-30, but it's still not very fast. The ideal behavior should be linear speed-up. Any suggestions for the parallel usage of xargs ?-

Fox about 9 years

What kind of processing does the script do? Is any database, network, or I/O involved? How long does it run?

-

PSkocik about 9 years

I second @OleTange. That is the expected behavior if you run as many processes as you have cores and your tasks are I/O-bound. First the cores wait (sleep) on I/O for their task, then they process, and then the cycle repeats. If you add more processes, the additional processes that aren't currently running on a physical core will have kicked off parallel I/O operations, which, when finished, will eliminate or at least shorten the sleep periods on your cores.

-

Bichoy about 9 years

1. Do you have hyperthreading enabled? 2. In what you have up there, log.txt is actually overwritten with each call to Convert.py... not sure if this is the intended behavior or not.

-


Ole Tange almost 9 years

xargs -P combined with > opens you up to race conditions because of the half-line problem (gnu.org/software/parallel/…). Using GNU Parallel instead will not have that problem.

-